web-llm

web-llm copied to clipboard

web-llm copied to clipboard

Can ndarray-cache.json also be cached?

I noticed that even when all shards are cached ndarray-cache.json still gets requested from hugging face. Is there a way to skip this step once it's cached?

Thanks for the issue. There's some additional information I need to know. Is it only the json file get requested from huggingface or json+shards all get requested?

When cached only ndarray-cache.json still gets requested. But on initial load before I have it cached, everything is requested.

I've checked that it's doable to skip the step. Will fix this in incoming weeks. If you find this issue urgent, I can show you the related code and you are welcome to contribute.

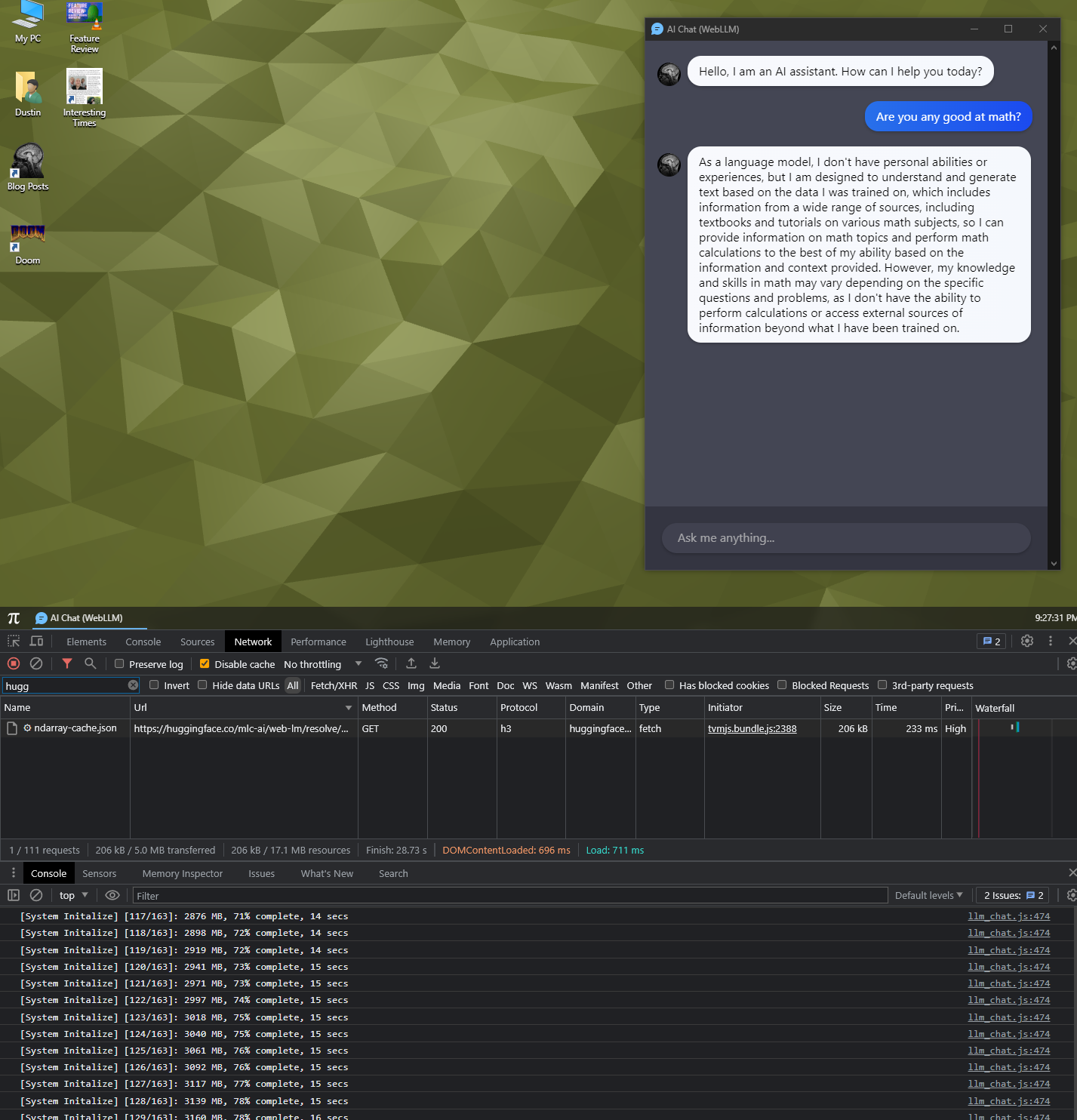

Thanks very much! I have a hack of a fix for now so it's nothing urgent, just thought I'd suggest it and make sure it made sense to do. For now in fetchNDArrayCache I am just running yield caches.has("tvmjs") to see if there is a cache and if there is I grab my local copy of ndarray-cache.json.

Ideally ndarray-cache.json would be saved to the cache too, but I didn't go that far and can wait for something more official before I mess around with that further.

I like to try and have no outside requests with any libraries I bring in, unless absolutely needed. I have a long term plan to turn my web app into a PWA that could work offline.

@DustinBrett This worked for me, fully local and offline: https://github.com/mlc-ai/web-llm/issues/19#issuecomment-1511754031

@DustinBrett This worked for me, fully local and offline: #19 (comment)

Yes indeed that would work, but seeing as I want to host this on my website, I want HuggingFace to be used the first time to grab the files as I don't want to host GB's of data. My issue was subsequent requests used the cached data but this JSON file was always grabbed remotely.

@DustinBrett Thank you for explorations. We are an open source project so you are definitely more than welcomed to contributed and we really appreciate the discussion and contribution. Likely reusing the tvmjs cache should solve the problem. The PR can be send to https://github.com/apache/tvm/tree/unity

I've gone ahead and made the PR @tqchen, thanks for suggesting I upstream it. https://github.com/apache/tvm/pull/14722