EfficientNet-PyTorch

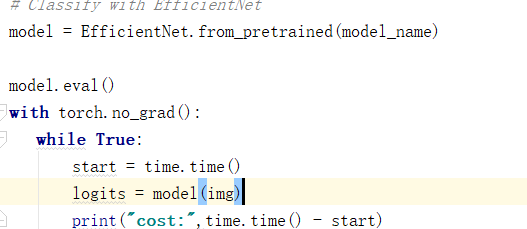

Inference speed is much slower than the original TensorFlow code

Hi, I ran your sample code and measured the inference time, but I find that inference is much slower than with the original TensorFlow code on my computer.
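For anyone comparing timings, here is a minimal sketch of a timing harness, assuming the model call is wrapped in a function. The name `benchmark` and its parameters are hypothetical and not part of this repository. It discards warm-up runs and accepts an optional `sync` callable, because GPU work is queued asynchronously and an unsynchronized timer can report misleading numbers.

```python
import time
from statistics import median


def benchmark(fn, warmup=10, runs=50, sync=None):
    """Return the median wall-clock time of fn() in milliseconds.

    sync is an optional callable that blocks until queued work has finished
    (for example torch.cuda.synchronize when fn runs a model on a GPU).
    """
    for _ in range(warmup):  # warm-up runs are not timed
        fn()
    if sync is not None:
        sync()

    timings_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        if sync is not None:
            sync()  # wait for asynchronous work before stopping the clock
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    return median(timings_ms)


if __name__ == "__main__":
    # Stand-in workload; replace with a call such as lambda: model(inputs).
    print(f"{benchmark(lambda: sum(range(10_000))):.3f} ms")
```

Using the same harness, batch size, and input resolution for both the PyTorch and the TensorFlow model should make the two numbers easier to compare.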

Hello,

Yes, this is expected because grouped convolutions in PyTorch are slow (the core devs are working on making them faster). See pytorch/pytorch#18631.

Hi, since the speed is too slow, could the implementation be changed from `self._depthwise_conv = Conv2dSamePadding(in_channels=oup, out_channels=oup, groups=oup, kernel_size=k, stride=s, bias=False)` (where `groups=oup` is what makes it depthwise)

to `self._depthwise_conv_in = Conv2dSamePadding(in_channels=oup, out_channels=1, groups=1, kernel_size=1, stride=1, bias=False)` followed by `self._depthwise_conv = Conv2dSamePadding(in_channels=1, out_channels=oup, groups=1, kernel_size=k, stride=s, bias=False)`? Thanks.

Now with #44 you can export to ONNX. That may help in terms of inference speed, as it actually compiles a graph.

@semchan I set groups=1, and the size of the weight file changed from ~30 MB to ~900 MB. That is unacceptable.
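A rough sketch of why the weight file grows, using only the standard weight-count formula for a bias-free 2D convolution. The helper `conv2d_weight_count` and the values `oup = 480`, `k = 3` are hypothetical, chosen for illustration and not taken from the model. It also counts the two-layer variant proposed above. That variant stays small, but it mixes all channels into a single channel before the spatial filter, so it is not the same operation as a depthwise convolution.

```python
def conv2d_weight_count(in_channels, out_channels, kernel_size, groups=1):
    """Number of weights in a 2D convolution without bias."""
    if in_channels % groups or out_channels % groups:
        raise ValueError("channels must be divisible by groups")
    return out_channels * (in_channels // groups) * kernel_size * kernel_size


oup, k = 480, 3  # hypothetical channel count and kernel size

# Depthwise: one k x k filter per channel.
depthwise = conv2d_weight_count(oup, oup, k, groups=oup)
# Same layer with groups=1: every output channel sees every input channel.
dense = conv2d_weight_count(oup, oup, k, groups=1)
# Two-layer variant proposed above: 1x1 conv to one channel, then k x k conv.
two_layer = conv2d_weight_count(oup, 1, 1) + conv2d_weight_count(1, oup, k)

print(depthwise)  # 4320
print(dense)      # 2073600
print(two_layer)  # 4800
```

With `groups=1` each depthwise layer grows by a factor of `oup`, which would be consistent with the much larger weight file reported here.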

@lukemelas The TensorRT engine built from the ONNX file is still slower than the engine built from the TF model: it takes ~10 ms in my task, while the TF model takes ~5 ms. It seems the ONNX graph affects how the TensorRT engine is built.

@xiaochus I also find the PyTorch model very slow and want to speed it up. Do you have a TF model for GPU? The official TF model is for TPU, so I cannot use it.

@qiaoyaya2011 In the official TF code you can set the parameter `--use_tpu=False`, and it will then use your GPU or CPU.

@freedom521jin Thanks, I will try it later today. Please keep in touch.

Maybe this implementation is wrong and/or there are some memory transfers across devices?

I ran into the same problem.

According to https://github.com/pytorch/pytorch/issues/18631#issuecomment-798815648, this might be improved with the latest cuDNN. Has anyone checked whether that's true?

But why is there a difference when both PyTorch and TF use cuDNN?