vcluster

Create a PersistentVolume for the PersistentVolumeClaim when the cluster does not support dynamic volume provisioning

Hello, the vCluster CLI creates a PVC but does not create a PV; instead, it waits for a PV to be created through dynamic volume provisioning (IMHO). If the cluster only supports static provisioning, the vCluster Pods stay in the Pending state.

It would be nice to create the PV as well, to avoid this kind of problem 😋

@developer-guy thanks for creating this issue! Yes that's true, but I'm not sure if we can create one automatically or if we should add this to the helm chart

Thank you for such a quick response @FabianKramm. Ah yes, you're right! That would definitely be the more proper way of doing it. If you can point me to where to look, I can do it 🤩

@developer-guy everything chart related can be found here: https://github.com/loft-sh/vcluster/tree/main/chart.

Related to #145

I couldn't decide which PV type to use. I think the most basic PV type is hostPath, but I'm not sure everything will work fine once I add this feature. Can you help me a bit? I could accept a flag to enable this feature and use it in the getDefaultReleaseValues function to pass it to the Helm chart.
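For reference, here is a minimal sketch of what a statically provisioned hostPath PV could look like. It is not what the vcluster chart does; the helper name `render_hostpath_pv`, the PV name, the size, and the host path are all hypothetical. It also assumes the PVC requests no storage class, since a PV without a class only binds to such claims.

```shell
#!/bin/sh
# Hypothetical helper: prints a hostPath PersistentVolume manifest to stdout.
# Arguments: PV name, capacity (e.g. 5Gi), directory on the node.
render_hostpath_pv() {
  name="$1"
  size="$2"
  path="$3"
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}
spec:
  capacity:
    storage: ${size}
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  hostPath:
    path: ${path}
EOF
}

# Example usage (illustrative values, requires access to a cluster):
#   render_hostpath_pv vcluster-data-pv 5Gi /mnt/vcluster-data | kubectl apply -f -
```

Note that hostPath volumes tie the data to a single node, so this is mainly suitable for single-node or test clusters.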

Hello, @developer-guy, @dentrax and I worked on this issue. We have a couple of alternatives to automate the creation of PV.

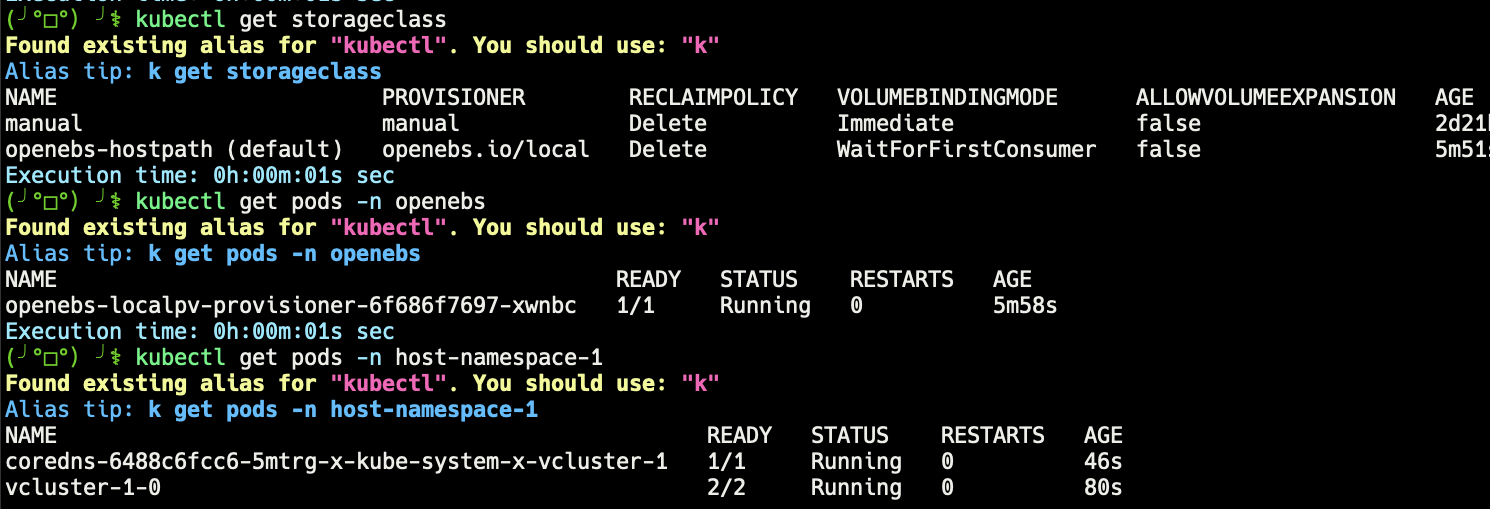

Solution: Install OpenEBS as dynamic local PV provisioner

OpenEBS Dynamic Local PV provisioner can dynamically provision Kubernetes Local Volumes using different kinds of storage available on the Kubernetes nodes.

- Add OpenEBS as a conditional subchart:

  - https://newbedev.com/helm-conditionally-install-subchart

- Add a flag to the vcluster CLI to enable deploying the OpenEBS Helm chart, for example: --enable-dynamic-pv

- Make OpenEBS hostpath the default storage class:

  kubectl patch storageclass openebs-hostpath -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

Alternatives:

@developer-guy @eminaktas thanks for the info! While this certainly works, I'm not entirely sure that we should add this as a subchart, as this sounds more like a general Kubernetes cluster setup step to me than something vcluster should depend on. I would be okay with adding this as a flag, though, where vcluster would check if OpenEBS is already installed and, if not, would install it as a separate helm chart and make the other adjustments, since persistent storage is currently the biggest pain point during installation. A separate installation would also make it possible to use this flag with every newly created vcluster in a single host cluster.

@FabianKramm My two cents on this: I would not like to see an OpenEBS installation as any part of the chart or the vcluster create command, since I believe that vcluster should require the fewest permissions possible in a cluster. Installing storage is the exact opposite. I think we should not assume that people are cluster admins when using vcluster.

I don't see why admins can't run the helm install separately themselves. Alternatively, we could add a vcluster setup-cluster command to install things like this and clearly separate it in a command for admins only or a vcluster preflight command to run checks and point users with links to docs page guides for how to add certain capabilities if they are not available in the cluster. But again, I don't think any of this should be part of vcluster create or the vcluster helm chart.
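To make the preflight idea a bit more concrete, here is a rough sketch of one such check: whether the cluster has a default StorageClass. This is only an illustration using plain kubectl, not an existing vcluster command; the function names `default_storageclasses` and `preflight_storage` are hypothetical.

```shell
#!/bin/sh
# Prints the names of StorageClasses annotated as the cluster default.
default_storageclasses() {
  kubectl get storageclass \
    -o jsonpath='{.items[?(@.metadata.annotations.storageclass\.kubernetes\.io/is-default-class=="true")].metadata.name}'
}

# Hypothetical preflight check: returns 0 if a default StorageClass exists,
# 1 otherwise (including when kubectl prints nothing because it failed).
preflight_storage() {
  defaults="$(default_storageclasses)"
  if [ -n "$defaults" ]; then
    echo "ok: default StorageClass found: $defaults"
  else
    echo "warning: no default StorageClass; PVCs without a storageClassName will stay Pending unless a matching PV exists"
    return 1
  fi
}
```

A check like this only needs read access to StorageClasses, so it fits the goal of not assuming cluster-admin permissions.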

Hello @LukasGentele, thank you for the answer; that is understandable. We've already developed our solution and opened a PR. As you suggested, should we create a separate command such as setup-cluster and move the OpenEBS installation logic into it? We could also add a second command alongside it, preflight, that only checks whether the cluster is in an appropriate state. WDYT?

cc: @dentrax @eminaktas

From the comments, it sounds like this issue is resolved, so I'll close it. But drop a comment if I got it wrong :)