linode-blockstorage-csi-driver


OpenEBS might have conflicts with Linode Block Storage CSI

I found that after deploying OpenEBS on a cluster, the Linode Block Storage CSI driver stops working. I have reproduced this several times on two clusters.

Today I was running dbench to compare the performance of OpenEBS + cStor, Mayastor, and Linode Block Storage CSI. The Linode Block Storage CSI test worked well before I installed Mayastor and OpenEBS + cStor. After both were installed, I ran the Linode Block Storage CSI test again and it failed with these errors:

    Events:
      Type  Reason  Age  From  Message
      ----  ------  ----  ----  -------
      Warning  FailedScheduling  2m19s  default-scheduler  0/3 nodes are available: 3 pod has unbound immediate PersistentVolumeClaims. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling.
      Warning  FailedScheduling  2m13s  default-scheduler  0/3 nodes are available: 3 pod has unbound immediate PersistentVolumeClaims. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling.
      Normal  Scheduled  2m9s  default-scheduler  Successfully assigned default/dbench-linode-smnck to lke101961-152688-643422e04d3e
      Normal  NotTriggerScaleUp  2m14s  cluster-autoscaler  pod didn't trigger scale-up:
      Normal  SuccessfulAttachVolume  2m6s  attachdetach-controller  AttachVolume.Attach succeeded for volume "pvc-6f2d8d3a09254be7"
      Warning  FailedMount  2m2s (x4 over 2m6s)  kubelet  MountVolume.MountDevice failed for volume "pvc-6f2d8d3a09254be7" : rpc error: code = Internal desc = Unable to find device path out of attempted paths: [/dev/disk/by-id/linode-pvc6f2d8d3a09254be7 /dev/disk/by-id/scsi-0Linode_Volume_pvc6f2d8d3a09254be7]
      Warning  FailedMount  61s (x4 over 118s)  kubelet  MountVolume.MountDevice failed for volume "pvc-6f2d8d3a09254be7" : rpc error: code = Internal desc = Failed to format and mount device from ("/dev/disk/by-id/scsi-0Linode_Volume_pvc6f2d8d3a09254be7") to ("/var/lib/kubelet/plugins/kubernetes.io/csi/linodebs.csi.linode.com/6c5e77008f10afe65b00ca7f3d2a2f2c5210062136a0c7c6ccf2d399cc2f3d69/globalmount") with fstype ("ext4") and options ([]): mount failed: exit status 255
      Mounting command: mount
      Mounting arguments: -t ext4 -o defaults /dev/disk/by-id/scsi-0Linode_Volume_pvc6f2d8d3a09254be7 /var/lib/kubelet/plugins/kubernetes.io/csi/linodebs.csi.linode.com/6c5e77008f10afe65b00ca7f3d2a2f2c5210062136a0c7c6ccf2d399cc2f3d69/globalmount
      Output: mount: mounting /dev/disk/by-id/scsi-0Linode_Volume_pvc6f2d8d3a09254be7 on /var/lib/kubelet/plugins/kubernetes.io/csi/linodebs.csi.linode.com/6c5e77008f10afe65b00ca7f3d2a2f2c5210062136a0c7c6ccf2d399cc2f3d69/globalmount failed: Invalid argument
      Warning  FailedMount  7s  kubelet  Unable to attach or mount volumes: unmounted volumes=[dbench-pv], unattached volumes=[dbench-pv kube-api-access-n6rv6]: timed out waiting for the condition
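
For anyone debugging the same events on a node, here is a minimal Python sketch that rebuilds the two device paths named in the first `FailedMount` message and reports which of them exist. The naming pattern (drop the hyphen from the volume name, then prefix it with `linode-` or `scsi-0Linode_Volume_`) is only read off the event text above; it is an assumption, not something taken from the driver source. Both function names are hypothetical.

```python
from pathlib import Path


def candidate_device_paths(volume_name: str) -> list[str]:
    """Return the by-id paths seen in the FailedMount event for a volume.

    Assumption: the label is the volume name with hyphens removed, as in
    "pvc-6f2d8d3a09254be7" -> "pvc6f2d8d3a09254be7" in the event above.
    """
    label = volume_name.replace("-", "")
    return [
        f"/dev/disk/by-id/linode-{label}",
        f"/dev/disk/by-id/scsi-0Linode_Volume_{label}",
    ]


def existing_device_paths(volume_name: str) -> list[str]:
    """Filter the candidates down to the paths present on this node."""
    return [p for p in candidate_device_paths(volume_name) if Path(p).exists()]
```

Running `existing_device_paths("pvc-6f2d8d3a09254be7")` on the affected node shows whether the attach step produced a device link at all, which separates the "Unable to find device path" failure from the later mount failure.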

I could not find anything useful by searching online. I eventually suspected a conflict among the three solutions, so I uninstalled OpenEBS + cStor first, and Linode Block Storage CSI started working again.

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: dbench-linode-pv-claim
    spec:
      # storageClassName: mayastor-3
      storageClassName: linode-block-storage-retain
      # storageClassName: gp2
      # storageClassName: local-storage
      # storageClassName: ibmc-block-bronze
      # storageClassName: ibmc-block-silver
      # storageClassName: ibmc-block-gold
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 100Gi
    ---
    apiVersion: batch/v1
    kind: Job
    metadata:
      name: dbench-linode
    spec:
      template:
        spec:
          containers:
            - name: dbench
              # https://github.com/leeliu/dbench/issues/4
              image: slegna/dbench:1.0
              imagePullPolicy: Always
              env:
                - name: DBENCH_MOUNTPOINT
                  value: /data
                # - name: DBENCH_QUICK
                #   value: "yes"
                # - name: FIO_SIZE
                #   value: 1G
                # - name: FIO_OFFSET_INCREMENT
                #   value: 256M
                # - name: FIO_DIRECT
                #   value: "0"
              volumeMounts:
                - name: dbench-pv
                  mountPath: /data
          restartPolicy: Never
          volumes:
            - name: dbench-pv
              persistentVolumeClaim:
                claimName: dbench-linode-pv-claim
      backoffLimit: 4

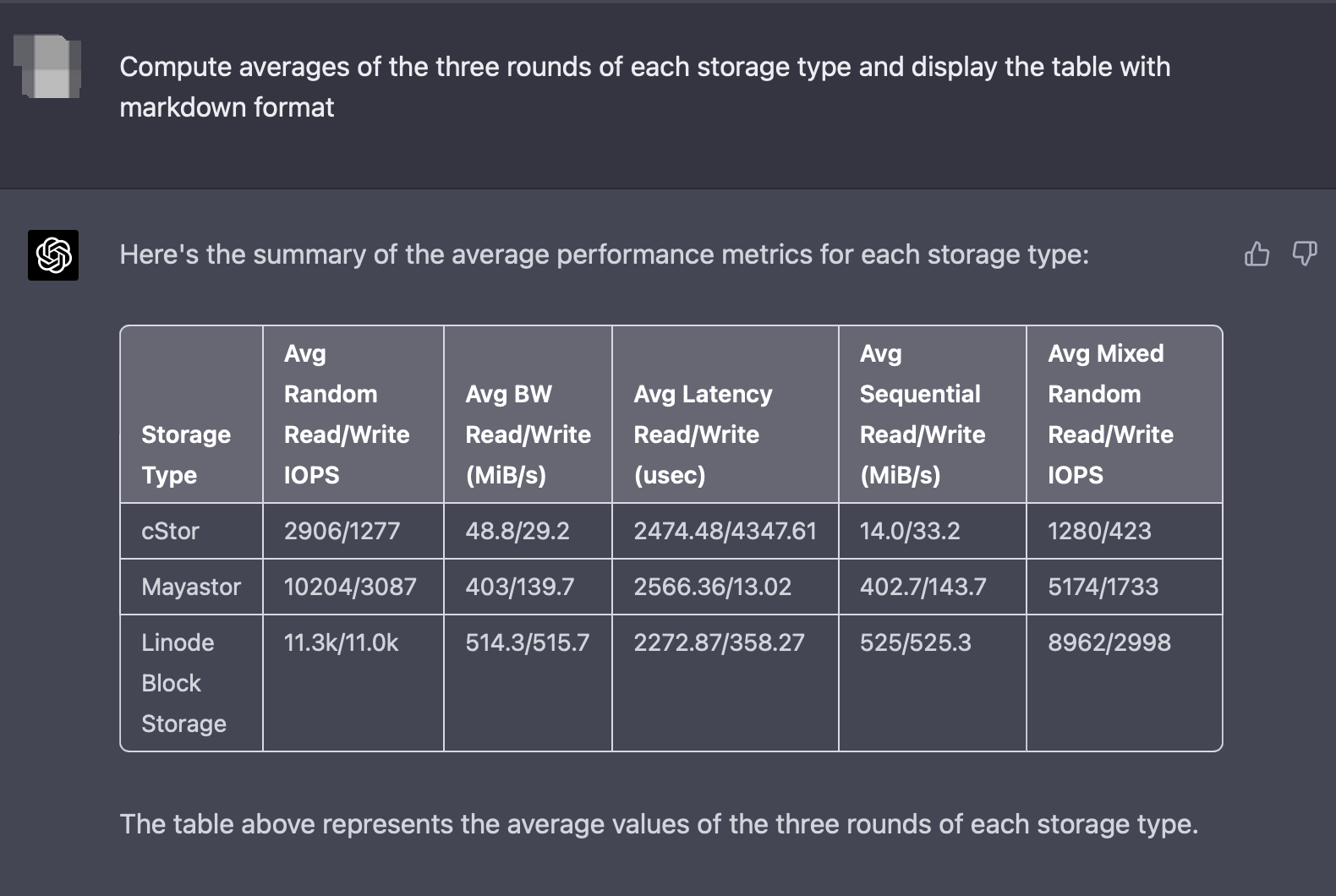

BTW, this is my performance benchmark result:

Summary from ChatGPT-4:

Hi @kvzn! :wave:

Are you still experiencing this issue with having the Linode CSI driver and OpenEBS installed at the same time?

If you are, could you possibly provide some logs from the Linode CSI driver's controller and node pods when you attempt to create, publish, and stage a volume?

Hi @kvzn,

The root cause of the conflict between OpenEBS and the Linode CSI driver has been identified:

- The Linode Controller Server creates the volume and attaches it to the node.
- The OpenEBS Node Disk Manager (NDM) detects the new volume and creates a partition on it.
- The Linode Node Server tries to mount the volume as ext4 but fails because of the OpenEBS partitioning. The logs show OpenEBS NDM performing partition operations on the PVC created by the Linode CSI driver. The Linode Node Server then fails to mount because it finds partitions where it expects ext4.
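
To check this sequence on an affected node, one option is to look at the first bytes of the raw device and see whether they carry a partition table or an ext superblock. The Python sketch below is a heuristic, not part of either project: it relies on the standard on-disk signatures (MBR boot signature `0x55AA` at byte 510, the GPT header string `EFI PART` at byte 512 on 512-byte-sector disks, and the ext2/3/4 magic `0xEF53` at byte 1080). The function names are hypothetical, and reading a device node typically needs root.

```python
import struct

HEADER_SIZE = 2048            # enough to cover all three signatures
MBR_SIG_OFFSET = 510          # last two bytes of sector 0
GPT_SIG_OFFSET = 512          # start of LBA 1, assuming 512-byte sectors
EXT_MAGIC_OFFSET = 1024 + 56  # s_magic field of the superblock at byte 1024
EXT_MAGIC = 0xEF53            # shared by ext2, ext3 and ext4


def classify_header(header: bytes) -> str:
    """Classify the first 2048 bytes of a block device.

    Returns "gpt", "mbr", "ext" or "unknown". GPT is checked first because
    a GPT disk also carries a protective MBR in sector 0.
    """
    if len(header) < HEADER_SIZE:
        raise ValueError(f"need at least {HEADER_SIZE} bytes, got {len(header)}")
    if header[GPT_SIG_OFFSET:GPT_SIG_OFFSET + 8] == b"EFI PART":
        return "gpt"
    if header[MBR_SIG_OFFSET:MBR_SIG_OFFSET + 2] == b"\x55\xaa":
        return "mbr"
    (magic,) = struct.unpack_from("<H", header, EXT_MAGIC_OFFSET)
    if magic == EXT_MAGIC:
        return "ext"
    return "unknown"


def classify_device(path: str) -> str:
    """Read the header from a device node or image file and classify it."""
    with open(path, "rb") as dev:
        return classify_header(dev.read(HEADER_SIZE))
```

If `classify_device` returns `"gpt"` or `"mbr"` for the `/dev/disk/by-id/scsi-0Linode_Volume_...` path from the events, the whole-disk device no longer starts with an ext4 filesystem, which is consistent with `mount -t ext4` failing with `Invalid argument`.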

This conflict seems to have been resolved in the OpenEBS 4.x release.

Thanks for reporting the issue! If you continue to see it with the new OpenEBS (4.x) release, feel free to reopen the issue :)