containerized-data-importer

HTTP import Ubuntu Server image to DataVolume is extremely slow

What happened:

Using CDI to import a disk image hosted on an HTTP endpoint is extremely slow.

What you expected to happen:

Importing a disk image is fast.

How to reproduce it (as minimally and precisely as possible):

Apply the following DataVolume:

```yaml
apiVersion: cdi.kubevirt.io/v1beta1
kind: DataVolume
metadata:
  namespace: default
  name: ubuntu-jammy2
spec:
  source:
    http:
      url: "https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img"
  pvc:
    accessModes:
      - ReadWriteOnce
    resources:
      requests:
        storage: 5Gi
```

Additional context:

It's only 32% completed after 21 minutes.

```
PS /home/ubuntu> sudo kubectl get dv
NAME            PHASE              PROGRESS   RESTARTS   AGE
ubuntu-jammy                                             37m
ubuntu-jammy2   ImportInProgress   32.14%                21m
```
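For scale, a straight-line extrapolation of those numbers puts the whole import at a little over an hour. A tiny Python sketch of that arithmetic, assuming a constant import rate (real transfers rarely have one); `projected_total_minutes` is a hypothetical helper, not part of CDI:

```python
def projected_total_minutes(elapsed_min: float, percent_done: float) -> float:
    """Linearly extrapolate the total import time from elapsed time and progress.

    Assumes a constant import rate, which real transfers rarely have.
    """
    if not 0 < percent_done <= 100:
        raise ValueError("percent_done must be in (0, 100]")
    return elapsed_min * 100.0 / percent_done


elapsed = 21.0    # AGE column for ubuntu-jammy2
progress = 32.14  # PROGRESS column for ubuntu-jammy2
total = projected_total_minutes(elapsed, progress)
print(f"projected total: {total:.1f} min, remaining: {total - elapsed:.1f} min")
```

With 21 minutes elapsed at 32.14%, this projects roughly 65 minutes in total, or about 44 minutes still to go.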

Environment:

- CDI version (use `kubectl get deployments cdi-deployment -o yaml`): v1.51.0
- Kubernetes version (use `kubectl version`): K3S 1.23.8
- DV specification: N/A
- Cloud provider or hardware configuration: AWS `c5.metal` instance type
- OS (e.g. from /etc/os-release): Ubuntu Server 22.04 LTS Jammy Jellyfish
- Kernel (e.g. `uname -a`): N/A
- Install tools: N/A
- Others: N/A

Related to #2058

So honestly, this has been reported before, and it turns out the cloud-images.ubuntu.com HTTP endpoint is just slow. If you download the file manually and serve it from a different HTTP server (one you control, for instance), the import is fast.

@awels Hello, thank you for responding. I linked this issue to #2058 because I was unable to reopen it.

I use SpaceX Starlink satellite internet at home, and I am able to download the Ubuntu 22.04 LTS cloud image at ~10 MB/sec. Hence, I am reasonably confident that running the same process on a bare metal AWS cloud server should yield much better performance.

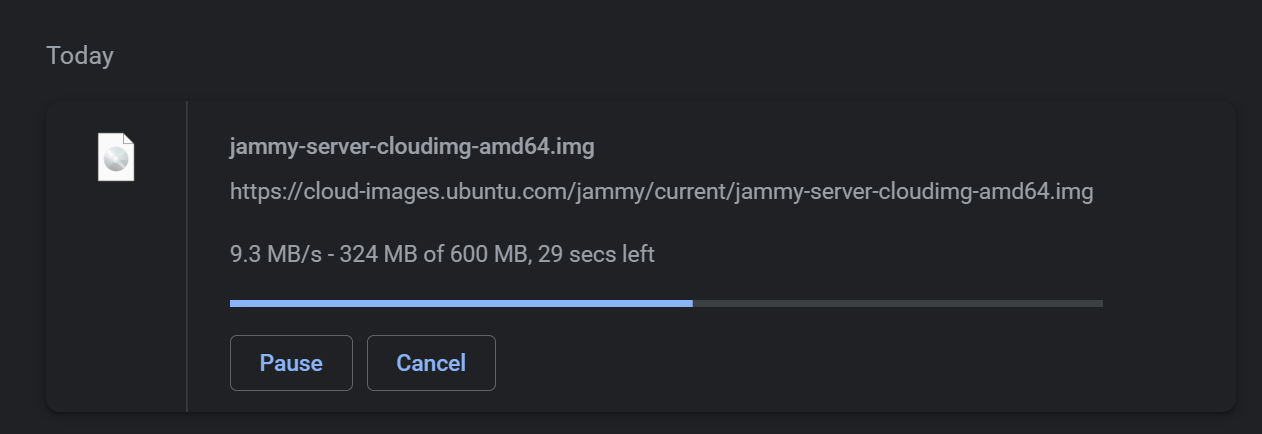

Here's the download going in Chrome, to my local developer workstation:

I'm not inclined to believe that the Canonical Ubuntu cloud image server is necessarily the root cause of this problem.

@pcgeek86 @awels

It appears that `qemu-img convert` is the culprit here. On my system:

- `wget https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img` took 40s
- `qemu-img convert -p -O raw https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img disk.img` took 10 minutes

This is because qemu-img convert does a bunch of small range requests rather than streaming the entire file in one go.
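A rough back-of-the-envelope model of why this hurts: if each range request waits a full round trip before its data arrives, latency is paid once per request instead of once per file. The sketch below is a simplified Python model assuming one request in flight at a time; the chunk size, RTT and bandwidth are hypothetical values, not measured `qemu-img` behaviour, and it ignores TCP effects such as slow start and connection reuse.

```python
def sequential_range_time(size_bytes: int, chunk_bytes: int,
                          rtt_s: float, bytes_per_s: float) -> float:
    """Seconds to fetch a file as back-to-back range requests, one in flight at a time.

    Every request pays a full round trip before its data arrives; the transfer
    times of all chunks add up to size / bandwidth.
    """
    n_requests = -(-size_bytes // chunk_bytes)  # ceiling division
    return n_requests * rtt_s + size_bytes / bytes_per_s


def streaming_time(size_bytes: int, rtt_s: float, bytes_per_s: float) -> float:
    """Seconds to fetch the same file with one GET: a single round trip, then a stream."""
    return rtt_s + size_bytes / bytes_per_s


# Hypothetical inputs for illustration only (not measured values):
size = 640 * 2**20   # 640 MiB image
chunk = 64 * 2**10   # 64 KiB per range request
rtt = 0.05           # 50 ms round trip
bandwidth = 100e6    # 100 MB/s link, in bytes per second

print(f"range requests: {sequential_range_time(size, chunk, rtt, bandwidth):.0f} s")
print(f"single stream:  {streaming_time(size, rtt, bandwidth):.1f} s")
```

With these made-up numbers, 10,240 requests spend about 512 s waiting on round trips against under 7 s of actual transfer. The real per-request overhead of `qemu-img` and the server is not known here, so this only shows why the request pattern, rather than raw bandwidth, can dominate.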

Also, assuming our content type detection is working correctly, it is completely unnecessary to run qemu-img convert.

Ah, it appears that the file is qcow2, so conversion is necessary. I assumed from the ".img" extension that it was raw.
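The `.img` extension says nothing reliable about the format; this Ubuntu cloud image uses it for qcow2 content. Checking the magic bytes at the start of the file is more dependable (`qemu-img info` also reports the detected format). A minimal Python sketch that only recognizes qcow2; it is not CDI's actual detection code, and the function names are hypothetical:

```python
QCOW2_MAGIC = b"QFI\xfb"  # qcow2 header magic (0x514649FB), stored at byte offset 0


def looks_like_qcow2(header: bytes) -> bool:
    """True if the first four bytes match the qcow2 magic number."""
    return header[:4] == QCOW2_MAGIC


def sniff_format(path: str) -> str:
    """Classify a local image by its content rather than by its file extension."""
    with open(path, "rb") as f:
        header = f.read(4)
    # Raw images have no header at all, so "not qcow2" is the most we can say here.
    return "qcow2" if looks_like_qcow2(header) else "not qcow2 (possibly raw)"
```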

Looks like we now get into the tradeoff where it is probably faster to download the file to scratch space before doing the conversion. Maybe we should discuss making this configurable.
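The download-first alternative amounts to two steps: fetch the whole file in one streaming GET, then convert the local copy. A Python sketch under stated assumptions: `wget` and `qemu-img` are on `PATH`, and `scratch_commands`/`run_all` are hypothetical helpers for a manual run, not how CDI's importer implements scratch space.

```python
import subprocess
from pathlib import Path, PurePosixPath
from urllib.parse import urlparse


def scratch_commands(url: str, scratch_dir: Path, target: Path) -> list[list[str]]:
    """Build the download-then-convert commands (hypothetical helper).

    Step 1 streams the whole file to local scratch space in a single HTTP GET.
    Step 2 converts the local copy, so qemu-img's many small reads hit local
    disk instead of turning into HTTP range requests.
    """
    scratch_file = scratch_dir / PurePosixPath(urlparse(url).path).name
    return [
        ["wget", "-O", str(scratch_file), url],
        ["qemu-img", "convert", "-p", "-O", "raw", str(scratch_file), str(target)],
    ]


def run_all(commands: list[list[str]]) -> None:
    """Run each command in order, stopping at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

Usage would be something like `run_all(scratch_commands(url, Path("/scratch"), Path("disk.img")))`. The cost is that scratch space must hold the full downloaded image before conversion starts, which is the tradeoff behind making this configurable.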

Issues go stale after 90d of inactivity.

Mark the issue as fresh with /remove-lifecycle stale.

Stale issues rot after an additional 30d of inactivity and eventually close.

If this issue is safe to close now please do so with /close.

/lifecycle stale

/remove-lifecycle stale

- The issue is still present; I was able to reproduce it with v1.55.0.
- Debian is also affected: https://cloud.debian.org/images/cloud/bullseye/latest/debian-11-genericcloud-amd64.qcow2

Workaround:

In some special cases, the CDI importer does not go through the nbdkit curl plugin, but instead first downloads the file to scratch space (see https://github.com/kubevirt/containerized-data-importer/blob/main/doc/scratch-space.md). One of these cases is when the server at the source URL uses a custom certificate authority (CA). We can force the scratch space method by providing an empty custom CA. The importer should pick up the OS cert store, append our empty CA, and helpfully not complain that the custom CA is an empty string. I haven't verified it, but in theory the importer should still validate the server's certificate against the OS cert store.

This workaround is risky. It relies on internal implementation details and the behavior could change between patch versions.

```yaml
apiVersion: cdi.kubevirt.io/v1beta1
kind: DataVolume
metadata:
  name: ubuntu-jammy
spec:
  source:
    http:
      # certConfigMap with an empty cert is to work around this issue:
      # https://github.com/kubevirt/containerized-data-importer/issues/2358
      certConfigMap: empty-cert
      url: https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img
  pvc:
    accessModes:
      - ReadWriteOnce
    resources:
      requests:
        storage: 5Gi
---
apiVersion: cdi.kubevirt.io/v1beta1
kind: DataVolume
metadata:
  name: debian-11
spec:
  source:
    http:
      # certConfigMap with an empty cert is to work around this issue:
      # https://github.com/kubevirt/containerized-data-importer/issues/2358
      certConfigMap: empty-cert
      url: https://cloud.debian.org/images/cloud/bullseye/latest/debian-11-genericcloud-amd64.qcow2
  pvc:
    accessModes:
      - ReadWriteOnce
    resources:
      requests:
        storage: 5Gi
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: empty-cert
data:
  ca.pem: ""
```
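If you need the workaround for more than a couple of images, the manifests can be generated rather than written by hand. A Python sketch that emits the same objects as the YAML above, wrapped in a `v1` `List` so that `kubectl apply -f -` can take them in one go. The function name and defaults are our own choices, and the generated objects carry all the risks of the workaround itself.

```python
import json


def workaround_manifests(images: dict[str, str], size: str = "5Gi",
                         cm_name: str = "empty-cert") -> dict:
    """Build a v1 List holding the empty-CA ConfigMap plus one DataVolume per image."""
    items = [{
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": cm_name},
        "data": {"ca.pem": ""},  # empty custom CA that forces the scratch-space path
    }]
    for name, url in images.items():
        items.append({
            "apiVersion": "cdi.kubevirt.io/v1beta1",
            "kind": "DataVolume",
            "metadata": {"name": name},
            "spec": {
                "source": {"http": {"url": url, "certConfigMap": cm_name}},
                "pvc": {
                    "accessModes": ["ReadWriteOnce"],
                    "resources": {"requests": {"storage": size}},
                },
            },
        })
    return {"apiVersion": "v1", "kind": "List", "items": items}


if __name__ == "__main__":
    print(json.dumps(workaround_manifests({
        "ubuntu-jammy": "https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img",
        "debian-11": "https://cloud.debian.org/images/cloud/bullseye/latest/debian-11-genericcloud-amd64.qcow2",
    }), indent=2))
```

Saved as, say, `gen.py`, it could be applied with `python3 gen.py | kubectl apply -f -`.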

I implemented multi-conn in nbdkit-curl-plugin, which considerably improves performance:

- https://gitlab.com/nbdkit/nbdkit/-/commit/bb0f93ad7b9de451874d0c54188bf69cd37c5409
- https://gitlab.com/nbdkit/nbdkit/-/commit/ebf1ad8568b688c58c931f282db60afc2ccb981f
- https://gitlab.com/nbdkit/nbdkit/-/commit/38dccd848bd40cccdf012df7a606e13282aaeecb
- https://gitlab.com/nbdkit/nbdkit/-/commit/6c6b8c225ad3dfa07f20ca419063f5b1840bcade
- https://gitlab.com/nbdkit/nbdkit/-/commit/a132e242e166ff3d4688d5eb3c38b0f0c9c4d198

We're looking at the other side of this, which is implementing multi-conn in qemu-img convert. I'll let you know what happens.

With both changes it ought to run as fast as wget + convert.
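The general idea behind multi-conn is that several connections each fetch a different byte range at the same time, so round-trip latency overlaps instead of adding up. A Python sketch of that idea, assuming the server honours HTTP `Range` requests and that the file size is already known (for example from a `Content-Length` header); the helper names are hypothetical, and this is not how nbdkit or qemu-img implement multi-conn, which spread many smaller requests over a fixed pool of connections.

```python
import concurrent.futures
import urllib.request


def split_ranges(size: int, n_conns: int) -> list[tuple[int, int]]:
    """Split bytes [0, size) into at most n_conns contiguous, inclusive ranges."""
    if size <= 0 or n_conns <= 0:
        raise ValueError("size and n_conns must be positive")
    step = -(-size // n_conns)  # ceiling division so the ranges cover every byte
    return [(start, min(start + step, size) - 1) for start in range(0, size, step)]


def fetch_range(url: str, first: int, last: int) -> bytes:
    """Fetch one inclusive byte range with an HTTP Range request."""
    req = urllib.request.Request(url, headers={"Range": f"bytes={first}-{last}"})
    with urllib.request.urlopen(req) as resp:
        if resp.status != 206:  # 206 Partial Content means the range was honoured
            raise RuntimeError(f"server ignored the Range header (HTTP {resp.status})")
        return resp.read()


def parallel_download(url: str, size: int, n_conns: int = 4) -> bytes:
    """Fetch all ranges concurrently on separate connections and reassemble them."""
    ranges = split_ranges(size, n_conns)
    with concurrent.futures.ThreadPoolExecutor(max_workers=n_conns) as pool:
        # Executor.map yields results in input order, so the chunks join correctly.
        return b"".join(pool.map(lambda r: fetch_range(url, *r), ranges))
```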

It seems that the next step is to try the new multi-conn feature to see if it improves performance. Long term, we need to decide if we want to switch to always downloading to scratch space.

Here are some interesting performance numbers that Rich posted to the qemu mailing list while experimenting with whether multiple client sockets over NBD, coupled with multiple curl handles to the server, would make a difference: https://lists.gnu.org/archive/html/qemu-devel/2023-03/msg03320.html