cloud-provider-azure

cloud-provider-azure copied to clipboard

cloud-provider-azure copied to clipboard

Unable to set Loadbalancer Service Health Probe Port

Reopen from #1499

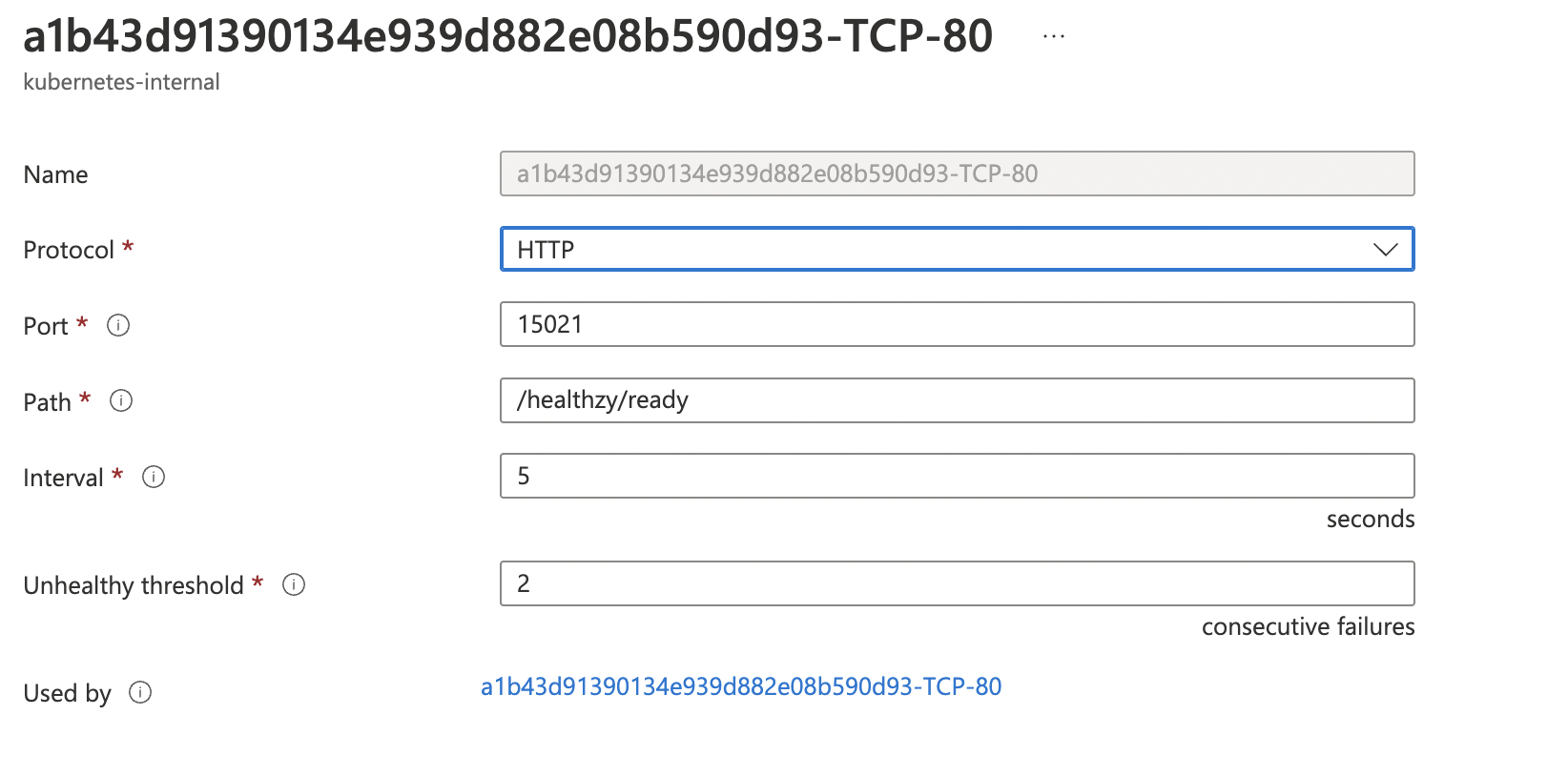

When configuring Health Checks for Azure Load Balancer you can specify the path and type, but it is not possible to specify the port of the health probe.

Istio Ingress Gateway and similar services do not expose health checks on their actual endpoints (as it can operate across multiple protocols) but instead expose a general health check on a different status port for the gateway.

In AWS this is easily possible with the service.beta.kubernetes.io/aws-load-balancer-healthcheck-port annotation but it does not seem possible in Azure.

This prevents us from configuring health check probes against Istio which need to be targeting the "Status Ready" port not the actual port of the backend.

Example

Istio hosted on two ports: Port 80 and Port 443 will not serve any traffic until a Virtual Service is deployed The health check needs to be a HTTP health check against the status port, let's say that's 15021 The probe protocol and endpoint and everything can be set, but the health check port cannot be.

For the following spec:

spec:

ports:

- name: http2

protocol: TCP

port: 80

targetPort: 8080

- name: https

protocol: TCP

port: 443

targetPort: 8443

The generated probe needs to look like this:

@MartinForReal I've started down this path: https://github.com/vsabella/cloud-provider-azure/commit/5303352c2291d3727d662a281fd0547de0d9d68f

If that looks like it makes sense for a fix I'll complete it also for the MixedLB mode /port_{num}-.... annotations

It also looks like since priority is given to port.AppProtocol we are also unable to:

- set appProtocol to the correct value (https)

- expect the health probe to be a different protocol (say http)

The ability to decouple the health check from the actual service entry is key to getting Istio ingressgateway to have correct health checks / drain behavior / etc...

if port.AppProtocol == nil {

if port.AppProtocol, err = consts.GetAttributeValueInSvcAnnotation(annotations, consts.ServiceAnnotationLoadBalancerHealthProbeProtocol); err != nil {

return nil, fmt.Errorf("failed to parse annotation %s: %w", consts.ServiceAnnotationLoadBalancerHealthProbeProtocol, err)

}

if port.AppProtocol == nil {

port.AppProtocol = to.StringPtr(string(network.ProtocolTCP))

}

}

Well, this is a pleasant surprise. :-)

It is reasonable for a fix. If we add two additional annotations, will it work in the current scenario?

port_{num}-probe-protocol

port_{num}-probe-port

Then the priority goes to two new annotations.

@feiskyer @nilo19 @lzhecheng Any advice is appreciated!

@vsabella Thanks for the contribution!

Well, this is a pleasant surprise. :-)

It is reasonable for a fix. If we add two additional annotations, will it work in the current scenario?

port_{num}-probe-protocol port_{num}-probe-portThen the priority goes to two new annotations.

@feiskyer @nilo19 @lzhecheng Any advice is appreciated!

@vsabella Thanks for the contribution!

Thats exactly what I was thinking and reasonable. I'll have a PR for you later this week.

Hi @vsabella Do you still have bandwidth to work on this?

The Kubernetes project currently lacks enough contributors to adequately respond to all issues and PRs.

This bot triages issues and PRs according to the following rules:

- After 90d of inactivity,

lifecycle/staleis applied - After 30d of inactivity since

lifecycle/stalewas applied,lifecycle/rottenis applied - After 30d of inactivity since

lifecycle/rottenwas applied, the issue is closed

You can:

- Mark this issue or PR as fresh with

/remove-lifecycle stale - Mark this issue or PR as rotten with

/lifecycle rotten - Close this issue or PR with

/close - Offer to help out with Issue Triage

Please send feedback to sig-contributor-experience at kubernetes/community.

/lifecycle stale

/remove-lifecycle stale

Checking on this as well, as we recently encountered this when our users started migrating to 1.24. Is the fork fix still under active development, or is there any ongoing work from Microsoft to implement these annotations?

FWIW, in our case the affected HTTP services:

- are not likely to serve 200s for

GET /, as they're proxy instances that often only route requests that include some configured host header. - require a TLS client certificate before accepting any requests.