mpi-operator

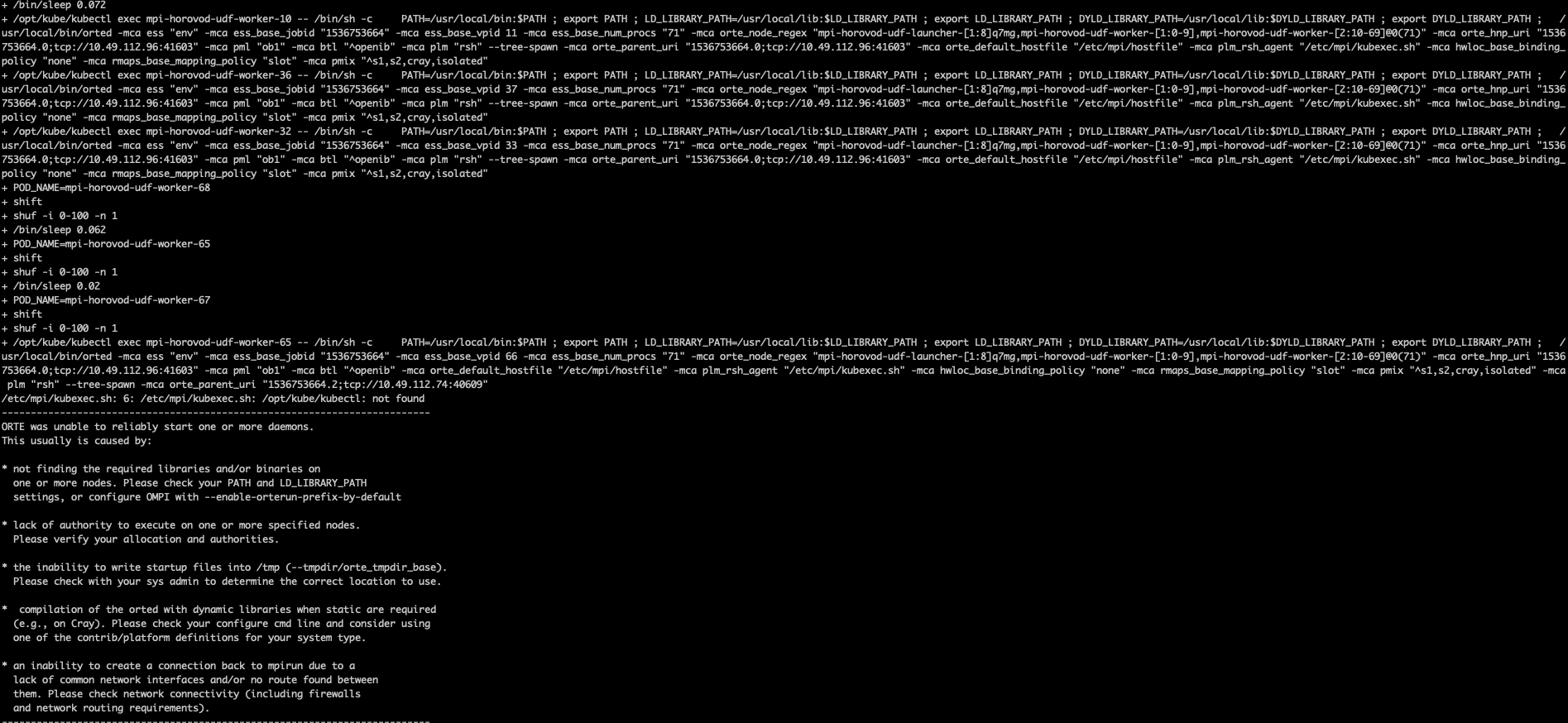

When the number of workers is high, the launcher fails with "kubectl not found"

We have been troubled by a weird problem. When the number of workers is low, everything is OK. But when the number of workers is high (70 in our case), the launcher fails with "kubectl not found". The detailed logs are below:

We consulted experts and got some suggestions. In the end, we put kubectl and its config in the correct directories of our image in advance, and everything works now. We are still confused about the root cause, so discussion is welcome.

Also found the same issue in our cluster...

It's possible that deliver_kubectl.sh is not being executed successfully (or finished) on each worker so that /opt/kube/kubectl may not exist yet. If you copy kubectl to /opt/kube/kubectl in advance in this Dockerfile (similar to my offline suggestion to you earlier), this problem should be fixed.
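One way to test this hypothesis is to wait for the binary to appear before starting the job. The snippet below is only a minimal sketch and is not part of mpi-operator: the helper name `wait_for_executable` and the timeout values are hypothetical, and the only thing taken from this thread is the path /opt/kube/kubectl.

```python
import os
import time


def wait_for_executable(path, timeout=60.0, poll_interval=1.0):
    """Return True once `path` is an executable file, or False on timeout.

    Hypothetical helper for illustration; mpi-operator does not ship it.
    """
    deadline = time.monotonic() + timeout
    while True:
        # The file must exist and carry the executable bit.
        if os.path.isfile(path) and os.access(path, os.X_OK):
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_interval)
```

Calling `wait_for_executable("/opt/kube/kubectl", timeout=120.0)` before launch would show whether the file is merely late (the call eventually returns True) or never arrives (it returns False). If it is only late, that points to a race with deliver_kubectl.sh rather than a missing file.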


We found that all the mount paths, including /opt/kube and /root/.kube, were deleted while the cluster spec was being sent to the workers. After that, the launcher uses its own /opt/kube/kubectl. The same goes for the kube config.

What is deleting the mount paths? You mentioned cluster spec, is this an issue with the TensorFlow version you are using? It’s weird that this only happens to some of your workers.