kcli

Does `kcli` throw breaking errors or not? In `Bash` it seems not necessarily always so.

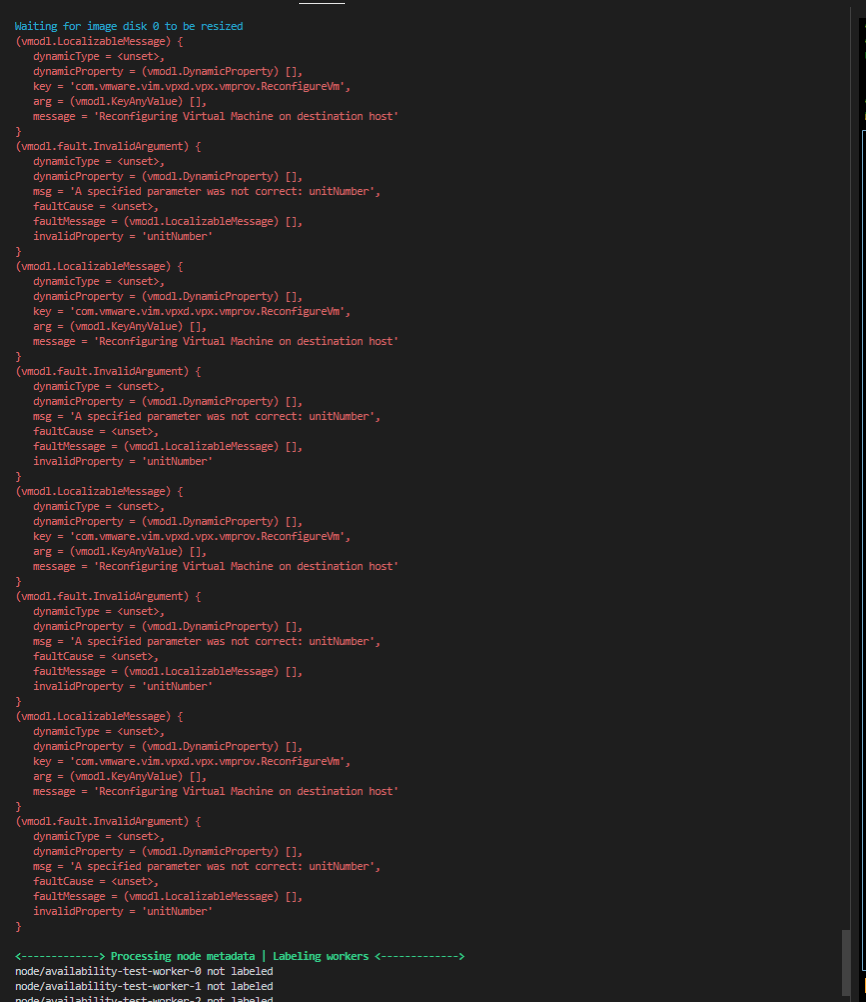

So we had the following error

When deploying a K3s cluster based on the following kcli parameter file:

#################
# KCLI VM Rules #
#################
vmrules:
#### MASTERS
- availability-test-master-0:
    nets:
    - name: Some_network
      dns: 192.168.10.11,192.168.10.12
      gateway: 192.168.22.1
      ip: 192.168.23.45
      mask: 255.255.254.0
      nic: ens192
    cmds:
    - bash /root/kubernemlig-k3s-ha-master.sh
    - bash /home/ubuntu/installEtcdctl
    - bash /root/handleCoreDnsYaml.sh --clusterType haCluster
    files:
    - path: /root/kubernemlig-k3s-ha-master.sh
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/bootstrapping/kubernemlig-k3s-ha-master.sh
    - path: /var/lib/rancher/audit/audit-policy.yaml
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/cluster-configuration/audit-logging/audit-policy.yaml
    - path: /var/lib/rancher/audit/webhook-config.yaml
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/cluster-configuration/audit-logging/webhook-config.yaml
    - path: /home/ubuntu/installEtcdctl
      currentdir: True
      origin: ~/iac-conductor/src/bash/installEtcdctl
    - path: /root/handleCoreDnsYaml.sh
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/bootstrapping/handleCoreDnsYaml.sh
    - path: /root/manifests/coredns-ha.yaml
      currentdir: True
      origin: ~/iac-conductor/infrastructure-services/network/dns/CoreDNS/internal/coredns-ha.yaml
    unplugcd: True
- availability-test-master-1:
    nets:
    - name: Some_network
      dns: 192.168.10.11,192.168.10.12
      gateway: 192.168.22.1
      ip: 192.168.23.46
      mask: 255.255.254.0
      nic: ens192
    cmds:
    - bash /root/kubernemlig-k3s-ha-master.sh
    - bash /home/ubuntu/installEtcdctl
    files:
    - path: /root/kubernemlig-k3s-ha-master.sh
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/bootstrapping/kubernemlig-k3s-ha-master.sh
    - path: /var/lib/rancher/audit/audit-policy.yaml
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/cluster-configuration/audit-logging/audit-policy.yaml
    - path: /var/lib/rancher/audit/webhook-config.yaml
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/cluster-configuration/audit-logging/webhook-config.yaml
    - path: /home/ubuntu/installEtcdctl
      currentdir: True
      origin: ~/iac-conductor/src/bash/installEtcdctl
    unplugcd: True
- availability-test-master-2:
    nets:
    - name: Some_network
      dns: 192.168.10.11,192.168.10.12
      gateway: 192.168.22.1
      ip: 192.168.23.47
      mask: 255.255.254.0
      nic: ens192
    cmds:
    - bash /root/kubernemlig-k3s-ha-master.sh
    - bash /home/ubuntu/installEtcdctl
    files:
    - path: /root/kubernemlig-k3s-ha-master.sh
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/bootstrapping/kubernemlig-k3s-ha-master.sh
    - path: /var/lib/rancher/audit/audit-policy.yaml
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/cluster-configuration/audit-logging/audit-policy.yaml
    - path: /var/lib/rancher/audit/webhook-config.yaml
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/cluster-configuration/audit-logging/webhook-config.yaml
    - path: /home/ubuntu/installEtcdctl
      currentdir: True
      origin: ~/iac-conductor/src/bash/installEtcdctl
    unplugcd: True
#### WORKERS
- availability-test-worker-0:
    nets:
    - name: Some_network
      dns: 192.168.10.11,192.168.10.12
      gateway: 192.168.22.1
      ip: 192.168.23.50
      mask: 255.255.254.0
      nic: ens192
    cmds:
    - bash /root/kubernemlig-k3s-worker.sh
    files:
    - path: /root/kubernemlig-k3s-worker.sh
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/bootstrapping/kubernemlig-k3s-worker.sh
    - path: /etc/udev/longhorn-data-disk-add.sh
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/cluster-configuration/storage/longhorn-data-disk-add.sh
    unplugcd: True
- availability-test-worker-1:
    nets:
    - name: Some_network
      dns: 192.168.10.11,192.168.10.12
      gateway: 192.168.22.1
      ip: 192.168.23.51
      mask: 255.255.254.0
      nic: ens192
    cmds:
    - bash /root/kubernemlig-k3s-worker.sh
    files:
    - path: /root/kubernemlig-k3s-worker.sh
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/bootstrapping/kubernemlig-k3s-worker.sh
    - path: /etc/udev/longhorn-data-disk-add.sh
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/cluster-configuration/storage/longhorn-data-disk-add.sh
    unplugcd: True
- availability-test-worker-2:
    nets:
    - name: Some_network
      dns: 192.168.10.11,192.168.10.12
      gateway: 192.168.22.1
      ip: 192.168.23.52
      mask: 255.255.254.0
      nic: ens192
    cmds:
    - bash /root/kubernemlig-k3s-worker.sh
    files:
    - path: /root/kubernemlig-k3s-worker.sh
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/bootstrapping/kubernemlig-k3s-worker.sh
    - path: /etc/udev/longhorn-data-disk-add.sh
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/cluster-configuration/storage/longhorn-data-disk-add.sh
    unplugcd: True
- availability-test-worker-3:
    nets:
    - name: Some_network
      dns: 192.168.10.11,192.168.10.12
      gateway: 192.168.22.1
      ip: 192.168.23.53
      mask: 255.255.254.0
      nic: ens192
    cmds:
    - bash /root/kubernemlig-k3s-worker.sh
    files:
    - path: /root/kubernemlig-k3s-worker.sh
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/bootstrapping/kubernemlig-k3s-worker.sh
    - path: /etc/udev/longhorn-data-disk-add.sh
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/cluster-configuration/storage/longhorn-data-disk-add.sh
    unplugcd: True
- availability-test-worker-4:
    nets:
    - name: Some_network
      dns: 192.168.10.11,192.168.10.12
      gateway: 192.168.22.1
      ip: 192.168.23.54
      mask: 255.255.254.0
      nic: ens192
    cmds:
    - bash /root/kubernemlig-k3s-worker.sh
    files:
    - path: /root/kubernemlig-k3s-worker.sh
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/bootstrapping/kubernemlig-k3s-worker.sh
    - path: /etc/udev/longhorn-data-disk-add.sh
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/cluster-configuration/storage/longhorn-data-disk-add.sh
    unplugcd: True
- availability-test-worker-5:
    nets:
    - name: Some_network
      dns: 192.168.10.11,192.168.10.12
      gateway: 192.168.22.1
      ip: 192.168.23.55
      mask: 255.255.254.0
      nic: ens192
    cmds:
    - bash /root/kubernemlig-k3s-worker.sh
    files:
    - path: /root/kubernemlig-k3s-worker.sh
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/bootstrapping/kubernemlig-k3s-worker.sh
    - path: /etc/udev/longhorn-data-disk-add.sh
      currentdir: True
      origin: ~/iac-conductor/kubernetes/deploy/cluster-configuration/storage/longhorn-data-disk-add.sh
    unplugcd: True

################################
# General deploy configuration #
################################
api_ip: 192.168.23.44
#extra_args:
extra_master_args:
- "--node-taint CriticalAddonsOnly=true:NoExecute"
- "--data-dir=/k3s-data"
#- "--disable-cloud-controller"
- "--disable=coredns"
- "--disable-kube-proxy"
- "--disable=local-storage"
- "--disable-network-policy"
- "--disable=servicelb"
- "--disable=traefik"
- "--kube-apiserver-arg=audit-log-path=/var/lib/rancher/audit/audit.log"
- "--kube-apiserver-arg=audit-policy-file=/var/lib/rancher/audit/audit-policy.yaml"
- "--kube-apiserver-arg=audit-webhook-config-file=/var/lib/rancher/audit/webhook-config.yaml"
- "--kube-apiserver-arg=audit-log-maxage=30"
- "--kube-apiserver-arg=audit-log-maxsize=20"
- "--kube-apiserver-arg=audit-log-maxbackup=6"
# To be set to [true] so that the Cilium CNI can do its magic and, for the clusters that need it, KubeVirt can work
- "--kube-apiserver-arg=allow-privileged=true"
extra_worker_args:
- "--node-label node.longhorn.io/create-default-disk=config"
- "--kubelet-arg=feature-gates=GRPCContainerProbe=true"
masters: 3
workers: 6
install_k3s_channel: v1.23 # On the v1.23 channel as we're upgrading from v1.22 - so as not to jump two minors.
install_k3s_version: v1.23.9+k3s1
pool: vmware-pool
image: ubuntu20044-20220323-hwe-5-13
network: Some_network
cluster: availability-test
domain: test.test
token: circusclowns
numcpus:
worker_numcpus: 8
master_numcpus: 6
memory:
master_memory: 12288
worker_memory: 16284
master_tpm: false
master_rng: false
disk_size: 30
worker_tpm: false
worker_rng: false
notifycmd: "kubectl get pod -A"
notify: false
numa:
numa_master:
numa_worker:
numamode:
numamode_master:
numamode_worker:
cpupinning:
cpupinning_master:
cpupinning_worker:
kubevirt_disk_size: 10
extra_disks: []
extra_master_disks:
- 15
- 15
extra_worker_disks:
- 50
- 50
extra_networks: []
extra_master_networks: []
extra_worker_networks: []
nested: false
threaded: true
# sdn needs to be "None". In other words have no value
# for us specifically. As we don't want the default K3s CNI
# flannel. And because we're handling the CNI ourselves.
# By using Cilium
sdn:
# The below value needs to always be specified.
# Furthermore, IT HAS TO BE UNIQUE across KNL clusters
# If not we risk collisions for Keepalived related network packets
virtual_router_id: 203
vmrules_strict: true

It seems that kcli did not return a breaking/fatal exit code, which in Bash terms means an exit code > 0. We have the following conditional around the call to `kcli scale ...`:

if (kcli scale kube k3s --paramfile "${__kcliPlan}" -P workers="${__workersScaleAmount}" "${__clustername}"); kcliScaleWorkersErr=$?; (( kcliScaleWorkersErr )); then
  echo -e "\n$(tput setaf 1)$(tput bold) #### Failed to (re)introduce workers into the ${__clustername} cluster $(tput init)"
  echo -e "$(tput setaf 1)$(tput bold) #### The kcli exit code is: ${kcliScaleWorkersErr} $(tput init)"
  echo -e "$(tput setaf 3)$(tput bold) :::: You'll have to get the ${__clustername} cluster into a healthy state. $(tput init)"
else

The Bash code is "saying":

- `; kcliScaleWorkersErr=$?` catches the exit code in the first `;` section
- `(( kcliScaleWorkersErr ))` is a `Bash` arithmetic conditional that should get the code into the `if` branch IF the `exit code` is greater than `0`

However, the code does not get into the `if` branch in the above. Rather, it goes into the `else` branch.
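To rule out the conditional itself as the culprit, the same pattern can be exercised in isolation. This is only a sketch: `fake_cmd` and `check_branch` are hypothetical stand-ins (not part of kcli) that return or report whatever exit code they are given, so the branch taken depends purely on that code.

```bash
#!/usr/bin/env bash
# Hypothetical stand-in for the real command: it simply returns the exit code passed in.
fake_cmd() { return "$1"; }

# Same shape as the conditional above: run the command in a subshell, capture $?,
# then let (( ... )) pick the branch. (( n )) succeeds only when n is non-zero.
check_branch() {
  local err
  if (fake_cmd "$1"); err=$?; (( err )); then
    echo "if-branch (exit code ${err})"
  else
    echo "else-branch (exit code ${err})"
  fi
}

check_branch 0   # prints: else-branch (exit code 0)
check_branch 3   # prints: if-branch (exit code 3)
```

So with this construct, landing in the `else` branch means the command exited with `0`.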

Does kcli not always throw a breaking exception? Or is it "just" not doing so in this specific case?

Thank you very much

This depends on the use case. An error code is returned when creating a plan, for instance, but I'm not sure every call has a return code. Let me check for scale operations.
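As long as it is unclear which calls return a non-zero code, a caller could guard on both the exit code and the command's output. The following is a minimal sketch under assumptions: `run_checked`, the `'error|failed'` pattern, and the `quiet_failure` stand-in are hypothetical and not part of kcli, and the pattern would have to be adapted to whatever the wrapped command actually prints.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run a command and treat it as failed if it exits non-zero
# OR if its combined stdout/stderr matches an error pattern (the pattern is an assumption).
run_checked() {
  local pattern="$1"; shift
  local output status
  output="$("$@" 2>&1)"      # capture stdout and stderr together
  status=$?                  # exit code of the wrapped command
  printf '%s\n' "${output}"  # still show the output to the user
  if (( status != 0 )); then
    return "${status}"       # a real non-zero exit code is passed through unchanged
  fi
  if grep -qiE -- "${pattern}" <<<"${output}"; then
    return 1                 # exit code was 0, but the output looks like an error
  fi
  return 0
}

# Stand-in for a command that reports an error yet exits with 0.
quiet_failure() { echo "Error: something went wrong"; return 0; }

run_checked 'error|failed' quiet_failure || echo "treated as failure"
```

Matching on output is brittle by nature, so this is a stopgap for the calling script rather than a replacement for proper exit codes.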

Did you identify whether or not kcli scale calls properly break if something can be considered breaking? Thanks.

@karmab any thoughts?

a bit of work on this topic addressed in https://github.com/karmab/kcli/commit/d53470df1aa74d9b7b10b8b2301ec231b67b4a0d

addressed in https://github.com/karmab/kcli/commit/44d1fc7e69ade896fc940793e55df2c64b69e8eb