k3s

Memory metrics zero

Describe the bug

The memory metrics provided by cAdvisor for pods are all zero, e.g.:

container_memory_usage_bytes{beta_kubernetes_io_arch="amd64",beta_kubernetes_io_os="linux",id="/kubepods/besteffort/pod6cfe2a03-4730-11e9-af77-000db94f6ae8",instance="openwrt",job="kubernetes-cadvisor",kubernetes_io_hostname="openwrt",namespace="ingress-nginx",pod_name="nginx-ingress-controller-55cfc7b5b8-x7gc4"} | 0

For instance, the ingress-nginx pod has the id 1701c0ef111e6, for which I do find a metric that actually reflects the memory usage:

container_memory_usage_bytes{beta_kubernetes_io_arch="amd64",beta_kubernetes_io_os="linux",id="/kubepods/besteffort/pod6cfe2a03-4730-11e9-af77-000db94f6ae8/1701c0ef111e68acd2adf3bcbd01917d2b6ac3f961ed344e9c97476f424eae13",instance="openwrt",job="kubernetes-cadvisor",kubernetes_io_hostname="openwrt"} | 1417216

So it looks like something is broken in mapping the cgroup hierarchy to the right pod_name labels.
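To make the suspected mapping problem easier to check, here is a minimal Python sketch that splits a cAdvisor `id` label into its QoS class, pod UID, and container ID. The helper name `parse_cgroup_id` is hypothetical, and the pattern assumes the cgroupfs-style layout seen in the two metrics above (`/kubepods/<qos>/pod<uid>[/<container id>]`); other cgroup drivers use a different layout and will not match.

```python
import re
from typing import NamedTuple, Optional


class CgroupPath(NamedTuple):
    qos_class: str               # "besteffort", "burstable", or "guaranteed"
    pod_uid: str                 # UID taken from the "pod<uid>" path segment
    container_id: Optional[str]  # None when the path points at the pod-level cgroup


# Assumption: cgroupfs-style paths as shown in the metrics above.
# Guaranteed pods are assumed to sit directly under /kubepods.
_PATTERN = re.compile(
    r"^/kubepods/(?:(?P<qos>besteffort|burstable)/)?"
    r"pod(?P<uid>[0-9a-f-]+)"
    r"(?:/(?P<cid>[0-9a-f]+))?$"
)


def parse_cgroup_id(cgroup_id: str) -> Optional[CgroupPath]:
    """Split a cAdvisor `id` label into QoS class, pod UID, and container ID."""
    match = _PATTERN.match(cgroup_id)
    if match is None:
        return None  # not a pod cgroup, or a layout this sketch does not cover
    return CgroupPath(
        qos_class=match.group("qos") or "guaranteed",
        pod_uid=match.group("uid"),
        container_id=match.group("cid"),
    )


if __name__ == "__main__":
    pod_level = "/kubepods/besteffort/pod6cfe2a03-4730-11e9-af77-000db94f6ae8"
    container_level = (
        pod_level
        + "/1701c0ef111e68acd2adf3bcbd01917d2b6ac3f961ed344e9c97476f424eae13"
    )
    print(parse_cgroup_id(pod_level))
    print(parse_cgroup_id(container_level))
```

Both ids resolve to the same pod UID. In the output above, only the pod-level series carries the `namespace` and `pod_name` labels, while the non-zero value sits on the container-level series without them.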

Additional context

- Running 0.3.0 on openwrt

@discordianfish Can you reproduce the issue with the latest v0.5.0 rc build? We have changed things in the area of metrics that may have fixed this. We haven't been able to reproduce this issue, so maybe it's fixed, or the issue is specific to openwrt.

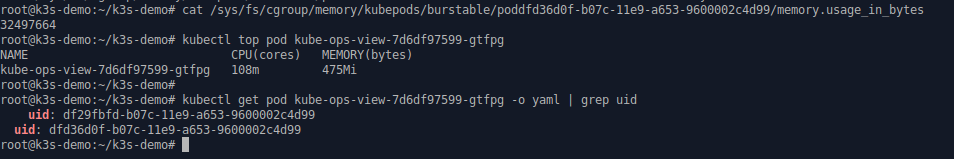

This may be a different bug, but I see /sys/fs/cgroup reporting 32M bytes of usage while Metrics Server with K3s reports 475Mi.

For more context see https://twitter.com/try_except_/status/1155224741680230407

The installation is straight-forward: K3s + Metrics Server (https://codeberg.org/hjacobs/k3s-demo/src/branch/master/install.sh).
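For comparing the raw cgroup number with what a metrics pipeline reports, a rough Python sketch follows. It assumes cgroup v1 files (`memory.usage_in_bytes` and `memory.stat`) and assumes the reported figure is a working set computed as usage minus `total_inactive_file`, which is my understanding of how cAdvisor derives `container_memory_working_set_bytes`. The function names are hypothetical, and this only helps compare like with like; it does not by itself explain the discrepancy.

```python
from typing import Dict


def parse_memory_stat(text: str) -> Dict[str, int]:
    """Parse the "key value" lines of a cgroup v1 memory.stat file."""
    stats: Dict[str, int] = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2:
            stats[parts[0]] = int(parts[1])
    return stats


def working_set_bytes(usage_bytes: int, memory_stat_text: str) -> int:
    """Usage minus inactive file cache, clamped at zero (assumed formula)."""
    inactive_file = parse_memory_stat(memory_stat_text).get("total_inactive_file", 0)
    return max(usage_bytes - inactive_file, 0)


def to_mib(num_bytes: int) -> float:
    """Convert bytes to MiB, the unit behind a value such as "475Mi"."""
    return num_bytes / (1024 * 1024)


if __name__ == "__main__":
    # Illustrative values only; on a node these would be read from
    # memory.usage_in_bytes and memory.stat under /sys/fs/cgroup/memory/...
    sample_usage = 32 * 1024 * 1024
    sample_stat = "cache 8388608\ntotal_inactive_file 4194304\n"
    ws = working_set_bytes(sample_usage, sample_stat)
    print(f"working set: {to_mib(ws):.1f} MiB")
```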

I am also experiencing this. The hypervisor reports 75% of 5GB of memory in use on my master, but the Rancher Dashboard shows 0/5.

k3s version v1.17.2+k3s1

Deployed using k3os

Closing due to age and inactivity