transformers

transformers copied to clipboard

transformers copied to clipboard

Add fast tokenizer for BARTpho

This PR is to add a "fast" BARTpho tokenizer (backed by HuggingFace's tokenizers library).

What does this PR do?

Fixes # (issue)

Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the contributor guideline, Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the forum? Please add a link to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the documentation guidelines, and here are tips on formatting docstrings.

- [ ] Did you write any new necessary tests?

Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag members/contributors who may be interested in your PR.

The docs for this PR live here. All of your documentation changes will be reflected on that endpoint.

Following: https://github.com/huggingface/transformers/pull/13788 I now add a "fast" version of the BartphoTokenizer. @sgugger , @LysandreJik, @patil-suraj , @SaulLu and @patrickvonplaten Please could you have a look and provide your feedback? Thanks.

Hi @patil-suraj and @sgugger I revised the slow and fast BartphoTokenizer variants to satisfy your requirements. Please have a look and give feedback. Thanks. cc: @SaulLu @LysandreJik

Please note that the unsuccessful checks are due to the failed test_modeling_wav2vec2_conformer.py, not related to our BartphoTokenizer. @SaulLu

Please note that the unsuccessful checks are due to the failed

test_modeling_wav2vec2_conformer.py, not related to our BartphoTokenizer. @SaulLu

@SaulLu fixed the wav2vec2_conformer tests on master

@datquocnguyen We can't merge anything that has any breaking change on the existing tokenizer, as I said before.

@sgugger Ah, I now see your point. I initially thought the code would be much nicer if I also push a new version of the slow tokenizer. But then it breaks the existing code.

Anyway, the fast tokenizer would totally work without changing the original code of the slow tokenizer (as I already developed the fast_tokenizer_file), I think. I would need a bit of time to roll back the slow tokenizer to its original version.

(cc @SaulLu , @LysandreJik , @patil-suraj and @patrickvonplaten )

Hi @SaulLu , @sgugger , @patil-suraj @LysandreJik and @patrickvonplaten

In addition to a fast BARTpho tokenizer, I also revised my code to add fast tokenizers for BERTweet and PhoBERT. Here, changes now do not break existing slow tokenizers. My hacking trick to have the same tokenization strategy for both slow and fast variants is already mentioned here.

Please have a look and provide feedback. Thanks!

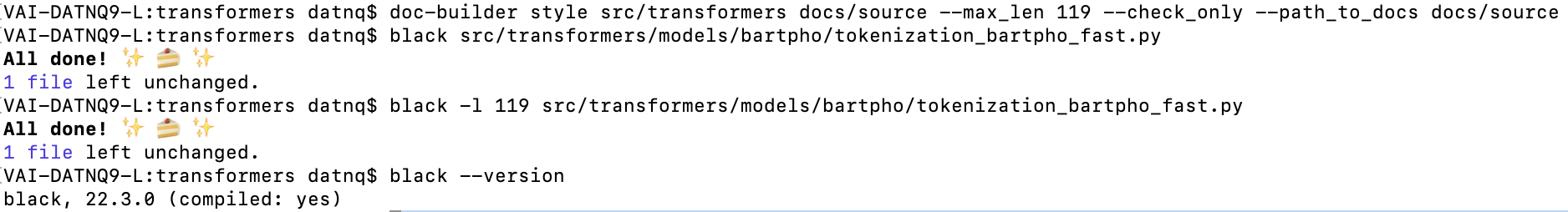

Note that I have no idea to fix the failed test check_code_quality w.r.t. black:

#!/bin/bash -eo pipefail

black --check --preview examples tests src utils

Skipping .ipynb files as Jupyter dependencies are not installed.

You can fix this by running ``pip install black[jupyter]``

would reformat src/transformers/models/bartpho/tokenization_bartpho_fast.py

Oh no! 💥 💔 💥

1 file would be reformatted, 1594 files would be left unchanged.

Exited with code exit status 1

However, the target file "tokenization_bartpho_fast.py" is left unchanged in my local machine:

I think there might be an inconsistency with black used in my local machine and in your CI, so I could not fix it from my side. It would be great if you guys could help fix it. Thanks a lot.

@SaulLu Thank you very much for your detailed feedback and suggestion. Before moving forward to revise the code w.r.t. the add_tokens feature, it would be great if you could provide some more context/clarification on the intention of using add_tokens.

Vietnamese can be considered as an isolated language, where the (monolingual) Vietnamese lexicon of syllables contains about 8K syllable types. Using a monolingual vocab of 40K types in vinai/bartpho-syllable is far more than enough to cover all possible cases of Vietnamese syllables. I am currently not sure whether the add_tokens feature is needed in our tokenizer/model when using our tokenizer/model on Vietnamese data?

@SaulLu Similarly, for monolingual models PhoBERT for Vietnamese and BERTweet for English, vocabularies of 64K subword types should be more than enough, so that we might not need to use the add_tokens feature, right?

Hi @datquocnguyen. It's amazing that you added those two new fast tokenizers. However we need PRs to be focused on one thing. Would you terribly mind splitting it in three (one for BARTpho, one for PhoBERT and one for BERTweet)?

Thanks a lot!

@SaulLu Thank you very much for your detailed feedback and suggestion. Before moving forward to revise the code w.r.t. the add_tokens feature, it would be great if you could provide some more context/clarification on the intention of using add_tokens.

@datquocnguyen I think there are many, many use cases for add_tokens. But for example, we can imagine a use case where a user would like to fine-tune the model on a task that needs to identify specific tokens: like for example "<QUESTION>" and "<ANSWER>". This method is convenient because it is unified across all tokenizers.

@SaulLu Thank you very much for your feedback.

I improved the hacking strategy to handle the issue with newly added tokens.

Assume that the sizes of the multilingual and monolingual vocabularies are X and Y, respectively (here, X > Y, X is the base_vocab_size and Y is set at mask_token_id in our hacking strategy). Added tokens A1, A2, A3, ... would have original ids of X, X+1, X+2,... that will be mapped into new ids Y, Y+1, Y+2,..., respectively.

I extended the original function get_added_vocab into get_added_vocab_hacking to extract a dictionary added_vocab {A1: Y, A2: Y+1, A3: Y+2, ...} and another dictionary id_mapping of id mapping {X: Y, X+1: Y+1, X+2: Y+2, ...}

def get_added_vocab_hacking(self):

"""

Returns the added tokens in the vocabulary as a dictionary of token to index.

Returns:

`Dict[str, int], Dict[int, int]`: The added tokens, and their original and new ids

"""

base_vocab_size = self._tokenizer.get_vocab_size(with_added_tokens=False)

full_vocab_size = self._tokenizer.get_vocab_size(with_added_tokens=True)

if full_vocab_size == base_vocab_size:

return {}, {}

# Tokens in added_vocab should have ids that are equal to or larger than the size of base_vocab

added_vocab = dict(

(self._tokenizer.id_to_token(index), index + 1 - base_vocab_size + self.mask_token_id)

for index in range(base_vocab_size, full_vocab_size)

)

id_mapping = dict((index, self._tokenizer.token_to_id(tok)) for tok, index in added_vocab.items())

return added_vocab, id_mapping

So in tokenization, the previous strategy maps all ids larger than mask_token_id to unk_token_id now is revised to also handle added tokens as follows:

ids = []

for (id, token) in zip(e.ids, e.tokens):

if id <= self.mask_token_id:

ids.append(id)

else:

if token.strip() in added_vocab: # handle added tokens

ids.append(added_vocab[token.strip()])

else:

ids.append(self.unk_token_id)

In addition, a preprocess of mapping ids Y, Y+1, Y+2, ... into X, X+1, X+2 is applied before decoding:

def _decode(

self,

token_ids: Union[int, List[int]],

skip_special_tokens: bool = False,

clean_up_tokenization_spaces: bool = True,

**kwargs

) -> str:

self._decode_use_source_tokenizer = kwargs.pop("use_source_tokenizer", False)

if isinstance(token_ids, int):

token_ids = [token_ids]

# Mapping added tokens' ids into their original values

_, id_mapping = self.get_added_vocab_hacking()

if len(id_mapping) > 0:

token_ids = [id_mapping[id] if id in id_mapping else id for id in token_ids]

text = self._tokenizer.decode(token_ids, skip_special_tokens=skip_special_tokens)

if clean_up_tokenization_spaces:

clean_text = self.clean_up_tokenization(text)

return clean_text

else:

return text

With this improved strategy, there are two tests needed to override:

def test_tokenizer_fast_store_full_signature(self):

"""

Override the original test as BartphoTokenizer requires a monolingual_vocab_file rather than a merges_file

"""

def test_add_tokens_tokenizer(self):

"""

Override the original test as in the fast tokenizer, the actual vocab_size is in fact mask_token_id + 1

"""

Hi @datquocnguyen. It's amazing that you added those two new fast tokenizers. However we need PRs to be focused on one thing. Would you terribly mind splitting it in three (one for BARTpho, one for PhoBERT and one for BERTweet)?

Thanks a lot!

@sgugger I changed the code, so that this PR is only for BARTpho. cc: @SaulLu

@SaulLu please help to review the improved strategy and give feedback. Thank you very much.

Please note that failed checks are not related to my bartpho tokenizer, except for one check using black, however black was successful in my local computer, as detailed at https://github.com/huggingface/transformers/pull/17254#issuecomment-1133932067. Could you provide information about black used in your CI?, so I can replicate the issue on my local computer, then fix it. Thanks.

cc: @sgugger

#!/bin/bash -eo pipefail

black --check --preview examples tests src utils

Skipping .ipynb files as Jupyter dependencies are not installed.

You can fix this by running ``pip install black[jupyter]``

would reformat src/transformers/models/bartpho/tokenization_bartpho_fast.py

Oh no! 💥 💔 💥

1 file would be reformatted, 1594 files would be left unchanged.

Exited with code exit status 1

You need to install black==22.3 to have the same results as the CI.

You need to install

black==22.3to have the same results as the CI.

@sgugger You might miss my https://github.com/huggingface/transformers/pull/17254#issuecomment-1133932067 I already had black version 22.3 as detailed in https://github.com/huggingface/transformers/pull/17254#issuecomment-1133932067.

@sgugger Following https://github.com/huggingface/transformers/pull/17254#issuecomment-1133932067, I was not aware that I have to include --preview into my command black -l 119 <py_file_path>. The code quality check is passed.

There are now 4 failed checks not caused by BartphoTokenizerFast, I believe:

- FAILED tests/models/layoutlmv2/test_tokenization_layoutlmv2.py::LayoutLMv2TokenizationTest::test_saving_tokenizer_trainer ====== 1 failed, 135 passed, 32 skipped, 20 warnings in 142.09s (0:02:22) ======

- FAILED tests/pipelines/test_pipelines_summarization.py::SummarizationPipelineTests::test_small_model_pt

run_tests_flax= 804 failed, 5364 passed, 11260 skipped, 7960 warnings in 1306.16s (0:21:46) ==run_tests_torch= 823 failed, 10738 passed, 6752 skipped, 5425 warnings in 1554.96s (0:25:54) ==

cc: @SaulLu , @LysandreJik, @patil-suraj and @patrickvonplaten It would be great if you guys can also help review this PR. Thanks a lot.

You will need to rebase on the main branch to fix the test failures. It's due to the botched release of Protobuf that breaks everything (the main branch has it pinned).

@sgugger I rebased the main branch with the latest commits from transformers.

There are 3 failed checks not relevant to the BartphoTokenizer:

Build PR Documentation / build / build_pr_documentation: urllib3.exceptions.ProtocolError: ('Connection aborted.', ConnectionResetError(104, 'Connection reset by peer'))run_tests_tf: FAILED tests/models/mobilebert/test_modeling_tf_mobilebert.py::TFMobileBertModelTest::test_resize_token_embeddingsrun_tests_torch: FAILED tests/models/glpn/test_feature_extraction_glpn.py::GLPNFeatureExtractionTest::test_call_pytorch

fyi, @SaulLu , @LysandreJik, @patil-suraj and @patrickvonplaten

Hey @datquocnguyen, thanks a lot for your PR and for working hard on this! I think this is one situation where the code on the hub (detailed below) would fit really well, for the following reasons:

- The tokenizer code that is defined seems to work with the vocabulary you have worked with so far, but is unlikely to work with other vocabularies. At least it won't be the case unless the approach you have taken to generate that vocabulary is very documented, as it is very complex when compared to other tokenizers.

- If I understand correctly, the approach taken could have been handled by a single vocabulary rather than 2. I definitely understand why doing it like this for BARTpho makes sense, but this is unlikely to be the case for other checkpoints leveraging this architecture.

- The code in

transformershas to be maintained for years, so we want to optimize for heavily tested code; the methods that you add, while definitely useful in order to get the BARTpho tokenization right, are not tested and havehackingin their name, which shows that they're targetting something a bit different than what we aim to solve withtransformers' internal code (but that definitely has its place on the hub!).

Finally, you're moving very fast with your implementations, which is great. However, given the backwards-compatibility approach we have chosen and the fact that we want production-ready code means that we'll be slowing things down in this case, unfortunately.

The code on the hub is explained here. It's a way to share models and configurations by sharing their modeling code directly on the hub. When doing from_pretrained, you can then fetch the code on the hub. BARTpho is exactly the kind of use-cases we had in mind when working on this feature - we just didn't get to implementing the tokenizer code yet! I think we should work to enable this ASAP and have BARTpho be a first trial.

This would enable you to move as fast as you need, while providing the same functionality to downstream transformers users, and will allow you to manage your repositories as you see fit. Would that work for you?

@LysandreJik Thanks for your detailed feedback. Before I go to answer whether the code on the hub would work for me. I am just concerning your first comment:

The tokenizer code that is defined seems to work with the vocabulary you have worked with so far, but is unlikely to work with other vocabularies. At least it won't be the case unless the approach you have taken to generate that vocabulary is very documented, as it is very complex when compared to other tokenizers.

So I would try to respond to this first comment. As detailed in #13788 (comment), regarding the use case of BartphoTokenizer: Other languages can thus simply reuse BartphoTokenizer with their monolingual_vocab_file. The goal is to reduce the model sizes of existing pre-trained XLM-RoBERTa/mBART models when applying to a smaller set of languages instead of the whole 50/100 languages. Here, you would trim XLM-RoBERTa/mBART to just dealing with subwords in the monolingual_vocab_file while not requiring retraining the corresponding multilingual sentencepiece model.

The generation process of BARTpho vocabulary is not that very complicated, as detailed in #13788 (comment). In particular, I apply a pre-trained/existing sentencepiece tokenization model from a pre-trained language model (e.g., XLM-RoBERTa/mBART/...) to segment sentences in a language/task-specific corpus, and then selected just top X (e.g. 40K) subwords to be included in a specific vocabulary for my downstream language/task (here, I named this specific vocabulary as monolingual_vocab_file). The existing sentencepiece model as well as the specific vocabulary are both required for a proper tokenization.

Regarding BartphoTokenizerFast, the process of generating the tokenizer_file is that: (1) I load the slow BartphoTokenizer, (2) call the function convert_slow_tokenizer to convert it into a fast variant, and (3) then save the fast one. This might be a bit complicated for others as it is not well-documented, but I could simply abandon the use of tokenizer_file in BartphoTokenizerFast. Thus BartphoTokenizerFast would just create and convert a slow tokenizer BartphoTokenizer to build the backend.

I believe there are many use cases in which BartphoTokenizer/BartphoTokenizerFast would fit.

@SaulLu As you have been playing around with BartphoTokenizer, is there any comment from your side regarding @LysandreJik' first point. Thank you both.

@LysandreJik

If I understand correctly, the approach taken could have been handled by a single vocabulary rather than 2.

I am not sure this is the case. The pre-trained (multilingual) sentencepiece model and the specific monolingual_vocab_file are both required for proper tokenization: the multilingual sentencepiece model is used for subword tokenization while all subwords that do not appear in the monolingual_vocab_file are converted into an unknown token.

@LysandreJik I did dig into the code on the hub, and am wondering whether I understand your approach correctly:

-

Instead of merging

tokenization_bartpho_fast.pyinto the maintransformersbranch, we now just need to upload/push it tohttps://huggingface.co/vinai/bartpho-syllable/tree/main. -

There would be an upcoming feature of

sharing a custom tokenizer, which I should register BartphoTokenizerFast fromvinai/bartpho-syllableorhttps://huggingface.co/vinai/bartpho-syllable/blob/main/tokenization_bartpho_fast.py. Then it would allow users to automatically download or importtokenization_bartpho_fast.pyand use BartphoTokenizerFast via AutoTokenizer with existing features in the maintransformersbranch.

So what I should do is to wait until you guys complete that sharing a custom tokenizer feature and then I would just need to have some piece of code for registering BartphoTokenizerFast with register_for_auto_class('AutoTokenizer') and it would run as the same as merged into the main transformers branch, wouldn't it?

Thanks.

cc: @SaulLu

For a wider context where many subwords appearing in the "merges" file do not appear in the "vocab" file as in CTRL, FlauBERT, PhoBERT and BERTweet and the like (i.e. slow tokenizers would convert those subwords into unkn_id during encoding), it is likely impossible to develop a fast tokenizer variant using documented approaches while keeping the same tokenization strategy.

Thus, the trick used in BartphoTokenizerFast would come into play, and help solve this issue. If merged, it is then straightforward to develop similar fast tokenizers for CTRL, FlauBERT, PhoBERT and BERTweet.

It would be great if @LysandreJik @SaulLu @patrickvonplaten or @sgugger could provide concrete feedback on whether this PR will have a chance to be merged. If this PR could not be merged, then what is the status of the "sharing a custom tokenizer on the hub" feature (e.g. tentative date for releasing this feature) ?

Thank you very much.

Hi @datquocnguyen , I echo Lysandre's answer: I thank you for working very hard for this PR :hugs: and I also think it would be a very good fit for the feature on the hub. And this addition will be really useful for the community!

It would run as the same as merged into the main transformers branch, wouldn't it?

Yes, the idea is that it would be (almost) identical to what you have with transformers! I don't know when it will be released (as I'm not directly working on it), but it seems to be a high-priority feature!

For a wider context where many subwords appearing in the "merges" file do not appear in the "vocab" file as in CTRL, FlauBERT, PhoBERT and BERTweet and the like (i.e. slow tokenizers would convert those subwords into unkn_id during encoding), it is likely impossible to develop a fast tokenizer variant using documented approaches while keeping the same tokenization strategy.

Indeed, you raise a very good point. I have also observed that there are tokens listed in the merge rules that do not appear in the vocabulary for FlauBERT - and I believe you that this is also the case for CTRL, PhoBERT and BERTweet. Nevertheless, from my point of view, looking at FlauBERT's code, the fix that seems to me the most suitable for our API (tokenizer slow → converter → tokenizer fast ) would be to clean up the merge file during the conversion step. This technique would indeed avoid having to modify the tokenizer fast core method(s). I've attached a snippet below that illustrates this idea. Am I missing something by thinking that this would achieve the desired final behaviour?

Snippet to illustrate the merges file "clean up" that I have in mind

To test this snippet, we need to retrieved locally the vocab.json and merges.txt files of FlauBERT, for example by doing git clone https://huggingface.co/flaubert/flaubert_base_cased.

Then, if we try to test to initialize a tokenizer fast (pure without the transformers's tokenizer wrapper for the moment), we observe that it raises an error

from tokenizers import Tokenizer

from tokenizers.models import BPE

import json

file_vocab = f"flaubert_base_cased/vocab.json"

file_merges = f"content/flaubert_base_cased/merges.txt"

with open(file_vocab) as f:

vocab = json.load(f)

with open(file_merges) as f:

merges = f.readlines()

merges = [merge.split(" ") for merge in merges]

merges = [(merge[0], merge[1]) for merge in merges if len(merge)==3]

tokenizer = Tokenizer(

BPE(

vocab,

merges,

unk_token="<unk>",

end_of_word_suffix="</w>",

fuse_unk=True,

)

)

Error message:

---------------------------------------------------------------------------

Exception Traceback (most recent call last)

[<ipython-input-26-b70d9b50bd17>](https://localhost:8080/#) in <module>()

5 unk_token="<unk>",

6 end_of_word_suffix="</w>",

----> 7 fuse_unk=True,

8 )

9 )

Exception: Error while initializing BPE: Token `trouvécap` out of vocabulary

But by cleaning the merges file we can initialize the tokenizer without errors

# ------ Clean up step ------

new_merges = []

for token_1, token_2 in merges:

if token_1 not in vocab or token_2 not in vocab or f"{token_1}{token_2}" not in vocab:

print(token_1, token_2)

continue

new_merges.append((token_1, token_2))

# ---------------------------

tokenizer = Tokenizer(

BPE(

vocab,

new_merges,

unk_token="<unk>",

end_of_word_suffix="</w>",

fuse_unk=True,

)

)

@SaulLu Thanks for your response.

Am I missing something by thinking that this would achieve the desired final behaviour?

Cleaning the "merges" file will definitely result in different encoding outputs from the slow and fast tokenizers. For example, in the case of FlauBERT, the slow and fast tokenizers will encode/tokenize any word containing the sub-string trouvécap differently.

This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the contributing guidelines are likely to be ignored.

I am still looking forward to using the upcoming "sharing a custom tokenizer" feature =)

Cleaning the "merges" file will definitely result in different encoding outputs from the slow and fast tokenizers. For example, in the case of FlauBERT, the slow and fast tokenizers will encode/tokenize any word containing the sub-string trouvécap differently.

I'm sorry, I didn't react to your message! You are right, my proposal will not be exactly the same as the current slow version.

One specific thing to know about this particular case of FlauBERT is that currently the slow tokenizer doesn't behave exactly like FlauBERT's original tokenizer which used FastBPE.

For example, trouvécaptivantes is not tokenized in the same way:

Transformers version: ['<s>', '<unk>', 'tiv', 'antes</w>', '</s>']

FastBPE version: ['<s>', 'trouv', 'écap', 'tiv', 'antes</w>', '</s>']

Ideally, we would like to have an exact match, but in this case I think the changes that would have to be made to achieve this would be very cumbersome compared to the difference observed (trouvécaptivantes is not a word in French but the concatenation of 2 words, without typography we should have had trouvé captivantes). All that to say, it's very very complicated to have perfect matching between different tokenization libraries and maintaining long-term hacks is not easy and that's why I think the sharing feature is really a perfect use case for your proposal!

This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the contributing guidelines are likely to be ignored.

I am still looking forward to using the upcoming "sharing a custom tokenizer" feature =)

https://github.com/datquocnguyen/transformers/tree/fast_tokenizers_BARTpho_PhoBERT_BERTweet also contains fast tokenizer implementations of PhoBERT and BERTweet, which others might find them useful to develop similar fastBPE-based tokenizers for other models such as CTRL & FlauBERT.