wav2letter

Slow training with novograd

Hi there,

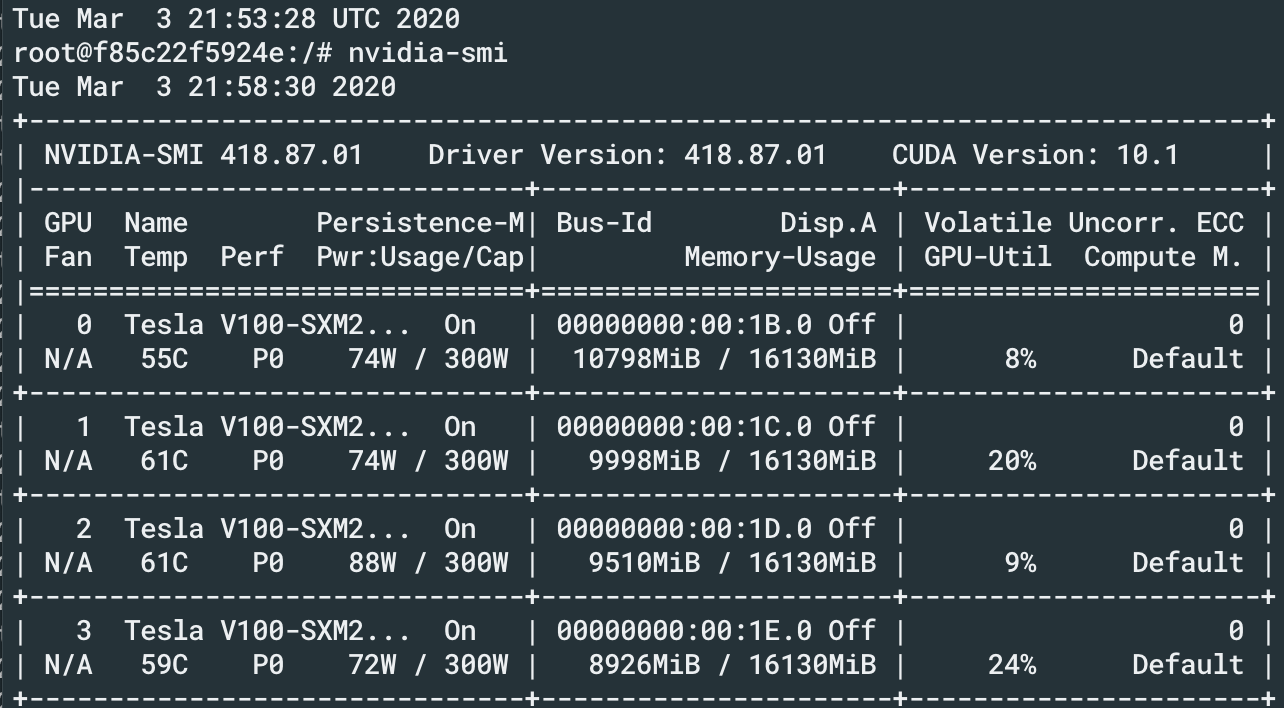

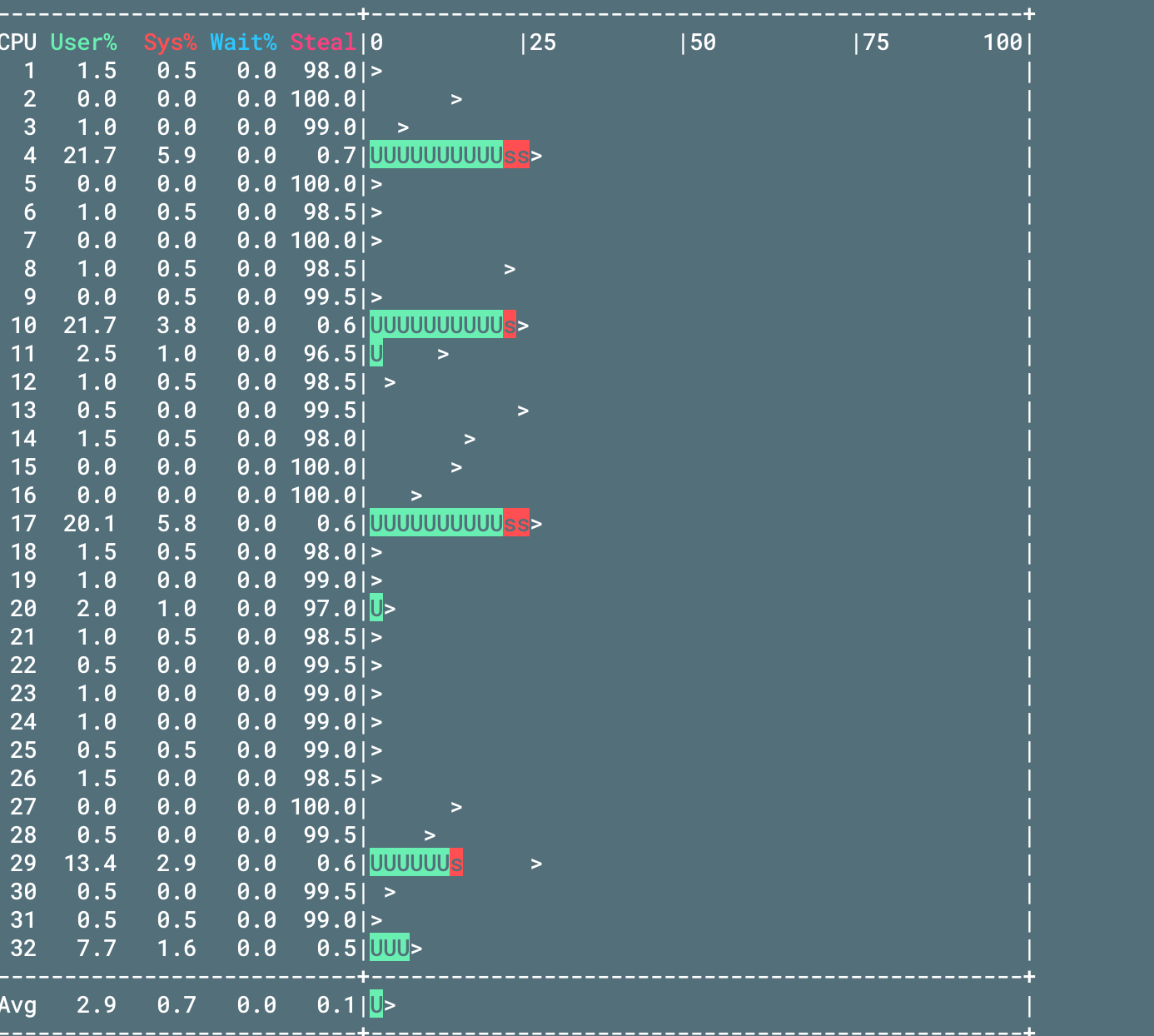

I'm training an acoustic model (AM) on 4 Tesla V100 GPUs. Initially, using SGD, each epoch took about 12 minutes. Since I'm having some problems with the learning rate, I wanted to move to the NovoGrad optimizer. Unfortunately, it is running very slowly. It is also strange that GPU usage drops to about 50% per GPU, while SGD seems to use around 80-98%. My CPU usage has dropped as well. Is this supposed to happen?

What's your batch size?

Hello, thanks for the reply. In this sample it is 6. Originally I tried a batch size of 4 with SGD (12 min per epoch). Then I tried NovoGrad and it ran very slowly, so I looked at the GPU usage and it was very low. I then increased the batch size until I ran out of memory (at 16 in my case), but I couldn't get GPU usage up to a reasonable level (around 80% per GPU); it still peaks at 30-40% each. I also tried Adam and Adadelta. I have also checked my disk usage, since I saw a similar issue that was related to disk usage, and I still have 240 GB free.