libs

libs copied to clipboard

libs copied to clipboard

wip: Add e2e tests based on sinsp-example

What type of PR is this?

/kind feature

Any specific area of the project related to this PR?

/area tests

What this PR does / why we need it: This PR adds the e2e tests previously held under Molter73/falco-libs-qa into the repository.

The way the tests work is by building the sinsp-example binary and embedding it into a container. Said container expects the binary to be built in an environment similar to the one used by the CI (so a debian:buster image). Then a separate container is built to run the actual tests using pytest and spawning containers using Docker-in-Docker, this approach was chosen as an OK way to limit events to the ones coming from a specific container, which should help diminish failures because of interference of other syscalls from processes running in the system. Everything is built and run from cmake, these are the commands I've been using to compile and run the tests:

mkdir build && cd build

cmake -DUSE_BUNDLED_DEPS=OFF -DBUILD_BPF ..

make e2e-tests

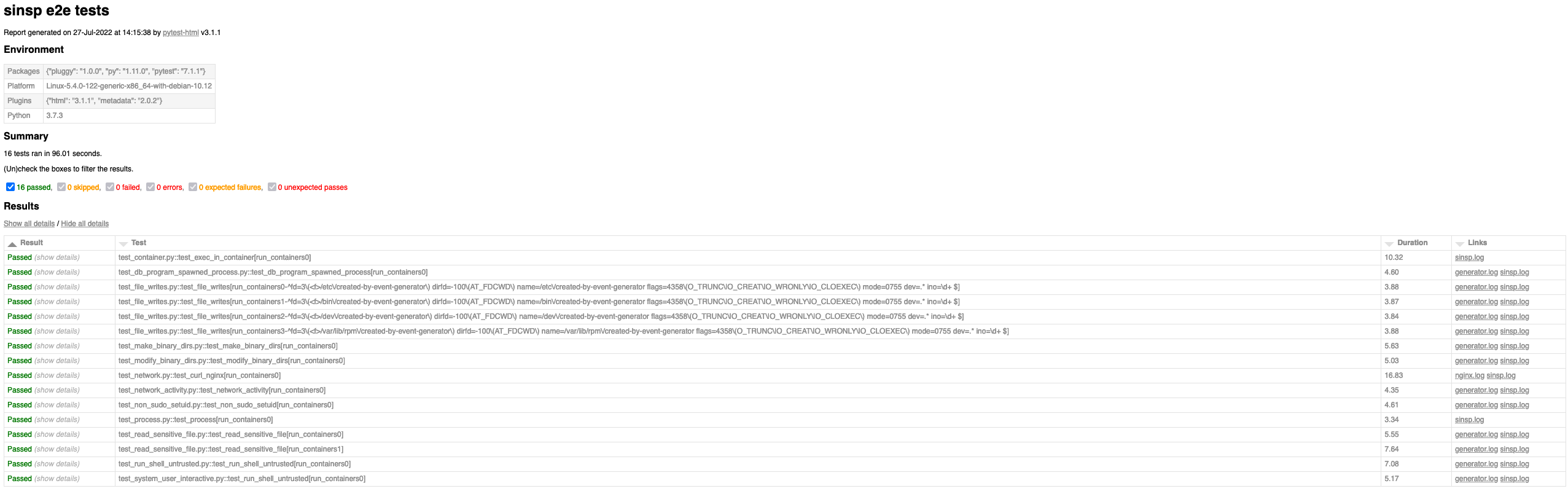

Pytest was chosen because of my familiarity with it, but also due to the great range of flexibility it provides with its fixtures and the fact that Python makes it very easy and quick to draft and implement changes, this also includes the ease of working with containers through the Docker SDK for Python. Pytest also has the added bonus it generates a nice HTML user-friendly report in the build directory, after running the test you should find them under build/test/e2e/report and they look a little something like this:

The logs for all the containers used on each test can be accessed from the report, which should come in handy when debugging.

The logs for all the containers used on each test can be accessed from the report, which should come in handy when debugging.

From the previously mentioned PoC repository, these tests have successfully been run on GHA, so if this PR is accepted it should be possible to integrate it with the existing CI in a follow up.

Finally, sinsp-example has been modified so it can load and remove the kernel module driver, this is mostly done to make the behavior of the container closer to what it is when running with eBPF, but can easily be rolled back in favor of loading and removing the kernel module from the tests container.

Important!! This PR is a WIP and open to anyone that may want to comment on it. Any and all thoughts are welcomed.

It would also be great to have input on how easy or hard people find it to build and run the tests. IMO, they should be aimed to not only run on CI, but also locally on any developer's machine for debugging any potential issues.

Which issue(s) this PR fixes:

Fixes #271

Special notes for your reviewer:

Does this PR introduce a user-facing change?:

NONE

THIS IS WHAT I WAS LIVING FOR, FOR THE LAST COUPLE OF WEEKS :rofl: Thanks Mauro!!

WOW, that is what we are waiting for!! Amazing work @Molter73 :tada:!!! I will take a look ASAP :eyes:

This is fantastic Mauro! Looking forward to see how this evolves.

After a first look, two quick points:

- Maybe the

falco-e2e-testerimage might be renamed intosinsp-e2e-tester? This is very useful for Falco but does test sinsp effectively - I think it would be very valuable to add formatting options to sinsp-example, so that you could decide which fields you want to print out in the json output of each event. I see that right now sinsp-example uses the default formatters and you're asserting only those fields, but I think the great additional value of this e2e tests would be to reliably test the expected fields values for each generated events. This would make a great protection from

<NA>-related regression, and would also assert the correct state reconstruction of sinsp. What does everyone think?

Hi @jasondellaluce!

Maybe the falco-e2e-tester image might be renamed into sinsp-e2e-tester? This is very useful for Falco but does test sinsp effectively

I completely agree, naming has never been my strong suit so it definitely has room for improvement, I'll get it renamed.

I think it would be very valuable to add formatting options to sinsp-example, so that you could decide which fields you want to print out in the json output of each event. I see that right now sinsp-example uses the default formatters and you're asserting only those fields, but I think the great additional value of this e2e tests would be to reliably test the expected fields values for each generated events. This would make a great protection from <NA>-related regression, and would also assert the correct state reconstruction of sinsp. What does everyone think?

This makes a lot of sense to me, I think it would be a great addition but I feel it is also a non-trivial change to the sinsp-example binary. I believe it would add great value to both the binary and the tests so unless someone objects, I can look into getting that implemented in a follow up PR, this one is already quite swamped with things 😅.

This makes a lot of sense to me, I think it would be a great addition but I feel it is also a non-trivial change to the sinsp-example binary. I believe it would add great value to the both the binary and the tests so unless someone objects, I can look into getting that implemented in a follow up PR, this one is already quite swamped with things sweat_smile.

Agree, we can work on that later! Yet Jason's point is very valid :)

naming has never been my strong suit

Same here 😂

I believe it would add great value to both the binary and the tests so unless someone objects, I can look into getting that implemented in a follow up PR, this one is already quite swamped with things 😅.

Totally agree! This is already a lot.

Thanks for taking the time going through the PR and testing it out @Stringy!

when building the e2e containers, the context sent to docker was huge (~4.2Gb) and took a long while to build, and it seems to be sent to the daemon every time I try to run the tests. Not sure if there's much that can be done about that, but perhaps something to note

In order to build the sinsp-example container, I'm using the build directory as the context, in my case it sent 1GB to the docker daemon, which is pretty big but not a huge deal IMO. 4.2GB is definitely too much, could it be that the build directory had some left over files from something else? We only really need the sinsp-example binary, the kernel module and eBPF probe, I'll try to come up with a way to create a smaller context holding just those files.

if the tests fail, there can be some residual containers left behind which are a little annoying to clean up - is there a way we can ensure that all the containers are always removed?

The Python fixtures should be taking care of removing everything, even if the test fails 🤔. The only way I found that left containers up is by interrupting the tests with ctrl+c. I'll add a custom e2e-cleanup target so we can make e2e-cleanup and remove all containers, but we will need to remember to add any containers we add in future tests to this target.

I've rebased the PR on a newer commit to make sure everything is still working.

I've also addressed the comments made by @Stringy and opened the PR up for reviews.

/cc @LucaGuerra :D

Just a quick FYI, I will rebase and fix the conflict once I'm done going through a few problems I'm getting with the e2e tests

I've rebased on top of master, fixed the conflicts and also fix some minor details that were causing the tests themselves to fail with the latest changes, but nothing major.

I was eager to try these tests, but alas my dev environment toolchain is "too new" and so I'm unable to run make e2e-tests. The error is sadly not clear at first glance

=========================== short test summary info ============================

ERROR test_container.py::test_exec_in_container[kmod] - TimeoutError

ERROR test_container.py::test_exec_in_container[ebpf] - docker.errors.APIErro...

ERROR test_db_program_spawned_process.py::test_db_program_spawned_process[kmod-syscall.DbProgramSpawnedProcess]

...

Followed by a bunch of errors about the fact that "sinsp" container already exists

The actual error is:

$ docker run sinsp-example -j -a -f "evt.category=process and not container.id=host"

sinsp-example: /usr/lib/x86_64-linux-gnu/libstdc++.so.6: version `GLIBCXX_3.4.26' not found (required by sinsp-example)

sinsp-example: /lib/x86_64-linux-gnu/libm.so.6: version `GLIBC_2.29' not found (required by sinsp-example)

This is not @Molter73 's fault of course, as it's a well known problem when dealing with GLIBC. However I thought about a couple of things:

- It looks like the container is not removed because

TimeoutErroris raised before the fixture cleanup code is able to run (I will add an inline comment to show what I mean) - I wonder if it's possible to run

sinsp-example(or any other binary which are direct products of the compilation) directly on the system instead of using a container to ensure compatibility with the current system. Another (definitely less than perfect) option would be to use a base image which is very new and so backwards-compatible with a potentially older compilation environment.

edit: to clarify the last point, the problem here is that on one side we don't want to install all the Python dependencies on the system (and so we use a container, and rightly so), on the other we want Python to run a native binary on the native system. I can only think of hackish ways to do this, defeinitely not clean... 🤔

And of course, just wanted to repeat in writing as well that I really love this effort! ❤️ 🚀 I also support the language choice, it's great to use a real programming language for tests as well 😄

Hey @LucaGuerra, thanks for the review!

As you correctly stated, the whole idea behind running tests as a container is to not have to install python dependencies in the host, similarly, running sinsp-example on the host would require it to be compiled for every system it needs to run on, and so I decided a better approach would be to dockerize it so that we can run the same binary with different drivers on different systems.

As stated on the PR, if you want to try the tests, you need to build sinsp-example with a set of dependencies similar to the ones on the CI (used to be a debian:buster image). If you really want to test the tests, this dockerfile should give you a container that builds a compatible binary: https://github.com/Molter73/miscellaneuos-scripts/blob/master/devcontainers/falco-libs/debian.Dockerfile

And this script should build and run the image (just adjust the DEVCONTAINER_DIR and FALCO_DIR variables according to your dev environment and run with run-falco-builder.sh debian): https://github.com/Molter73/miscellaneuos-scripts/blob/master/scripts/run-falco-builder.sh

Also, the container ran by that script should allow you to directly run make e2e-tests from inside it.

same binary with different drivers on different systems

Hmm, that could speed up testing several distributions. So, if I really want to run e2e test on any system I could simply provide my own base image that matches whatever builder I used (most likely, my machine). Still an automated/semi automated way to do that is not that easy to do (I thought about changing the FROM but then there's a bunch of apt-get ...)

This is great :rocket: I was able to run them by modifying the sinsp dockerfile for my machine:

FROM debian:bookworm

RUN apt-get update && \

apt-get install -y \

libcurl4 \

libgrpc++1 \

jq \

libjsoncpp25 \

openssl \

libb64-0d \

libre2-9 \

libtbb12 \

libelf1

COPY /sinsp-example /usr/local/bin/sinsp-example

COPY /probe.o /driver/probe.o

COPY /scap.ko /driver/scap.ko

ENTRYPOINT [ "sinsp-example", "-j", "-a" ]

Writing reliable e2e tests is hard and this is the first big effort we need to actually have some upstream. I believe we can merge them because:

- We can all start playing with them and working on them to add testcases, and the upcoming 0.33.0 release is a great opportunity to test-drive them!

- We can start identifying where problems/flakiness may lie and what we think needs improvements

- They don't affect regular CI yet so it won't break anything

So I'm going to approve the PR! Great work as always @Molter73 !

LGTM label has been added.

@Molter73 question: is this going to be introduced in the CI in a follow-up PR?

Yes, I wanted to split the work on adding the tests to the repo and adding them to CI, otherwise this PR could bloat a little too much

[APPROVALNOTIFIER] This PR is APPROVED

This pull-request has been approved by: FedeDP, LucaGuerra, Molter73

The full list of commands accepted by this bot can be found here.

The pull request process is described here

- ~~OWNERS~~ [FedeDP,LucaGuerra,Molter73]

Approvers can indicate their approval by writing /approve in a comment

Approvers can cancel approval by writing /approve cancel in a comment