etcd

etcd copied to clipboard

etcd copied to clipboard

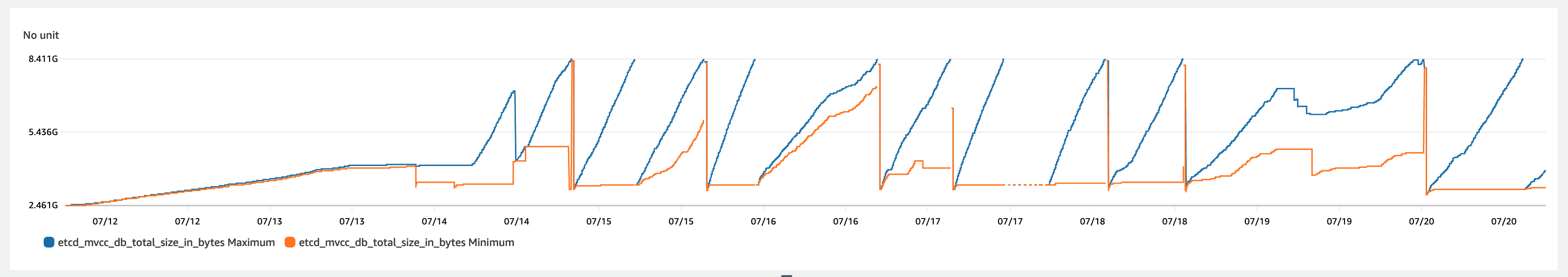

DB size diverged but kvhash were consistent across 3 nodes

What happened?

The DB size diff can be as high as 3GiB. Here is one screenshot of the db size metric. The drop of the blue line is actually our automation workflow replacing a new node with member removal/add.

node1

# HELP etcd_mvcc_db_total_size_in_bytes Total size of the underlying database physically allocated in bytes.

# TYPE etcd_mvcc_db_total_size_in_bytes gauge

etcd_mvcc_db_total_size_in_bytes 5.299228672e+09

# HELP etcd_mvcc_db_total_size_in_use_in_bytes Total size of the underlying database logically in use in bytes.

# TYPE etcd_mvcc_db_total_size_in_use_in_bytes gauge

etcd_mvcc_db_total_size_in_use_in_bytes 5.24292096e+09

node2

# HELP etcd_mvcc_db_total_size_in_bytes Total size of the underlying database physically allocated in bytes.

# TYPE etcd_mvcc_db_total_size_in_bytes gauge

etcd_mvcc_db_total_size_in_bytes 3.873603584e+09

# HELP etcd_mvcc_db_total_size_in_use_in_bytes Total size of the underlying database logically in use in bytes.

# TYPE etcd_mvcc_db_total_size_in_use_in_bytes gauge

etcd_mvcc_db_total_size_in_use_in_bytes 3.866206208e+09

node3

# HELP etcd_mvcc_db_total_size_in_bytes Total size of the underlying database physically allocated in bytes.

# TYPE etcd_mvcc_db_total_size_in_bytes gauge

etcd_mvcc_db_total_size_in_bytes 5.16868096e+09

# HELP etcd_mvcc_db_total_size_in_use_in_bytes Total size of the underlying database logically in use in bytes.

# TYPE etcd_mvcc_db_total_size_in_use_in_bytes gauge

etcd_mvcc_db_total_size_in_use_in_bytes 5.153357824e+09

Interestingly, multiple run of the hashkv command output indicates there are no data inconsistency.

REVISION=$(etcdctl get foo -w json | jq -r '.header.revision')

etcdctl endpoint hashkv \

--endpoints IP_1,IP_2,IP_3 \

--rev $REVISION -w table

+---------------------------+-----------+

| ENDPOINT | HASH |

+---------------------------+-----------+

| xxxxx IP_1 | 637567879 |

| xxxxx IP_2 | 637567879 |

| xxxxx IP_3 | 637567879 |

+---------------------------+-----------+

ClientURLs are obfuscated.

After taking the db snapshot of node1 and node2. I ran some bbolt inspection commands to gain more insights.

node1

$ sudo bbolt page /investigate-node-a/datadir/member/snap/db 0

Page ID: 0

Page Type: meta

Total Size: 4096 bytes

Version: 2

Page Size: 4096 bytes

Flags: 00000000

Root: <pgid=686251>

Freelist: <pgid=18446744073709551615>

HWM: <pgid=956035>

Txn ID: 171026

Checksum: 34db10a3202fae85

$ sudo ls -lh /investigate-node-a/datadir/member/snap/db

-rw------- 1 root root 3.7G Jul 20 05:31 /investigate-node-a/datadir/member/snap/db

$ sudo bbolt stats /investigate-node-a/datadir/member/snap/db

Aggregate statistics for 10 buckets

Page count statistics

Number of logical branch pages: 6722

Number of physical branch overflow pages: 0

Number of logical leaf pages: 696527

Number of physical leaf overflow pages: 233880

Tree statistics

Number of keys/value pairs: 2018088

Number of levels in B+tree: 4

Page size utilization

Bytes allocated for physical branch pages: 27533312

Bytes actually used for branch data: 23318078 (84%)

Bytes allocated for physical leaf pages: 3810947072

Bytes actually used for leaf data: 3087101146 (81%)

Bucket statistics

Total number of buckets: 10

Total number on inlined buckets: 8 (80%)

Bytes used for inlined buckets: 874 (0%)

node2

sudo bbolt page /investigate-node-b/datadir/member/snap/db 0

Page ID: 0

Page Type: meta

Total Size: 4096 bytes

Version: 2

Page Size: 4096 bytes

Flags: 00000000

Root: <pgid=1277606>

Freelist: <pgid=18446744073709551615>

HWM: <pgid=1277607>

Txn ID: 159180

Checksum: cf97efb8536103bf

$ sudo ls -lh /investigate-node-b/datadir/member/snap/db

-rw------- 1 root root 4.9G Jul 20 05:38 /investigate-node-b/datadir/member/snap/db

$ sudo bbolt stats /investigate-node-b/datadir/member/snap/db

Aggregate statistics for 10 buckets

Page count statistics

Number of logical branch pages: 7970

Number of physical branch overflow pages: 0

Number of logical leaf pages: 834707

Number of physical leaf overflow pages: 434922

Tree statistics

Number of keys/value pairs: 2411556

Number of levels in B+tree: 4

Page size utilization

Bytes allocated for physical branch pages: 32645120

Bytes actually used for branch data: 27944661 (85%)

Bytes allocated for physical leaf pages: 5200400384

Bytes actually used for leaf data: 4192003086 (80%)

Bucket statistics

Total number of buckets: 10

Total number on inlined buckets: 8 (80%)

Bytes used for inlined buckets: 874 (0%)

Note: our etcd operator rans defrag periodically in randomized (+- 10mins) 6 hours exclusively which will shrink/reclaim the un-used free page. But it does not explain the above situation.

What did you expect to happen?

I expect DB size diff can not be diverged like above. It cannot monotonically grow without decrease.

How can we reproduce it (as minimally and precisely as possible)?

The issue is still ongoing.

Anything else we need to know?

N/A

Etcd version (please run commands below)

sh-4.2$ etcdctl version

etcdctl version: 3.5.4

API version: 3.5

sh-4.2$ etcd --version

etcd Version: 3.5.4

Git SHA: b4d0ba964

Go Version: go1.16.15

Go OS/Arch: linux/amd64

Etcd configuration (command line flags or environment variables)

/usr/bin/etcd --name node-1 \

--data-dir /mnt/xvdb/datadir \

--wal-dir /mnt/xvdb/datadir/member/wal --snapshot-count 10000 \

--initial-cluster-token 8e84c0ec-ef6d-4616-98fc-008b0f120f4f \

--advertise-client-urls http://IP_1:2379 --listen-client-urls http://0.0.0.0:2379 \

--listen-peer-urls http://0.0.0.0:2380 --initial-advertise-peer-urls http://IP_1:2380 \

--quota-backend-bytes 8388608000 --max-request-bytes 1572864 \

--grpc-keepalive-interval 2h0m0s --pre-vote=true --logger zap

Etcd debug information (please run commands blow, feel free to obfuscate the IP address or FQDN in the output)

$ etcdctl member list -w table

# paste output here

$ etcdctl --endpoints=<member list> endpoint status -w table

# paste output here

Relevant log output

No response

I haven't looked to deep why this happens (expect you don't run defrag on all members), but please remember that db_total_size is different than db_in_use_size.

https://github.com/etcd-io/etcd/blob/6d27a42b7d6191da43d27930282de5b9e54ead7c/server/storage/backend/backend.go#L621-L630

The diff is the number of freePage * page size (4k). I think it might be because of compaction (need to confirm).

But in the manual scraping /metric result, even db_in_use_size diverged as big as 2G.

The diff is the number of freePage * page size (4k). I think it might be because of compaction (need to confirm).

Compaction windows is negotiated on raft, so I would not expect compaction could cause members diverging. Only option would be that compaction running in background bugged out on one of the members, but that would be strange.

But in the manual scraping /metric result, even db_in_use_size diverged as big as 2G.

This definitely is unexpected. Would love to see boltdb dump diff. To rule out compaction as a culprit could to scan revision space?

This issue has been automatically marked as stale because it has not had recent activity. It will be closed after 21 days if no further activity occurs. Thank you for your contributions.

This issue has been automatically marked as stale because it has not had recent activity. It will be closed after 21 days if no further activity occurs. Thank you for your contributions.

感谢,这边也遇到相同的问题

root@k8s-master01 17:19:10 ~ # etcdctl --endpoints="10.10.11.99:2379,10.10.11.100:2379,10.10.11.102:2379" --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem --cert=/etc/kubernetes/pki/etcd/etcd.pem --key=/etc/kubernetes/pki/etcd/etcd-key.pem endpoint status --write-out=table +-------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+ | ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS | +-------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+ | 10.10.11.99:2379 | ce473dddcf114fa6 | 3.5.6 | 129 MB | true | false | 81 | 127683790 | 127683790 | | | 10.10.11.100:2379 | 8c3d1a8f0a5bc682 | 3.5.6 | 127 MB | false | false | 81 | 127683790 | 127683790 | | | 10.10.11.102:2379 | 2a25a0237b706a0d | 3.5.6 | 127 MB | false | false | 81 | 127683790 | 127683790 | | +-------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+ root@k8s-master01 17:20:53 ~ # REVISION=$(etcdctl --endpoints="10.10.11.99:2379,10.10.11.100:2379,10.10.11.102:2379" --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem --cert=/etc/kubernetes/pki/etcd/etcd.pem --key=/etc/kubernetes/pki/etcd/etcd-key.pem get foo -w json | jq -r '.header.revision') root@k8s-master01 17:26:20 ~ # etcdctl --endpoints="10.10.11.99:2379,10.10.11.100:2379,10.10.11.102:2379" --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem --cert=/etc/kubernetes/pki/etcd/etcd.pem --key=/etc/kubernetes/pki/etcd/etcd-key.pem endpoint hashkv --rev $REVISION -w table +-------------------+------------+ | ENDPOINT | HASH | +-------------------+------------+ | 10.10.11.99:2379 | 1979968429 | | 10.10.11.100:2379 | 1979968429 | | 10.10.11.102:2379 | 1979968429 | +-------------------+------------+ root@k8s-master01 17:26:47 ~ # etcdctl --endpoints="10.10.11.99:2379,10.10.11.100:2379,10.10.11.102:2379" --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem --cert=/etc/kubernetes/pki/etcd/etcd.pem --key=/etc/kubernetes/pki/etcd/etcd-key.pem endpoint status --write-out=table +-------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+ | ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS | +-------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+ | 10.10.11.99:2379 | ce473dddcf114fa6 | 3.5.6 | 129 MB | true | false | 81 | 127689679 | 127689679 | | | 10.10.11.100:2379 | 8c3d1a8f0a5bc682 | 3.5.6 | 128 MB | false | false | 81 | 127689679 | 127689679 | | | 10.10.11.102:2379 | 2a25a0237b706a0d | 3.5.6 | 128 MB | false | false | 81 | 127689679 | 127689679 | | +-------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+ root@k8s-master01 17:27:00 ~ #

This issue has been automatically marked as stale because it has not had recent activity. It will be closed after 21 days if no further activity occurs. Thank you for your contributions.