mini.nvim

Beta-testing 'mini.test'

Please leave your feedback about the new 'mini.test' module here. Feel free to either add a new comment or upvote an existing one.

Some things that I am interested to find out (obviously, besides bugs):

- Are configuration and function names intuitive enough?

- Are default values of settings convenient for you?

- Is documentation (both help file and 'TESTING.md') clear enough?

I'm getting a problem: when I try to clone it back in, I get this invalid path error.

is this because I'm on windows?

~#@❯ git reset

error: invalid path 'tests/screenshots/tests-test_completion.lua-|-Autocompletion-|-works-with-LSP-client'

fatal: make_cache_entry failed for path 'tests/screenshots/tests-test_completion.lua-|-Autocompletion-|-works-with-LSP-client'

Thanks for bringing this!

Although 'mini.test' doesn't seem to work on Windows yet, it should be possible to clone/checkout the whole plugin on the latest main branch. Would you mind checking that?

That is what I get for neglecting to set up Continuous Integration for Windows and forgetting that it requires extra path sanitization :(

Yeah, a no go, it will not let me check out main, only stable. The path thing is quite a bear, haha.

Hmmm... I tried a fresh clone and checkout on Windows in GitHub Actions. Here is the full job result. It had warnings about several paths being identical on a case-insensitive system, but besides that it seemed to check out properly. I did a full clone inside my Neovim config with git clone https://github.com/echasnovski/mini.nvim deps/mini.nvim and later checked that everything was up to date.

Relevant text results:

git clone https://github.com/echasnovski/mini.nvim deps/mini.nvim

Cloning into 'deps/mini.nvim'...

warning: the following paths have collided (e.g. case-sensitive paths

on a case-insensitive filesystem) and only one from the same

colliding group is in the working tree:

'tests/screenshots/tests-test_jump.lua---Delayed-highlighting---respects-`config.delay.highlight`---test-+-args-{-'F'-}'

'tests/screenshots/tests-test_jump.lua---Delayed-highlighting---respects-`config.delay.highlight`---test-+-args-{-'f'-}'

'tests/screenshots/tests-test_jump.lua---Delayed-highlighting---respects-`config.delay.highlight`---test-+-args-{-'F'-}-002'

'tests/screenshots/tests-test_jump.lua---Delayed-highlighting---respects-`config.delay.highlight`---test-+-args-{-'f'-}-002'

'tests/screenshots/tests-test_jump.lua---Delayed-highlighting---respects-`config.delay.highlight`---test-+-args-{-'T'-}'

'tests/screenshots/tests-test_jump.lua---Delayed-highlighting---respects-`config.delay.highlight`---test-+-args-{-'t'-}'

'tests/screenshots/tests-test_jump.lua---Delayed-highlighting---respects-`config.delay.highlight`---test-+-args-{-'T'-}-002'

'tests/screenshots/tests-test_jump.lua---Delayed-highlighting---respects-`config.delay.highlight`---test-+-args-{-'t'-}-002'

'tests/screenshots/tests-test_jump.lua---Delayed-highlighting---works---test-+-args-{-'F'-}'

'tests/screenshots/tests-test_jump.lua---Delayed-highlighting---works---test-+-args-{-'f'-}'

'tests/screenshots/tests-test_jump.lua---Delayed-highlighting---works---test-+-args-{-'F'-}-002'

'tests/screenshots/tests-test_jump.lua---Delayed-highlighting---works---test-+-args-{-'f'-}-002'

'tests/screenshots/tests-test_jump.lua---Delayed-highlighting---works---test-+-args-{-'T'-}'

'tests/screenshots/tests-test_jump.lua---Delayed-highlighting---works---test-+-args-{-'t'-}'

'tests/screenshots/tests-test_jump.lua---Delayed-highlighting---works---test-+-args-{-'T'-}-002'

'tests/screenshots/tests-test_jump.lua---Delayed-highlighting---works---test-+-args-{-'t'-}-002'

cd deps/mini.nvim && git log -10 --oneline

1ec280c (mini.test) Update buffer reporter to remove unnecessary highlighting.

f3e20fb (mini.test) Add note about reasons to not prefer "busted" style.

a6d8d3a (mini.test) Use more strict screenshot path sanitization.

5c4425c (mini.trailspace tests) Use screen tests and directory name 'dir-*'.

67a0dc4 (mini.tabline tests) Use screen tests and directory name 'dir-*'.

296b1ab (mini.surround tests) Use screen tests.

8ebbdcb (mini.statusline tests) Use screen tests and directory name 'dir-*'.

576ca90 (mini.starter tests) Use screen tests and directory name 'dir-*'.

27d5028 (mini.sessions tests) Use directory name 'dir-*'.

5750681 (mini.misc tests) Use screen tests.

I am not sure how to resolve this here. Are you certain that you are on latest main? It should have a6d8d3a commit in output of git log -10.

In 807f068db267053b7b534ad7fe925d49f8937831 I removed the name collision as well. If you have any problems with main branch, I hope this should help:

git reset c820abfe --hard && git pull origin main

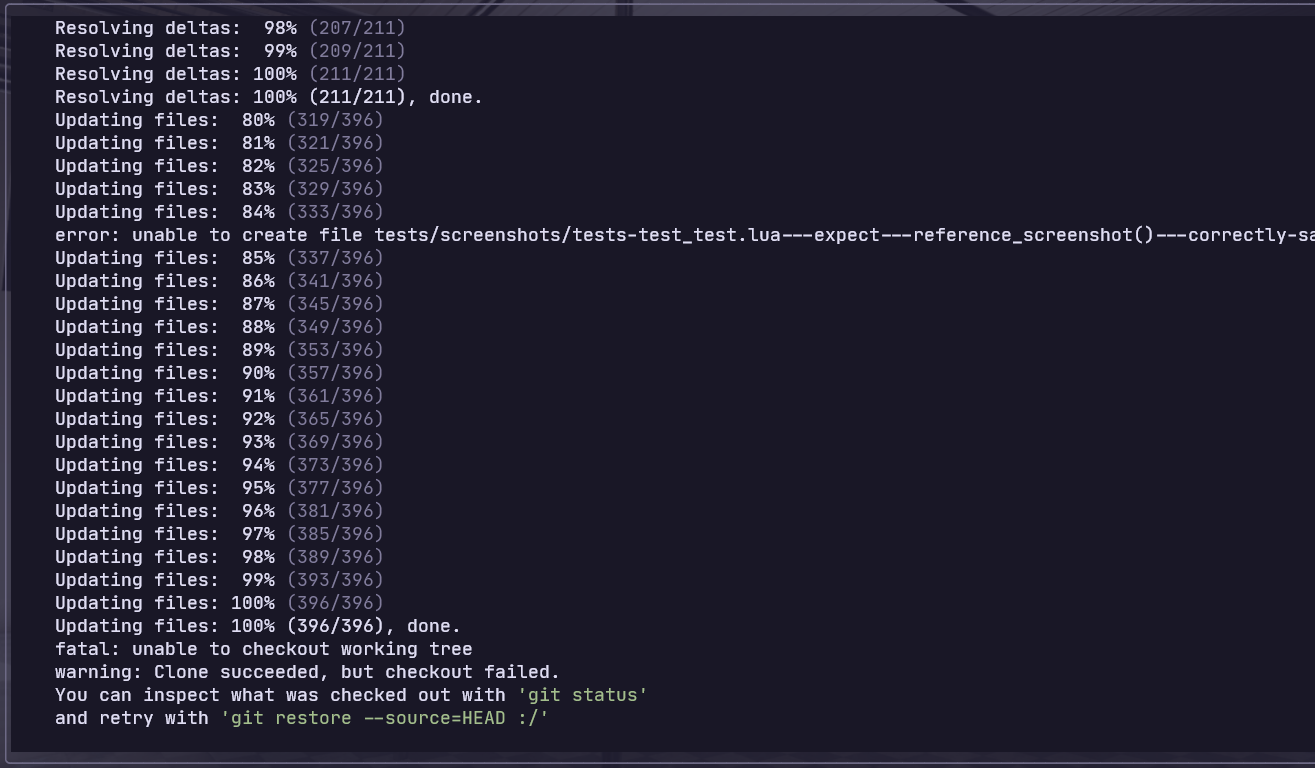

when i delete the folder completely, then have packer sync, i get this on updating files

"error: unable to create file tests/screenshots/tests-test_test.lua---expect---reference_screenshot()---correctly-sanitizes-path--+{}()[]''----'-------------test-+-args-{-'-+{}()[]'-'-----'-------t--0-1-31'-}: Filename too long"

windows is stupid about this sort of thing, but path filenames really need to be short, by default nvim-data gets shoved way in the back of things to begin with. mine is STARTING at 'C:\Users\Will.ehrendreich\AppData\Local\nvim-data\site\pack\packer\opt\mini.nvim'

that's really edging close to the limit to begin with, i wish this wasn't where neovim decided to put stuff.

I personally can look up how to support large filepaths, but that doesn't mean that other people on windows systems are going to be able to. that further introduces problems for some people if they work on a network of all windows systems, because some things that work fine with one guy's machine with long file path support don't work fine without it and it becomes a really hard thing to track down what the problem is, because no one thinks that it's going to be something so absurd. lol.

I might try to move my data path or something.. it's just such a pain because so much defaults to this place on the drive to begin with.

That is... somewhat expected from Windows :)

A quick search turned up this git option. Does setting it help?

git config --system core.longpaths true

If yes, I think I'd just add this to "Installation" section.

I just sidestepped the problem entirely and set XDG_* environment variables to their respective places at the root of my drive (c:/.config, c:/.local/share, etc.)

works fine now.

Thank you for your patience! This gave me a lot of new information to be aware of. I think I'll still try to do something about this long file name.

Hey,

Love the project. I was looking at the tests for mini.surround to get an idea of how tests are written, to maybe see about contributing to the project. When running the tests, they took a while to execute, and I noticed they didn't execute in parallel, which I assume is the cause, whereas projects like plenary.nvim execute tests in parallel.

What are the benefits compared to plenary.nvim tests? Why not use plenary.nvim?

Thank you and keep up the good work!

Hi! Thanks!

I did initially write all tests with 'plenary.nvim'. I had several observations about things which I either didn't like (but which are fixable upstream with some effort) or which come from 'plenary.nvim' design choices I was against (like running each test file in a separate headless Neovim instance). Main points are outlined in the help file. An extra one is that 'mini.test' allows writing parametrized tests, which proved to be really useful after I converted all tests from 'plenary.nvim' to 'mini.test'.

Some personal ones:

- 'mini.test' allows writing tests in a way that is not "nested", unlike "busted-style". When using 'plenary.nvim' tests, I found it inconvenient to go to the line with the error and lack the context of what function it was testing (the initial description in error tests is forgotten quite quickly). It also saves indentation, which becomes quite visible after just two nesting calls.

- I want 'mini.nvim' to have no dependencies apart from Neovim itself. Some other plugins enhance the experience but are not strictly necessary.

Tests take a while to execute because every test case is executed in a fresh Neovim instance, which needs some time to set up. Initially I tried to reuse Neovim instances to increase test execution speed, but it proved to be a source of test errors that were difficult to debug.

There is no parallel execution mostly because it is not that straightforward to implement and 'mini.test' is already too big. I decided to compromise here. Parallel test execution was also a cause of instability in some timing tests (like "test that some action is executed exactly after delay milliseconds"), which are crucial for some modules.

When a test fails due to an error, is there a way to display the full stacktrace? Currently, only the error message + the line in the test itself is displayed.

Not at the moment, no. It was intentional to limit the size of error message, as I didn't really find it useful. Going to the line of failing test and then interactively debugging was always more useful.

More precisely, when using module expectations it should show only those entries in callback that are from test file itself. As there is already a lot in the output, I figured it is a good compromise. Would you find it useful to have more verbose traceback?

Edit: after thinking a bit about it, I am struggling to come up with an example where it is possible to show more traceback, but 'mini.test' doesn't do it. At the moment, it should show those elements in call stack which originated from a file, but not from 'lua/mini/test.lua'. If you can share an example of failing test with traceback you'd like to improve, it would be great.
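To make the filtering rule above concrete, here is a minimal sketch in plain Lua. It is not how 'mini.test' actually does it; `filter_traceback`, the `framework_path` argument, and the sample traceback text (including its line numbers) are all made up for illustration.

```lua
-- Keep only traceback entries that point into a Lua file which is not
-- the framework's own source. All names here are hypothetical.
local function filter_traceback(traceback, framework_path)
  local kept = {}
  for line in traceback:gmatch("[^\n]+") do
    -- Entries like "[C]: in function 'error'" do not originate from a file
    local is_file_entry = line:find("%.lua:%d+") ~= nil
    -- Plain (non-pattern) search for the framework file path
    local is_framework = line:find(framework_path, 1, true) ~= nil
    if is_file_entry and not is_framework then
      -- Strip leading indentation; parentheses drop gsub's second result
      table.insert(kept, (line:gsub("^%s+", "")))
    end
  end
  return kept
end

-- Illustrative traceback text, not real 'mini.test' output
local sample = table.concat({
  "stack traceback:",
  "\t[C]: in function 'error'",
  "\tlua/foo.lua:1: in function 'f'",
  "\tlua/mini/test.lua:100: in function <lua/mini/test.lua:90>",
  "\ttests/test_foo.lua:5: in function <tests/test_foo.lua:4>",
}, "\n")

local entries = filter_traceback(sample, "lua/mini/test.lua")
for _, entry in ipairs(entries) do print(entry) end
```

With this sample, only the entries from 'lua/foo.lua' and 'tests/test_foo.lua' are kept.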

Going to the line of failing test and then interactively debugging was always more useful.

What's your workflow for interactive debugging?

As there is already a lot in the output, I figured it is a good compromise. Would you find it useful to have more verbose traceback?

Such a flag would be useful.

I am struggling to come up with an example where it is possible to show more traceback, but 'mini.test' doesn't do it. At the moment, it should show those elements in call stack which originated from a file, but not from 'lua/mini/test.lua'. If you can share an example of failing test with traceback you'd like to improve, it would be great.

I'll try to come up with a simple example.

What's your workflow for interactive debugging?

As all tests involve child process, the best I could find and which I am fairly comfortable using is the following:

- Verify that the state of the child process is the one I assume it is. Like "Are lines the same that I expect?", "Is the mode the one I want it to be?", etc. I do this check by prepending the failing line with an `eq()` call. Like `eq(child.get_lines())` or `eq(child.fn.mode())`. Expectations will fail if output is not `nil` and show what the value actually is.

- Debug Lua code itself by updating it. This is "print statement debugging", but assigning something to a global variable which is later checked in the fashion from the previous step. Like if I want to verify that cache is the one I expect it to be, I'll add the line `_G.cache = cache` in the appropriate place, update the failing test with an `eq()` call, and rerun it.

Yes, this is not very advanced compared to some conventional debugging (like breakpoints, stepping in/out, etc.), but it does the job.

Ok, here's an example where the stack trace is omitted.

Given a simple module foo.lua:

function f() error("foobar") end

function g() f() end

function h() g() end

And this test:

local foo = require("foo")

local T = MiniTest.new_set()

T['h'] = function() h() end

return T

I would expect the test output to contain the full stacktrace demonstrating the calls to h, g, and f. But instead, minitest gives me:

FAIL in tests/test_test.lua | h: ...re/nvim/site/pack/packer/start/vim-ctrlspace/lua/foo.lua:3: foobar

Which is just the toplevel error message.

Thanks, this is entirely valid use case.

The reason this is happening is because xpcall() is used when executing test case. It calls on_error callback with argument being only error message.

I think I know how to incorporate it into current code. Need some time to do it cleanly.
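For anyone curious about the `xpcall()` detail, here is a small plain Lua sketch of the difference (an illustration only, not the actual 'mini.test' code). The message handler runs before the stack unwinds, so calling `debug.traceback()` inside it can still capture the calls leading to the error. The functions mirror the example above; the variable names are made up.

```lua
-- Functions mirroring the example module above
local function f() error("foobar") end
local function g() f() end
local function h() g() end

-- Handler that returns only the error message: stack information is lost
local ok_plain, err_plain = xpcall(h, function(msg) return msg end)

-- Handler that appends a traceback while the failing stack is still alive
local ok_trace, err_trace = xpcall(h, function(msg)
  return debug.traceback(msg, 2)
end)

print(err_plain) -- just the error message
print(err_trace) -- error message followed by "stack traceback:" and its entries
```

The exact wording of traceback entries differs between Lua versions and LuaJIT, so it is better not to rely on it in tests.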

@rgrinberg, thanks again for the suggestion! It does seem to be a more appropriate form of output.

Should be implemented in latest main.

Hey! I really like this framework so far!

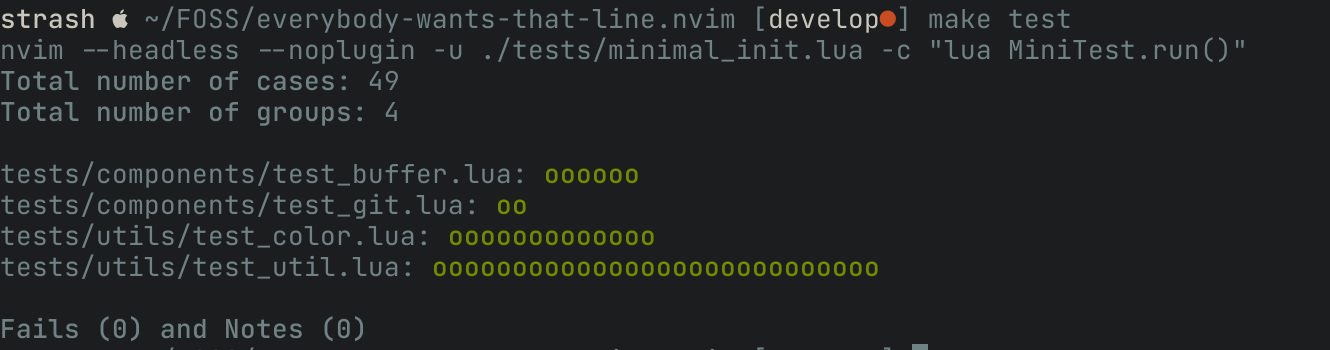

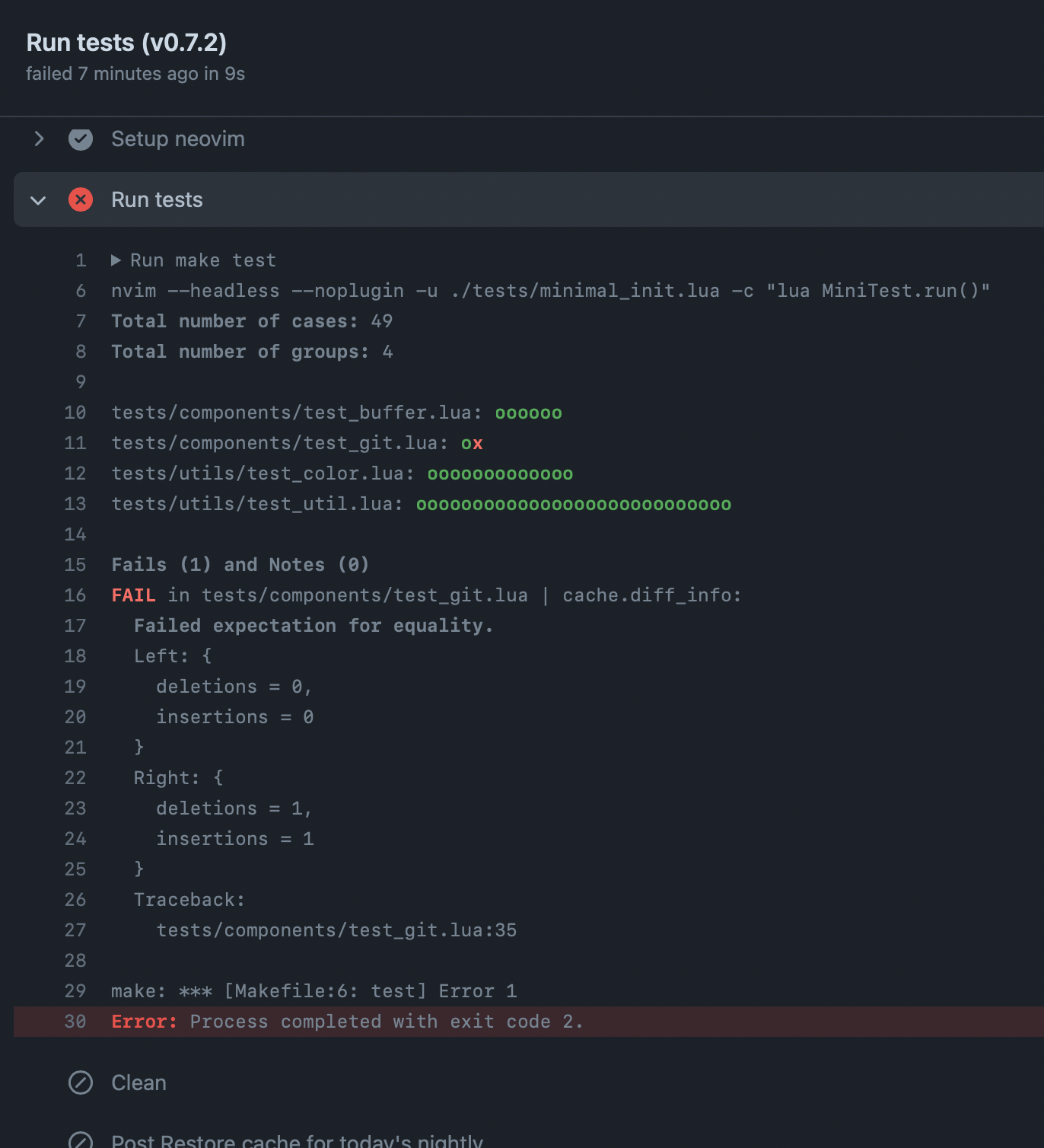

But I faced a very strange situation. All tests passed on my machine, but on GitHub one test fails. I have no idea why this is happening. I thought maybe you could tell me something.

local eq = MiniTest.expect.equality

local child = MiniTest.new_child_neovim()

local T = MiniTest.new_set({

hooks = {

pre_once = function()

child.start({ "-u", "tests/minimal_init.lua" })

child.fn.system([[cd dependencies && git init -b "test_branch" && echo "" >> test_file && git add test_file && git commit -m "init"]])

child.lua([[require("everybody-wants-that-line").setup()]])

child.lua([[M = require("everybody-wants-that-line.components.git")]])

child.cmd("cd dependencies | e test_file")

end,

post_once = function()

child.cmd("cd ..")

child.fn.system("cd dependencies && rm -rf .git test_file")

child.stop()

end,

},

})

-- this works

T["cache.branch"] = function()

eq(child.lua_get("M.cache.branch"), "test_branch")

end

-- this fails

T["cache.diff_info"] = function()

child.api.nvim_buf_set_text(0, 0, 0, 0, 0, { "text" })

child.cmd("w | sleep")

local diff_info = {

insertions = 1,

deletions = 1,

}

eq(child.lua_get("M.cache.diff_info"), diff_info)

end

return T

So what I'm trying to do is to create a repository in one of the directories, add a file, commit it, make changes and then check diff stat (number of changes). The directory is in .gitignore of the main repository just in case.

Local tests

GitHub Action

Hey! I really like this framework so far!

Glad to hear it!

But I faced a very strange situation. All tests passed on my machine, but on GitHub one test fails. I have no idea why this is happening. I thought maybe you could tell me something.

Nothing catches my eye from the 'mini.test' perspective. Some thoughts:

- I found restarting child on every test case to be a useful trade-off for stability sacrificing test execution speed.

- On CI tests involving testing some kind of delay might break from time to time. Doesn't seem to be the case here, but who knows.

- When it comes to getting the latest child state, sometimes it might involve forcing to clear a `libuv` event loop. For that I use the `poke_eventloop()` helper which I found in Neovim source code. But this usually helps when something breaks locally in a test but works interactively.

- Debugging this kind of test failure is hard and involves a lot of head banging against the wall and a decent amount of "Try fix CI tests" pushes. Usually it boils down to incorrect assumptions about what is being done on the CI side of things. For example, a different Neovim or git version. How I would go about debugging this is to verify step by step that it works the way I intend it to. Like, add `eq(vim.fn.isdirectory(".git"))` inside your `T["cache.diff_info"]` and verify it is not zero on the CI side. Then, make sure that your `local stat` is computed the way you expect, and so on. I can't see a better solution here.

With release of version 0.5.0, 'mini.test' is now out of beta-testing. Thank you all for your insight!