insightface


Export insightface models to ONNX and run inference with TensorRT

Hello everyone, here are some scripts that convert insightface params to ONNX models. They consolidate various methods for exporting MXNet or insightface params found on GitHub and CSDN, and can export several insightface models: RetinaFace, arcface, 2d106det and gender-age are all supported. The repo address is: https://github.com/linghu8812/tensorrt_inference

| supported models | scripts |
|---|---|
| RetinaFace | export_retinaface |
| arcface | export_arcface |
| 2d106det | export_2d106det |
| gender-age | export_gender-age |

Export RetinaFace params to ONNX

For the RetinaFace model, RetinaFace-R50, RetinaFace-MobileNet0.25 and RetinaFaceAntiCov are all supported. Copy project/RetinaFace/export_onnx.py to ./detection/RetinaFace or ./detection/RetinaFaceAntiCov; the following commands export those models.

- export the ResNet-50 model: `python3 export_onnx.py`
- export the MobileNet 0.25 model: `python3 export_onnx.py --prefix ./model/mnet.25`
- export the RetinaFaceAntiCov model: `python3 export_onnx.py --prefix ./model/mnet_cov2 --network net3l`
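The `--prefix` argument points at an MXNet checkpoint. MXNet's `mx.model.save_checkpoint` / `load_checkpoint` store a model as `<prefix>-symbol.json` plus `<prefix>-<epoch, 4 digits>.params`, so a wrong prefix is easy to spot before running the export. The helpers below are hypothetical (not part of tensorrt_inference), and the default epoch 0 is an assumption; check the actual `.params` file names in `./model`.

```python
from pathlib import Path
from typing import List, Tuple


def mxnet_checkpoint_files(prefix: str, epoch: int = 0) -> Tuple[Path, Path]:
    """Return the (symbol, params) paths of an MXNet checkpoint.

    MXNet's save_checkpoint writes '<prefix>-symbol.json' and
    '<prefix>-<epoch as 4 digits>.params'; load_checkpoint reads the same pair.
    """
    symbol = Path(f"{prefix}-symbol.json")
    params = Path(f"{prefix}-{epoch:04d}.params")
    return symbol, params


def missing_checkpoint_files(prefix: str, epoch: int = 0) -> List[Path]:
    """List the checkpoint files that do not exist, to catch a wrong --prefix early."""
    return [p for p in mxnet_checkpoint_files(prefix, epoch) if not p.exists()]


if __name__ == "__main__":
    # Prefixes taken from the export commands; epoch 0 is an assumption.
    for prefix in ("./model/mnet.25", "./model/mnet_cov2"):
        symbol, params = mxnet_checkpoint_files(prefix)
        print(symbol, params, "missing:", missing_checkpoint_files(prefix))
```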

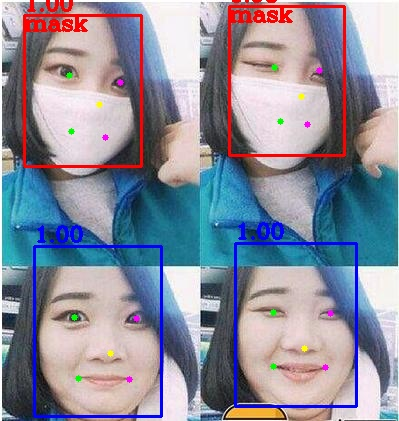

TensorRT inference code is also provided; the inference results are shown below:

- RetinaFace-R50 result

- RetinaFaceAntiCov result

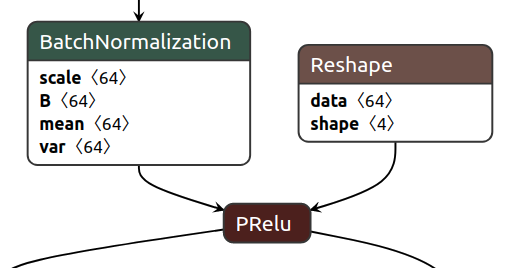
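After the TensorRT engine returns decoded boxes and scores, RetinaFace-style detectors typically finish with non-maximum suppression (NMS) so that each face keeps one box. The sketch below is a plain greedy NMS in pure Python for illustration; it is not the repo's C++ post-processing, and the 0.4 IoU threshold is an assumed default.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_thresh: float = 0.4) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop every remaining box that
    overlaps it by more than iou_thresh, and repeat. Returns kept indices."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thresh]
    return keep


if __name__ == "__main__":
    boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
    print(nms(boxes, [0.9, 0.8, 0.7]))  # prints [0, 2]
```

The two overlapping boxes have an IoU of about 0.82, so only the higher-scoring one survives; the distant box is kept.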

Export arcface params to ONNX

For the arcface model, the export script reshapes the PRelu parameters, so the exported PRelu node structure looks as follows:
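The reason a reshape of the PRelu parameters is commonly needed can be shown with shape arithmetic alone. An MXNet channel-wise PReLU stores its `gamma` with shape `(C,)`, while the ONNX `PRelu` slope must be unidirectionally broadcastable to the NCHW input, and broadcasting aligns trailing axes. A `(C,)` slope therefore lines up with W, not C; reshaping it to `(C, 1, 1)` makes it per-channel. This is a sketch of that rule, with hypothetical helper names; I have not checked the export script's exact implementation.

```python
from typing import Sequence, Tuple


def unidirectional_broadcastable(slope: Sequence[int], x: Sequence[int]) -> bool:
    """True if a tensor of shape `slope` can broadcast to shape `x`
    (ONNX/NumPy rules: align trailing axes; each slope dim is 1 or equal)."""
    if len(slope) > len(x):
        return False
    for s_dim, x_dim in zip(reversed(slope), reversed(x)):
        if s_dim not in (1, x_dim):
            return False
    return True


def onnx_prelu_slope_shape(channels: int) -> Tuple[int, int, int]:
    """Per-channel slope shape for an NCHW input: (C, 1, 1)."""
    return (channels, 1, 1)


if __name__ == "__main__":
    x_shape = (1, 64, 56, 56)  # example NCHW feature map
    gamma_shape = (64,)        # MXNet per-channel PReLU gamma
    # (64,) is aligned with W=56, so it does not broadcast at all.
    print(unidirectional_broadcastable(gamma_shape, x_shape))                 # False
    print(unidirectional_broadcastable(onnx_prelu_slope_shape(64), x_shape))  # True
```

A subtler case: when W happens to equal C (for example an input of shape `(1, 64, 64, 64)`), the unreshaped `(64,)` slope still broadcasts, but along W, so the model runs yet produces wrong activations.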

Export gender-age and 2d106det params to ONNX

The following is a TensorRT result for the 2d106det model. For now, it runs on its own rather than together with RetinaFace.
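When the landmark model runs on its own, its raw output still has to be turned into pixel coordinates. The sketch below assumes the model emits 212 values (106 points × 2) normalized to [-1, 1] over a 192×192 input crop, which is how insightface's own Python landmark code appears to treat it; verify the size and range against your exported model. `decode_landmarks` is a hypothetical helper name.

```python
from typing import List, Sequence, Tuple


def decode_landmarks(raw: Sequence[float], input_size: int = 192) -> List[Tuple[float, float]]:
    """Map a flat [x0, y0, x1, y1, ...] output in [-1, 1] to pixel
    coordinates inside the input_size x input_size crop (assumed layout)."""
    if len(raw) % 2:
        raise ValueError("expected an even number of values (x, y pairs)")
    half = input_size / 2
    return [((raw[i] + 1) * half, (raw[i + 1] + 1) * half) for i in range(0, len(raw), 2)]


if __name__ == "__main__":
    # -1 maps to the crop's left/top edge, +1 to its right/bottom edge.
    print(decode_landmarks([-1.0, -1.0, 0.0, 0.0, 1.0, 1.0]))
```

To draw the points on the original frame, they still need to be mapped back through the inverse of whatever crop/resize transform produced the 192×192 input (for example the face box found by RetinaFace).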

Hi! Great work @linghu8812! I have a somewhat overlapping project, though it is more focused on deploying a TensorRT face recognition pipeline behind a REST API. I hope it's not too rude to share it here instead of spamming issues: InsightFace-REST

Hi, I have successfully converted the model to ONNX and was able to load it. But when I try to use it for inference, it throws a runtime error:

Traceback (most recent call last):

File "predict_generate_detections_for_map.py", line 181, in

https://github.com/linghu8812/tensorrt_inference/issues/12#issuecomment-768060742

This might help.

Where can I download the mnet_cov2 model? Thank you.

@linghu8812 I have an arcface r100 model that is identical to the insightface r100 arcface model, except that its output feature vector has 500 elements instead of 512. When I use your conversion script, the ONNX model always produces the same output regardless of the input. Do I need to adapt the script somehow to make it work?
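A quick way to confirm the "same output regardless of input" symptom is to run two clearly different face crops through the exported model and compare the embeddings; arcface embeddings are normally compared by cosine similarity after L2 normalization. The helpers below are a hypothetical diagnostic sketch, not part of the conversion scripts; the 500-element size is only taken from the question.

```python
import math
from typing import List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("zero vector cannot be normalized")
    return [x / norm for x in v]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two embeddings of equal length."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    return sum(x * y for x, y in zip(l2_normalize(a), l2_normalize(b)))


def looks_constant(embeddings: Sequence[Sequence[float]], tol: float = 1e-6) -> bool:
    """True if every embedding is (nearly) identical to the first one,
    i.e. the model output does not depend on the input."""
    first = embeddings[0]
    for e in embeddings[1:]:
        if len(e) != len(first) or max(abs(x - y) for x, y in zip(first, e)) > tol:
            return False
    return True
```

If `looks_constant` returns True for crops of different people, the problem is in the exported graph (or its input preprocessing) rather than in the comparison step.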