celery


Worker stops working every 60 seconds

Checklist

- [x] I have verified that the issue exists against the master branch of Celery.

- [ ] This has already been asked to the discussions forum first.

- [x] I have read the relevant section in the contribution guide on reporting bugs.

- [x] I have checked the issues list for similar or identical bug reports.

- [x] I have checked the pull requests list for existing proposed fixes.

- [x] I have checked the commit log to find out if the bug was already fixed in the master branch.

- [x] I have included all related issues and possible duplicate issues in this issue (If there are none, check this box anyway).

Mandatory Debugging Information

- [x] I have included the output of `celery -A proj report` in the issue. (if you are not able to do this, then at least specify the Celery version affected).

- [x] I have verified that the issue exists against the master branch of Celery.

- [x] I have included the contents of `pip freeze` in the issue.

- [x] I have included all the versions of all the external dependencies required to reproduce this bug.

Optional Debugging Information

- [x] I have tried reproducing the issue on more than one Python version and/or implementation.

- [ ] I have tried reproducing the issue on more than one message broker and/or result backend.

- [x] I have tried reproducing the issue on more than one version of the message broker and/or result backend.

- [x] I have tried reproducing the issue on more than one operating system.

- [x] I have tried reproducing the issue on more than one workers pool.

- [x] I have tried reproducing the issue with autoscaling, retries, ETA/Countdown & rate limits disabled.

- [x] I have tried reproducing the issue after downgrading and/or upgrading Celery and its dependencies.

Related Issues and Possible Duplicates

Related Issues

- None

Possible Duplicates

- None

Environment & Settings

Celery version: 5.3.0a1 (dawn-chorus)

celery report Output:

Steps to Reproduce

Required Dependencies

- Minimal Python Version: Python 3.10.4

- Minimal Celery Version: 5.3.0a1

- Minimal Kombu Version: 5.3.0a1

- Minimal Broker Version: RabbitMQ 3.9.1

- Minimal Result Backend Version: Memory Cache

- Minimal OS and/or Kernel Version: Ubuntu 22.04

- Minimal Broker Client Version: 3.9.1

- Minimal Result Backend Client Version: N/A or Unknown

Python Packages

software -> celery:5.3.0a1 (dawn-chorus) kombu:5.3.0a1 py:3.10.4 billiard:3.6.4.0 py-amqp:5.1.1

platform -> system:Linux arch:64bit, ELF kernel version:5.15.0-25-generic imp:CPython

loader -> celery.loaders.default.Loader

settings -> transport:amqp results:cache

broker_pool_limit: 32768

broker_url: 'amqp://guest:********@localhost:1928//'

cache_backend: 'memory'

enable_utc: True

imports: ('services',)

result_backend: 'cache'

result_expires: 10800

task_acks_late: True

task_annotations: {
'services.a_service': {'rate_limit': '7000/m'},
'services.b_service': {'rate_limit': '7000/m'},
'services.c_service': {'rate_limit': '7000/m'},
'services.d_service': {'rate_limit': '7000/m'},
'services.e_service': {'rate_limit': '7000/m'},
'services.f_service': {'rate_limit': '1/m'},
'services.g_service': {'rate_limit': '1/m'},
'services.h_service': {'rate_limit': '1/m'},
'services.i_service': {'rate_limit': '7000/m'},
'services.j_service': {'rate_limit': '7000/m'},
'services.xx_service': {'rate_limit': '7000/m'},
'services.zz_service': {'rate_limit': '7000/m'},
'services.vc_service': {'rate_limit': '7000/m'}}

task_ignore_result: True

deprecated_settings: None
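The `task_annotations` above mix `7000/m` limits with three `1/m` limits. A minimal sketch that turns each rate string into executions per second and the minimum spacing between executions, assuming the `N/s`, `N/m`, `N/h` format shown in the settings. `UNIT_SECONDS`, `split_rate`, `per_second` and `min_spacing` are hypothetical helpers for this illustration, not Celery's API. Celery documents `rate_limit` as a per-worker-instance limit, not a global one.

```python
# Hypothetical helpers (not Celery's API) for reading rate-limit strings
# in the "N/s", "N/m", "N/h" form used by task_annotations above.
UNIT_SECONDS = {"s": 1, "m": 60, "h": 3600}

# A subset of the task_annotations shown in the report.
ANNOTATIONS = {
    "services.a_service": {"rate_limit": "7000/m"},
    "services.f_service": {"rate_limit": "1/m"},
    "services.g_service": {"rate_limit": "1/m"},
    "services.h_service": {"rate_limit": "1/m"},
}


def split_rate(rate):
    """Split '7000/m' into (7000.0, 60); a bare number is treated as per second."""
    count, _, unit = rate.partition("/")
    return float(count), UNIT_SECONDS[unit or "s"]


def per_second(rate):
    """Allowed executions per second."""
    count, period = split_rate(rate)
    return count / period


def min_spacing(rate):
    """Minimum seconds between two executions on one worker."""
    count, period = split_rate(rate)
    return period / count


for name, opts in ANNOTATIONS.items():
    rate = opts["rate_limit"]
    print(f"{name}: {per_second(rate):.3f}/s, at most one every {min_spacing(rate):g}s")
```

With the three `1/m` tasks, one worker may start at most one of each per minute, the same cadence as the stall described in this issue. That is a coincidence worth checking, not a confirmed cause.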

pip freeze Output:

aiocron==1.8

aiofiles==0.8.0

aiohttp==3.8.1

aiohttp-socks==0.7.1

aioredis==2.0.1

aiosignal==1.2.0

amqp==5.1.1

anyio==3.5.0

asgiref==3.5.0

async-timeout==4.0.2

asyncpg==0.25.0

attrs==21.4.0

beautifulsoup4==4.11.1

billiard==3.6.4.0

blessed==1.19.0

bs4==0.0.1

celery @ https://github.com/celery/celery/archive/refs/heads/master.zip

certifi==2021.10.8

chardet==4.0.0

charset-normalizer==2.0.12

click==8.1.2

click-didyoumean==0.3.0

click-plugins==1.1.1

click-repl==0.2.0

coverage==6.3.2

crc16==0.1.1

croniter==1.3.4

cryptg==0.3.1

DateTime==4.5

dbus-python==1.2.18

Deprecated==1.2.13

distro-info===1.1build1

Django==4.0.4

dnspython==2.2.1

eventlet==0.33.1

Faker==13.3.5

fastapi==0.75.2

flower==1.0.0

frozenlist==1.3.0

future==0.18.2

gevent==21.12.0

greenlet==1.1.2

gyp==0.1

h11==0.13.0

humanize==0.0.0

idna==3.3

image==1.5.33

iniconfig==1.1.1

jdatetime==4.1.0

kombu==5.3.0a1

lxml==4.8.0

motor @ https://github.com/mongodb/motor/archive/refs/heads/master.zip

multidict==6.0.2

netifaces==0.11.0

nose==1.3.7

packaging==21.3

persiantools==3.0.0

pg-activity==2.2.1

phonenumbers==8.12.47

Pillow==9.1.0

pluggy==1.0.0

pprintpp==0.4.0

prometheus-client==0.14.1

prompt-toolkit==3.0.29

psutil==5.9.1

psycopg2==2.9.3

py==1.11.0

pyaes==1.6.1

pyArango==2.0.1

pyasn1==0.4.8

pycountry==22.3.5

pycountry-convert==0.7.2

pydantic==1.9.0

Pygments==2.11.2

PyGObject==3.42.0

pymongo==4.1.1

pyparsing==3.0.8

Pyrogram==2.0.19

PySocks==1.7.1

pytest==7.1.1

pytest-cov==3.0.0

pytest-mock==3.7.0

python-apt==2.3.0+ubuntu2

python-dateutil==2.8.2

python-debian===0.1.43ubuntu1

python-dotenv==0.20.0

python-multipart==0.0.5

python-socks==2.0.3

pytz==2022.1

pytz-deprecation-shim==0.1.0.post0

PyYAML==5.4.1

qrcode==7.3.1

Random-Word==1.0.7

redis==4.2.2

repoze.lru==0.7

requests==2.27.1

rsa==4.8

six==1.16.0

sniffio==1.2.0

socks==0

soupsieve==2.3.2.post1

speedtest-cli==2.1.3

SQLAlchemy==1.4.35

sqlalchemy2-stubs==0.0.2a22

sqlmodel==0.0.6

sqlparse==0.4.2

starlette==0.17.1

TgCrypto==1.2.3

tomli==2.0.1

tornado==6.1

typing_extensions==4.2.0

tzdata==2022.1

tzlocal==4.2

ubuntu-advantage-tools==27.9

ufw==0.36.1

urllib3==1.26.9

uvicorn==0.17.6

vine==5.0.0

wcwidth==0.2.5

wrapt==1.14.0

yarl==1.7.2

zope.event==4.5.0

zope.interface==5.4.0

Other Dependencies

N/A

Minimally Reproducible Test Case

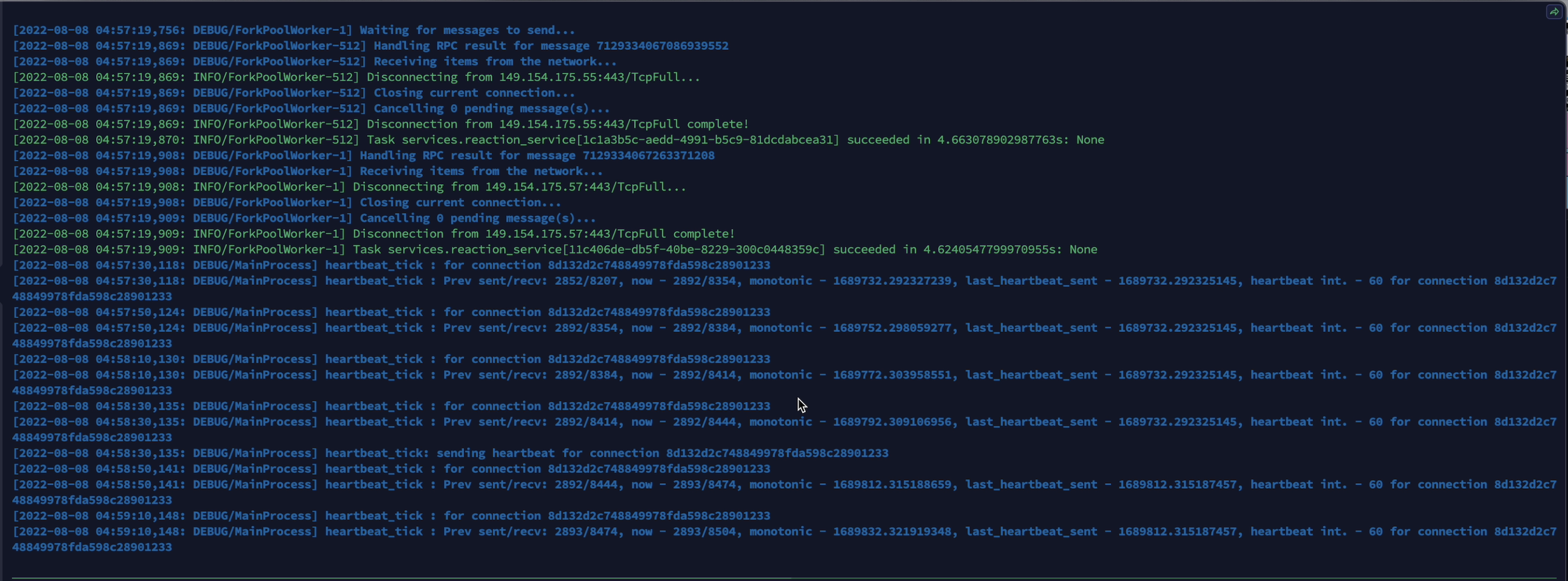

[2022-08-08 03:58:25,725: DEBUG/ForkPoolWorker-522] Closing current connection...

[2022-08-08 03:58:25,725: DEBUG/ForkPoolWorker-522] Cancelling 0 pending message(s)...

[2022-08-08 03:58:25,725: INFO/ForkPoolWorker-522] Disconnection from 149.154.175.58:443/TcpFull complete!

[2022-08-08 03:58:25,725: INFO/ForkPoolWorker-522] Task services.a_service[64f77116-f3f6-4bc1-ace2-5b994258cfd1] succeeded in 2.5671544598881155s: None

[2022-08-08 03:58:25,833: DEBUG/ForkPoolWorker-513] Handling RPC result for message 7129318888505874252

[2022-08-08 03:58:25,833: DEBUG/ForkPoolWorker-513] Receiving items from the network...

[2022-08-08 03:58:25,833: INFO/ForkPoolWorker-513] Disconnecting from 149.154.175.54:443/TcpFull...

[2022-08-08 03:58:25,833: DEBUG/ForkPoolWorker-513] Closing current connection...

[2022-08-08 03:58:25,834: DEBUG/ForkPoolWorker-513] Cancelling 0 pending message(s)...

[2022-08-08 03:58:25,834: INFO/ForkPoolWorker-513] Disconnection from 149.154.175.54:443/TcpFull complete!

[2022-08-08 03:58:25,834: INFO/ForkPoolWorker-513] Task services.a_service[f83856d0-bdae-43f0-8f5f-0272c2f5fc0f] succeeded in 2.6757159510161728s: None

[2022-08-08 03:58:34,700: DEBUG/MainProcess] heartbeat_tick : for connection 158072e042fd43e2982921507c4090df

[2022-08-08 03:58:34,700: DEBUG/MainProcess] heartbeat_tick : Prev sent/recv: 529/751, now - 605/775, monotonic - 1686196.873902797, last_heartbeat_sent - 1686196.873901675, heartbeat int. - 60 for connection 158072e042fd43e2982921507c4090df

[2022-08-08 03:58:54,706: DEBUG/MainProcess] heartbeat_tick : for connection 158072e042fd43e2982921507c4090df

[2022-08-08 03:58:54,706: DEBUG/MainProcess] heartbeat_tick : Prev sent/recv: 605/775, now - 605/805, monotonic - 1686216.879871576, last_heartbeat_sent - 1686196.873901675, heartbeat int. - 60 for connection 158072e042fd43e2982921507c4090df

[2022-08-08 03:59:14,711: DEBUG/MainProcess] heartbeat_tick : for connection 158072e042fd43e2982921507c4090df

[2022-08-08 03:59:14,711: DEBUG/MainProcess] heartbeat_tick : Prev sent/recv: 605/805, now - 605/835, monotonic - 1686236.885361101, last_heartbeat_sent - 1686196.873901675, heartbeat int. - 60 for connection 158072e042fd43e2982921507c4090df

[2022-08-08 03:59:34,716: DEBUG/MainProcess] heartbeat_tick: sending heartbeat for connection 158072e042fd43e2982921507c4090df

[2022-08-08 03:59:54,720: DEBUG/MainProcess] heartbeat_tick : for connection 158072e042fd43e2982921507c4090df

[2022-08-08 03:59:54,720: DEBUG/MainProcess] heartbeat_tick : Prev sent/recv: 605/865, now - 606/895, monotonic - 1686276.894446629, last_heartbeat_sent - 1686276.894445928, heartbeat int. - 60 for connection 158072e042fd43e2982921507c4090df

[2022-08-08 04:00:14,726: DEBUG/MainProcess] heartbeat_tick : for connection 158072e042fd43e2982921507c4090df

[2022-08-08 04:00:14,726: DEBUG/MainProcess] heartbeat_tick : Prev sent/recv: 606/895, now - 606/925, monotonic - 1686296.899726603, last_heartbeat_sent - 1686276.894445928, heartbeat int. - 60 for connection 158072e042fd43e2982921507c4090df
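In this excerpt the last task completes at 03:58:25, while `MainProcess` keeps logging `heartbeat_tick` roughly every 20 seconds, so the main loop is still alive during the gap. To measure stalls over a longer log instead of eyeballing them, a minimal sketch, assuming the `[YYYY-mm-dd HH:MM:SS,mmm: LEVEL/Process] message` line format seen above; `LOG_RE`, `parse_line` and `find_gaps` are hypothetical names.

```python
import re
from datetime import datetime

# Matches lines like:
# [2022-08-08 03:58:25,725: INFO/ForkPoolWorker-522] Task ... succeeded in ...
LOG_RE = re.compile(
    r"^\[(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}): (\w+)/([^\]]+)\] (.*)$"
)


def parse_line(line):
    """Return (timestamp, level, process, message), or None for non-matching lines."""
    m = LOG_RE.match(line.strip())
    if not m:
        return None
    ts = datetime.strptime(m.group(1), "%Y-%m-%d %H:%M:%S,%f")
    return ts, m.group(2), m.group(3), m.group(4)


def find_gaps(lines, threshold=30.0, only_completions=False):
    """Yield (start, end, seconds) for consecutive entries more than `threshold` s apart.

    With only_completions=True, only "Task ... succeeded in ..." lines are
    considered, i.e. the gaps are periods in which no task finished.
    """
    prev = None
    for line in lines:
        parsed = parse_line(line)
        if parsed is None:
            continue  # skip blank lines and anything not in the log format
        ts, _level, _process, msg = parsed
        if only_completions and " succeeded in " not in msg:
            continue
        if prev is not None:
            gap = (ts - prev).total_seconds()
            if gap > threshold:
                yield prev, ts, gap
        prev = ts
```

For example, `list(find_gaps(open("worker.log"), only_completions=True))` (file name hypothetical) lists every interval longer than 30 s in which no task reported success.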

Expected Behavior

Actual Behavior

Hello,

I need to handle 5 million tasks daily.

I have 100 queues, the worker concurrency is 1000, and a single worker consumes multiple queues, like `celery -A proj worker -Q Qa,Qb,Qc ...` (I don't know whether that is relevant).

Sometimes my worker gets stuck; these are my logs.

The worker stalls for 60 to 100 seconds and then continues working.

Sometimes it handles tasks very fast, and sometimes I run into this problem and the worker stalls every 60 to 100 seconds.

Should I panic, or is everything OK?
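As a back-of-the-envelope check on these numbers, Little's law (average in-flight work = arrival rate × time per item) gives the average number of pool processes busy at once. This assumes the 5 million tasks are spread evenly over the day and that each task takes about 2.6 s, as in the `succeeded in 2.57s` / `2.68s` log lines; real traffic is burstier, so peaks will be higher. The variable names are illustrative.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

daily_tasks = 5_000_000  # from the post above
concurrency = 1_000      # worker concurrency from the post above
task_seconds = 2.6       # assumption: approx. task duration seen in the log excerpt

# Average arrival rate if the load were spread evenly over the day.
avg_rate = daily_tasks / SECONDS_PER_DAY  # tasks per second

# Little's law (L = lambda * W): average number of tasks running at once.
avg_busy = avg_rate * task_seconds

# Fraction of the pool processes busy on average.
avg_utilization = avg_busy / concurrency

print(f"{avg_rate:.1f} tasks/s, ~{avg_busy:.0f} busy processes, {avg_utilization:.0%} of the pool")
```

An average of roughly 15% busy suggests the stalls are unlikely to come from the pool simply being too small on average.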

Hey @arashm404 :wave:, Thank you for opening an issue. We will get back to you as soon as we can. Also, check out our Open Collective and consider backing us - every little helps!


Did you use `rate_limit` with a periodic task?

When I did that before, I ran into the same situation.

The other tasks seemed to wait for the periodic task.

After I changed them, I no longer had this problem.

My Celery version is 5.2.7.
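To see why a `1/m` limit can look like a 60-second stall, here is a minimal token-bucket sketch. Celery's worker implements `rate_limit` with a token bucket as well (kombu's `TokenBucket`), but the class below is a simplified stand-in, not that code: it assumes a bucket holding at most one token that refills at the configured rate, with time passed in explicitly so the example is deterministic. `TokenBucket`, `try_consume` and `wait_time` here are hypothetical names.

```python
class TokenBucket:
    """Simplified token bucket: refills at `rate` tokens/s, holds at most `capacity`."""

    def __init__(self, rate, capacity=1.0, now=0.0):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full, so the first task runs immediately
        self.last = now

    def _refill(self, now):
        # Add the tokens accrued since the last check, capped at capacity.
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now

    def try_consume(self, now, n=1.0):
        """Take n tokens if available at time `now`; return True on success."""
        self._refill(now)
        if self.tokens >= n:
            self.tokens -= n
            return True
        return False

    def wait_time(self, now, n=1.0):
        """Seconds until n tokens will be available (0.0 if available now)."""
        self._refill(now)
        missing = n - self.tokens
        return max(0.0, missing / self.rate)


if __name__ == "__main__":
    one_per_minute = TokenBucket(rate=1 / 60)  # rate_limit '1/m'
    print(one_per_minute.try_consume(now=0.0))        # True: first task runs at once
    print(one_per_minute.try_consume(now=1.0))        # False: second task is refused
    print(round(one_per_minute.wait_time(now=1.0)))   # 59: seconds it still has to wait
```

With `1/m`, every second task of that type has to wait about a minute for a token; whether that delays other tasks in the worker depends on how Celery schedules rate-limited messages, which this model does not cover.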


Did you find out which part of Celery is causing this issue?

Lately I have been focused on my own project, so I haven't tracked this down in the source code yet. I may try later; if I find it, I will send a PR. :)