parseq

TypeError: __init__() missing 21 required positional arguments: [When running test.py and read.py]

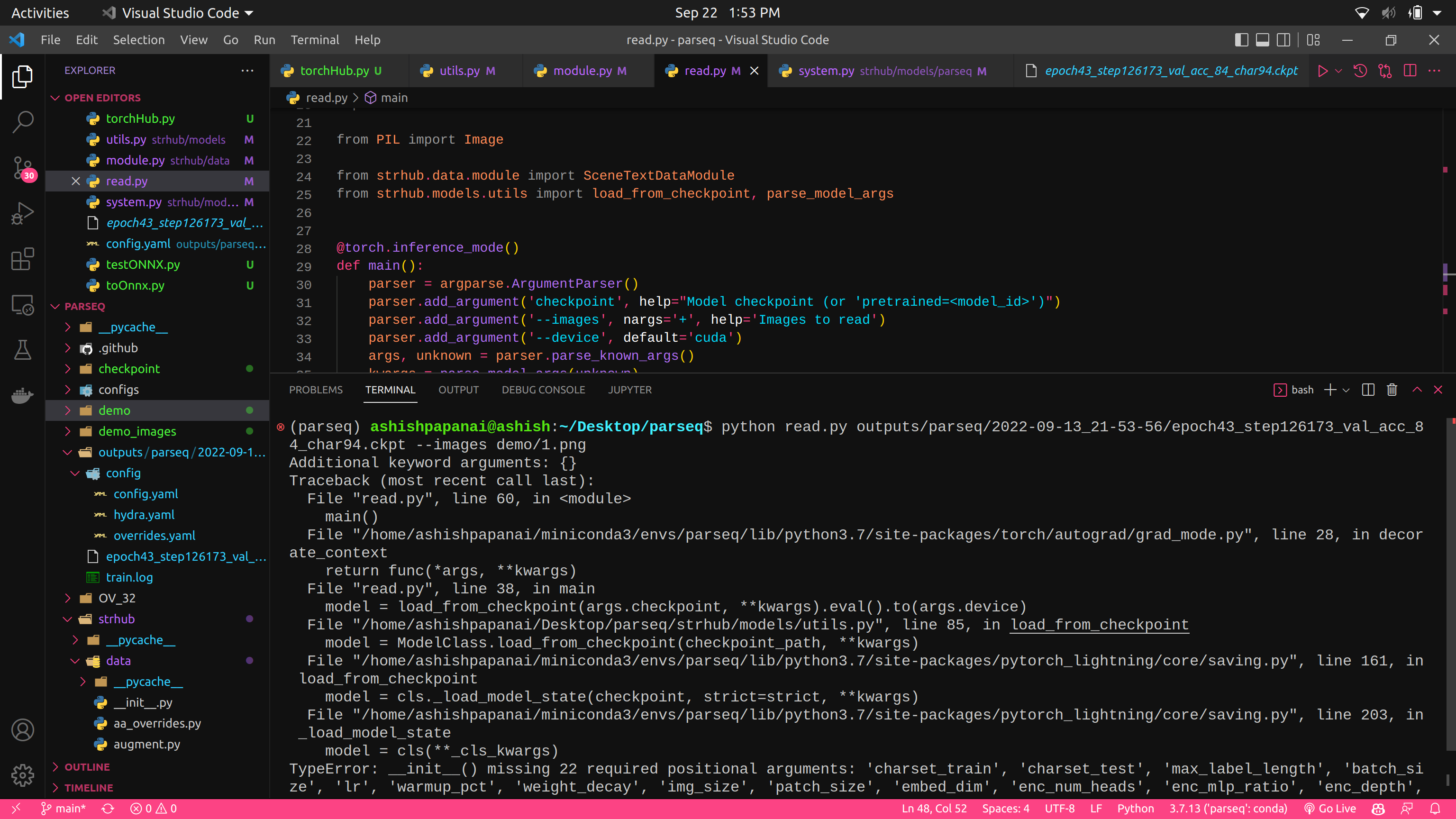

I get the following error when I try to reproduce the code and run test.py and read.py:

    Additional keyword arguments: {'charset_test': '0123456789abcdefghijklmnopqrstuvwxyz'}
    Traceback (most recent call last):
      File "test.py", line 133, in <module>
        main()
      File "/home/ashishpapanai/miniconda3/envs/parseq/lib/python3.7/site-packages/torch/autograd/grad_mode.py", line 28, in decorate_context
        return func(*args, **kwargs)
      File "test.py", line 87, in main
        model = load_from_checkpoint(args.checkpoint, **kwargs).eval().to(args.device)
      File "/home/ashishpapanai/Desktop/parseq/strhub/models/utils.py", line 85, in load_from_checkpoint
        model = ModelClass.load_from_checkpoint(checkpoint_path, **kwargs)
      File "/home/ashishpapanai/miniconda3/envs/parseq/lib/python3.7/site-packages/pytorch_lightning/core/saving.py", line 161, in load_from_checkpoint
        model = cls._load_model_state(checkpoint, strict=strict, **kwargs)
      File "/home/ashishpapanai/miniconda3/envs/parseq/lib/python3.7/site-packages/pytorch_lightning/core/saving.py", line 203, in _load_model_state
        model = cls(**_cls_kwargs)
    TypeError: __init__() missing 21 required positional arguments: 'charset_train', 'max_label_length', 'batch_size', 'lr', 'warmup_pct', 'weight_decay', 'img_size', 'patch_size', 'embed_dim', 'enc_num_heads', 'enc_mlp_ratio', 'enc_depth', 'dec_num_heads', 'dec_mlp_ratio', 'dec_depth', 'perm_num', 'perm_forward', 'perm_mirrored', 'decode_ar', 'refine_iters', and 'dropout'

Using the following command helps:

./read.py pretrained=parseq refine_iters:int=2 decode_ar:bool=false --images demo_images/*

Is there any way to specify the weights path instead of using the weights provided by the author?

The pretrained parameter is provided for easy fine-tuning of the released weights. If you want to load your own checkpoint, use the ckpt_path parameter. This expects a Lightning checkpoint (weights + training params). The released .pt files contain the model weights only.

For any other use-case, you can just manually edit the code. See the create_model() function for more info.
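The distinction above between a Lightning checkpoint and a weights-only file is the likely cause of the TypeError in the original report. The following is a simplified sketch of the idea, not Lightning's actual code: `ToyModel` and `build_from_checkpoint` are hypothetical names, and the assumption is that the constructor arguments come from the checkpoint's saved hyperparameters plus any extra keyword arguments.

```python
class ToyModel:
    """Hypothetical stand-in for a model whose constructor has required arguments."""

    def __init__(self, charset_train, max_label_length, lr, charset_test=None):
        self.charset_train = charset_train
        self.max_label_length = max_label_length
        self.lr = lr
        self.charset_test = charset_test


def build_from_checkpoint(cls, checkpoint, **kwargs):
    """Simplified imitation of restoring a model from a checkpoint dict.

    Constructor arguments are taken from the saved hyperparameters (if any),
    then overridden by the caller's keyword arguments.
    """
    init_args = dict(checkpoint.get("hyper_parameters", {}))
    init_args.update(kwargs)
    return cls(**init_args)


# A Lightning-style checkpoint carries the training parameters with the weights.
lightning_style = {
    "state_dict": {},
    "hyper_parameters": {"charset_train": "abc", "max_label_length": 25, "lr": 7e-4},
}
# A weights-only file has nothing to fill the constructor arguments from.
weights_only = {"encoder.weight": [0.0]}

model = build_from_checkpoint(ToyModel, lightning_style, charset_test="abc")

error_message = None
try:
    build_from_checkpoint(ToyModel, weights_only, charset_test="abc")
except TypeError as err:
    # Only charset_test was supplied, so every required argument is reported missing.
    error_message = str(err)
```

In this sketch the second call fails in the same way as the traceback: only the extra keyword argument reaches the constructor, so all required arguments are missing.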

Thanks @baudm,

How do I use ckpt_path? Is there any documentation for it?

How do I obtain the Lightning parameters?

I am not looking to fine-tune the model. I want to run read.py, which I think is meant for single-image inference, using fine-tuned weights obtained from my team. Could you please guide me in fixing the missing-arguments error when I try to load weights (.pt) from a path?

Sorry, I didn't catch the note about test and read. ckpt_path is for train.py only.

For test and read, see: https://github.com/baudm/parseq#evaluation

The very first sample command shows how to use your own Lightning checkpoint.

@baudm, I tried using a PyTorch Lightning checkpoint (.ckpt) and am still getting the same error.

Do you have any .ckpt checkpoint for me to test on?

I think you're using the wrong checkpoint. You can check by manually loading the checkpoint using torch.load and checking the values stored in the hyperparameters key.
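A minimal sketch of that check, assuming the checkpoint loads as a plain dictionary and that Lightning stores the training parameters under the `hyper_parameters` key next to `state_dict`. The helper names `describe_checkpoint` and `load_and_describe` are hypothetical and not part of the repository. On recent PyTorch versions, `torch.load` may also need `weights_only=False` for checkpoints that contain non-tensor objects; only do that for files you trust.

```python
def describe_checkpoint(ckpt):
    """Classify an already-loaded checkpoint object and return its hyperparameters."""
    if not isinstance(ckpt, dict):
        return {"kind": "unknown", "hyper_parameters": {}}
    hparams = ckpt.get("hyper_parameters")
    if "state_dict" in ckpt and hparams:
        # Weights plus training parameters: what a Lightning checkpoint looks like.
        return {"kind": "lightning", "hyper_parameters": dict(hparams)}
    # Either a bare state dict (weights only) or a checkpoint without saved hparams.
    return {"kind": "weights_only_or_incomplete", "hyper_parameters": {}}


def load_and_describe(path):
    """Load a checkpoint file on the CPU and describe it."""
    import torch  # imported here so describe_checkpoint works without PyTorch

    ckpt = torch.load(path, map_location="cpu")
    return describe_checkpoint(ckpt)
```

For example, `load_and_describe("path/to/your.ckpt")` (a placeholder path) should report `lightning` and list values such as `charset_train` when the file is a full Lightning checkpoint. If it reports `weights_only_or_incomplete`, the arguments named in the TypeError have nowhere to come from.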

This works, thanks @baudm.