
FieldExport does not export field to ConfigMap

Describe the bug

Following this tutorial, we are trying to export the endpoint of an RDS database to a ConfigMap in order to provide it to our pods as an environment variable.

However, the ConfigMap does not contain the value after applying the configuration.
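For context, once the FieldExport has written the value, a pod would typically consume it through `configMapKeyRef`. This is a minimal sketch, assuming the default key naming `<FieldExport namespace>.<FieldExport name>` used in the ACK field-export tutorial (check this against your controller version). The pod name, image and `DATABASE_HOST` variable name are hypothetical.

```yaml
# Hypothetical Pod showing how the exported RDS endpoint could be injected as an env var.
apiVersion: v1
kind: Pod
metadata:
  name: example-app                # hypothetical name
  namespace: account-applications-api
spec:
  containers:
    - name: app
      image: example/app:latest    # hypothetical image
      env:
        - name: DATABASE_HOST      # hypothetical variable name
          valueFrom:
            configMapKeyRef:
              name: postgres-database-cm
              # Assumed default key format: <FieldExport namespace>.<FieldExport name>
              key: account-applications-api.export-database-url
```

If the key is missing from the ConfigMap, as in this issue, the pod will fail to start unless the reference is marked `optional: true`.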

Steps to reproduce

- Apply this configuration:

```yaml
apiVersion: rds.services.k8s.aws/v1alpha1
kind: DBInstance
metadata:
  name: account-applications-api-db
  namespace: account-applications-api
spec:
  allocatedStorage: 20
  backupRetentionPeriod: 14
  dbInstanceClass: db.t3.medium
  dbInstanceIdentifier: account-applications-api
  dbName: account_applications_api
  dbSubnetGroupName: account-applications-api-db-subnets
  deletionProtection: true
  engine: postgres
  engineVersion: "13"
  masterUsername: account_applications_api
  # TODO: preferredBackupWindow?
  # preferredMaintenanceWindow?
  masterUserPassword:
    name: db-secrets
    key: password
    namespace: account-applications-api
  multiAZ: true
  publiclyAccessible: false
  storageEncrypted: true
  vpcSecurityGroupIDs:
    - sg-0ccf9607832ded7fe
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: postgres-database-cm
  namespace: account-applications-api
data: {}
---
apiVersion: services.k8s.aws/v1alpha1
kind: FieldExport
metadata:
  name: export-database-url
  namespace: account-applications-api
spec:
  from:
    path: ".status.endpoint.address"
    resource:
      group: rds.services.k8s.aws
      kind: DBInstance
      name: account-applications-api-db
  to:
    kind: configmap
    name: postgres-database-cm
    namespace: account-applications-api
```

- Then run:

```
kubectl describe configmap/postgres-database-cm -n account-applications-api
```

It is worth noting that

```
kubectl describe fieldexport/export-database-url -n account-applications-api
```

does not return any status or events.

Expected outcome

The output should show `data` containing the `export-database-url` field.

Environment

- Kubernetes version: 1.22

- Using EKS: yes, eks.2

- AWS service targeted: RDS

Added more logs from the RDS controller:

```
{"level":"debug","ts":1656512130.7295468,"logger":"ackrt","msg":"> r.patchResourceStatus","account":"196530702500","role":"","region":"ap-southeast-1","kind":"DBInstance","namespace":"account-applications-api","name":"account-applications-api-db","is_adopted":false,"generation":8}
{"level":"debug","ts":1656512130.7295513,"logger":"ackrt","msg":">> kc.Patch (status)","account":"196530702500","role":"","region":"ap-southeast-1","kind":"DBInstance","namespace":"account-applications-api","name":"account-applications-api-db","is_adopted":false,"generation":8}
{"level":"debug","ts":1656512130.7465212,"logger":"ackrt","msg":"patched resource status","account":"196530702500","role":"","region":"ap-southeast-1","kind":"DBInstance","namespace":"account-applications-api","name":"account-applications-api-db","is_adopted":false,"generation":8}
{"level":"debug","ts":1656512130.7465491,"logger":"ackrt","msg":"<< kc.Patch (status)","account":"196530702500","role":"","region":"ap-southeast-1","kind":"DBInstance","namespace":"account-applications-api","name":"account-applications-api-db","is_adopted":false,"generation":8}
{"level":"debug","ts":1656512130.7465549,"logger":"ackrt","msg":"< r.patchResourceStatus","account":"196530702500","role":"","region":"ap-southeast-1","kind":"DBInstance","namespace":"account-applications-api","name":"account-applications-api-db","is_adopted":false,"generation":8}
{"level":"debug","ts":1656512130.7465625,"logger":"ackrt","msg":"requeue needed after error","account":"196530702500","role":"","region":"ap-southeast-1","kind":"DBInstance","namespace":"account-applications-api","name":"account-applications-api-db","is_adopted":false,"generation":8,"error":"DB Instance in 'rebooting' state, cannot be modified until 'available'.","after":30}
{"level":"debug","ts":1656512130.7565234,"logger":"exporter.field-export-reconciler","msg":"error did not need requeue","error":"the source resource is not synced yet"}
```

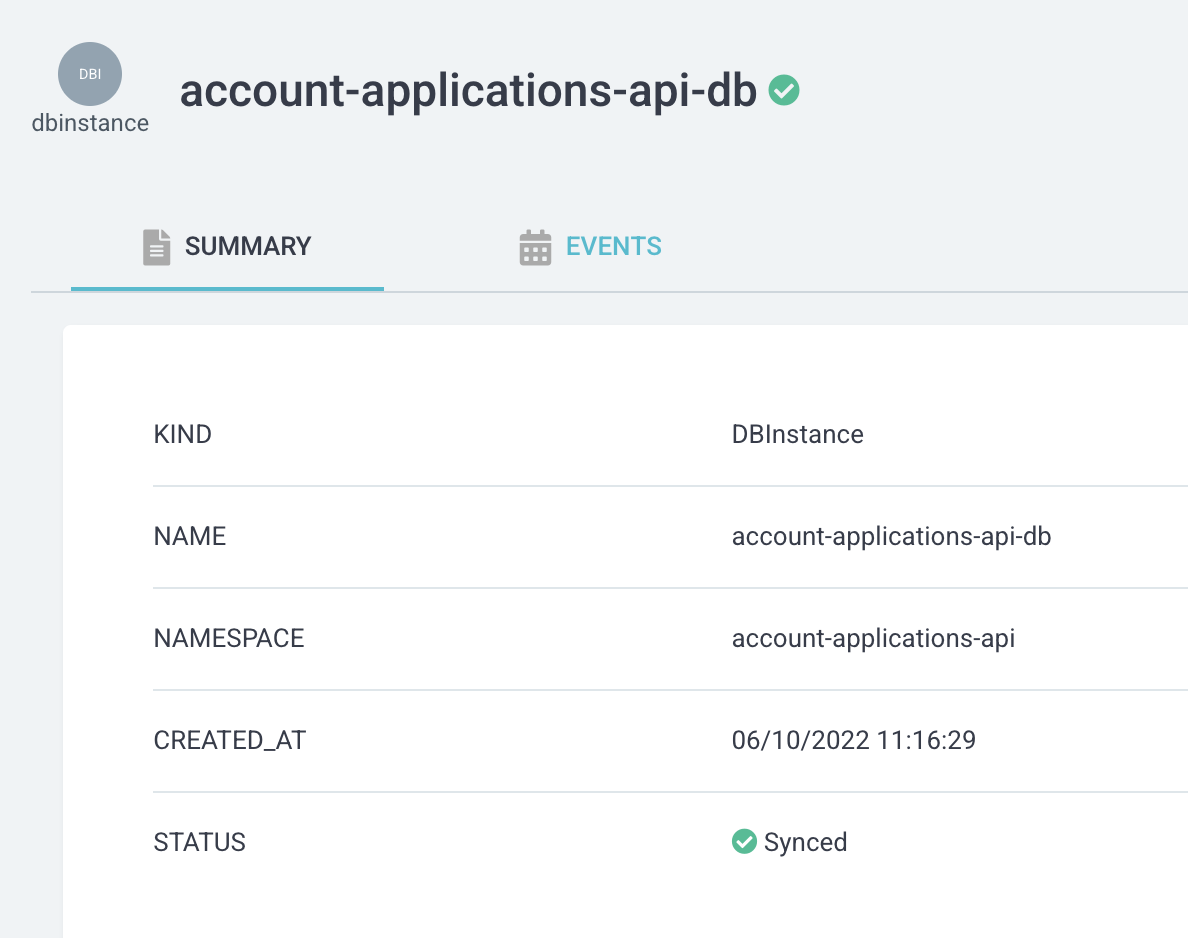

However, the DB instance appears synced in Argo CD.


The `Synced` status you're seeing there indicates that Argo CD has successfully synced the object from Git. The FieldExport only works once the ACK condition on the object itself (`ACK.ResourceSynced`) is `True`.
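To make the distinction concrete: Argo CD's `Synced` compares Git with the cluster, whereas the FieldExport looks at the conditions the ACK controller writes into the DBInstance's own `status`. The excerpt below is an illustrative sketch of what the relevant part of `kubectl get dbinstance account-applications-api-db -n account-applications-api -o yaml` would roughly look like once the controller considers the resource synced. Other condition fields are omitted, the endpoint value is a placeholder, and the exact shape may differ between controller versions.

```yaml
# Illustrative excerpt of a DBInstance status (not real output).
status:
  conditions:
    - type: ACK.ResourceSynced
      # The FieldExport waits for "True"; while it is not, the exporter logs
      # "the source resource is not synced yet" (see the logs above).
      status: "True"
  endpoint:
    address: "<DB endpoint hostname>"  # placeholder: the value read via .status.endpoint.address
```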

As discussed over Slack:

The RDS `DBInstance` resource has been faulty lately, struggling to reach `ACK.ResourceSynced = true` because of looping calls to `ModifyDBInstance`. The RDS maintainers and the ACK core team have been working together to figure out the best way to fix it, and have made a few commits towards getting it to work under all conditions. You upgraded to the latest version of the RDS controller, but it seems to still be looping. I will try to reproduce your spec and see if we can find a solution.

Alright. I've experimented with it a bit to see what's happening, and I have some updates. The RDS controller has issues reconciling `DBInstance` resources with `multiAZ: true` because of strange behaviour in the RDS APIs: they're sometimes asynchronous and sometimes synchronous. We have already been looking into this, but we are still trying to figure out how to handle the mix of these two responses within the code. If you disable `multiAZ` and re-create the instance, it should be able to reach a steady state after it is created. If that doesn't work, another thing I've identified is that `performanceInsightsEnabled` is initialised by the service later than we expect, so explicitly setting it to `false` might help as well.
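The two suggested workarounds, expressed as changes to the original `DBInstance` manifest, might look like the sketch below. Only the changed fields are shown; everything else stays as in the configuration from the issue. Whether `performanceInsightsEnabled: false` is still needed likely depends on the controller version.

```yaml
# Excerpt of the DBInstance spec with the suggested workarounds applied.
# Only the changed fields are shown; the rest of the spec is unchanged.
spec:
  multiAZ: false                     # avoids the ModifyDBInstance loop described above (requires re-creating the instance)
  performanceInsightsEnabled: false  # set explicitly so the value initialised later by the service is not treated as drift
```

Note that the original spec sets `deletionProtection: true`. RDS refuses to delete an instance while deletion protection is enabled, so it would need to be turned off before the instance can be re-created.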

related #1376

Issues go stale after 90d of inactivity.

Mark the issue as fresh with /remove-lifecycle stale.

Stale issues rot after an additional 30d of inactivity and eventually close.

If this issue is safe to close now please do so with /close.

Provide feedback via https://github.com/aws-controllers-k8s/community.

/lifecycle stale

/remove-lifecycle stale


Stale issues rot after 60d of inactivity.

Mark the issue as fresh with /remove-lifecycle rotten.

Rotten issues close after an additional 60d of inactivity.

If this issue is safe to close now please do so with /close.

Provide feedback via https://github.com/aws-controllers-k8s/community.

/lifecycle rotten

/remove-lifecycle rotten

Should be resolved by https://github.com/aws-controllers-k8s/rds-controller/pull/151