kube-arangodb

Resource requests/limits not reflected in pods

I have specified resource requests and limits for each of the components in my ArangoDeployment YAML definition, but they are not reflected in the actual DB cluster pods.

Here's my ArangoDeployment definition:

apiVersion: "database.arangodb.com/v1alpha"
kind: "ArangoDeployment"
metadata:
  name: arangodb-cluster
  namespace: graph
spec:
  mode: Cluster
  environment: Production
  image: "arangodb/arangodb:3.4.1"
  imagePullPolicy: IfNotPresent
  externalAccess:
    type: None
  # disable TLS
  tls:
    caSecretName: None
  agents:
    count: 3
    storageClassName: storageclass-slow-pv
    resources:
      requests:
        cpu: "500m"
        memory: "3Gi"
        storage: 8Gi
      limits:
        memory: "8Gi"
  dbservers:
    count: 3
    storageClassName: storageclass-slow-pv
    resources:
      requests:
        cpu: "500m"
        memory: "3Gi"
        storage: 8Gi
      limits:
        memory: "8Gi"
  coordinators:
    count: 3
    resources:
      requests:
        memory: "500Mi"
        cpu: "200m"
      limits:
        memory: 2Gi

Here's the output of kubectl describe arangodeployment arangodb-cluster:

Name: arangodb-cluster

Namespace: graph

Labels: <none>

Annotations: kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"database.arangodb.com/v1alpha","kind":"ArangoDeployment","metadata":{"annotations":{},"name":"arangodb-cluster","namespace":"graph"},"sp...

API Version: database.arangodb.com/v1alpha

Kind: ArangoDeployment

Metadata:

Cluster Name:

Creation Timestamp: 2019-01-15T18:13:05Z

Finalizers:

database.arangodb.com/remove-child-finalizers

Generation: 1

Resource Version: 170432

Self Link: /apis/database.arangodb.com/v1alpha/namespaces/graph/arangodeployments/arangodb-cluster

UID: 343fc03c-18f1-11e9-a02e-42010a8e0025

Spec:

Agents:

Count: 3

Resources:

Limits:

Memory: 8Gi

Requests:

Cpu: 500m

Memory: 3Gi

Storage: 8Gi

Storage Class Name: storageclass-slow-pv

Auth:

Jwt Secret Name: arangodb-cluster-jwt

Chaos:

Interval: 60000000000

Kill - Pod - Probability: 50

Coordinators:

Count: 3

Resources:

Limits:

Memory: 2Gi

Requests:

Cpu: 200m

Memory: 500Mi

Dbservers:

Count: 3

Resources:

Limits:

Memory: 8Gi

Requests:

Cpu: 500m

Memory: 3Gi

Storage: 8Gi

Storage Class Name: storageclass-slow-pv

Environment: Production

External Access:

Type: None

Image: arangodb/arangodb:3.4.1

Image Pull Policy: IfNotPresent

License:

Mode: Cluster

Rocksdb:

Encryption:

Single:

Resources:

Requests:

Storage: 8Gi

Storage Engine: RocksDB

Sync:

Auth:

Client CA Secret Name: arangodb-cluster-sync-client-auth-ca

Jwt Secret Name: arangodb-cluster-sync-jwt

External Access:

Monitoring:

Token Secret Name: arangodb-cluster-sync-mt

Tls:

Ca Secret Name: arangodb-cluster-sync-ca

Ttl: 2610h

Syncmasters:

Resources:

Syncworkers:

Resources:

Tls:

Ca Secret Name: None

Ttl: 2610h

Status:

Accepted - Spec:

Agents:

Count: 3

Resources:

Limits:

Memory: 8Gi

Requests:

Cpu: 500m

Memory: 3Gi

Storage: 8Gi

Storage Class Name: storageclass-slow-pv

Auth:

Jwt Secret Name: arangodb-cluster-jwt

Chaos:

Interval: 60000000000

Kill - Pod - Probability: 50

Coordinators:

Count: 3

Resources:

Limits:

Memory: 2Gi

Requests:

Cpu: 200m

Memory: 500Mi

Dbservers:

Count: 3

Resources:

Limits:

Memory: 8Gi

Requests:

Cpu: 500m

Memory: 3Gi

Storage: 8Gi

Storage Class Name: storageclass-slow-pv

Environment: Production

External Access:

Type: None

Image: arangodb/arangodb:3.4.1

Image Pull Policy: IfNotPresent

License:

Mode: Cluster

Rocksdb:

Encryption:

Single:

Resources:

Requests:

Storage: 8Gi

Storage Engine: RocksDB

Sync:

Auth:

Client CA Secret Name: arangodb-cluster-sync-client-auth-ca

Jwt Secret Name: arangodb-cluster-sync-jwt

External Access:

Monitoring:

Token Secret Name: arangodb-cluster-sync-mt

Tls:

Ca Secret Name: arangodb-cluster-sync-ca

Ttl: 2610h

Syncmasters:

Resources:

Syncworkers:

Resources:

Tls:

Ca Secret Name: None

Ttl: 2610h

Arangodb - Images:

Arangodb - Version: 3.4.1

Image: arangodb/arangodb:3.4.1

Image - Id: arangodb/arangodb@sha256:506de08ffff39efceee73ad784d43f383d33acff28bc5e5fcaf7871b68598af6

Conditions:

Last Transition Time: 2019-01-15T18:13:48Z

Last Update Time: 2019-01-15T18:13:48Z

Status: True

Type: Ready

Current - Image:

Arangodb - Version: 3.4.1

Image: arangodb/arangodb:3.4.1

Image - Id: arangodb/arangodb@sha256:506de08ffff39efceee73ad784d43f383d33acff28bc5e5fcaf7871b68598af6

Members:

Agents:

Conditions:

Last Transition Time: 2019-01-15T18:13:32Z

Last Update Time: 2019-01-15T18:13:32Z

Reason: Pod Ready

Status: True

Type: Ready

Created - At: 2019-01-15T18:13:09Z

Id: AGNT-aq7bpo1h

Initialized: true

Persistent Volume Claim Name: arangodb-cluster-agent-aq7bpo1h

Phase: Created

Pod Name: arangodb-cluster-agnt-aq7bpo1h-cd6817

Recent - Terminations: <nil>

Conditions:

Last Transition Time: 2019-01-15T18:13:35Z

Last Update Time: 2019-01-15T18:13:35Z

Reason: Pod Ready

Status: True

Type: Ready

Created - At: 2019-01-15T18:13:09Z

Id: AGNT-gikhke17

Initialized: true

Persistent Volume Claim Name: arangodb-cluster-agent-gikhke17

Phase: Created

Pod Name: arangodb-cluster-agnt-gikhke17-cd6817

Recent - Terminations: <nil>

Conditions:

Last Transition Time: 2019-01-15T18:13:35Z

Last Update Time: 2019-01-15T18:13:35Z

Reason: Pod Ready

Status: True

Type: Ready

Created - At: 2019-01-15T18:13:09Z

Id: AGNT-qlqxr0wb

Initialized: true

Persistent Volume Claim Name: arangodb-cluster-agent-qlqxr0wb

Phase: Created

Pod Name: arangodb-cluster-agnt-qlqxr0wb-cd6817

Recent - Terminations: <nil>

Coordinators:

Conditions:

Last Transition Time: 2019-01-15T18:13:48Z

Last Update Time: 2019-01-15T18:13:48Z

Reason: Pod Ready

Status: True

Type: Ready

Created - At: 2019-01-15T18:13:09Z

Id: CRDN-bbzgsjgx

Initialized: true

Phase: Created

Pod Name: arangodb-cluster-crdn-bbzgsjgx-cd6817

Recent - Terminations: <nil>

Conditions:

Last Transition Time: 2019-01-15T18:13:48Z

Last Update Time: 2019-01-15T18:13:48Z

Reason: Pod Ready

Status: True

Type: Ready

Created - At: 2019-01-15T18:13:09Z

Id: CRDN-eemikwqt

Initialized: true

Phase: Created

Pod Name: arangodb-cluster-crdn-eemikwqt-cd6817

Recent - Terminations: <nil>

Conditions:

Last Transition Time: 2019-01-15T18:13:48Z

Last Update Time: 2019-01-15T18:13:48Z

Reason: Pod Ready

Status: True

Type: Ready

Created - At: 2019-01-15T18:13:09Z

Id: CRDN-rvtlbpcd

Initialized: true

Phase: Created

Pod Name: arangodb-cluster-crdn-rvtlbpcd-cd6817

Recent - Terminations: <nil>

Dbservers:

Conditions:

Last Transition Time: 2019-01-15T18:13:45Z

Last Update Time: 2019-01-15T18:13:45Z

Reason: Pod Ready

Status: True

Type: Ready

Created - At: 2019-01-15T18:13:09Z

Id: PRMR-cfnpziua

Initialized: true

Persistent Volume Claim Name: arangodb-cluster-dbserver-cfnpziua

Phase: Created

Pod Name: arangodb-cluster-prmr-cfnpziua-cd6817

Recent - Terminations: <nil>

Conditions:

Last Transition Time: 2019-01-15T18:13:45Z

Last Update Time: 2019-01-15T18:13:45Z

Reason: Pod Ready

Status: True

Type: Ready

Created - At: 2019-01-15T18:13:09Z

Id: PRMR-twj3x9cp

Initialized: true

Persistent Volume Claim Name: arangodb-cluster-dbserver-twj3x9cp

Phase: Created

Pod Name: arangodb-cluster-prmr-twj3x9cp-cd6817

Recent - Terminations: <nil>

Conditions:

Last Transition Time: 2019-01-15T18:13:38Z

Last Update Time: 2019-01-15T18:13:38Z

Reason: Pod Ready

Status: True

Type: Ready

Created - At: 2019-01-15T18:13:09Z

Id: PRMR-yyfotd3d

Initialized: true

Persistent Volume Claim Name: arangodb-cluster-dbserver-yyfotd3d

Phase: Created

Pod Name: arangodb-cluster-prmr-yyfotd3d-cd6817

Recent - Terminations: <nil>

Phase: Running

Secret - Hashes:

Auth - Jwt: f4d2be72324436cf66abb583ae4b0eb0337f424557c5932a4d1c2a2890e84f5c

Service Name: arangodb-cluster

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal New Coordinator Added 10m arango-deployment-operator-664c5d8498-kmdwl New coordinator CRDN-bbzgsjgx added to deployment

Normal New Agent Added 10m arango-deployment-operator-664c5d8498-kmdwl New agent AGNT-aq7bpo1h added to deployment

Normal New Agent Added 10m arango-deployment-operator-664c5d8498-kmdwl New agent AGNT-gikhke17 added to deployment

Normal New Dbserver Added 10m arango-deployment-operator-664c5d8498-kmdwl New dbserver PRMR-yyfotd3d added to deployment

Normal New Dbserver Added 10m arango-deployment-operator-664c5d8498-kmdwl New dbserver PRMR-cfnpziua added to deployment

Normal New Dbserver Added 10m arango-deployment-operator-664c5d8498-kmdwl New dbserver PRMR-twj3x9cp added to deployment

Normal New Coordinator Added 10m arango-deployment-operator-664c5d8498-kmdwl New coordinator CRDN-eemikwqt added to deployment

Normal New Coordinator Added 10m arango-deployment-operator-664c5d8498-kmdwl New coordinator CRDN-rvtlbpcd added to deployment

Normal New Agent Added 10m arango-deployment-operator-664c5d8498-kmdwl New agent AGNT-qlqxr0wb added to deployment

Normal Pod Of Agent Created 9m arango-deployment-operator-664c5d8498-kmdwl Pod arangodb-cluster-agnt-gikhke17-cd6817 of member agent is created

Normal Pod Of Agent Created 9m arango-deployment-operator-664c5d8498-kmdwl Pod arangodb-cluster-agnt-aq7bpo1h-cd6817 of member agent is created

Normal Pod Of Agent Created 9m arango-deployment-operator-664c5d8498-kmdwl Pod arangodb-cluster-agnt-qlqxr0wb-cd6817 of member agent is created

Normal Pod Of Dbserver Created 9m arango-deployment-operator-664c5d8498-kmdwl Pod arangodb-cluster-prmr-cfnpziua-cd6817 of member dbserver is created

Normal Pod Of Dbserver Created 9m arango-deployment-operator-664c5d8498-kmdwl Pod arangodb-cluster-prmr-twj3x9cp-cd6817 of member dbserver is created

Normal Pod Of Dbserver Created 9m arango-deployment-operator-664c5d8498-kmdwl Pod arangodb-cluster-prmr-yyfotd3d-cd6817 of member dbserver is created

Normal Pod Of Coordinator Created 9m arango-deployment-operator-664c5d8498-kmdwl Pod arangodb-cluster-crdn-bbzgsjgx-cd6817 of member coordinator is created

Normal Pod Of Coordinator Created 9m arango-deployment-operator-664c5d8498-kmdwl Pod arangodb-cluster-crdn-eemikwqt-cd6817 of member coordinator is created

Normal Pod Of Coordinator Created 9m arango-deployment-operator-664c5d8498-kmdwl Pod arangodb-cluster-crdn-rvtlbpcd-cd6817 of member coordinator is created

And finally, here's the description for one of the cluster pods (a dbserver):

Name: arangodb-cluster-prmr-cfnpziua-cd6817

Namespace: graph

Node: gke-resource-requests-pool-12d906ca-764w/10.142.15.215

Start Time: Tue, 15 Jan 2019 10:13:21 -0800

Labels: app=arangodb

arango_deployment=arangodb-cluster

role=dbserver

Annotations: <none>

Status: Running

IP: 10.56.0.31

Controlled By: ArangoDeployment/arangodb-cluster

Init Containers:

init-lifecycle:

Container ID: docker://bcbbeb10d995ce0aef58db76b50705743345584f3a4ea2314898f544b69c867f

Image: arangodb/kube-arangodb:0.3.7

Image ID: docker-pullable://arangodb/kube-arangodb@sha256:ae991f94aef9ff25c09b59aa7243155be0ee554a6cc252c59fe3a63d070af42b

Port: <none>

Host Port: <none>

Command:

/usr/bin/arangodb_operator

lifecycle

copy

--target

/lifecycle/tools

State: Terminated

Reason: Completed

Exit Code: 0

Started: Tue, 15 Jan 2019 10:13:40 -0800

Finished: Tue, 15 Jan 2019 10:13:40 -0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/lifecycle/tools from lifecycle (rw)

/var/run/secrets/kubernetes.io/serviceaccount from default-token-s67m7 (ro)

uuid:

Container ID: docker://c77b4fa17c9c8e5bd78dfc5472175466aa67132989b8ca296623f355b77da019

Image: alpine:3.7

Image ID: docker-pullable://alpine@sha256:accb17fd002f68aa8fdcc32e106869b5b72fcfde288dd79d0aca18cd1dd73ac2

Port: <none>

Host Port: <none>

Command:

/bin/sh

-c

test -f /data/UUID || echo 'PRMR-cfnpziua' > /data/UUID

State: Terminated

Reason: Completed

Exit Code: 0

Started: Tue, 15 Jan 2019 10:13:41 -0800

Finished: Tue, 15 Jan 2019 10:13:41 -0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/data from arangod-data (rw)

/var/run/secrets/kubernetes.io/serviceaccount from default-token-s67m7 (ro)

Containers:

server:

Container ID: docker://53adc2e35571b15c4db438e657716a2f904de2ceb181f732a6f9c74f9585d620

Image: arangodb/arangodb@sha256:506de08ffff39efceee73ad784d43f383d33acff28bc5e5fcaf7871b68598af6

Image ID: docker-pullable://arangodb/arangodb@sha256:506de08ffff39efceee73ad784d43f383d33acff28bc5e5fcaf7871b68598af6

Port: 8529/TCP

Host Port: 0/TCP

Command:

/usr/sbin/arangod

--cluster.agency-endpoint=tcp://arangodb-cluster-agent-aq7bpo1h.arangodb-cluster-int.graph.svc:8529

--cluster.agency-endpoint=tcp://arangodb-cluster-agent-gikhke17.arangodb-cluster-int.graph.svc:8529

--cluster.agency-endpoint=tcp://arangodb-cluster-agent-qlqxr0wb.arangodb-cluster-int.graph.svc:8529

--cluster.my-address=tcp://arangodb-cluster-dbserver-cfnpziua.arangodb-cluster-int.graph.svc:8529

--cluster.my-role=PRIMARY

--database.directory=/data

--foxx.queues=false

--log.level=INFO

--log.output=+

--server.authentication=true

--server.endpoint=tcp://[::]:8529

--server.jwt-secret=$(ARANGOD_JWT_SECRET)

--server.statistics=true

--server.storage-engine=rocksdb

State: Running

Started: Tue, 15 Jan 2019 10:13:42 -0800

Ready: True

Restart Count: 0

Liveness: http-get http://:8529/_api/version delay=30s timeout=2s period=10s #success=1 #failure=3

Environment:

ARANGOD_JWT_SECRET: <set to the key 'token' in secret 'arangodb-cluster-jwt'> Optional: false

MY_POD_NAME: arangodb-cluster-prmr-cfnpziua-cd6817 (v1:metadata.name)

MY_POD_NAMESPACE: graph (v1:metadata.namespace)

Mounts:

/data from arangod-data (rw)

/lifecycle/tools from lifecycle (rw)

/var/run/secrets/kubernetes.io/serviceaccount from default-token-s67m7 (ro)

Conditions:

Type Status

Initialized True

Ready True

PodScheduled True

Volumes:

arangod-data:

Type: PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)

ClaimName: arangodb-cluster-dbserver-cfnpziua

ReadOnly: false

lifecycle:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium:

default-token-s67m7:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-s67m7

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.alpha.kubernetes.io/unreachable:NoExecute for 300s

node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 14m default-scheduler Successfully assigned arangodb-cluster-prmr-cfnpziua-cd6817 to gke-resource-requests-pool-12d906ca-764w

Normal SuccessfulMountVolume 14m kubelet, gke-resource-requests-pool-12d906ca-764w MountVolume.SetUp succeeded for volume "lifecycle"

Normal SuccessfulMountVolume 14m kubelet, gke-resource-requests-pool-12d906ca-764w MountVolume.SetUp succeeded for volume "default-token-s67m7"

Normal SuccessfulAttachVolume 14m attachdetach-controller AttachVolume.Attach succeeded for volume "pvc-38dd05a8-18f1-11e9-a02e-42010a8e0025"

Normal SuccessfulMountVolume 13m kubelet, gke-resource-requests-pool-12d906ca-764w MountVolume.SetUp succeeded for volume "pvc-38dd05a8-18f1-11e9-a02e-42010a8e0025"

Normal Pulled 13m kubelet, gke-resource-requests-pool-12d906ca-764w Container image "arangodb/kube-arangodb:0.3.7" already present on machine

Normal Created 13m kubelet, gke-resource-requests-pool-12d906ca-764w Created container

Normal Started 13m kubelet, gke-resource-requests-pool-12d906ca-764w Started container

Normal Pulled 13m kubelet, gke-resource-requests-pool-12d906ca-764w Container image "alpine:3.7" already present on machine

Normal Created 13m kubelet, gke-resource-requests-pool-12d906ca-764w Created container

Normal Started 13m kubelet, gke-resource-requests-pool-12d906ca-764w Started container

Normal Pulled 13m kubelet, gke-resource-requests-pool-12d906ca-764w Container image "arangodb/arangodb@sha256:506de08ffff39efceee73ad784d43f383d33acff28bc5e5fcaf7871b68598af6" already present on machine

Normal Created 13m kubelet, gke-resource-requests-pool-12d906ca-764w Created container

Normal Started 13m kubelet, gke-resource-requests-pool-12d906ca-764w Started container

with no mention of resource requests/limits.
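For comparison, here is a rough sketch of the stanza one would expect to find on the dbserver pod's `server` container (for example via `kubectl get pod arangodb-cluster-prmr-cfnpziua-cd6817 -n graph -o yaml`) if the values from the ArangoDeployment above had been propagated. The layout is an assumption based on the standard Kubernetes container spec, not output captured from this cluster. The `storage` request is left out because it would apply to the PersistentVolumeClaim rather than to the container.

```yaml
# Expected (not observed) resources on the dbserver "server" container,
# using the dbservers values from the ArangoDeployment definition above.
containers:
  - name: server
    resources:
      requests:
        cpu: 500m
        memory: 3Gi
      limits:
        memory: 8Gi
```

With a stanza like this present, the pod would normally be reported with QoS class `Burstable`. The `BestEffort` class in the description above is consistent with no container in the pod having any requests or limits set.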

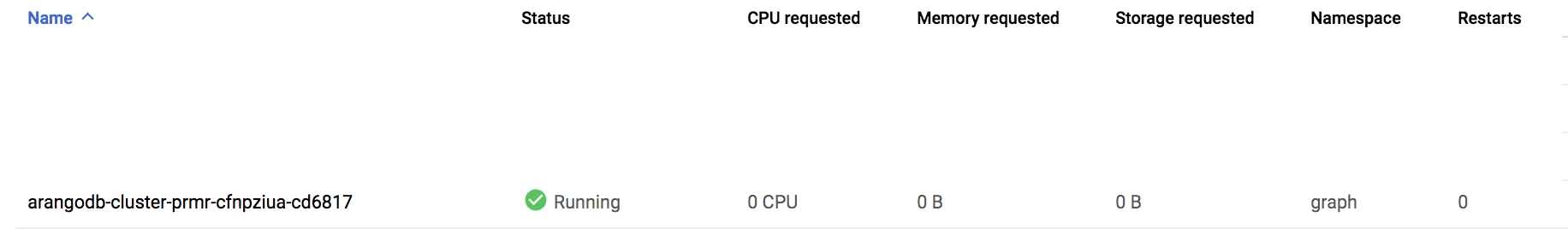

Also, on GKE I can see there are not requests for the given pod -

(sorry for the long logs - I tried the collapsible <details> but I couldn't get it to work and moved on)

Am I defining anything incorrectly or is this a bug?

@rglyons it's been fixed in 0.3.10 (https://github.com/arangodb/kube-arangodb/commit/fd917007c0845a033472bc97e86c1e389cf77f51).

This still seems to be an issue in 1.0.1.

@jebbench I haven't tested 1.0.1 specifically yet, but from my experience deploying to multiple clusters with ResourceQuotas enabled, you're likely to have issues only with the init lifecycle containers; the rest should work just fine. As a workaround, you might want to use a LimitRange for now.
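A minimal sketch of what such a LimitRange could look like is shown below. The object name and all CPU/memory values are illustrative assumptions, and the `graph` namespace is simply the one used in the original report. The idea is that containers created without their own requests or limits (such as the init containers) receive these defaults at admission time, which should let them pass a ResourceQuota check.

```yaml
# Hypothetical LimitRange; the name and every value here are assumptions to adjust.
apiVersion: v1
kind: LimitRange
metadata:
  name: arangodb-container-defaults
  namespace: graph
spec:
  limits:
    - type: Container
      # Applied to containers that do not declare their own requests.
      defaultRequest:
        cpu: 100m
        memory: 128Mi
      # Applied to containers that do not declare their own limits.
      default:
        cpu: 500m
        memory: 256Mi
```

Defaults from a LimitRange only affect pods created after it exists, so existing pods would need to be recreated to pick them up.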

I've been able to get it to work by passing both limits and requests for memory and storage; I'm not sure whether I had something wrong previously or whether it's required to specify all of the arguments.

agents:
  count: 3
  storageClassName: mercury-production-local-storage
  resources:
    requests:
      memory: 1Gi
      storage: 8Gi
    limits:
      memory: 1Gi
      storage: 8Gi
  nodeSelector:
    cloud.google.com/gke-local-ssd: "true"
single:
  count: 2
  storageClassName: mercury-production-local-storage
  resources:
    requests:
      memory: 8Gi
      storage: 150Gi
    limits:
      memory: 8Gi
      storage: 150Gi
  nodeSelector:
    cloud.google.com/gke-local-ssd: "true"

Results in:

Agent

name: server
resources:
  limits:
    memory: 1Gi
  requests:
    memory: 1Gi

Single

name: server
resources:
  limits:
    memory: 8Gi
  requests:
    memory: 8Gi

Single PVC

resources:
  limits:
    storage: 150Gi
  requests:
    storage: 150Gi

@jebbench I'm happy to hear that it works now :) As for the previous attempt, it's hard to say as we don't seem to have the exact test conditions recorded.