crawlee

CheerioCrawler cookies not set by 302 redirect response. Expected?

Describe the bug

When a CheerioCrawler request results in a redirect, the set-cookie header from the 302 response is not put into the cookie header of the subsequent request to the redirected-to URL. Many sites use a redirect to validate that a browser supports cookies, so crawling these sites will fail using CheerioCrawler, even if useSessionPool and persistCookiesPerSession are both true.

It's possible that I'm misunderstanding useSessionPool and persistCookiesPerSession. My assumption was that setting both of them to true would produce cookie behavior that emulates a real browser, where each response can set cookies that will automatically be sent back to the server in subsequent requests in the same session.

Is this how it is supposed to work? If not, how can I get response cookies into the next request, both when the "next request" is a redirect (so there's no call to handlePageFunction) and when it's a regular response? The Session.setCookiesFromResponse method looks promising, but I'm not sure where the session instance is accessible or when to call that method.

BTW, retaining cookies across requests is such a common use case that it'd be good to document it beyond this GitHub issue.

To Reproduce

    import Apify from 'apify';

    const { main, openRequestQueue, CheerioCrawler, utils } = Apify;

    utils.log.setLevel(utils.log.LEVELS.ERROR);

    main(async () => {
        const requestQueue = await openRequestQueue();
        await requestQueue.addRequest(
            { url: 'https://www.ventureloop.com/ventureloop/login.php' }
        );

        const crawler = new CheerioCrawler({
            maxRequestRetries: 1,
            requestQueue,
            useSessionPool: true,
            persistCookiesPerSession: true,
            preNavigationHooks: [
                async (cc) => {
                    const url = cc.request.url;
                    const cookie = cc.request.headers['cookie'];
                    console.log(`pre-nav: cookie is '${cookie}'`);
                }],
            postNavigationHooks: [
                async (cc) => {
                    const url = cc.request.url;
                    const cookie = cc.request.headers['cookie'];
                    console.log(`post-nav: cookie is '${cookie}'`);
                }],
            handlePageFunction: async ({ request, $ }) => {
                console.log('original URL: ', request.url);
                console.log('loaded URL: ', request.loadedUrl);
                console.log('Site output indicating cookies not retained:');
                console.log($('#formContainer').text().trim());
            },
            handleFailedRequestFunction: async (x) => {
                console.log('handleFailedRequestFunction: ', x.request.url);
                console.log(JSON.stringify(x.request.errorMessages));
            }
        });

        await crawler.run();
    });

Expected

Cookies set by the first request are sent back to the server when the redirected-to URL is requested.

Actual

The server complains that cookies are disabled on the client. See output below from the code above:

    pre-nav: cookie is 'undefined'
    post-nav: cookie is 'undefined'
    original URL: https://www.ventureloop.com/ventureloop/login.php
    loaded URL: https://www.ventureloop.com/ventureloop/message.php?source=login.php
    Site output indicating cookies not retained:
    We have detected that you do not have cookies enabled on your browser. Our system requires the use of cookies in order to proceed. Please contact us at Help if you have further questions (...more text is omitted)

System information:

- OS: MacOS

- Node.js version v16.7.0

- Apify SDK version 2.2.0

CheerioCrawler only gets access to the request and starts manipulating cookies after redirects. This could be deeper. @szmarczak got-scraping maybe?

We will look into this. Definitely a bug.

the response headers should be set-cookie instead of cookie

Hah, nice catch @pocesar. It indeed does display the cookies when set-cookie is used.

Might have nothing to do with how we handle cookies, but we should investigate anyway. Maybe there's just some JavaScript that would normally remove that warning, but since it's not being executed, the warning sticks.

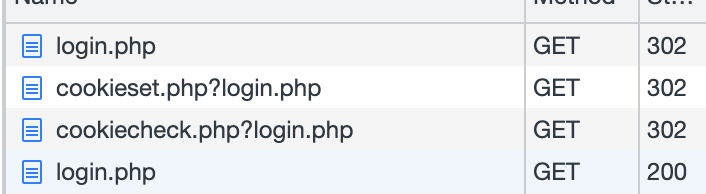

@pocesar Using cookie was intentional. The fact that it's undefined demonstrates the bug. In a normal browser, the cookie header is filled on that request because it was set by a previous redirected request. In a browser, loading that URL will actually require four HTTP requests:

- The first page detects that there's no cookie and redirects to cookieset.php

- cookieset.php sets the cookie and redirects to cookiecheck.php

- cookiecheck.php verifies that the session cookie exists. If it exists, then it redirects back to the original URL. However, if it doesn't exist, then the user agent doesn't support cookies and it redirects to an error page.

- Finally, either the original URL or the error page is returned to the user agent with a 200 code.
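The four steps above can be modeled without any network access. The sketch below is a toy illustration only: the function names `respond` and `follow`, the cookie name `sid`, and the response bodies are hypothetical, and the paths are simplified from the ones mentioned in this thread. It shows why a client that drops cookies set on a redirect response ends up on the error page.

```javascript
// Hypothetical model of the four-step cookie check described above.
// The cookie name "sid" and the response bodies are illustrative assumptions.
function respond(path, cookieHeader) {
  const hasCookie = /(^|;\s*)sid=/.test(cookieHeader || '');
  switch (path) {
    case '/login.php':
      // Step 1: no cookie yet, so send the client off to get one.
      return hasCookie
        ? { status: 200, body: 'login form' }
        : { status: 302, location: '/cookieset.php' };
    case '/cookieset.php':
      // Step 2: set the cookie on a redirect response.
      return { status: 302, location: '/cookiecheck.php', setCookie: 'sid=abc123' };
    case '/cookiecheck.php':
      // Step 3: verify the cookie came back.
      return { status: 302, location: hasCookie ? '/login.php' : '/message.php' };
    default:
      // Step 4 (failure branch): the error page.
      return { status: 200, body: 'cookies disabled' };
  }
}

// Follow redirects. When keepCookies is true, a cookie set by a redirect
// response is sent on the next hop, which is what a browser does.
function follow(startPath, keepCookies) {
  let path = startPath;
  let cookieHeader = '';
  const visited = [];
  for (let hop = 0; hop < 10; hop++) {
    visited.push(path);
    const res = respond(path, cookieHeader);
    if (keepCookies && res.setCookie) cookieHeader = res.setCookie;
    if (res.status !== 302) return { visited, body: res.body };
    path = res.location;
  }
  throw new Error('too many redirects');
}

console.log(follow('/login.php', true));  // ends back on /login.php
console.log(follow('/login.php', false)); // ends on /message.php
```

Both runs make four requests; only the run that replays the cookie between hops gets back to the original URL.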

From tracing into the lower-level code that actually does the HTTP fetch, the cookies set by step 2 aren't being sent back to the server in step 3. options.cookieJar is undefined at line 714 in the code linked below.

https://github.com/sindresorhus/got/blob/3a84454208e39aae7f2bae0bf68b7ede2872f317/source/core/index.ts#L713-L715

Note that I found this in a debug session in my own app (not the repro code in the OP), so it's possible that the repro has some other reason for cookies not being set, but given that the observable behavior is the same I'm assuming that the cookieJar option is also missing in the repro code too.

> Maybe there's just some JavaScript that would normally remove that warning, but since it's not being executed, the warning sticks.

@mnmkng Unless I'm misunderstanding how HTTP redirects work, once a browser sees the 302 response and the location header, it stops loading the page and doesn't execute any JS in the body of the response. So I'm not sure how what you're describing could be happening here.

I only gave it a minute, so I'm sure I missed things.

We will investigate this for sure.

IIRC the Apify SDK uses its own cookieJar to set the Cookie header instead of passing it to Got, so options.cookieJar being undefined is expected.

I found a workaround for the issue:

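    // Assumption: CookieJar is the one exported by the tough-cookie package.
    import { CookieJar } from 'tough-cookie';

    // Inside the CheerioCrawler options:
    preNavigationHooks: [
        async (crawlingContext, requestAsBrowserOptions) => {
            // Give got a jar so that cookies set by redirect responses are sent on the next hop.
            if (!requestAsBrowserOptions.cookieJar) requestAsBrowserOptions.cookieJar = new CookieJar();
        }
    ]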

When I add this hook, the page loads as expected and the "browser doesn't accept cookies" message doesn't show up in the output.

I haven't yet verified that Apify will retain the cookie for the next request. If it doesn't, then I assume I can work around that problem by extracting the cookie from the set-cookie header of the response and manually pushing that into later requests. (Although perhaps not in the pre-navigation hook because of #1266).
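For the manual fallback, here is a minimal sketch of turning set-cookie values into a cookie request header. The helper name `toCookieHeader` is hypothetical, and the sketch deliberately ignores attributes such as Domain, Path, Expires and Secure, so it is only reasonable when all requests go to the same site. A real cookie jar handles those rules properly.

```javascript
// Hypothetical helper, not part of the Apify SDK or got.
// Assumption: `setCookie` has the shape Node's http module uses for
// response.headers['set-cookie'], i.e. an array of strings (or undefined).
function toCookieHeader(setCookie) {
  const lines = Array.isArray(setCookie) ? setCookie : setCookie ? [setCookie] : [];
  const pairs = new Map();
  for (const line of lines) {
    // Keep only "name=value"; drop attributes like Path or HttpOnly.
    const pair = line.split(';')[0].trim();
    const eq = pair.indexOf('=');
    if (eq <= 0) continue; // skip malformed entries with no name
    // A later cookie with the same name overrides an earlier one.
    pairs.set(pair.slice(0, eq), pair.slice(eq + 1));
  }
  return [...pairs].map(([name, value]) => `${name}=${value}`).join('; ');
}

console.log(toCookieHeader(['foo=bar; Path=/; HttpOnly', 'sid=1; Secure']));
// prints "foo=bar; sid=1"
```

The resulting string could then be assigned to the cookie header of a later request, subject to the caveat about the pre-navigation hook mentioned above.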

> so options.cookieJar being undefined is expected.

@szmarczak For initial requests this makes sense because headers are produced by Apify. But for redirected requests, this cookieJar-free approach isn't working in 2.2.0. That doesn't mean that the right long-term solution is to use a CookieJar. For example, there may be some other got hook that allows reading redirect response headers and transforming those into request headers for the redirected-to URL. But if got doesn't offer such a hook, then perhaps Apify could put its cookies into a CookieJar, set that as the cookieJar of the request, and then extract the cookies from the jar after the response (including all redirects) is complete.

> Using cookie was intentional.

Actually, @pocesar is correct that responses will only have set-cookie. cookie was intentional but response. was a mistake in my repro code. It should have been request.cookie and I adjusted the OP code accordingly.

BTW, this may have exposed another bug, or at least a feature gap. What I'm looking to log is the cookie header sent in the last of the 4 different HTTP requests that are executed by loading that original URL. But even using the workaround above (which is successfully—meaning "like a browser"—handling cookies across redirects) request.cookie in the post-nav hook is still undefined. I'd have expected a way to get the cookie (and all the headers, for that matter) of the final request, not the initial request. Just like there's a url and originalUrl property, IMHO the same capability should exist for headers too.

I think we never really thought about redirect cookies so this is an omission on the SDK end. We wrongly assumed that redirect cookies would be sent by got, if it automatically handles redirects, but it also makes sense that it wouldn't do it, if you don't provide a cookieJar. A bit unexpected behavior, but not incorrect.

So we need to fix this on our end. Most likely similar to how you're doing it in the hot fix. Provide some temporary cookieJar for all requests and then extract the cookies out of there. @B4nan The important thing is to keep the cookies mapped to sessions and not leak them across requests.
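The idea of a temporary jar per request, with cookies kept per session, could look roughly like the sketch below. This is not the SDK's implementation: the class `SessionCookieStore` and its methods are hypothetical, and a plain Map stands in for a real cookie jar. It only illustrates the isolation property, namely that cookies collected during one session's redirects never reach another session.

```javascript
// Hypothetical sketch: a plain Map stands in for a real cookie jar.
class SessionCookieStore {
  constructor() {
    this.cookiesBySession = new Map(); // sessionId -> Map(name -> value)
  }

  // Create a throwaway jar for one request, seeded with a copy of the
  // session's current cookies.
  createRequestJar(sessionId) {
    return new Map(this.cookiesBySession.get(sessionId) ?? []);
  }

  // Once the response (including all redirects) is complete, copy the
  // jar's contents back into the session.
  commit(sessionId, jar) {
    this.cookiesBySession.set(sessionId, new Map(jar));
  }
}

const store = new SessionCookieStore();

// Session A: a redirect response sets foo=bar while the request is in flight.
const jarA = store.createRequestJar('session-a');
jarA.set('foo', 'bar');
store.commit('session-a', jarA);

// Session B starts from its own empty state and never sees A's cookie.
const jarB = store.createRequestJar('session-b');

console.log(store.createRequestJar('session-a').get('foo')); // bar
console.log(jarB.has('foo')); // false
```

Copying on both `createRequestJar` and `commit` means a failed or abandoned request cannot leave half-written cookies in the session.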

Minimal repro:

    // $ rm -rf apify_storage && node index.js

    import http from 'http';
    import { CheerioCrawler } from './packages/cheerio-crawler/dist/index.mjs';

    http.createServer((request, response) => {
        response.setHeader('content-type', 'text/html');

        if (request.url === '/') {
            response.statusCode = 302;
            response.setHeader('set-cookie', 'foo=bar');
            response.setHeader('location', 'foo');
            response.end();
            return;
        }

        response.end(JSON.stringify(request.headers['cookie']));
    }).listen(8888).unref();

    const crawler = new CheerioCrawler({
        useSessionPool: true,
        persistCookiesPerSession: true,
        handlePageFunction: async ({ body }) => {
            console.log(body);
        },
    });

    await crawler.run(['http://localhost:8888']);

So the issue is that Crawlee allows the modification of the cookie header. However, in order to support cookies for redirects, we'd have to use the cookieJar Got option, which will make it impossible to modify the cookie header outside of the cookie jar.