SwiftPackageIndex-Server


Package Ratings/Quality Score

We should use our score to derive a package rating from 1 to 5 stars and expose it through the JSON-LD data so that packages get a little rating in Google search results, like this:

Ummm, are we sure we want to step into the ratings ring? 😅

In some ways, we're already rating packages with our score. Yes, that score isn't published right now, but we plan to expose it in some way, at some point, as the public quality score.

I can see an argument that says this is weird if we do this work before the quality score, but it's not completely out there, I don't think.

To be clear, I'm not suggesting user-contributed ratings at all (other than stars as part of the score). My thought here was that we'd split the range of valid scores into 5 segments and that's the rating. So for example, if our max score is something like 88 (I'm not 100% sure, but it's that order of magnitude), then the bands look something like:

- 0-17 - 1 star

- 17-34 - 2 stars

- 34-51 - 3 stars

- 51-68 - 4 stars

- Above 68 - 5 stars

Or, given that I don't think it's possible to score zero we could adjust that, but also it'd be fine if it wasn't possible to award one star. Details are most definitely TBD, but this is some more of what I was thinking about this feature.
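A minimal sketch of the equal-width banding described above. The function name `equalBandStars` and its parameters are hypothetical, the maximum of 88 is the rough estimate from this comment, and the rule that a shared boundary (17, 34, ...) belongs to the lower band is an assumption chosen to match "Above 68 - 5 stars".

```swift
/// Hypothetical helper: maps a score to 1...5 stars using equal-width bands.
/// `maxScore` defaults to 88, the rough estimate from the discussion.
func equalBandStars(score: Int, maxScore: Int = 88, bandCount: Int = 5) -> Int {
    precondition(bandCount > 0 && maxScore >= bandCount, "need at least one point per band")
    // Integer division gives the band width: 88 / 5 = 17.
    let bandWidth = maxScore / bandCount
    // Clamp so out-of-range scores still land in a valid band.
    let clamped = min(max(score, 0), maxScore)
    // Subtracting 1 assigns a boundary value (17, 34, ...) to the lower band.
    let bandIndex = max(clamped - 1, 0) / bandWidth
    // Leftover points at the top (86...88 here) fold into the highest band.
    return min(bandIndex + 1, bandCount)
}
```

With these defaults a score of 17 gets 1 star, 18 gets 2 stars, 68 gets 4 stars, and anything from 69 up gets 5 stars.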

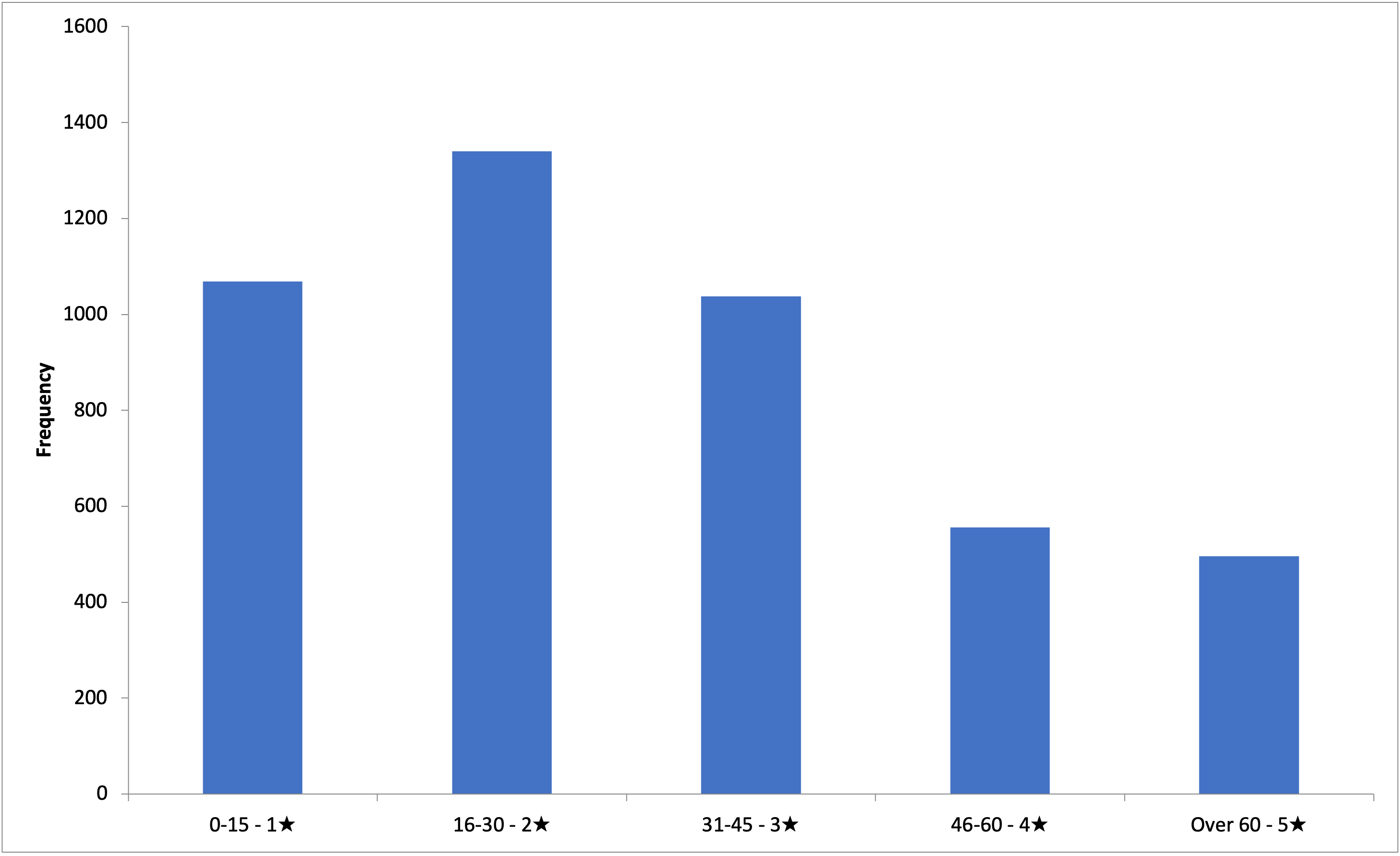

Alright, I just did some more investigation into this and here's what it'd look like. Our top score is currently 87 points, but there are very few packages that make it that high.

I started with some investigation into the score distribution across all packages in the index. After a bit of tweaking, I ended up with the following bins:

| Range | Stars |
|---|---|
| 0-15 | ⭐️ |
| 16-30 | ⭐️⭐️ |
| 31-45 | ⭐️⭐️⭐️ |
| 46-60 | ⭐️⭐️⭐️⭐️ |
| Over 60 | ⭐️⭐️⭐️⭐️⭐️ |
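As a rough sketch, the bins in this table could be expressed as a single `switch`. The function name `binnedStars` is hypothetical, and treating negative scores as 1 star is an assumption, since the table starts at 0.

```swift
/// Hypothetical helper: maps a score to a star count using the tuned bins
/// from the table (0-15, 16-30, 31-45, 46-60, over 60).
func binnedStars(score: Int) -> Int {
    switch score {
    case ...15:   return 1  // 0-15 (negative scores also land here, by assumption)
    case 16...30: return 2
    case 31...45: return 3
    case 46...60: return 4
    default:      return 5  // over 60
    }
}
```

Keeping the thresholds in one place like this would make them easy to retune if the score distribution shifts.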

Which looks like this:

Next up, I had a look at some packages at the top end of each bin to see if I agreed with their star rating:

Score 15 - 1 Star

Of these, PerfectZip is the best as it still compiles with Swift 5.6, but with 20 stars and no commits in four years, I don’t think 1 star is unfair.

Score 30 - 2 Star

The first package here seems out of line. Yes, it has a low number of stars but other than that this package looks good. It has tests (which we don’t check for), it compiles with recent versions of Swift (which we don’t currently check for), it has a lengthy README file, and it was updated less than a month ago. This feels wrong.

The other packages here don’t feel quite as wrong, but I think there’s work we could do to make these better.

Score 45 - 3 Star

These don’t feel completely out of line. They’re all reasonable packages by the look of things. Two have fairly low numbers of stars, and the other has not had a release in about 18 months.

Score 60 - 4 Star

Argo seems out of line here, primarily having this score because of the number of stars it has. It hasn’t seen a commit in two years and the README still talks about Swift 4.x compatibility.

Score 60+ - 5 Star

I won’t comment here. No one is going to be upset to be ranked 5 stars.

Conclusions

Overall, before we implement this we need to give people a way to improve their ratings that doesn't depend so heavily on the number of stars, which are hard to get and are not the most important indicator of a package’s quality.

I suggest we look back at this when the following tickets are complete:

- #1513 - Supports latest Swift version

- #317 - Does a package include tests?

- #1691 - README length/complexity

- #1692 - Number of contributors

I predict that these changes would shift the distribution of the histogram to the right by quite a bit, which would be a good thing.

I also just renamed this issue. The more I've thought about this today the more I'm convinced that this is our "Package Quality Score" that we've been talking about. Yes, it would then be used as a "rating" for SEO, but it's more than that.
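For the SEO side, here is a rough sketch of how a star rating might be serialised as a schema.org `Rating` object for embedding in JSON-LD. The `RatingLD` type and `ratingJSONLD` function are hypothetical, and this does not check which property of the package's JSON-LD the object would nest under, or whether search engines would display stars for this shape.

```swift
import Foundation

/// Hypothetical model for a schema.org `Rating` object (not existing project code).
struct RatingLD: Encodable {
    let type = "Rating"
    let ratingValue: Int
    let bestRating = 5   // top of the 1-5 star scale
    let worstRating = 1  // bottom of the 1-5 star scale

    enum CodingKeys: String, CodingKey {
        case type = "@type"  // JSON-LD uses "@type" as the key name
        case ratingValue, bestRating, worstRating
    }
}

/// Encodes a star count as a JSON string with deterministic key order.
func ratingJSONLD(stars: Int) throws -> String {
    let encoder = JSONEncoder()
    encoder.outputFormatting = [.sortedKeys]
    let data = try encoder.encode(RatingLD(ratingValue: stars))
    return String(decoding: data, as: UTF8.self)
}
```

The star count passed in would come from whichever score-to-stars mapping we settle on.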