Neuraxle

Feature: BaseStep.introspect()

As pitched in the Neuraxle video on YouTube, it should be possible to introspect models.

Calling .introspect() on a step that has been fitted could return information about the step's current state.

- Default return value: None. Alternatively, an empty dict, an empty list, or an empty object of type FeatureDataContainer would be cool.

Example usage:

- Someone trains a model: model.fit(X, y).

- Someone calls some_info_about_model = model.introspect(X, y). The some_info_about_model could be, for example, the mean of the weights of each of the neural network's layers, the mean and std of each neuron's activation, a list of train scores and a list of validation scores, and so on.
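A minimal plain-Python sketch of the behaviour described above: introspect() returns the default None before fitting, and a dict of per-layer weight means plus train/validation score lists afterwards. `IntrospectableStep`, its attributes and the fake "training" values are hypothetical illustrations, not Neuraxle's API.

```python
from statistics import fmean
from typing import Dict, List, Optional


class IntrospectableStep:
    """Hypothetical step: introspect() returns None until the step is fitted."""

    def __init__(self) -> None:
        self.layer_weights: Optional[Dict[str, List[float]]] = None
        self.train_scores: List[float] = []
        self.validation_scores: List[float] = []

    def fit(self, X, y) -> "IntrospectableStep":
        # Placeholder "training": store fake per-layer weights and per-epoch scores.
        self.layer_weights = {"layer_0": [0.1, -0.2, 0.4], "layer_1": [0.5, 0.3]}
        self.train_scores = [0.9, 0.6, 0.4]
        self.validation_scores = [1.0, 0.8, 0.7]
        return self

    def introspect(self, X=None, y=None) -> Optional[dict]:
        # X and y are accepted to mirror model.introspect(X, y); unused in this sketch.
        if self.layer_weights is None:
            return None  # default value for a step that has not been fitted
        return {
            "weights_mean": {name: fmean(w) for name, w in self.layer_weights.items()},
            "train_scores": list(self.train_scores),
            "validation_scores": list(self.validation_scores),
        }
```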

Wild idea: make introspect also score the model and bundle that score in the container. Example:

f: FeatureDataContainer = model.introspect(X, y, score_method). The FeatureDataContainer.data_inputs would contain some_info_about_model, and FeatureDataContainer.expected_outputs would contain the score obtained from score_method(y, y_pred), or something like that. The FeatureDataContainer would then in fact be an aggregate of other FeatureDataContainers (as it may come from a nested pipeline of pipelines).
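A sketch of the "introspect and score" idea, assuming FeatureDataContainer is a simple dataclass with `data_inputs`, `expected_outputs` and a hypothetical `children` list for nested pipelines. In a real step, y_pred would come from the model's own predictions on X; here it is passed in directly, and `introspect_and_score` and `mean_squared_error` are illustrative helpers.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List, Optional, Sequence


@dataclass
class FeatureDataContainer:
    """Hypothetical container: introspection info plus a score, possibly nested."""
    data_inputs: Any          # some_info_about_model
    expected_outputs: Any     # score_method(y, y_pred)
    children: List["FeatureDataContainer"] = field(default_factory=list)


def mean_squared_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def introspect_and_score(
    info: Any,
    y: Sequence[float],
    y_pred: Sequence[float],
    score_method: Callable[[Sequence[float], Sequence[float]], float],
    children: Optional[List[FeatureDataContainer]] = None,
) -> FeatureDataContainer:
    # Bundle this step's introspection with its score; nest the sub-steps' containers.
    return FeatureDataContainer(
        data_inputs=info,
        expected_outputs=score_method(y, y_pred),
        children=list(children or []),
    )
```

An outer pipeline would call this once per sub-step and pass the resulting containers as `children`, giving the aggregate structure described above.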

Another idea: whatever the FeatureDataContainer contains, one could call FeatureDataContainer.flatten() to reduce the data to 1D features. As a FeatureDataContainer might be an aggregate of other FeatureDataContainers, it could combine them by flattening each one and concatenating them along the feature axis, for instance. So a FeatureDataContainer containing a list of train and val scores would be featurisable from 2D lists into 1D features such as min, max, std, slope information, length, amin, amax, etc.
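A sketch of the flattening idea on plain nested dicts (standing in for nested containers): every list becomes fixed-size features (min, max, population std, least-squares slope, length) and all keys are concatenated into one flat mapping. The `flatten` and `series_features` names and the `__` key separator are assumptions for illustration.

```python
from statistics import pstdev
from typing import Dict, List, Mapping, Tuple, Union

Nested = Union[float, List[float], Mapping[str, "Nested"]]


def series_features(values: List[float]) -> Dict[str, float]:
    """Summarise one 1D series (e.g. per-epoch scores) as fixed-size features."""
    n = len(values)
    if n == 0:
        return {"length": 0.0}
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    denom = sum((x - x_mean) ** 2 for x in range(n))
    # Least-squares slope of the values against their index (0 for a single point).
    slope = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values)) / denom if denom else 0.0
    return {"min": min(values), "max": max(values), "std": pstdev(values),
            "slope": slope, "length": float(n)}


def flatten(node: Nested, path: Tuple[str, ...] = ()) -> Dict[str, float]:
    """Flatten nested dicts/lists into one 1D mapping (concatenation on the feature axis)."""
    if isinstance(node, Mapping):
        out: Dict[str, float] = {}
        for key, child in node.items():
            out.update(flatten(child, path + (str(key),)))
        return out
    if isinstance(node, list):
        return {"__".join(path + (name,)): value for name, value in series_features(node).items()}
    return {"__".join(path): float(node)}
```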

This allows for neat AutoML algorithms that can truly introspect the models, and thus better guess the next model to train by having "features of the model" (an introspection).

@Eric2Hamel and @jeromebedard12, this might interest you.

Might be related: https://github.com/Neuraxio/Neuraxle-TensorFlow/issues/7

Update:

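```python
# Proposed usage: introspecting a fitted pipeline returns a RecursiveDict of named float features.
introspection_features: RecursiveDict[str, float] = pipeline.introspect(context)


class BaseStep:
    def introspect(self, context):
        # Proposed: recursively call "_introspect" on this step and its sub-steps.
        return self.apply("_introspect", context)
```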
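The proposal relies on Neuraxle's recursive `apply`. The plain-Python stand-in below (a hypothetical `MiniStep`, not Neuraxle's BaseStep) illustrates how applying `_introspect` recursively can produce the `Pipeline__ModelWrapper__...` style of keys shown in the example further down.

```python
from typing import Dict, List, Optional


class MiniStep:
    """Hypothetical stand-in for a step with sub-steps; not Neuraxle's BaseStep."""

    def __init__(self, name: str, children: Optional[List["MiniStep"]] = None) -> None:
        self.name = name
        self.children = children or []

    def _introspect(self) -> Dict[str, float]:
        return {}  # default: nothing to report

    def introspect(self, prefix: str = "") -> Dict[str, float]:
        # Collect this step's own features, then recurse into sub-steps,
        # prefixing every key with the path of step names joined by "__".
        path = f"{prefix}{self.name}__"
        features = {path + key: value for key, value in self._introspect().items()}
        for child in self.children:
            features.update(child.introspect(path))
        return features


class DenseLayerStep(MiniStep):
    def __init__(self, name: str, bias: List[float]) -> None:
        super().__init__(name)
        self.bias = bias

    def _introspect(self) -> Dict[str, float]:
        return {"bias__mean": sum(self.bias) / len(self.bias)}
```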

And then, in the AutoML loop as a callback:

```python
trial.log_something(pipeline, introspection_features)
```
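A sketch of the callback idea, assuming a hypothetical `Trial` record and a user-supplied `fit_and_introspect` function that trains a pipeline for a set of hyperparameters and returns its introspection features. `log_introspection` is an illustrative stand-in for the placeholder `log_something`, not an existing Neuraxle method.

```python
from typing import Callable, Dict, Iterable, List


class Trial:
    """Hypothetical trial record kept by the AutoML loop."""

    def __init__(self, hyperparams: Dict[str, float]) -> None:
        self.hyperparams = hyperparams
        self.introspection_features: Dict[str, float] = {}

    def log_introspection(self, features: Dict[str, float]) -> None:
        self.introspection_features.update(features)


def automl_loop(
    candidates: Iterable[Dict[str, float]],
    fit_and_introspect: Callable[[Dict[str, float]], Dict[str, float]],
) -> List[Trial]:
    trials = []
    for hyperparams in candidates:
        trial = Trial(hyperparams)
        # Callback after fitting: store the "features of the model" next to its
        # hyperparameters, so a next-model guesser could later learn from both.
        trial.log_introspection(fit_and_introspect(hyperparams))
        trials.append(trial)
    return trials
```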

Example:

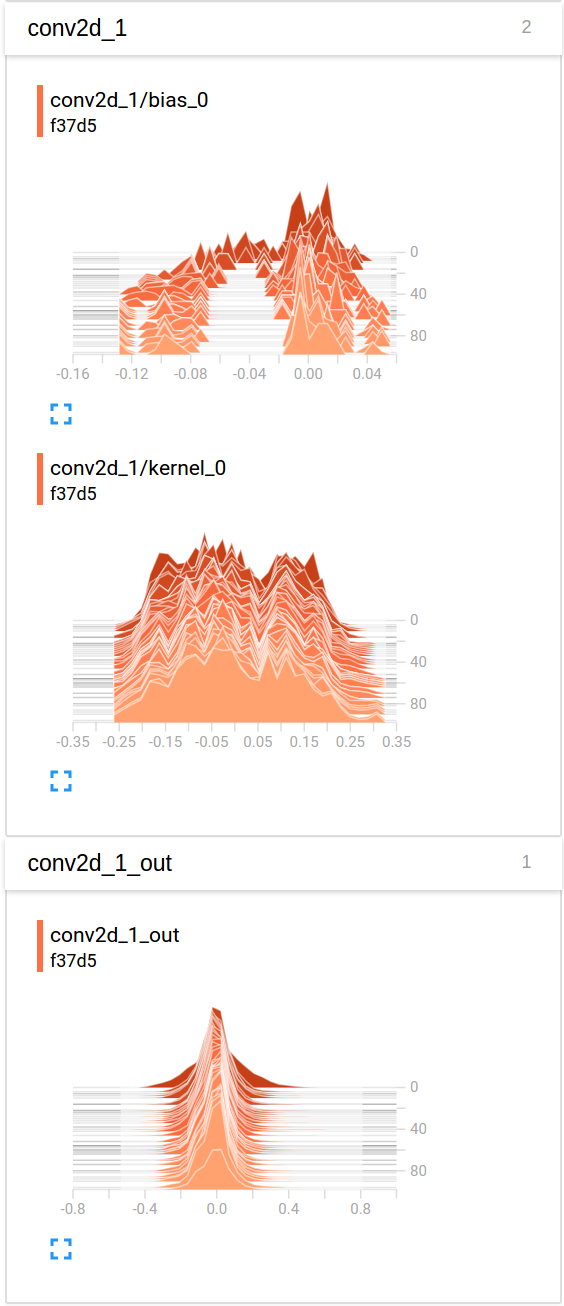

This would contain introspection features like:

```
{
    "Pipeline__ModelWrapper__TensorflowV2Step__conv2d_1/bias_0_0": 0.00142,
    "Pipeline__ModelWrapper__TensorflowV2Step__conv2d_1/bias_0_1": 0.00342,
    "Pipeline__ModelWrapper__TensorflowV2Step__conv2d_1/bias_0_2": 0.00142,
    "Pipeline__ModelWrapper__TensorflowV2Step__conv2d_1/bias_0_3": 0.5,
    "Pipeline__ModelWrapper__TensorflowV2Step__conv2d_1/bias_0_4": 0.00142,
    "Pipeline__ModelWrapper__TensorflowV2Step__conv2d_1/bias_0_5": -0.8,
    "Pipeline__ModelWrapper__TensorflowV2Step__conv2d_1/bias_0__mean": 0.00142,
    "Pipeline__ModelWrapper__TensorflowV2Step__conv2d_1/bias_0__variance": 0.3224,
    "Pipeline__ModelWrapper__TensorflowV2Step__conv2d_1/weights_0__mean": 0.00142,
    "Pipeline__ModelWrapper__TensorflowV2Step__conv2d_1/weights_0__variance": 0.3224,
    ...
}
```
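A sketch of how one tensor could be turned into keys following the naming pattern above: one `<prefix>_<i>` entry per element (as for the biases), plus `<prefix>__mean` and `<prefix>__variance` summaries (the only entries kept for the weights). `tensor_features` is a hypothetical helper, and using the population variance is an assumption; the example does not say which variance is meant.

```python
from statistics import fmean, pvariance
from typing import Dict, Sequence


def tensor_features(prefix: str, values: Sequence[float], keep_elements: bool = True) -> Dict[str, float]:
    """Hypothetical helper producing `<prefix>_<i>`, `<prefix>__mean`, `<prefix>__variance`."""
    features: Dict[str, float] = {}
    if keep_elements:
        # Per-element entries, e.g. ".../bias_0_0", ".../bias_0_1", ...
        for i, value in enumerate(values):
            features[f"{prefix}_{i}"] = float(value)
    features[f"{prefix}__mean"] = fmean(values)
    features[f"{prefix}__variance"] = pvariance(values)  # population variance (assumption)
    return features
```

Large weight tensors would typically use `keep_elements=False` so that only their summaries are logged, which keeps the feature vector small.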

Idea: create a data structure that inherits from RecursiveDict and also specifies how to preprocess the introspection into features, or have an introspect_features class that already returns this.
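A sketch of that idea, using a plain `dict` subclass as a stand-in for RecursiveDict (whose constructor is not shown here): the structure carries its own `reducers` describing how list-valued entries become numeric features, and a subclass can override them. `IntrospectionDict`, `reducers` and `to_features` are hypothetical names.

```python
from statistics import fmean, pstdev
from typing import Callable, Dict, List


class IntrospectionDict(dict):
    """Hypothetical: a dict (standing in for RecursiveDict) that also knows how to
    turn its raw introspection values into flat numeric features."""

    # Reducers applied to every list-valued entry; subclasses may override them.
    reducers: Dict[str, Callable[[List[float]], float]] = {
        "mean": fmean,
        "std": pstdev,
        "last": lambda values: values[-1],
    }

    def to_features(self) -> Dict[str, float]:
        features: Dict[str, float] = {}
        for key, value in self.items():
            if isinstance(value, list):
                for name, reduce in self.reducers.items():
                    features[f"{key}__{name}"] = float(reduce(value))
            else:
                features[key] = float(value)
        return features


class MinMaxIntrospectionDict(IntrospectionDict):
    # Example override: a step that prefers min/max summaries of its series.
    reducers = {"min": min, "max": max}
```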

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs in the next 180 days. Thank you for your contributions.