torchmetrics

More image metrics

🚀 Feature

Just found this package, and it seems we do not yet have all image metrics :]

Some inspiration came from https://github.com/andrewekhalel/sewar, but we aim for our own implementation in torch.

Alternatives

- ~Mean Squared Error (MSE)~

- ~Root Mean Squared Error (RMSE)~

- ~Peak Signal-to-Noise Ratio (PSNR)~

- ~Structural Similarity Index (SSIM)~

- ~Universal Quality Image Index (UQI)~

- ~Multi-scale Structural Similarity Index (MS-SSIM)~

- ~Erreur Relative Globale Adimensionnelle de Synthèse (ERGAS)~

- [ ] Spatial Correlation Coefficient (SCC) -> #800

- [ ] Relative Average Spectral Error (RASE) -> #816

- ~Spectral Angle Mapper (SAM)~

- ~Spectral Distortion Index (D_lambda)~

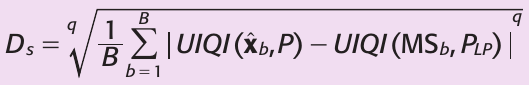

- [ ] Spatial Distortion Index (D_S)

- [ ] Quality with No Reference (QNR)

- [ ] Visual Information Fidelity (VIF)

- [ ] Block Sensitive - Peak Signal-to-Noise Ratio (PSNR-B)

Additional context

Any of these metrics would be a separate PR/addition :]

I would like to work on this. Can you assign it to me?

Hi, @Borda

Thanks for creating the issue tracker. I would love to help with this, as I also look forward to contributing to the lightning framework.

I would like to begin with MSE and RMSE, if that's fine with you and everyone else. Please let me know if I can start working on these two metrics.

Thanks!

@ankitaS11 Please note we already have MSE and RMSE in the regression package:

https://torchmetrics.readthedocs.io/en/latest/references/modules.html#meansquarederror (this takes a `squared` argument)

So I do not think we should re-implement them in the image domain.

> I would like to work on this. Can you assign it to me?

cool, which one do you want to take? (we have many :zap:)

Hi, @SkafteNicki - Got it. Thank you for updating the issue as well, I can try Quality with No Reference then, just want to also make sure that it doesn't collide with @nishant42491. Nishant, curious to know if you have any metric in mind to pick up first? I can pick the others then, if that's okay.

@Borda @SkafteNicki are we aiming for an integration or a direct torch reimplementation here?

reimplementation, I put this package just as inspiration for what other image metrics exist...

I'd think so as well :)

@ankitaS11 @nishant42491 You can however use this package for testing :)

@Borda @ankitaS11 may I start with implementing spatial correlation coefficient first?

@nishant42491 sure, I'll add you to it. Open a draft PR early and ping us there if you need help :)

thank you :)

I would like to work on Relative Average Spectral Error; it would be a great way for me to learn new stuff.

Hi, @Borda

I was going through the referenced repo (sewar) for QNR, and found that it depends on: D_LAMBDA and D_S - and both of them depend on UQI. So, probably it will be easier and more sensible to implement UQI first, and then go to D_LAMBDA, D_S and finally QNR.

Do you have any opinions on this? I just wanted to confirm that I'm moving in the right direction here. In short, I would like to implement UQI first, and then the other metrics, if that's okay with you and everyone.
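The dependency chain follows from how QNR is usually defined: it combines the two distortion indices as QNR = (1 - D_lambda)^alpha * (1 - D_S)^beta. Below is a minimal sketch of that last step only, assuming D_lambda and D_S have already been computed; the function name `qnr` and the defaults alpha = beta = 1 are illustrative choices, not the torchmetrics API.

```python
def qnr(d_lambda: float, d_s: float, alpha: float = 1.0, beta: float = 1.0) -> float:
    """Combine spectral (D_lambda) and spatial (D_S) distortion into QNR.

    Both inputs are expected in [0, 1]; a result of 1 means no distortion.
    The name and defaults are hypothetical, chosen for this sketch.
    """
    return (1.0 - d_lambda) ** alpha * (1.0 - d_s) ** beta


# With no distortion at all, the index reaches its ideal value.
ideal = qnr(0.0, 0.0)
# Any distortion in either term lowers the score.
degraded = qnr(0.05, 0.10)
```

Since the final combination is this small, most of the work sits in UQI and the two distortion indices, which supports implementing them in that order.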

Hey @ankitaS11 in that case it definitely makes sense to go with your proposed way :) I updated the issue. Just let us know here, when I should assign new metrics to you :)

Hi @justusschock, I am done with the implementation of UQI metric, now I would like to work on D_Lambda.

@Borda I will work on Spectral Angle Mapper (SAM)

@vumichien if I'm not mistaken, SAM is just the arccos of the cosine similarity which is implemented here.

@vumichien My bad. Since SAM is an image metric about spectral characteristics, it has to assume an NCHW shape, and using torchmetrics.functional.cosine_similarity under that assumption requires some otherwise unnecessary reshaping.

Here is some code as an excuse and to explain myself:

    import torch
    import torchmetrics
    import einops

    SPECTRAL_DIM = 1
    N, C, H, W = 13, 5, 32, 64


    def main():
        x = torch.randn(N, C, H, W)
        y = torch.randn(N, C, H, W)
        sam_index1 = sam_from_torchmetrics_cossim(x, y, reduction="mean")
        sam_index2 = sam_from_scratch(x, y, reduction="mean")
        assert torch.allclose(sam_index1, sam_index2)


    def sam_from_torchmetrics_cossim(pred, target, *, reduction="mean"):
        # `torchmetrics.functional.cosine_similarity` expects (N, d) inputs,
        # so every pixel becomes one row holding its spectrum.
        pred_ = einops.rearrange(pred, "n c h w -> (n h w) c")
        target_ = einops.rearrange(target, "n c h w -> (n h w) c")
        cossim = torchmetrics.functional.cosine_similarity(pred_, target_, reduction="none")
        return reduce(cossim.arccos(), reduction=reduction)


    def sam_from_scratch(pred, target, *, reduction="mean"):
        # Angle between spectra, computed directly along the channel dimension.
        return reduce(_angle(pred, target, dim=SPECTRAL_DIM), reduction=reduction)


    def _angle(x1, x2, *, dim):
        return _cosine_similarity(x1, x2, dim=dim).acos()


    def _cosine_similarity(x1, x2, *, dim):
        x1 = x1 / torch.norm(x1, p=2, dim=dim, keepdim=True)
        x2 = x2 / torch.norm(x2, p=2, dim=dim, keepdim=True)
        # Clamp to guard against rounding slightly outside the domain of acos.
        return (x1 * x2).sum(dim=dim).clamp(-1.0, 1.0)


    def reduce(x, reduction):
        if reduction == "mean":
            return x.mean()
        if reduction == "sum":
            return x.sum()
        if reduction is None or reduction == "none":
            return x
        raise ValueError(
            f"Expected reduction to be one of `['mean', 'sum', None]` but got {reduction}"
        )


    if __name__ == "__main__":
        main()

@Paul-Aime No problem. SAM measures the spectral similarity between two images by the angle between their spectra. In short, it is the mean arccos of the cosine similarity computed at each pixel along the spectral dimension. Thank you for the code snippet.

@Borda I would like to work on Erreur Relative Globale Adimensionnelle de Synthèse (ERGAS) next after the process of reviewing SAM is completed

Hi @Borda,

I was looking into implementing the Spatial Distortion Index, but there is a problem with the inputs.

In this metric, an MS image and the respective PAN image are the targets that will be compared against the MS_low image. There is also a PAN_low image, but this is computed in a predefined way from the PAN image.

What will the targets and preds look like? One way would be to use the format target = [ms_1, pan_1, ms_2, pan_2, ...] and preds = [ms_low_1, ms_low_2, ...]. I am asking because other metrics seem to have the same shape for targets and preds, and I am a bit unsure how to handle this in an elegant way.

Hi @victor1cea are ms_1 and ms_2 of the same shape? Are pan_1 and pan_2 of the same shape? Do they have the same size as the ms images?

The distortion indices were introduced in https://doi.org/10.14358/PERS.74.2.193

The reference implementation used in pansharpening (the task for which the metric was introduced) can be found at liangjiandeng/DLPan-Toolbox/.../D_lambda.m (MATLAB).

The reference implementation gives 2 ways of computing the spatial distortion (translated to PyTorch):

    torch.mean((uiqi(hrms, pan) - uiqi(ms, pan_lr_decimated)).abs() ** p, dim=-1) ** (1 / p)  # as in the original paper
    # or
    torch.mean((uiqi(hrms, pan) - uiqi(ms_up, pan_lr)).abs() ** p, dim=-1) ** (1 / p)  # default in the reference implementation

This is with the classical pansharpening setting as follows:

    r = 4  # ratio PAN / MS (pixel size)

    # Raw inputs
    ms.shape = (N, C, H, W)           # MS
    pan.shape = (N, 1, H * r, W * r)  # PAN

    # Modified inputs
    ms_up.shape = (N, C, H * r, W * r)    # classically bicubic interpolation
    pan_lr.shape = (N, 1, H * r, W * r)   # same shape as PAN, but degraded (blurred) to the resolution of MS
    pan_lr_decimated.shape = (N, 1, H, W) # same as pan_lr, but then decimated to MS size

    # Prediction
    hrms.shape = (N, C, H * r, W * r)  # the fused image prediction

Actually, it might be better to let the user choose the implementation by providing both arguments of the reference UIQI (ms and pan_lr below):

    torch.mean((uiqi(hrms, pan) - uiqi(ms, pan_lr)).abs() ** p, dim=-1) ** (1 / p)

This way pan_lr can also be optional. If it is not given, the assumption can be made that the ms provided is of shape (N, C, H, W), as in the original paper, and pan_lr then defaults to pan interpolated down to the ms size (for example with torch.nn.functional.interpolate), as in the original paper.

Moreover, the interpolation method used to compute pan_lr has quite an impact on the resulting metric, so letting the user choose the method is an additional benefit.

Anyway, regarding preds and targets, I think the best option would then be:

    targets = [ms, pan]  # or [ms, pan, pan_lr], as the user wants
    preds = [hrms]

ms, pan, pan_lr and hrms being batched, with shapes as described above.
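The proposal above could be sketched roughly as follows. This is a hedged illustration, not the torchmetrics implementation: `global_uqi` is a simplified stand-in that computes UQI once over the whole image instead of over sliding windows, `spatial_distortion` is a hypothetical name, and the bicubic default for `pan_lr` is an assumption made only for this example.

```python
import torch
import torch.nn.functional as F


def global_uqi(x: torch.Tensor, y: torch.Tensor, eps: float = 1e-12) -> torch.Tensor:
    """Simplified per-band UQI over the full image (no sliding window).

    x: (N, C, H, W); y: (N, C, H, W) or (N, 1, H, W), broadcast over bands.
    Returns a tensor of shape (N, C).
    """
    y = y.expand_as(x)
    mu_x = x.mean(dim=(-2, -1))
    mu_y = y.mean(dim=(-2, -1))
    dx = x - mu_x[..., None, None]
    dy = y - mu_y[..., None, None]
    var_x = (dx * dx).mean(dim=(-2, -1))
    var_y = (dy * dy).mean(dim=(-2, -1))
    cov = (dx * dy).mean(dim=(-2, -1))
    return 4 * cov * mu_x * mu_y / ((var_x + var_y) * (mu_x**2 + mu_y**2) + eps)


def spatial_distortion(hrms, ms, pan, pan_lr=None, p=1):
    """D_S sketch: hrms is the prediction; ms, pan and optional pan_lr are targets."""
    if pan_lr is None:
        # Assumption for this sketch: bicubic resampling of PAN to the MS size.
        pan_lr = F.interpolate(pan, size=ms.shape[-2:], mode="bicubic", align_corners=False)
    q_high = global_uqi(hrms, pan)  # (N, C), fused bands vs. PAN
    q_low = global_uqi(ms, pan_lr)  # (N, C), MS bands vs. degraded PAN
    return ((q_high - q_low).abs() ** p).mean(dim=-1) ** (1 / p)


# Usage with the shapes described above (r = 4).
N, C, H, W, r = 2, 4, 8, 8, 4
ms = torch.rand(N, C, H, W)
pan = torch.rand(N, 1, H * r, W * r)
hrms = torch.rand(N, C, H * r, W * r)
d_s = spatial_distortion(hrms, ms, pan)  # one value per batch element
```

Passing `pan_lr` explicitly (together with an `ms` of matching spatial size) covers both variants of the reference implementation, while omitting it falls back to the paper-style default.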

Thank you! :smile:

@Borda It would be great if you could give me some references regarding Block Sensitive - Peak Signal-to-Noise Ratio (PSNR-B). Then I would like to work on adding it as a metric. Thanks!

@SkafteNicki could you pls link some good resources? :)

I can take a stab at Visual Information Fidelity VIF if it hasn't been taken yet @Borda

Hi @soma2000-lang, Here is the reference paper: https://ieeexplore.ieee.org/abstract/document/5535179 and here is a reference implementation in numpy: https://github.com/andrewekhalel/sewar/blob/ac76e7bc75732fde40bb0d3908f4b6863400cc27/sewar/full_ref.py#L371-L395

Hi @Borda, please assign any metric that needs to be implemented to me. I see a lot of people taking a stab at most of them, so any metric which doesn't conflict with others would be great. Looking forward to hearing back from you.