django-q


How to deploy Django and django-q with the Django ORM broker in docker-compose.yml

Hello all,

I have already built an image that includes the Django framework, django-q, and the other packages my functions need.

Right now, if I run only the Django web service and then go inside the container to run the command python manage.py qcluster, the scheduler and async tasks work normally.

However, shouldn't I have one process per container? The official Docker documentation also says that it is generally recommended to separate areas of concern by using one service per container.

I have read issue #513, but the results I found on Google all use Redis as the broker. Because my project already deploys many services, I would like to use the Django ORM as the broker.

settings.py

```python
Q_CLUSTER = {
    'name': 'pdm',
    'workers': 1,
    'recycle': 500,
    'timeout': 60,
    'retry': 120,
    'compress': True,
    'save_limit': 250,
    'queue_limit': 500,
    'cpu_affinity': 1,
    'label': 'Django Q',
    'daemonize_workers': False,
    'orm': 'default',
}
```

docker-compose.yml

```yaml
version: "3"

services:
  web:
    image: mydjango:latest
    command: python manage.py runserver 0.0.0.0:8000
    ports:
      - "8000:8000"

  django-q:
    image: mydjango:latest
    command: python manage.py qcluster
```

I don't know how to make the Django web service communicate with django-q.

The workflow and relationship I had in mind are as follows:

- Step 1: Once a task is created in the Django web service, it is sent to the web service's broker (the Django ORM).
- Step 2: At the same time, django-q starts processing the task. The logs are written in the django-q container.
- Step 3: Finally, the results are sent back to the web service's broker, and all results can be viewed in the Django admin.

Is this description correct?

Reference:

- https://forum.djangoproject.com/t/django-and-django-q-in-one-container/7403

- https://github.com/Koed00/django-q/issues/513

The ORM broker is the slowest broker (in that it needs to repeatedly poll the database to find new work) and the least robust (in that exactly-once delivery is not what the Django ORM is intended to provide, and hacks are required to accomplish it.)

I would strongly suggest you not try to use the ORM broker. If you're already using Docker Compose anyway, then running an additional redis service doesn't have any new operational cost. I'd suggest changing your compose file like this:

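```yaml
version: "3"

services:
  web:
    image: mydjango:latest
    command: python manage.py runserver 0.0.0.0:8000
    ports:
      - "8000:8000"

  django-q:
    image: mydjango:latest
    command: python manage.py qcluster
    depends_on:
      - "jobqueue"

  # Redis server for storing async tasks in progress
  jobqueue:
    image: bitnami/redis
    environment:
      # bitnami/redis refuses to start without a password unless this is set;
      # set REDIS_PASSWORD instead for anything beyond local testing.
      - ALLOW_EMPTY_PASSWORD=yes
    ports:
      - '127.0.0.1:6379:6379'
```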
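For the web and qcluster containers to actually use that Redis service, the django-q settings have to point at it instead of at the ORM. A minimal sketch, assuming the Redis container is reachable under its compose service name `jobqueue` on the default port with no password; django-q hands the keys in the `redis` dict to the redis-py client as connection parameters.

```python
# settings.py (sketch): django-q configured with the Redis broker.
# Assumes the Redis container is reachable under its compose service name,
# "jobqueue", on the default port, with no password.
Q_CLUSTER = {
    'name': 'pdm',
    'workers': 1,
    'timeout': 60,
    'retry': 120,
    'label': 'Django Q',
    # Connection parameters for the Redis broker (no 'orm' key any more).
    'redis': {
        'host': 'jobqueue',
        'port': 6379,
        'db': 0,
    },
}
```

Both containers load the same settings module, so both talk to the same Redis. Task results are still written through the Django ORM, so the qcluster container also needs access to the same database as the web container.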

However, if you insist on using the ORM broker, then you can accomplish it by making sure that the qcluster container has access to the database. The ORM broker doesn't communicate directly with the Django process. Rather, it communicates with the database, as does Django. In other words, if Django Q can access the database, then you don't need to do anything else.
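To make that concrete, here is a stdlib-only sketch in which two `sqlite3` connections stand in for the web and qcluster containers. They never talk to each other; the only channel is the shared database file. The `queue` and `results` tables are hypothetical and far simpler than django-q's real schema.

```python
import os
import sqlite3
import tempfile

# A file both "containers" can reach (in Docker: a shared volume or bind mount).
db_path = os.path.join(tempfile.mkdtemp(), "shared.sqlite3")

web = sqlite3.connect(db_path)     # plays the Django web process
worker = sqlite3.connect(db_path)  # plays the qcluster process

web.execute("CREATE TABLE queue (id INTEGER PRIMARY KEY, payload TEXT)")
web.execute("CREATE TABLE results (id INTEGER, result INTEGER)")
web.commit()

# Web side: enqueue a task by writing a row; nothing is sent to the worker.
web.execute("INSERT INTO queue (payload) VALUES (?)", ("2,3",))
web.commit()

# Worker side: find the task in the same database, run it, store the result.
for task_id, payload in worker.execute("SELECT id, payload FROM queue").fetchall():
    a, b = map(int, payload.split(","))
    worker.execute("DELETE FROM queue WHERE id = ?", (task_id,))
    worker.execute("INSERT INTO results VALUES (?, ?)", (task_id, a + b))
worker.commit()

# Web side: the result is visible without ever contacting the worker.
print(web.execute("SELECT id, result FROM results").fetchall())  # [(1, 5)]
```

If the two connections pointed at different files, the worker would find an empty queue; that is the situation of two containers that each have their own copy of db.sqlite3.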

Hello @nickodell, thanks for your explanation.

As I said, there are many services (e.g. PostgreSQL, InfluxDB, etc.) that have to be deployed in this project. Besides, my tasks are not scheduled frequently, maybe three times a month. That's why I chose the Django ORM as my broker.

But the question is: how can Django Q access the Django database?

What I had in mind is mounting the same storage into both services so that Django Q can access Django's db.sqlite3. Would that work?

By the way, I also tried Redis as the broker. Although the tasks executed normally, the results/records weren't shown on the Django /admin page. Did I miss a step or some configuration?

> As I said, there are many services (e.g. PostgreSQL, InfluxDB, etc.) that have to be deployed in this project.

This raises two questions:

- Why are you using both SQLite and postgresql? Seems like if you're going to set up postgresql, you could use that as your database backend.

- If the services are difficult to manage and deploy, why not make them part of your docker-compose setup?

> But the question is: how can Django Q access the Django database?
>
> What I had in mind is mounting the same storage into both services so that Django Q can access Django's db.sqlite3. Would that work?

Multiple Docker containers can share a volume, which means that they can share a SQLite file.

Of course, if you're going to do this, you should be aware of the concurrency limitations of SQLite. From the SQLite FAQ:

> Multiple processes can have the same database open at the same time. Multiple processes can be doing a SELECT at the same time. But only one process can be making changes to the database at any moment in time, however. [...] However, client/server database engines (such as PostgreSQL, MySQL, or Oracle) usually support a higher level of concurrency and allow multiple processes to be writing to the same database at the same time. This is possible in a client/server database because there is always a single well-controlled server process available to coordinate access. If your application has a need for a lot of concurrency, then you should consider using a client/server database. But experience suggests that most applications need much less concurrency than their designers imagine.
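The single-writer limitation is easy to observe with the standard library. The sketch below opens two connections to one SQLite file; with `timeout=0` (so nothing waits), the second writer fails while the first holds an open write transaction and succeeds once the first commits. With Python's default `timeout` of 5 seconds, the second writer would wait instead of failing at once.

```python
import os
import sqlite3
import tempfile

db_path = os.path.join(tempfile.mkdtemp(), "demo.sqlite3")

# isolation_level=None: autocommit mode, so transactions are started explicitly.
# timeout=0: a writer that finds the database locked fails immediately.
writer_a = sqlite3.connect(db_path, isolation_level=None, timeout=0)
writer_b = sqlite3.connect(db_path, isolation_level=None, timeout=0)

writer_a.execute("CREATE TABLE t (x INTEGER)")

writer_a.execute("BEGIN IMMEDIATE")          # A takes the write lock
writer_a.execute("INSERT INTO t VALUES (1)")

try:
    writer_b.execute("INSERT INTO t VALUES (2)")  # B needs the same lock
    blocked = False
except sqlite3.OperationalError as exc:
    blocked = True
    print("second writer:", exc)             # second writer: database is locked

writer_a.execute("COMMIT")                   # A releases the lock
writer_b.execute("INSERT INTO t VALUES (2)") # now B can write
print(writer_b.execute("SELECT COUNT(*) FROM t").fetchone()[0])  # 2
```

For a web process and a qcluster that only write occasionally, short waits like this are usually harmless; they become a problem when many workers write at once.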

> By the way, I also tried Redis as the broker. Although the tasks executed normally, the results/records weren't shown on the Django /admin page. Did I miss a step or some configuration?

I'm not sure what's wrong. What you described ought to work.

Do the tasks show up in the database table django_q_task ? This is how the queue server records successful tasks.
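If the project uses SQLite, one way to check is to query that table directly from either container. A minimal sketch, assuming the default table name `django_q_task` and that it has `name`, `func`, `success` and `stopped` columns; `python manage.py dbshell` or the admin would answer the same question.

```python
import sqlite3

def recent_tasks(conn, limit=10):
    """Return (name, func, success, stopped) for the latest finished tasks."""
    return conn.execute(
        "SELECT name, func, success, stopped FROM django_q_task "
        "ORDER BY stopped DESC LIMIT ?",
        (limit,),
    ).fetchall()
```

Call it as recent_tasks(sqlite3.connect(path)), with path pointing at the db.sqlite3 that the web container uses. If the rows appear in the worker container's copy of the file but not in the web container's, the two containers are not sharing a database.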

> Why are you using both SQLite and postgresql? Seems like if you're going to set up postgresql, you could use that as your database backend.

The official documentation doesn't say that PostgreSQL can be used as the broker, does it? Also, Django uses SQLite by default, so I didn't think about it much at the beginning. I just wanted to test it first to see whether it is a suitable solution. But it sounds like you would recommend Redis as the broker because it is easier and more effective.

> If the services are difficult to manage and deploy, why not make them part of your docker-compose setup?

I am at the proof-of-concept stage. All of the services will be deployed on k8s in production.

> How can Django Q access the Django database?

> Multiple Docker containers can share a volume, which means that they can share a SQLite file.

Is mounting a volume the only way for django-q to connect to Django's SQLite database?

> The official documentation doesn't say that PostgreSQL can be used as the broker, does it?

The Django documentation says that PostgreSQL can be used as a database backend. The Django Q documentation says that the ORM can be used as a broker. Therefore, you can use PostgreSQL as a broker.

Again, I would steer you away from the ORM broker, but if you're using it anyway, then PostgreSQL is going to be more stable than SQLite, because it supports multiple writers at once.
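A minimal settings sketch of that setup, assuming PostgreSQL is reachable under a hypothetical hostname `db` and that the credentials come from hypothetical environment variables. The image would also need a PostgreSQL driver such as psycopg2. `'orm': 'default'` tells django-q to use that Django database alias as the broker, as in the original settings.

```python
import os

# settings.py (sketch): PostgreSQL as Django's database, reused as the
# django-q ORM broker. The environment variable names are hypothetical.
DATABASES = {
    'default': {
        'ENGINE': 'django.db.backends.postgresql',
        'NAME': os.environ.get('POSTGRES_DB', 'pdm'),
        'USER': os.environ.get('POSTGRES_USER', 'pdm'),
        'PASSWORD': os.environ.get('POSTGRES_PASSWORD', ''),
        # "db" is a hypothetical compose service (or k8s Service) name.
        'HOST': os.environ.get('POSTGRES_HOST', 'db'),
        'PORT': os.environ.get('POSTGRES_PORT', '5432'),
    }
}

Q_CLUSTER = {
    'name': 'pdm',
    'workers': 1,
    'timeout': 60,
    'retry': 120,
    # Use the 'default' database connection above as the broker.
    'orm': 'default',
}
```

Because both the web and qcluster containers connect to the database over the network, no volume has to be shared between them.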

> Is mounting a volume the only way for django-q to connect to Django's SQLite database?

You could also use bind mounts.

But if you want them to have access to the same SQLite database, then somehow they have to share the same file, because SQLite is a file-based database.
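On the Django side, sharing the file only requires both containers to resolve the database NAME to the same mounted path. A sketch, assuming a hypothetical mount point `/data` and an optional, equally hypothetical `SQLITE_PATH` environment variable:

```python
import os
from pathlib import Path

# settings.py (sketch): keep db.sqlite3 on a path that both the web and the
# qcluster containers mount. "/data" and SQLITE_PATH are hypothetical and must
# match the volume or bind mount declared in docker-compose.yml.
SQLITE_PATH = Path(os.environ.get('SQLITE_PATH', '/data/db.sqlite3'))

DATABASES = {
    'default': {
        'ENGINE': 'django.db.backends.sqlite3',
        'NAME': SQLITE_PATH,
    }
}
```

In docker-compose.yml, the web and django-q services would then both mount the same named volume (or the same host directory, as a bind mount) at /data.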

Hello @nickodell, thanks for your prompt reply, and sorry for the late response; I have been busy with other things for the last few days. Next week I will follow your suggestion, try Redis as the broker again, and check whether the admin shows the same output as with SQLite.

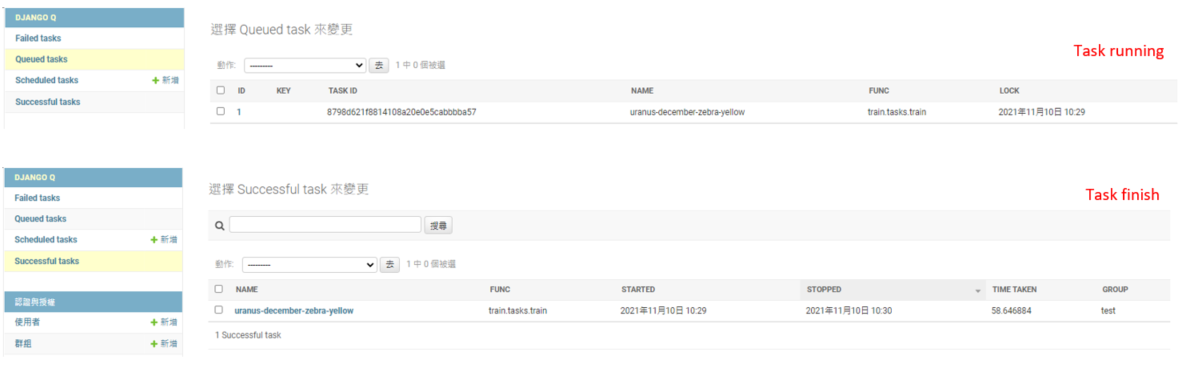

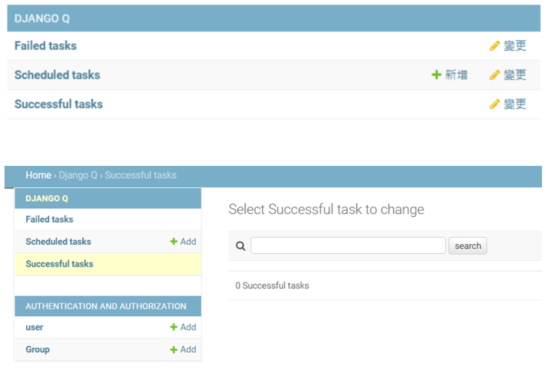

Hello @nickodell, with the SQLite ORM configured as the broker, the admin showed the following:

Scheduled tasks were displayed normally.

But after switching to Redis as the broker, nothing showed up on the Django admin page, even though the tasks had completed successfully.

How can I show the tasks in the Django admin with Redis as the broker?

Hard to say. Something may be misconfigured, but without going through each setting and checking it, I can't tell what.

I've written a tutorial on how to set up django-q with redis. Perhaps it will help you?

https://github.com/nickodell/django-q-demo

@nickodell It's very kind of you to write a tutorial! It runs normally on localhost, and tasks are listed in the admin page successfully. However, when I run it with containers, the tasks are still not shown in the Django admin page. Did you try running the three services with a docker-compose file?

@Nicksia It's worth noting that while tasks are sent to the queue to dispatch them to a Django Q server, the broker is not involved in sending the result back to Django. That is done using the ORM, regardless of what broker is involved.

In other words, if you're using the SQLite backend, and the SQLite database file is not shared between containers, then result objects will not be sent back to the main Django process. In terms of how to set up a SQLite database to be shared between multiple containers, I would refer to my previous advice:

- Multiple Docker containers can share a volume, which means that they can share a SQLite file.

- You could also use bind mounts.
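A stdlib-only sketch of that failure mode: a `queue.Queue` stands in for the Redis broker, and each "container" has its own SQLite file unless the path is shared. `run_demo` and the `results` table are hypothetical; the point is only that the worker writes results to its own database, so the web side sees them only when both point at the same file.

```python
import os
import queue
import sqlite3
import tempfile

def run_demo(web_db, worker_db):
    """Send one task through an in-memory broker; return what 'web' can see."""
    broker = queue.Queue()  # stand-in for Redis: it carries tasks, not results
    web = sqlite3.connect(web_db)
    worker = sqlite3.connect(worker_db)
    for conn in (web, worker):
        conn.execute("CREATE TABLE IF NOT EXISTS results (task TEXT, value INTEGER)")
        conn.commit()

    broker.put(("add", 2, 3))  # web side: enqueue through the broker

    # Worker side: take the task from the broker, save the result "via the ORM".
    name, a, b = broker.get()
    worker.execute("INSERT INTO results VALUES (?, ?)", (name, a + b))
    worker.commit()

    # Web side: look for the result in its own database.
    rows = web.execute("SELECT task, value FROM results").fetchall()
    web.close()
    worker.close()
    return rows

tmp = tempfile.mkdtemp()
shared = os.path.join(tmp, "shared.sqlite3")
print(run_demo(shared, shared))  # [('add', 5)]
print(run_demo(os.path.join(tmp, "web.sqlite3"),
               os.path.join(tmp, "worker.sqlite3")))  # []
```

The task runs in both cases; only the shared-file case lets the web side, and therefore the admin, see the result.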

> Did you try running the three services with a docker-compose file?

Not using that tutorial, no.

However, I have a different docker setup for production use, which uses an external database. Because the database is shared between Django and Django Q, that means that tasks can alter the database, and that task results can be sent back to the database.

Hi @nickodell

> However, I have a different docker setup for production use, which uses an external database. Because the database is shared between Django and Django Q, that means that tasks can alter the database, and that task results can be sent back to the database.

Does that mean using an external DB (e.g. PostgreSQL) as the broker? I just tried PostgreSQL (ORM) with containers: the task was sent to the broker, but the worker didn't run it as expected. (The task just sat in the queue, and the worker never ran it.)

So far, the default SQLite ORM works for me, although it needs extra setup to share a volume.

BTW, it seems that someone else has encountered a similar problem: #270

Hi @nickodell

> The ORM broker is the slowest broker (in that it needs to repeatedly poll the database to find new work) and the least robust (in that exactly-once delivery is not what the Django ORM is intended to provide, and hacks are required to accomplish it.)

Would you please elaborate a bit on what you mean by a difficulty in achieving exactly-once delivery with Django ORM, and the hacks necessary to accomplish it?

I would have thought that using an RDBMS to store your tasks would make exactly-once delivery the simplest to obtain, thanks to atomic transactions.

If I use the ORM broker, am I risking any kind of bugs or strange behavior such as creating the same task twice/running the same task twice?

Thank you.

@mkmoisen

> Would you please elaborate a bit on what you mean by a difficulty in achieving exactly-once delivery with Django ORM, and the hacks necessary to accomplish it?

I don't want to be alarmist here - there are no known bugs in the ORM broker.

You can read the code used to dequeue a task here: https://github.com/Koed00/django-q/blob/85baaccd2c3adfe0a414d4237465163e9ff6e5a0/django_q/brokers/orm.py#L63-L79

In general, it looks for tasks which are ready, then for each task:

- Issues a SELECT query to get the task.

- Issues an UPDATE query that sets a new lock time, but only if the lock still has the value read by the SELECT. The return value is the number of rows it updated (0 or 1).

- If step 2 updated the row, then process the task. Otherwise, some other worker got it.

- If the worker gets stalled or killed somehow, the lock on the task will eventually expire: other workers treat it as stale once it is older than the retry period.

So that's how it works, and I don't see any issues with it.

At the same time, it is hard to reach the same level of testing that the Redis and other brokers have reached. Django supports five database backends. Each of those backends can have their transaction isolation level modified by the server configuration. For example, MySQL supports four different transaction isolation levels, and this can be configured in my.cnf.

In contrast, if you use the Redis broker, then there is only one server implementation to test against. The command used to dequeue tasks, BLPOP, is atomic because the command is defined that way. You don't need to poll for tasks, because the command blocks if no tasks are available. This allows a much simpler implementation; the equivalent code in the Redis broker is three lines long. The same goes for all of the brokers that use a message-passing service to store tasks: they end up being much simpler.

Again, I don't know of any issues with the ORM broker. But if you've already bitten the bullet on using Docker Compose, then the cost of adding a container just for the broker is minimal, and the complexity savings are significant.
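As a rough stdlib analogy (not Redis itself), `queue.Queue.get(timeout=...)` behaves like BLPOP: a single call waits until an item arrives or the timeout expires, and handing the item to exactly one consumer happens inside that call. A polling consumer, like the ORM broker, has to check, sleep and check again. The `POLL` interval below is an assumed value.

```python
import queue
import threading
import time

POLL = 0.2  # assumed polling interval, in seconds

def blocking_consumer(q, timeout=5.0):
    """BLPOP-style: one call that waits until a task arrives (or times out)."""
    try:
        return q.get(timeout=timeout)
    except queue.Empty:
        return None

def polling_consumer(q, attempts=25):
    """ORM-style: check, sleep, check again until something shows up."""
    for _ in range(attempts):
        try:
            return q.get_nowait()
        except queue.Empty:
            time.sleep(POLL)  # wake-ups that find nothing while the queue is empty
    return None

q = queue.Queue()
threading.Timer(0.5, q.put, args=("task-1",)).start()
print(blocking_consumer(q))  # task-1, returned as soon as it is put

threading.Timer(0.5, q.put, args=("task-2",)).start()
print(polling_consumer(q))   # task-2, found on the first poll after it arrives
```

The polling version adds up to one `POLL` interval of latency per task and keeps waking up while idle; the blocking version does neither.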

@nickodell Thank you.

If I understand correctly, it selects all the tasks whose lock time is more than 60 seconds old (by default). It then loops through each one and updates it if the lock has not yet been changed by another thread. If the update succeeds, our thread has won and can process the task. Seems fine to me.

One question about the 60-second default: what happens if you want to run one particular task that might take 10 minutes, but you also want to run normal tasks that take less than a couple of seconds? I think what you have to do here is set the timeout to 10 minutes for all tasks. This would work, but if a normal task failed, you would have to wait 10 minutes before it was picked up again. Do I understand that correctly?

Have you considered an UPDATE with a SELECT FOR UPDATE? It would be slightly more performant, since it could claim everything in one database round trip. I'm not sure it is easy to do with Django's syntax, though: it requires an UPDATE ... RETURNING, and I'm not clear how to express that in Django. I normally use raw SQL for something like this, but the UPDATE ... SELECT FOR UPDATE syntax differs between PostgreSQL and Oracle, which makes it not very useful for django-q.

Here it is as a raw PostgreSQL query:

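```sql
-- django-q's ORM broker stores tasks in django_q_ormq (payload, lock columns).
UPDATE django_q_ormq
SET lock = now()
WHERE id IN (
    SELECT id
    FROM django_q_ormq
    WHERE lock < now() - INTERVAL '60 second'
    LIMIT 10  -- only grab 10 tasks out of the queue for this thread
    FOR UPDATE SKIP LOCKED
)
RETURNING id, payload, lock;
```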

> One question about the 60-second default: what happens if you want to run one particular task that might take 10 minutes, but you also want to run normal tasks that take less than a couple of seconds? I think what you have to do here is set the timeout to 10 minutes for all tasks. This would work, but if a normal task failed, you would have to wait 10 minutes before it was picked up again. Do I understand that correctly?

Correct.

Another approach would be to chop work up into pieces that take less than a minute each. For example, if you wanted to email 1000 users inside a task, but it takes a second to send each mail, you could enqueue one task for every user. Or, you can have a task enqueue a task with its remaining work when it's almost out of time.
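Both ideas can be sketched without django-q itself. In the sketch below, `enqueue` is a hypothetical stand-in (a plain list) for whatever actually enqueues a task, such as django-q's `async_task`. `send_mail_to` is likewise a placeholder. The time budget is an assumed value kept below the 60-second cluster timeout used earlier in the thread.

```python
import time

PENDING = []   # hypothetical stand-in for the task queue
BUDGET = 45.0  # seconds of work per task, kept below the cluster timeout

def enqueue(func, *args):
    """Stand-in for async_task(func, *args)."""
    PENDING.append((func, args))

def send_mail_to(user):
    """Placeholder for the slow per-user work."""
    return f"mail sent to {user}"

# Option 1: one small task per user.
def fan_out(users):
    for user in users:
        enqueue(send_mail_to, user)

# Option 2: work until the budget is nearly used, then re-enqueue the rest.
def send_batch(users, clock=time.monotonic):
    start = clock()
    for i, user in enumerate(users):
        if clock() - start > BUDGET:
            enqueue(send_batch, users[i:])  # hand the remainder to a new task
            return i                        # number of users handled here
        send_mail_to(user)
    return len(users)
```

Option 1 keeps every task tiny, so a failed task is retried quickly. Option 2 enqueues fewer tasks but needs a budget that leaves headroom for the slowest single item.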