sarama


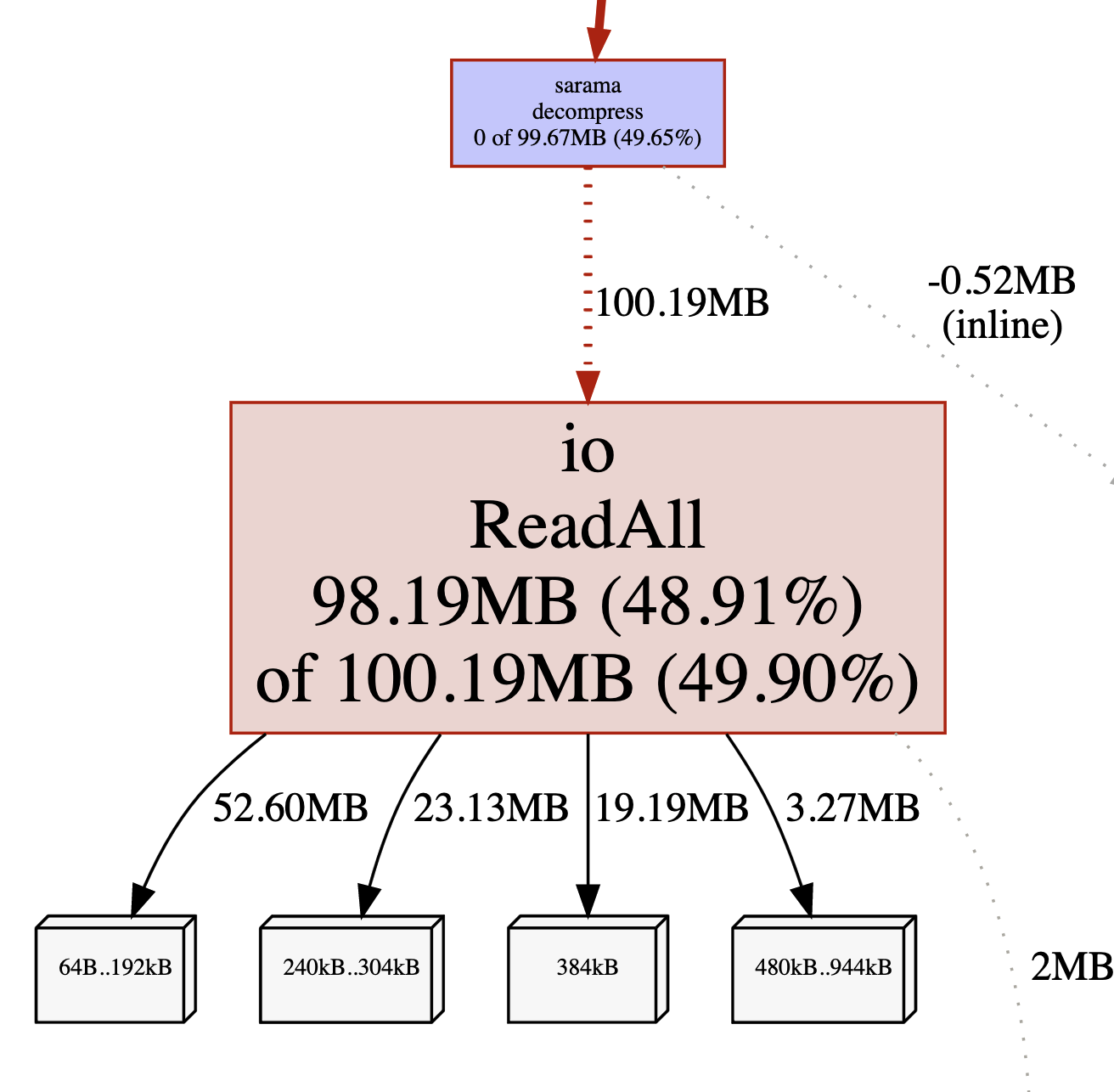

Go takes a lot of heap with CompressionSnappy

Versions

Please specify real version numbers or git SHAs, not just "Latest" since that changes fairly regularly.

| Sarama | Kafka | Go |
|---|---|---|
| v1.29.1 | 1.1.1 | 1.16.5 |

Configuration

What configuration values are you using for Sarama and Kafka?

sarama.Logger = LogReWriter{}

cfg := sarama.NewConfig()

cfg.ClientID = groupID

cfg.Version = sarama.V1_1_1_0

cfg.Consumer.Offsets.AutoCommit.Enable = true

cfg.Consumer.Offsets.AutoCommit.Interval = 3 * time.Second

cfg.Consumer.Offsets.Initial = sarama.OffsetNewest

cfg.Consumer.Fetch.Default = 524288

cfg.Consumer.Fetch.Max = 1048576

cfg.Consumer.MaxWaitTime = 1000 * time.Millisecond

sarama.MaxResponseSize = 1049825

cfg.Metadata.Full = false

cfg.Metadata.Retry.Max = 1

cfg.Metadata.Retry.Backoff = 1000 * time.Millisecond

cfg.Consumer.Return.Errors = true

cfg.Consumer.Group.Rebalance.Strategy = sarama.BalanceStrategyRange

cfg.Consumer.Group.Session.Timeout = 30 * time.Second

cfg.Consumer.Group.Rebalance.Timeout = 60 * time.Second

cfg.Producer.Compression = sarama.CompressionSnappy

Logs

When filing an issue please provide logs from Sarama and Kafka if at all possible. You can set sarama.Logger to a log.Logger to capture Sarama debug output.


Problem Description

We use eapache/go-xerial-snappy which is just a lightweight framing wrapper around golang/snappy. I don't think the latter provides any tuning parameters around its internal buffer usage.

We might be able to do more if we adopt s2 via @klauspost's compress library (which we already use for zstd) to provide our snappy support.

Yes, it is a fairly easy wrapper to write. However, to make a bigger dent you would need to refactor the interface into an io.Reader wrapper; otherwise both the compressed and the decompressed data have to be held in memory at the same time.

Thank you for taking the time to raise this issue. However, it has not had any activity on it in the past 90 days and will be closed in 30 days if no updates occur. Please check if the main branch has already resolved the issue since it was raised. If you believe the issue is still valid and you would like input from the maintainers then please comment to ask for it to be reviewed.

I added a xerial-snappy fork, which eliminates allocations if the destination buffer is big enough to contain the decoded content.

There is also DecodeCapped, which lets you fully control the maximum output size. This prevents "zip bombs".

Even though Snappy is limited to ~21:1 expansion, xerial streaming can still fill up memory if the input size isn't limited, and a single adversarial block could trigger an allocation of up to 4 GB just by declaring an uncompressed size of 4 GB.
