dependency-track

dependency-track copied to clipboard

dependency-track copied to clipboard

Improve efficiency of `MetricsUpdateTask`

Continuation of #1481. Relates to #1210. Replaces #1697 due to DCO causing trouble.

This PR contains a refactoring of the MetricsUpdateTask to improve its resource efficiency.

Calculating portfolio metrics in general is more I/O than CPU heavy and benefits greatly from parallelization. For this to work out OK, the footprint of the work being parallelized should be as slim as possible in order to keep the impact on overall resource consumption manageable. Speed of metrics updates also mainly depends on how beefy the database server is.

I investigated on doing all this entirely in the database using views and stored procedures, but ultimately decided that it'd be too much of a hassle to maintain and test. It would've been feasible if we only supported a single database, but that is not the reality today.

Changes in this PR come down to:

- Usage of raw SQL queries and projection classes instead of using the JDO API

- ✅ We have full control over what queries get executed which is more verbose, but also allows for further tuning

- ✅ We save the time spent by DataNucleus to compile JDOQL to SQL and maintain its cache

- ✅ We select only fields we need, reducing memory allocations

- The savings in resource usage made it feasible to parallelize project metrics calculation

- ✅ We leverage the event system, while also applying some back-pressure to not overload it when dealing with large portfolios

MetricsUpdateEvent(project)events are dispatched in batches ofNUM_WORKER_THREADS / 3. This number is high enough to provide a benefit from parallelization, and low enough so the system can still process other events.- During local testing I found that increasing the batch size to a higher number does not necessarily speed up the entire process. There is a point of diminishing returns, and it will be different for each system. I think the chosen batch size is a good happy medium.

- ✅ We leverage the event system, while also applying some back-pressure to not overload it when dealing with large portfolios

- Introduction of integration tests w/ testcontainers using all databases we support to ensure that queries are functional

- ⚠️ Running all tests now requires Docker

To clarify: This will increase total CPU usage (although for shorter periods of time) due to introduction of thread pools. Naturally, it will also increase database load while the task is running.

Test setup identical to that outlined in #1481. The following test class was profiled:

public class MetricsUpdateTaskBenchmarkTest {

@Test

public void test() {

final Properties jdoProps = JdoProperties.get();

jdoProps.setProperty(PropertyNames.PROPERTY_CONNECTION_URL, "jdbc:postgresql://localhost:5432/dtrack");

jdoProps.setProperty(PropertyNames.PROPERTY_CONNECTION_DRIVER_NAME, org.postgresql.Driver.class.getName());

jdoProps.setProperty(PropertyNames.PROPERTY_CONNECTION_USER_NAME, "dtrack");

jdoProps.setProperty(PropertyNames.PROPERTY_CONNECTION_PASSWORD, "dtrack");

final var pmf = (JDOPersistenceManagerFactory) JDOHelper.getPersistenceManagerFactory(jdoProps, "Alpine");

PersistenceManagerFactory.setJdoPersistenceManagerFactory(pmf);

new MetricsUpdateTask().inform(new MetricsUpdateEvent(MetricsUpdateEvent.Type.PORTFOLIO));

}

}

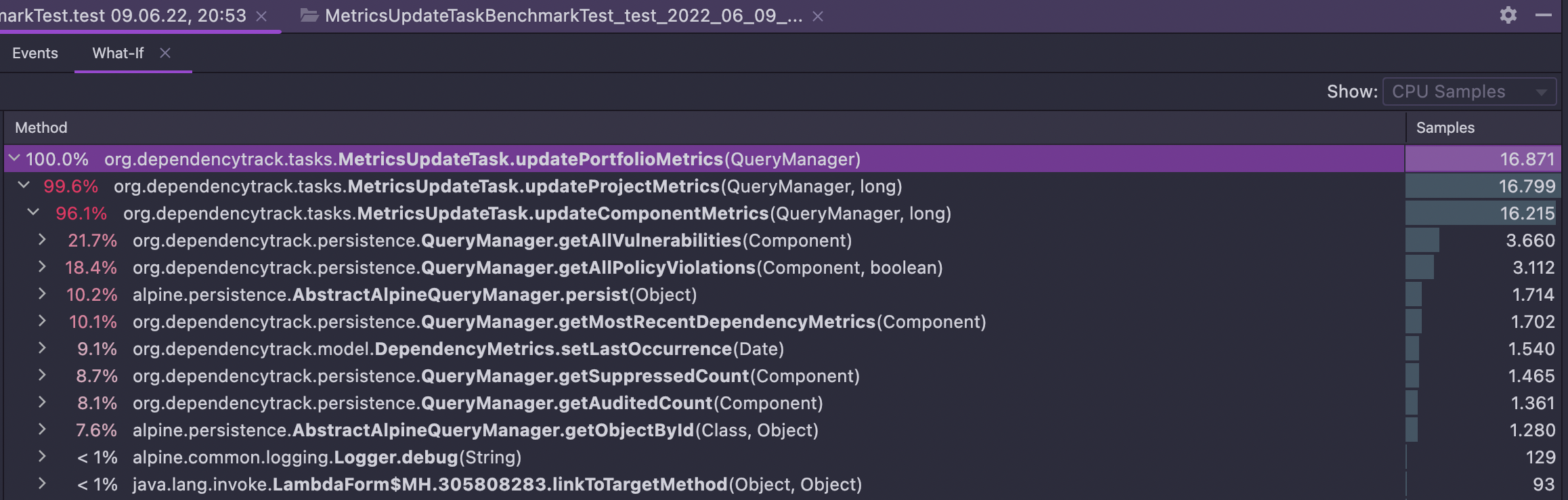

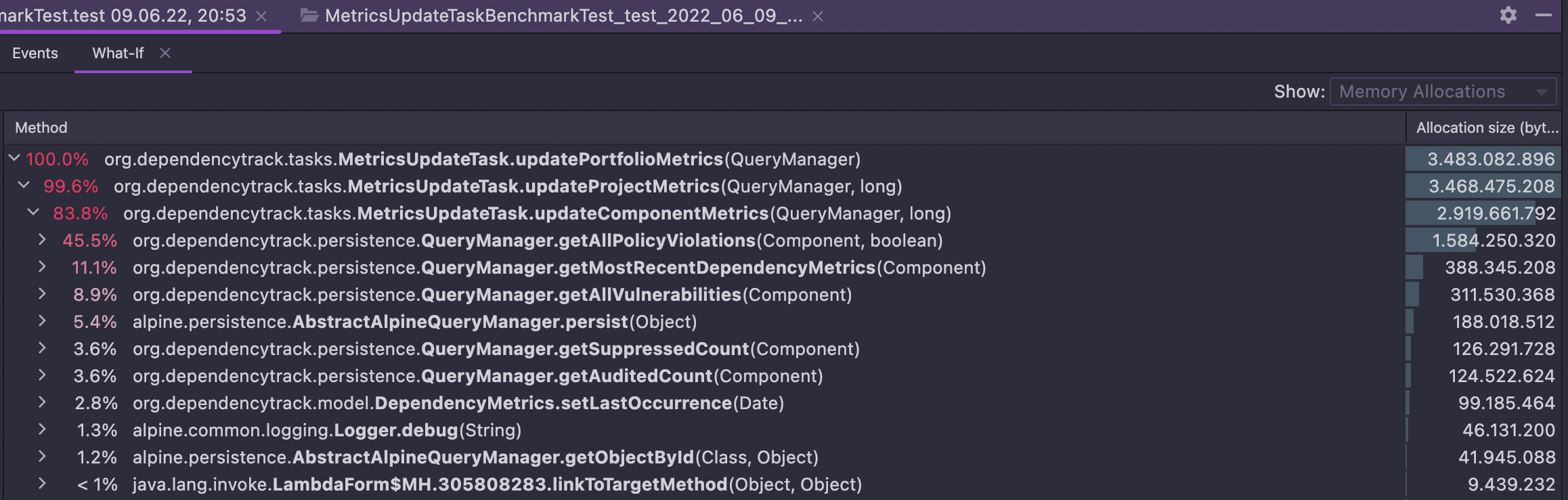

CPU samples and memory allocations with current master (ran for 2m30s):

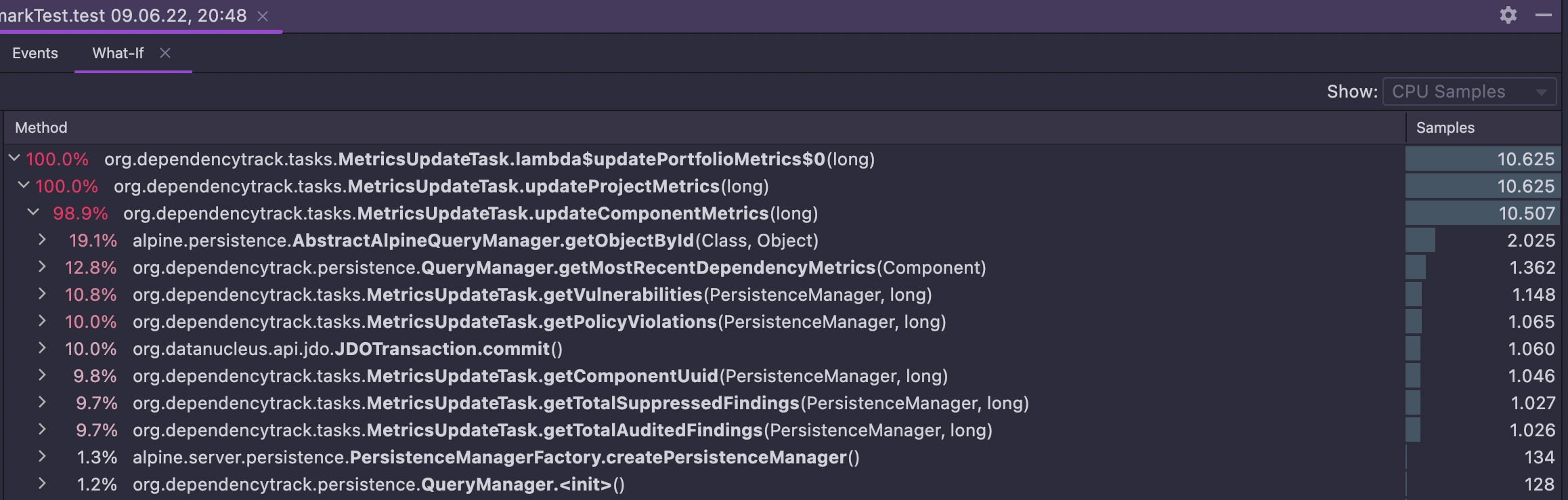

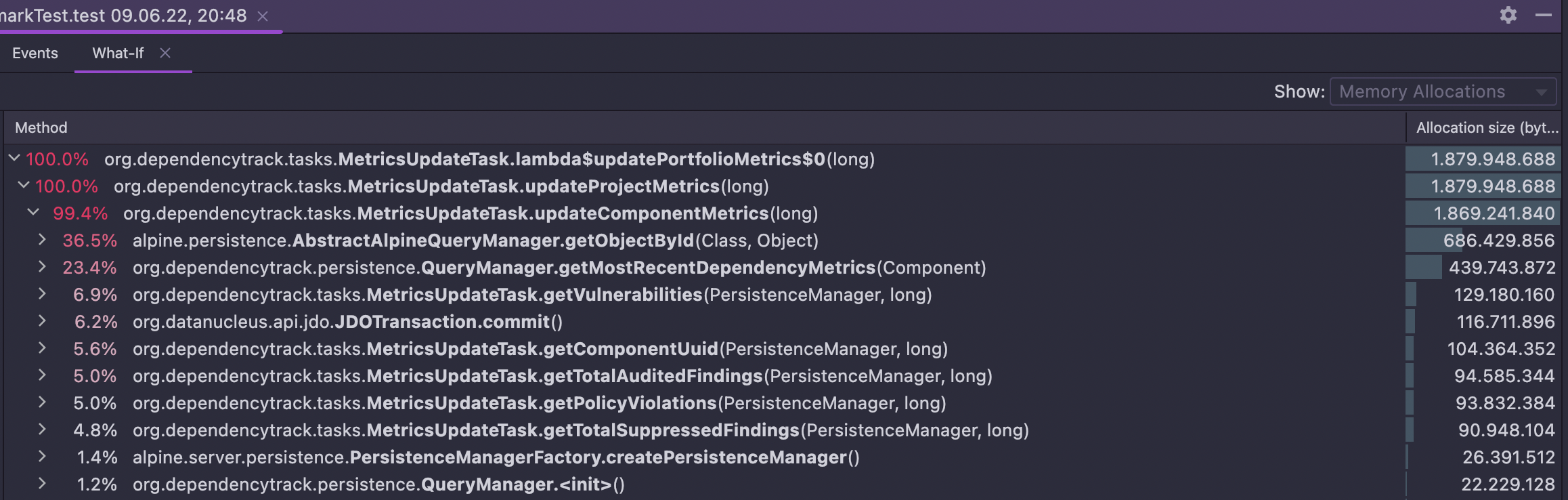

With this PR (ran for 30s):

Note that execution times will be only a fraction of the above in real deployments. I'm using Postgres in Docker, and it looks like Docker Desktop for Mac has a poor Host ➡️ Container network performance.

On my Linux workstation with 12 logical cores it's a difference of 2min with master and 8s with this PR.

I don't consider this to be the ultimate solution to improving metrics updates. But it's yet another step towards making it less of a pain point for large portfolios.

Long-term, we should remove the scheduled portfolio metrics calculation. Portfolio metrics will need to be calculated based on which projects a user or team has access to (see #1682), which we'll need to do ad-hoc. The MetricsUpdateTask would then only kick off project metrics updates, but not sum them up.

Updated the PR to leverage the event system instead of spawning a temporary ExecutorService upon every execution.

I used batching in combination with a CountDownLatch to apply some sort of back-pressure to the events being published. Other areas of DT may benefit from this or a similar approach as well (#1772).

How does this PR affect the JDO cache? Specifically, if metrics are updated and those same metrics are already in the JDO cache, the old values would likely be returned until the cache expires.

Upserts are still performed using the JDO API, so the "shared" L2 cache of the PersistenceManagerFactory will still be populated upon commit. The L1 cache is bound to PersistenceManager instances, thus very short-lived and not really relevant in that case. Only selections are done with raw SQL, and AFAIK query results are not cached by JDO / DN.

https://www.datanucleus.org/products/accessplatform/jdo/persistence.html#cache_level2

@nscuro Great job! We want to use this PR. But I see that you are still making changes. Please tell me when you plan to finish?

Thanks @shipko!

The PR in itself is pretty much ready and is only awaiting a review. As this refactoring touches a core functionality of DT, it should be reviewed by another maintainer - who just happens to be the very busy creator of the project. 😅

Awesome, thanks for the review. Going to merge this then. 🤞