dd-trace-js

dd-trace-js copied to clipboard

dd-trace-js copied to clipboard

Winston logs not being instrumented by dd-trace

Expected behaviour

Expected winston log outputs to include a dd object which includes a trace-id when traces are active

Actual behavior

No dd object in logs with active traces e.g.

{

"labels": { "env": "server" },

"level": "info",

"message": "{\"_id\":\"***\",\"company\":\"***\",\"__v\":0,\"createdAt\":\"2022-08-10T16:18:26.330Z\",\"disableVPNCheck\":true,\"disabledFeatures\":[],\"isDirectoryVisibleToAssistants\":false,\"templates\":[],\"updatedAt\":\"2022-08-11T09:03:49.659Z\",\"id\":\"**\"}",

"resource": { "type": "***" }

}

This log was created by the following block of code during an active trace

const companySettings = await tracer.trace(

"companySettings.setDisableVPNCheck",

async () => {

const settings = await upsertCompanySettings(***, {

***,

});

logger.info(JSON.stringify(settings));

console.log(tracer.scope().active().context()?.toTraceId());

return settings;

},

);

Note the console.log does log a trace ID, so this is an active trace.

In datadog we are seeing traces being sent, and we are seeing logs being sent. However, it seems now that none of our logs have the trace id so logs and traces are not linked together as they were before.

From my investigation I can see that we have logs and we have traces, we have an active trace during some log events as seen above but the winston logs are not instrumented with the dd object as they were before.

We are using datadog-winston and have been for some time now.

Our tracer is imported before any modules that use the custom logger or the tracer, see below;

server.ts // entry point

import "./tracer"; // must be imported before app

import { checkEnvironment } from "./common/environment";

import logger from "./logging/logger";

import app from "./app";

checkEnvironment();

(process as NodeJS.EventEmitter).on(

"uncaughtException",

(err: Error, origin: string) => {

logger.error("[App][Server] Uncaught Exception", {

err,

origin,

});

},

);

(process as NodeJS.EventEmitter).on(

"unhandledRejection",

(err: Error, origin: string) => {

logger.error("[App][Server] Unhandled Rejection", {

err: (err && err.stack) || err,

origin,

});

},

);

const PORT = process.env.PORT || 8000;

app.listen(PORT, () => {

logger.info(`[App][Bootstrap] App listening on port ${PORT}`);

logger.info("[App][Bootstrap] Press Ctrl+C to quit.");

});

tracer.ts // init tracer

import tracer from "dd-trace";

import { getReleaseEnvironment } from "./common/releaseEnvironment";

// initialized in a different file to avoid hoisting.

tracer.init({

env: getReleaseEnvironment(process.env.GCLOUD_PROJECT_NAME),

hostname: process.env.DD_HOSTNAME,

logInjection: true,

service: "service-name",

version: process.env.API_VERSION,

startupLogs: true,

});

export default tracer;

Environment

- Operation system: macOS

- Node.js version: 14.8

- Tracer version: 2.12.2

- Agent version:

- Relevant library versions:

In the above example, the object is stringified before being sent to the logger. The tracer needs an actual object to be able to do the injection, since a string could be in any format. Does it work if the object is not serialized externally?

In the above example, the object is stringified before being sent to the logger. The tracer needs an actual object to be able to do the injection, since a string could be in any format. Does it work if the object is not serialized externally?

So this was just an example which why I stringified that log.

I did try a console.log({msg: "hello"}) and still getting get the dd object.

Our actual logger does ultimately log an object e.g.

export const wrapLogger =

(logger: ReturnType<typeof loggerFactory>, level: Level) =>

(message: string, meta: unknown = {}) =>

logger.log({

level,

message,

...parseMetadata(meta),

});

@rochdev any ideas?

I've also had nothing but problems with this combination. No matter what, automatic injection was not working. We manually injected the logs by manually setting the trace_id and scope_id, which was working really well, until something changed (import order maybe) and then automatic injection started "working" again; however it caused our app to crash with a cryptic error:

TypeError: 'getOwnPropertyDescriptor' on proxy: trap returned descriptor for property 'dd' that is incompatible with the existing property in the proxy target

at JSON.stringify (<anonymous>)

TypeError: 'get' on proxy: property 'dd' is a read-only and non-configurable data property on the proxy target but the proxy did not return its actual value (expected '#<Object>' but got '#<Object>')

My opinion is that this library is trying to do too much magic, which instead of making it "automatic" makes it so that nothing ever works, automatic or otherwise, it is constantly breaking.

After the automatic trace injection started working, it broke a bunch of other observability related stuff, for example now our parent traces are missing, and the logs being ingested into data dog have mismatching service names (even from the same process).

Unfortunately, working with dd-trace has been really frustrating. If I had one piece of feedback, it would be stop trying to monkey patch dozens of libraries and make everything "automatic", it is impossible to account for everyone's setup. It would be much more desirable to just focus on having really simple mechanisms for manually instrumenting code that are really well documented and easy to debug. As it stands, there is just way too much going on. Sometimes reading from environment variables, sometimes reading from package.json, etc. it just feels like things are all over the place.

I managed to get the [seemingly broken] Winston integration to turn off, I first tried this with no luck:

tracer.init({

logInjection: false,

})

tracer.use('winston', false);

but that was not sufficient, it was still injecting the wrong service name, which it was getting from various places.

I had to first ensure I import the Winston logger inside the file where I import and call tracer.init(), then it finally turned off the automatic integration. From there, I can just set the expected properties service, trace_id, and span_id manually, which is ironically way easier than debugging the [seemingly broken] automatic integration.

For anyone experiencing issue, my experience is that if you turn off the automatic integrations and just do it manually, it will go much easier. Otherwise, your app is going to randomly break when you change unrelated thing that affect imports, and the library will randomly encounter "split brain" where it "randomly" sources the service name from different places, even within the context of a single request.

Even after you turn off the auto integration, and get the manual injection working; the auto integration may enable itself and rear it's "ugly" head if you aren't careful with the import order.

@joshribakoff-sm The main thing that usually breaks things is load order issues since dd-trace needs to be the very first thing loaded and initialized before anything else is imported. When that's not the case all sorts of issues can start happening, and trying to work around these problems can cause other issues as you've experienced. We're working on making all of this easier to use and making automatic and manual instrumentation work better together. In the meantime, the best approach would be to make 100% sure that dd-trace is initialized before anything else is imported so that everything just works out of the box. One sure way to do this is to load the library right at the CLI with node -r dd-trace/init index.js or as en environment variable with NODE_OPTIONS='-r dd-trace/init'.

If I had one piece of feedback, it would be stop trying to monkey patch dozens of libraries and make everything "automatic"

The main feedback that we usually get is that we're actually not automatic enough, hence the big focus on making everything work magically. Unfortunately there are cases where this doesn't work and doing things manually is needed, and we're definitely trying to make that easier as well.

In the meantime, the best approach would be to make 100% sure that dd-trace is initialized before anything else is imported so that everything just works out of the box.

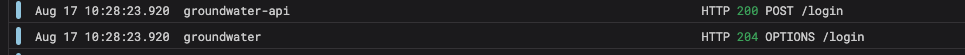

There must be more to it than this. When importing dd-trace first, or turning on automatic integration at all, too much stuff breaks. For example, our logger uses one service name and a second later uses a completed different service name:

Parent traces are missing, probably sent with the wrong service name if I had to guess.

One sure way to do this is to load the library right at the CLI with node -r dd-trace/init index.js

That's a good suggestion for anyone trying to use automatic integration!

The main feedback that we usually get is that we're actually not automatic enough, hence the big focus on making everything work magically. Unfortunately there are cases where this doesn't work and doing things manually is needed, and we're definitely trying to make that easier as well.

Yeah but it's too hard to turn off automatic integration, if you mess up the import order, it turns itself on before you can turn it off!

Perhaps you could separate manual and automatic integration into two separate libraries, this way for people like me I don't risk importing any of the automatic stuff if I do not want it.

There must be more to it than this. When importing dd-trace first, or turning on automatic integration at all, too much stuff breaks. For example, our logger uses one service name and a second later uses a completed different service name

That's definitely odd, the service name for log correlation purpose should always be the one for the process, which shouldn't change unless it's set programmatically in the tracer options. Is this something that you can reproduce easily with small snippet? Since it seems to happen when the application starts there shouldn't be too much of the app code involved.

Parent traces are missing, probably sent with the wrong service name if I had to guess.

Definitely sounds related, so I'd start with looking into the service name first.

Yeah but it's too hard to turn off automatic integration, if you mess up the import order, it turns itself on before you can turn it off!

The assumption is that if dd-trace is used, automatic instrumentation is used as well, but as you mentioned this is not always true. There is an option to disable individual plugins, which since 2.13 would prevent them from even being imported at all. We could add another option to disable auto-instrumentation entirely. However, even if that worked perfectly, we'd still be curious about why some users are unable to use auto-instrumentation in the first place since the main goal is that ideally everything just works with 0 code.

Yeah but it's too hard to turn off automatic integration, if you mess up the import order, it turns itself on before you can turn it off!

This is an issue that is likely to stay because of the current API design with init and use being separated. That API is used a lot and is not something we can deprecate easily. However, it should be possible in the latest version to call .use() before calling .init() so that the plugin never has the chance to be enabled in the first place. Another option is to use the DD_TRACE_DISABLED_PLUGINS environment variable.

Perhaps you could separate manual and automatic integration into two separate libraries, this way for people like me I don't risk importing any of the automatic stuff if I do not want it.

This is definitely something we're looking into. There are some environments where auto-instrumentation doesn't even make sense in the first place, like for example CloudFlare workers and similar environments. This also applies to other features like profiling and runtime metrics.

That's definitely odd, the service name for log correlation purpose should always be the one for the process

What it was is that sometimes we log, and out of all of those logs, only some of them are within traces. Because the automatic log injection was not working out for us, we had done manual log injection:

export const logFormat = (tracer?: Tracer) =>

printf((props: any) => {

const span = tracer?.scope().active();

const record = props;

if (span) {

tracer?.inject(span.context(), formats.LOG, record);

}

return JSON.stringify(props);

});

If the log entries have a trace associated, dd-trace assigns the service property. If the log entries occur outside of a trace, no service gets injected and so the DD agent assigns it based on a Kubernetes deployment name or YAML tag.

Parent traces are missing, probably sent with the wrong service name if I had to guess. Definitely sounds related, so I'd start with looking into the service name first.

I am not sure why, and I cannot replicate it locally. I realized it would not be related to the service name, because DataDog specifically shows distributed traces across multiple service names -- I don't think on DataDogs side they would drop part of a trace from one service and not others, but I could be wrong. If it keeps happening, I can turn on DD_TRACE_DEBUG and try to see more details about what is going on.

, it should be possible in the latest version to call .use() before calling .init() so that the plugin never has the chance to be enabled in the first place

That doesn't work in my project. It still enables log injection, and that is also with logInjection: false 🤣 . I think the disconnect is that as a library author, it is maybe easy to suggest fixing the import order, but then as a consumer maintaining a large codebase, there are many reasons someone may import from dd-trace, perhaps even just importing a Typescript type. Incidentally, one refactor that broke dd-trace was that we had to restructure our code so there are multiple apps and bootstrapping the app happens in a reusable library, and the top level apps imports the reusable bootstrap utility. Probably these apps transitively imported dd-trace or our logger unintentionally initializing things in the wrong order. In general, we don't want our observability to break because an import statement was moved around.

we'd still be curious about why some users are unable to use auto-instrumentation in the first place since the main goal is that ideally everything just works with 0 code.

There are many reasons. The import order issue mentioned above. Also, for example, currently, your integration for graphql is written in such a way that if a resolver fails, the span is marked as an error. However, if a user is not logged in that is not an error from the sense of APM, or our error rate SLOs. We don't want to be paged because someone was penetration testing the API at 2am 😆 I am actually monkey patching dd-trace library to fix this issue in my codebase, using patch-package, so I do want as much "automatic" integration as possible, but not the part where you treat ever error as a failed span, for example. For a while I was able to use your "hooks", until I found another issue where the hook never gets called if we mess up our import order 😆 , so I just patch the library and do everything as manually as possible whenever there are issues, and that seems to work as a strategy for me.

This is definitely something we're looking into. There are some environments where auto-instrumentation doesn't even make sense in the first place,

Awesome, glad to hear that!

I had to first ensure I import the Winston logger inside the file where I import and call

tracer.init(), then it finally turned off the automatic integration. From there, I can just set the expected propertiesservice,trace_id, andspan_idmanually, which is ironically way easier than debugging the [seemingly broken] automatic integration.

@joshribakoff-sm how do you manually set trace_id etc?

@chris-hinds I know this is an old issue but do you know if it's still a problem? I see that Roch merged a change which appears to have solved it back in late 2022.

I'll close this for now but we can reopen it if it's still an issue.

For future viewers, I would recommend using pinojs for logging: https://github.com/pinojs/pino Theres a "@types/pino" dependency as well for Typescript. Winston isn't production quality and fails at high concurrency.