banditpylib

banditpylib copied to clipboard

banditpylib copied to clipboard

Distributed Bandit setup

Hi, thanks for this - it's a really awesome work! I'm interested in the decentralized/distributed bandit setup. Do you have any pointers for extending your code - e.g. to support communication between players?

Hi Chester,

Thanks. That's a great suggestion! Actually, I have been considering implementing the communication protocol in our recent paper "Collaborative Learning with Limited Interaction: Tight Bounds for Distributed Exploration in Multi-armed Bandits". However, it is not finished yet. I am curious about which communication protocol you are interested in.

Hey Chao, thanks to the reference - it looks very relevant to my own problem! I look forward to reading it. I am interested in collaborative best arm identification in the heterogenous multi-player setting with/without collisions & communication.

That's interesting. I am curious why collision is needed in pure exploration setting?

Last year were some nice developments in general bounds for regret minimization in multiplayer informed/uninformed collision setting: https://arxiv.org/abs/1904.12233

It may not be as interesting as regret minimization, but the tradeoffs for pure exploration should be generally similar, and the difficulties should still be there (for uninformed collisions players don't know if loss corresponds to bad arm or collision)

I see. It sounds an interesting direction.

I made some minor change. Have you used pylint? It is a tool to make your code more stylized.

Sounds good - I've used pylint a couple times before. I'll start verifying for future PRs. I've also seen this plugin used on a couple gh os projects: pep8speaks. It could be helpful if you don't mind the additional comment clutter.

I can take a crack at a simple decentralized protocol this afternoon/this weekend - maybe something like homogeneous players with no collisions/wait modes & with universal communication.

I tried flake8 (a wrapper of pep8). And I gave up since there are too many comments. It was a pain to customize it. Not sure this plugin is easier to customize. Anyway, we can have a try.

Just refactored the structure.

Just finshed refactoring singleplayer policy. You can sync and take a look.

Awesome. I will take a look tomorrow afternoon and can try extending to best arm selection this weekend.

Hi,

Want to finish up Feraud's decentralized exploration algorithm before I take a break from implementing previous work & focus on something new.

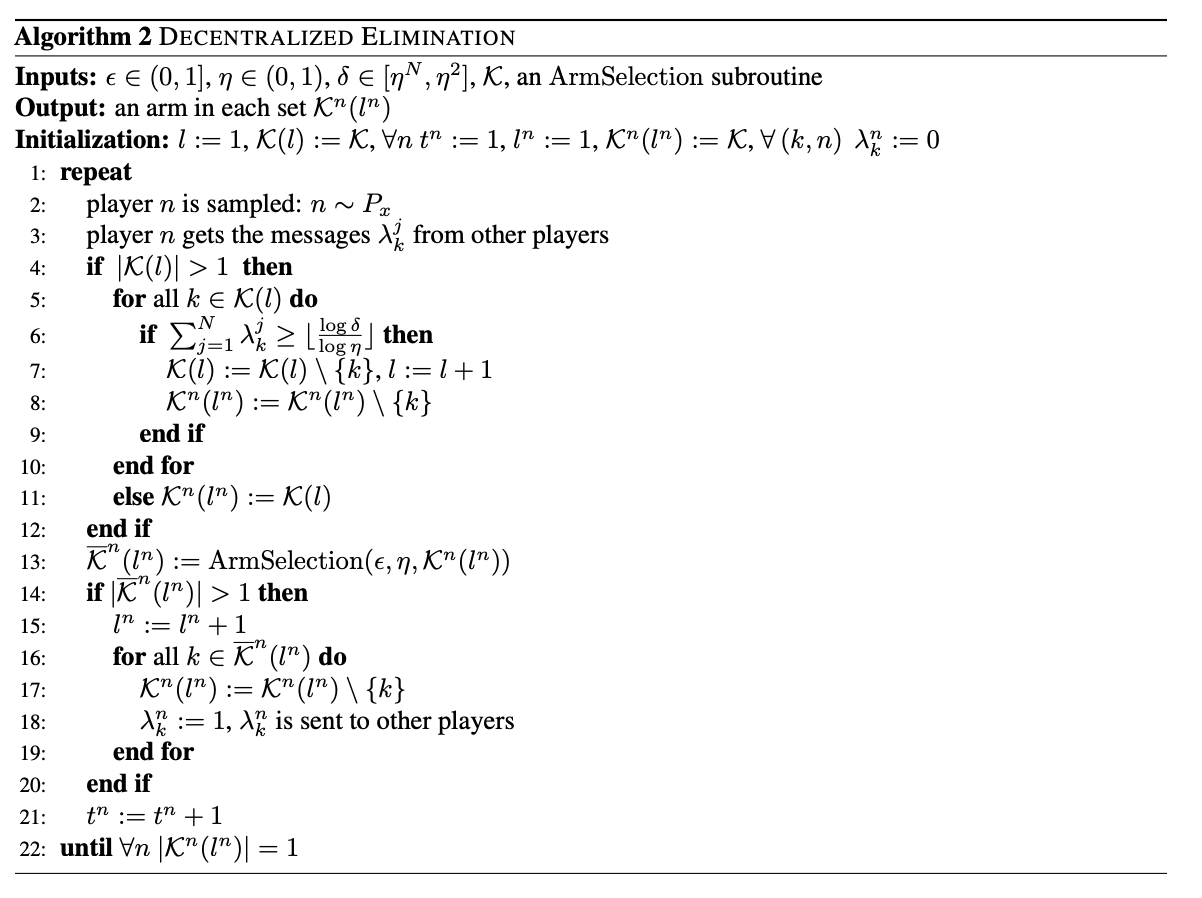

Do you have tips on the correct way to implement a kind of meta learner (which references an arm selection scheme). In particular, the call to ArmSelection in line 13 refers to a selection scheme which returns eliminated actions. If it is okay for a learner to pull its own arms and observe the feedback, it should be fine, but I'm not sure if this is okay from a design perspective (from what I understand, learners primarily only observe stats from em_arm, and the protocol handles interface to the bandit & feedback?).

Alternatively, I could implement a separate protocol to take the place of this meta learner.

Here is the pseudocode for reference:

It’s a great question. From the design perspective, I think we should not let the learner communicate with the bandit directly since we can not prevent the learner from hacking the environment. But, according to the pure exploration setting, we should provide such a schema. To get around this, we can hide the key parameters of the bandit from outside. In the meantime, we should monitor the learners in the protocol such that none of them use budgets more than the given one.

BTW, I have refactored the config. Now you can compare the policies under different protocols or with different number of players.

I have re-checked your decentralizedbaiprotocol and made some modifications. Not sure I have did it right. Anyway, we should be careful about the decentralized best arm identification protocol.

Can you help move these algorithms under the current branch?