stable-diffusion-webui

stable-diffusion-webui copied to clipboard

stable-diffusion-webui copied to clipboard

[Feature Request]: Offset noise - improved? technic to generate txt2img

Is there an existing issue for this?

- [X] I have searched the existing issues and checked the recent builds/commits

What would your feature do ?

Better denoising by train/generating Image See more details here: https://www.youtube.com/watch?v=cVxQmbf3q7Q

Curious when it comes to SD and WebUI :)

The change is very easy to implement (11:49S): https://www.youtube.com/watch?v=cVxQmbf3q7Q&t=701s

Comparison (15:21s): https://www.youtube.com/watch?v=cVxQmbf3q7Q&t=921s

Proposed workflow

- This is idea in YT video

Additional information

No response

@Centurion-Rome do you have some pictures comparison?

do you have some pictures comparison?

https://www.youtube.com/watch?v=cVxQmbf3q7Q&t=921s I also updated the description with link to some comparison ;)

ok this i guess is comparison:

This is for training, not related to any sort of webui functionality. If the model is trained with this offset noise technique, the webui will support it.

btw, link to youtube video is ok as intro, but at the end not much will happen unless you have a link to a doc or a repo.

There is a blog post in video description.Maybe that will help https://www.crosslabs.org/blog/diffusion-with-offset-noise

yes, that helps a lot :)

i took a quick look at the code and the change would be easy to implement and can be made tunable. the question is where should be it be applied?

- obious choice would be in

k_diffusionmodule hijaack so it would apply to model fine tuning,

but it would also require extra handling for higher batch sizes as they work with noise tensors placed in queues - same can be done for textual inversions or even lora

but no idea what would impact be?

Here's the comparison from the article. This simple technique (to be applied in the noising steps of Stable Diffusion image generation) enables Stable Diffusion to generate images with dramatic lighting. The current implementation, by contrast, tends to produce dull images, due to its tendency to produce images with 0.5 gray as the mean color.

One impact is that it's been shown LoRA models with this change cannot be mixed with LoRA models that don't use this change, it cooks the output.

Is it also safe to say most of the training features in this repo are considered legacy at this point? I feel most people have moved onto using other repos specifically for this purpose. kohya-ss/sd-scripts has already implemented this functionality into it's own trainer. Multi-resolution bucketing hasn't even been implemented in the webui trainer.

@catboxanon can you clarify your points?

you say loras with this change cannot be mixed - where did you see that?

when i mentioned lora, i did refer to kohya's implementation and i don't see any offset noise handling there - in either train_network, train_db or fine_tune (quite the opposite, all three have standard noise handling).

and how is multi-resolution bucketing relevant to this convo?

https://github.com/kohya-ss/sd-scripts/blob/f0ae7eea950b93b1550dc34a782da3bafda2a8c0/train_network.py#L359-L361

LoRAs being mixed with and without this training change were posted on 4chan, unfortunately I don't have an example on hand currently (nor one that's probably SFW anyway).

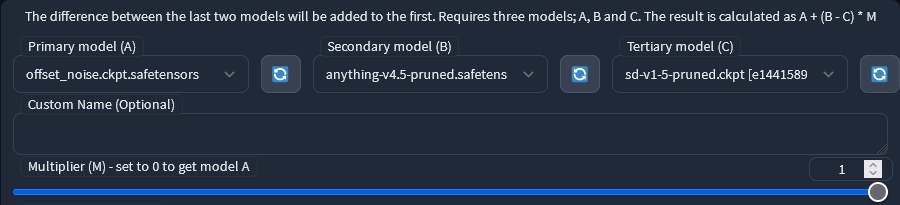

Not sure why I hadn't tried sooner, but you can simply do an add difference merge to bring similar results to any model. Same prompt and seed, the output speaks for itself.

Not sure why I hadn't tried sooner, but you can simply do an add difference merge to bring similar results to any model.

that sounds interesting, can you elaborate on the workflow you used to get this?

The result is described in the filename, but this is all it is:

Following the same method used to "convert" any model into an inpainting model. https://old.reddit.com/r/StableDiffusion/comments/zyi24j/how_to_turn_any_model_into_an_inpainting_model/

Following the same method used to "convert" any model into an inpainting model. https://old.reddit.com/r/StableDiffusion/comments/zyi24j/how_to_turn_any_model_into_an_inpainting_model/

@catboxanon oh, very cool, is it this? https://huggingface.co/Xynon/noise-offset/tree/main

I tried with the model above, the effect would produce darker atmospheres and lighting ~~particularly very prevalent at step 8-10, DPM++ 2M Karras with cfg 7~~ (not sure if that's just my prompt). It seems like a good finetune model to keep for quick merge tests.

Yeah, that looks to be the same. The actual upload is at the end of the original blog post.

Further updates on this:

LoRA model for applying to other models on-the-fly: https://civitai.com/models/13941/epinoiseoffset Pyramid noise (possibly better alternative to the offset noise technique): https://wandb.ai/johnowhitaker/multires_noise/reports/Multi-Resolution-Noise-for-Diffusion-Model-Training--VmlldzozNjYyOTU2

you can play with merging if you like https://huggingface.co/ClashSAN/pyramid_noise_test_600steps_08discount/tree/main is this issue resolved?