tkseem

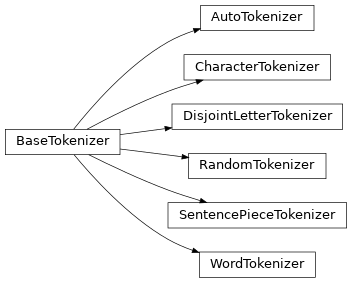

An Arabic tokenization library that provides several tokenization algorithms, including word, character, SentencePiece, disjoint-letter, and random tokenizers.

tkseem (تقسيم) is a tokenization library that encapsulates different approaches for tokenization and preprocessing of Arabic text.

Documentation

Please visit readthedocs for the full documentation.

Installation

pip install tkseem

Usage

Tokenization

import tkseem as tk

tokenizer = tk.WordTokenizer()

tokenizer.train('samples/data.txt')

tokenizer.tokenize("السلام عليكم")

tokenizer.encode("السلام عليكم")

tokenizer.decode([536, 829])
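
To make the roles of `train`, `tokenize`, `encode`, and `decode` concrete, here is a minimal sketch of a whitespace word tokenizer written with the standard library only. It is not tkseem's implementation: the class `ToyWordTokenizer`, the method `train_on_text`, and the `<UNK>` token are hypothetical names chosen for illustration, and real ids from tkseem will differ.

```python
from collections import Counter


class ToyWordTokenizer:
    """Illustrative whitespace tokenizer; NOT tkseem's implementation."""

    def __init__(self, unk_token="<UNK>"):
        self.unk_token = unk_token
        self.vocab = [unk_token]            # id -> token
        self.token_to_id = {unk_token: 0}   # token -> id

    def train_on_text(self, text):
        # Build the vocabulary, assigning lower ids to more frequent words.
        counts = Counter(text.split())
        for token, _ in counts.most_common():
            if token not in self.token_to_id:
                self.token_to_id[token] = len(self.vocab)
                self.vocab.append(token)

    def tokenize(self, text):
        # Words that were never seen in training map to the unknown token.
        return [t if t in self.token_to_id else self.unk_token
                for t in text.split()]

    def encode(self, text):
        # Tokens -> integer ids.
        return [self.token_to_id[t] for t in self.tokenize(text)]

    def decode(self, ids):
        # Integer ids -> tokens.
        return [self.vocab[i] for i in ids]


toy = ToyWordTokenizer()
toy.train_on_text("السلام عليكم ورحمة الله السلام")
tokens = toy.tokenize("السلام عليكم")
ids = toy.encode("السلام عليكم")
roundtrip = toy.decode(ids)
```

In this sketch, decoding the encoded ids gives back the original tokens, which is the round trip the tkseem calls above are meant to demonstrate.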

Caching

tokenizer.tokenize(open('data/raw/train.txt').read(), use_cache = True)
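
The call above reads the whole corpus with a bare `open(...)`. A slightly more defensive sketch reads the file inside a context manager with an explicit UTF-8 encoding, which avoids platform-dependent defaults when decoding Arabic text. The temporary file below is a stand-in so the snippet runs anywhere; the tkseem call itself is left as a comment because it needs a trained tokenizer.

```python
import tempfile
from pathlib import Path

# Stand-in corpus so the sketch is runnable; replace with your own path.
corpus_path = Path(tempfile.mkdtemp()) / "train.txt"
corpus_path.write_text("السلام عليكم\n", encoding="utf-8")

# The context manager closes the file, and the explicit encoding keeps
# decoding independent of the platform's default.
with open(corpus_path, encoding="utf-8") as f:
    text = f.read()

# With tkseem installed and a trained tokenizer, the call shown above becomes:
# tokenizer.tokenize(text, use_cache=True)
```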

Save and Load

import tkseem as tk

tokenizer = tk.WordTokenizer()

tokenizer.train('samples/data.txt')

# save the model

tokenizer.save_model('vocab.pl')

# load the model

tokenizer = tk.WordTokenizer()

tokenizer.load_model('vocab.pl')
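
Saving a model means training does not have to be repeated in a later session. As an illustration of the general save and load round trip, the sketch below pickles a toy vocabulary and restores it. This is an assumption for illustration only: the on-disk format tkseem uses is not described here, and the `vocab` dictionary and file name are hypothetical.

```python
import pickle
import tempfile
from pathlib import Path

# Hypothetical toy vocabulary standing in for a trained tokenizer's state.
vocab = {"<UNK>": 0, "السلام": 1, "عليكم": 2}

path = Path(tempfile.mkdtemp()) / "toy_vocab.pkl"

# Save: serialize the vocabulary to disk.
with open(path, "wb") as f:
    pickle.dump(vocab, f)

# Load: restore it, for example in a fresh session.
with open(path, "rb") as f:
    restored = pickle.load(f)
```

Pickle files can execute arbitrary code when loaded, so only load files from sources you trust.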

Model Agnostic

import tkseem as tk

import time

import seaborn as sns

import pandas as pd

def calc_time(fun):
    # time how long it takes to create and train a tokenizer
    start_time = time.time()
    fun().train('samples/data.txt')
    return time.time() - start_time

running_times = {}

running_times['Word'] = calc_time(tk.WordTokenizer)

running_times['SP'] = calc_time(tk.SentencePieceTokenizer)

running_times['Random'] = calc_time(tk.RandomTokenizer)

running_times['Disjoint'] = calc_time(tk.DisjointLetterTokenizer)

running_times['Char'] = calc_time(tk.CharacterTokenizer)
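
The same timing pattern works for any callable. The sketch below is a generic variant that uses `time.perf_counter` and keeps the best of several runs to reduce noise. The helper `time_call` and the two stand-in workloads (`str.split` and `list`) are hypothetical and only replace the tkseem tokenizers so the snippet runs without a training file.

```python
import time


def time_call(fn, *args, repeats=3, **kwargs):
    """Return the best wall-clock time in seconds over `repeats` calls."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args, **kwargs)
        best = min(best, time.perf_counter() - start)
    return best


text = "السلام عليكم " * 1000

# Stand-in workloads: split into words vs. split into characters.
timings = {
    "split_words": time_call(text.split),
    "split_chars": time_call(list, text),
}

# Name of the fastest workload in this run.
fastest = min(timings, key=timings.get)
```

A dictionary like `timings` or `running_times` has one value per tokenizer name, so it can be passed straight to a plotting library for a bar chart.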

Notebooks

We show how to use tkseem to train some NLP models.