Ilya Matiach

Ilya Matiach

I had a similar issue, using an older version of spacy (2.3.7) package on pypi fixed it, looks like the tokenizer code needs to be updated to latest spacy

see related issue: https://github.com/interpretml/interpret-text/issues/182

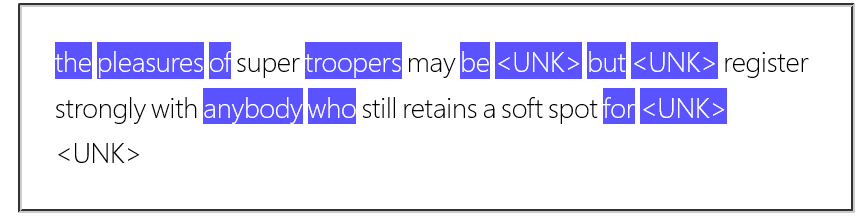

I'm guessing this is after doing the full run? doing a quick run shows this:

I think we need to just move the dashboard to the microsoft/responsible-ai-toolbox repository now

I had a similar issue, using an older version of spacy (2.3.7) package on pypi fixed it, looks like the tokenizer code needs to be updated to latest spacy

see related issue: https://github.com/interpretml/interpret-text/issues/176

I was thinking of adding this fix directly to https://github.com/Microsoft/LightGBM, but I wanted to make sure the issue is actually in the native code there and not shap.

@slundberg sorry, to be sure I understand, by output 1D or 2D array you mean the predicted value (regression) or predicted probabilities per class (classification): "But if the model object...

@slundberg sorry to be persistent about this issue, but I would really like to resolve this in either shap or lightgbm/xgboost. This issue seems like a bad experience and makes...

@slundberg I think it would make sense for all shap values from TreeExplainer, no matter what the shape of the prediction probabilities are, to be consistent based on whether we...