NeuralNetwork-Examples


Unexpected results when porting from Theano to Keras

Hi, I re-implemented the CountCeption model in Keras, but I get unexpected results and don't know how to fix them. Here is my model:

```python
from keras.layers import Convolution2D, Input, Flatten, Activation
from keras.layers.merge import concatenate
from keras.layers.normalization import BatchNormalization
from keras.models import Model
from keras.layers.convolutional import ZeroPadding2D
from keras import optimizers
from keras.losses import mean_squared_error, mean_absolute_error, categorical_crossentropy
from keras.layers.advanced_activations import LeakyReLU
import keras.backend as K
import numpy as np


class CountCeption(object):

    def __init__(self, frame_size, stride, learning_rate=1e-4, padding=0, ef=1):
        self.frame_size = frame_size
        self.stride = stride
        self.padding = padding
        self.learning_rate = learning_rate
        self.ef = ef
        self.build_model()

    def ConvFactory(self, inp, num_filter, filter_size, pad=0):
        # Explicit zero padding followed by an unpadded ('valid') convolution
        inp = ZeroPadding2D(padding=(pad, pad), data_format='channels_last')(inp)
        inp = Convolution2D(num_filter, filter_size, strides=(self.stride, self.stride),
                            padding='valid', data_format='channels_last',
                            kernel_initializer='glorot_uniform')(inp)
        # Activation first, then batch normalization
        inp = LeakyReLU(alpha=0.01)(inp)
        return BatchNormalization()(inp)

    def Inception(self, inp, first_channel, second_channel):
        # 1x1 and 3x3 branches, concatenated along the last (channel) axis
        conv1x1 = self.ConvFactory(inp, num_filter=first_channel, filter_size=1, pad=0)
        conv3x3 = self.ConvFactory(inp, num_filter=second_channel, filter_size=3, pad=1)
        return concatenate([conv1x1, conv3x3])

    def build_model(self):
        self.inp = Input(name='Input', shape=(self.frame_size, self.frame_size, 3), dtype='float32')
        self.net = self.ConvFactory(self.inp, filter_size=3, num_filter=64, pad=self.padding)
        self.net = self.Inception(self.net, 16, 16)
        self.net = self.Inception(self.net, 16, 32)
        self.net = self.ConvFactory(self.net, filter_size=14, num_filter=16)
        self.net = self.Inception(self.net, 112, 48)
        self.net = self.Inception(self.net, 64, 32)
        self.net = self.Inception(self.net, 40, 40)
        self.net = self.Inception(self.net, 32, 96)
        self.net = self.ConvFactory(self.net, filter_size=18, num_filter=32)
        self.net = self.ConvFactory(self.net, filter_size=1, num_filter=64)
        self.net = self.ConvFactory(self.net, filter_size=1, num_filter=64)
        self.out = self.ConvFactory(self.net, filter_size=1, num_filter=1)

        self.model = Model(inputs=self.inp, outputs=self.out)
        self.model.summary()

        # Predicted count: sum of the output map over height, width and channel, divided by ef
        self.count = K.sum(self.out, axis=(1, 2, 3)) / self.ef
        self.predict = K.function([self.inp, K.learning_phase()], [self.out, self.count])

    def compile_model(self):
        self.label = K.placeholder(ndim=4, dtype='float32')
        self.label_count = K.placeholder(ndim=2, dtype='float32')
        self.loss_pixel = K.mean(K.abs(self.label - self.out))
        self.loss_count = K.mean(K.abs(self.label_count - self.count))

        self.optimizer = optimizers.Adam(lr=self.learning_rate)
        # self.optimizer = optimizers.SGD(lr=self.learning_rate)

        # Only loss_pixel drives the weight updates; loss_count is just reported
        self.update = self.optimizer.get_updates(self.model.trainable_weights, [], loss=self.loss_pixel)

        self.test_step = K.function([self.inp, self.label, self.label_count, K.learning_phase()],
                                    [self.loss_pixel, self.loss_count])
        self.train_step = K.function([self.inp, self.label, self.label_count, K.learning_phase()],
                                     [self.loss_pixel, self.loss_count, self.out], updates=self.update)
```
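To make the shapes in `build_model` and `compile_model` concrete, here is a minimal NumPy sketch of the same count and loss computations. Random arrays stand in for `self.out`, `self.label` and `self.label_count`; the batch size, map size and `ef` value are arbitrary assumptions for illustration. It relies on NumPy broadcasting, which the TensorFlow backend also follows for element-wise subtraction.

```python
import numpy as np

# Illustrative sizes only (assumptions, not taken from the training setup).
batch, h, w, ef = 4, 288, 288, 1.0

rng = np.random.default_rng(0)
out = rng.random((batch, h, w, 1)).astype(np.float32)    # stands in for self.out
label = rng.random((batch, h, w, 1)).astype(np.float32)  # stands in for self.label

# Same reduction as K.sum(self.out, axis=(1, 2, 3)) / self.ef: one count per image.
count = out.sum(axis=(1, 2, 3)) / ef

# Pixel-wise L1 loss, as in self.loss_pixel.
loss_pixel = np.mean(np.abs(label - out))

# label_count is declared with ndim=2, so here it is fed with shape (batch, 1).
label_count = count.reshape(batch, 1) + 1.0

diff_2d = label_count - count        # (batch, 1) - (batch,) broadcasts to (batch, batch)
diff_1d = label_count[:, 0] - count  # element-wise, shape (batch,)

print(count.shape, diff_2d.shape, diff_1d.shape)
```

If `label_count` is really fed as `(batch, 1)`, the reported `loss_count` compares every predicted count with every label count in the batch rather than only with its matching label, which may be worth ruling out when reading the count column of the log.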

```
>> model = CountCeption(256, stride=1, padding=32)
Layer (type)                     Output Shape          Param #     Connected to
====================================================================================================
Input (InputLayer)               (None, 256, 256, 3)   0
____________________________________________________________________________________________________
zero_padding2d_37 (ZeroPadding2D (None, 320, 320, 3)   0           Input[0][0]
____________________________________________________________________________________________________
conv2d_37 (Conv2D)               (None, 318, 318, 64)  1792        zero_padding2d_37[0][0]
____________________________________________________________________________________________________
leaky_re_lu_37 (LeakyReLU)       (None, 318, 318, 64)  0           conv2d_37[0][0]
____________________________________________________________________________________________________
batch_normalization_37 (BatchNor (None, 318, 318, 64)  256         leaky_re_lu_37[0][0]
____________________________________________________________________________________________________
zero_padding2d_38 (ZeroPadding2D (None, 318, 318, 64)  0           batch_normalization_37[0][0]
____________________________________________________________________________________________________
zero_padding2d_39 (ZeroPadding2D (None, 320, 320, 64)  0           batch_normalization_37[0][0]
____________________________________________________________________________________________________
conv2d_38 (Conv2D)               (None, 318, 318, 16)  1040        zero_padding2d_38[0][0]
____________________________________________________________________________________________________
conv2d_39 (Conv2D)               (None, 318, 318, 16)  9232        zero_padding2d_39[0][0]
____________________________________________________________________________________________________
leaky_re_lu_38 (LeakyReLU)       (None, 318, 318, 16)  0           conv2d_38[0][0]
____________________________________________________________________________________________________
leaky_re_lu_39 (LeakyReLU)       (None, 318, 318, 16)  0           conv2d_39[0][0]
____________________________________________________________________________________________________
batch_normalization_38 (BatchNor (None, 318, 318, 16)  64          leaky_re_lu_38[0][0]
____________________________________________________________________________________________________
batch_normalization_39 (BatchNor (None, 318, 318, 16)  64          leaky_re_lu_39[0][0]
____________________________________________________________________________________________________
concatenate_13 (Concatenate)     (None, 318, 318, 32)  0           batch_normalization_38[0][0]
                                                                   batch_normalization_39[0][0]
____________________________________________________________________________________________________
zero_padding2d_40 (ZeroPadding2D (None, 318, 318, 32)  0           concatenate_13[0][0]
____________________________________________________________________________________________________
zero_padding2d_41 (ZeroPadding2D (None, 320, 320, 32)  0           concatenate_13[0][0]
____________________________________________________________________________________________________
conv2d_40 (Conv2D)               (None, 318, 318, 16)  528         zero_padding2d_40[0][0]
____________________________________________________________________________________________________
conv2d_41 (Conv2D)               (None, 318, 318, 32)  9248        zero_padding2d_41[0][0]
____________________________________________________________________________________________________
leaky_re_lu_40 (LeakyReLU)       (None, 318, 318, 16)  0           conv2d_40[0][0]
____________________________________________________________________________________________________
leaky_re_lu_41 (LeakyReLU)       (None, 318, 318, 32)  0           conv2d_41[0][0]
____________________________________________________________________________________________________
batch_normalization_40 (BatchNor (None, 318, 318, 16)  64          leaky_re_lu_40[0][0]
____________________________________________________________________________________________________
batch_normalization_41 (BatchNor (None, 318, 318, 32)  128         leaky_re_lu_41[0][0]
____________________________________________________________________________________________________
concatenate_14 (Concatenate)     (None, 318, 318, 48)  0           batch_normalization_40[0][0]
                                                                   batch_normalization_41[0][0]
____________________________________________________________________________________________________
zero_padding2d_42 (ZeroPadding2D (None, 318, 318, 48)  0           concatenate_14[0][0]
____________________________________________________________________________________________________
conv2d_42 (Conv2D)               (None, 305, 305, 16)  150544      zero_padding2d_42[0][0]
____________________________________________________________________________________________________
leaky_re_lu_42 (LeakyReLU)       (None, 305, 305, 16)  0           conv2d_42[0][0]
____________________________________________________________________________________________________
batch_normalization_42 (BatchNor (None, 305, 305, 16)  64          leaky_re_lu_42[0][0]
____________________________________________________________________________________________________
zero_padding2d_43 (ZeroPadding2D (None, 305, 305, 16)  0           batch_normalization_42[0][0]
____________________________________________________________________________________________________
zero_padding2d_44 (ZeroPadding2D (None, 307, 307, 16)  0           batch_normalization_42[0][0]
____________________________________________________________________________________________________
conv2d_43 (Conv2D)               (None, 305, 305, 112) 1904        zero_padding2d_43[0][0]
____________________________________________________________________________________________________
conv2d_44 (Conv2D)               (None, 305, 305, 48)  6960        zero_padding2d_44[0][0]
____________________________________________________________________________________________________
leaky_re_lu_43 (LeakyReLU)       (None, 305, 305, 112) 0           conv2d_43[0][0]
____________________________________________________________________________________________________
leaky_re_lu_44 (LeakyReLU)       (None, 305, 305, 48)  0           conv2d_44[0][0]
____________________________________________________________________________________________________
batch_normalization_43 (BatchNor (None, 305, 305, 112) 448         leaky_re_lu_43[0][0]
____________________________________________________________________________________________________
batch_normalization_44 (BatchNor (None, 305, 305, 48)  192         leaky_re_lu_44[0][0]
____________________________________________________________________________________________________
concatenate_15 (Concatenate)     (None, 305, 305, 160) 0           batch_normalization_43[0][0]
                                                                   batch_normalization_44[0][0]
____________________________________________________________________________________________________
zero_padding2d_45 (ZeroPadding2D (None, 305, 305, 160) 0           concatenate_15[0][0]
____________________________________________________________________________________________________
zero_padding2d_46 (ZeroPadding2D (None, 307, 307, 160) 0           concatenate_15[0][0]
____________________________________________________________________________________________________
conv2d_45 (Conv2D)               (None, 305, 305, 64)  10304       zero_padding2d_45[0][0]
____________________________________________________________________________________________________
conv2d_46 (Conv2D)               (None, 305, 305, 32)  46112       zero_padding2d_46[0][0]
____________________________________________________________________________________________________
leaky_re_lu_45 (LeakyReLU)       (None, 305, 305, 64)  0           conv2d_45[0][0]
____________________________________________________________________________________________________
leaky_re_lu_46 (LeakyReLU)       (None, 305, 305, 32)  0           conv2d_46[0][0]
____________________________________________________________________________________________________
batch_normalization_45 (BatchNor (None, 305, 305, 64)  256         leaky_re_lu_45[0][0]
____________________________________________________________________________________________________
batch_normalization_46 (BatchNor (None, 305, 305, 32)  128         leaky_re_lu_46[0][0]
____________________________________________________________________________________________________
concatenate_16 (Concatenate)     (None, 305, 305, 96)  0           batch_normalization_45[0][0]
                                                                   batch_normalization_46[0][0]
____________________________________________________________________________________________________
zero_padding2d_47 (ZeroPadding2D (None, 305, 305, 96)  0           concatenate_16[0][0]
____________________________________________________________________________________________________
zero_padding2d_48 (ZeroPadding2D (None, 307, 307, 96)  0           concatenate_16[0][0]
____________________________________________________________________________________________________
conv2d_47 (Conv2D)               (None, 305, 305, 40)  3880        zero_padding2d_47[0][0]
____________________________________________________________________________________________________
conv2d_48 (Conv2D)               (None, 305, 305, 40)  34600       zero_padding2d_48[0][0]
____________________________________________________________________________________________________
leaky_re_lu_47 (LeakyReLU)       (None, 305, 305, 40)  0           conv2d_47[0][0]
____________________________________________________________________________________________________
leaky_re_lu_48 (LeakyReLU)       (None, 305, 305, 40)  0           conv2d_48[0][0]
____________________________________________________________________________________________________
batch_normalization_47 (BatchNor (None, 305, 305, 40)  160         leaky_re_lu_47[0][0]
____________________________________________________________________________________________________
batch_normalization_48 (BatchNor (None, 305, 305, 40)  160         leaky_re_lu_48[0][0]
____________________________________________________________________________________________________
concatenate_17 (Concatenate)     (None, 305, 305, 80)  0           batch_normalization_47[0][0]
                                                                   batch_normalization_48[0][0]
____________________________________________________________________________________________________
zero_padding2d_49 (ZeroPadding2D (None, 305, 305, 80)  0           concatenate_17[0][0]
____________________________________________________________________________________________________
zero_padding2d_50 (ZeroPadding2D (None, 307, 307, 80)  0           concatenate_17[0][0]
____________________________________________________________________________________________________
conv2d_49 (Conv2D)               (None, 305, 305, 32)  2592        zero_padding2d_49[0][0]
____________________________________________________________________________________________________
conv2d_50 (Conv2D)               (None, 305, 305, 96)  69216       zero_padding2d_50[0][0]
____________________________________________________________________________________________________
leaky_re_lu_49 (LeakyReLU)       (None, 305, 305, 32)  0           conv2d_49[0][0]
____________________________________________________________________________________________________
leaky_re_lu_50 (LeakyReLU)       (None, 305, 305, 96)  0           conv2d_50[0][0]
____________________________________________________________________________________________________
batch_normalization_49 (BatchNor (None, 305, 305, 32)  128         leaky_re_lu_49[0][0]
____________________________________________________________________________________________________
batch_normalization_50 (BatchNor (None, 305, 305, 96)  384         leaky_re_lu_50[0][0]
____________________________________________________________________________________________________
concatenate_18 (Concatenate)     (None, 305, 305, 128) 0           batch_normalization_49[0][0]
                                                                   batch_normalization_50[0][0]
____________________________________________________________________________________________________
zero_padding2d_51 (ZeroPadding2D (None, 305, 305, 128) 0           concatenate_18[0][0]
____________________________________________________________________________________________________
conv2d_51 (Conv2D)               (None, 288, 288, 32)  1327136     zero_padding2d_51[0][0]
____________________________________________________________________________________________________
leaky_re_lu_51 (LeakyReLU)       (None, 288, 288, 32)  0           conv2d_51[0][0]
____________________________________________________________________________________________________
batch_normalization_51 (BatchNor (None, 288, 288, 32)  128         leaky_re_lu_51[0][0]
____________________________________________________________________________________________________
zero_padding2d_52 (ZeroPadding2D (None, 288, 288, 32)  0           batch_normalization_51[0][0]
____________________________________________________________________________________________________
conv2d_52 (Conv2D)               (None, 288, 288, 64)  2112        zero_padding2d_52[0][0]
____________________________________________________________________________________________________
leaky_re_lu_52 (LeakyReLU)       (None, 288, 288, 64)  0           conv2d_52[0][0]
____________________________________________________________________________________________________
batch_normalization_52 (BatchNor (None, 288, 288, 64)  256         leaky_re_lu_52[0][0]
____________________________________________________________________________________________________
zero_padding2d_53 (ZeroPadding2D (None, 288, 288, 64)  0           batch_normalization_52[0][0]
____________________________________________________________________________________________________
conv2d_53 (Conv2D)               (None, 288, 288, 64)  4160        zero_padding2d_53[0][0]
____________________________________________________________________________________________________
leaky_re_lu_53 (LeakyReLU)       (None, 288, 288, 64)  0           conv2d_53[0][0]
____________________________________________________________________________________________________
batch_normalization_53 (BatchNor (None, 288, 288, 64)  256         leaky_re_lu_53[0][0]
____________________________________________________________________________________________________
zero_padding2d_54 (ZeroPadding2D (None, 288, 288, 64)  0           batch_normalization_53[0][0]
____________________________________________________________________________________________________
conv2d_54 (Conv2D)               (None, 288, 288, 1)   65          zero_padding2d_54[0][0]
____________________________________________________________________________________________________
leaky_re_lu_54 (LeakyReLU)       (None, 288, 288, 1)   0           conv2d_54[0][0]
____________________________________________________________________________________________________
batch_normalization_54 (BatchNor (None, 288, 288, 1)   4           leaky_re_lu_54[0][0]
====================================================================================================
Total params: 1,684,565
Trainable params: 1,682,995
Non-trainable params: 1,570
____________________________________________________________________________________________________
```
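The shapes and parameter counts in this summary follow from simple arithmetic, which can help when comparing against the original Theano model. The pure-Python sketch below recomputes them; the helper names `conv_out` and `conv_params` are hypothetical, and it assumes stride 1 and the Keras default `use_bias=True` for `Convolution2D`. It reproduces the 288×288 output map (so the label maps compared against `self.out` need to be 288×288 as well) and the three totals reported above.

```python
def conv_out(n, k, pad=0, stride=1):
    # ZeroPadding2D(pad) on each side, then a 'valid' k x k convolution
    return (n + 2 * pad - k) // stride + 1


def conv_params(k, c_in, c_out):
    # k*k*c_in weights per filter plus one bias per filter
    return k * k * c_in * c_out + c_out


# Spatial size for a 256x256 input with padding=32 and stride=1.
# Inception blocks keep the size: 1x1 with pad 0 and 3x3 with pad 1.
n = conv_out(256, 3, pad=32)  # first ConvFactory -> 318
n = conv_out(n, 14)           # 14x14 ConvFactory -> 305
n = conv_out(n, 18)           # 18x18 ConvFactory -> 288

# (kernel size, input channels, output channels) of conv2d_37 ... conv2d_54,
# read off the summary above.
convs = [
    (3, 3, 64),
    (1, 64, 16), (3, 64, 16),    # Inception(16, 16)
    (1, 32, 16), (3, 32, 32),    # Inception(16, 32)
    (14, 48, 16),
    (1, 16, 112), (3, 16, 48),   # Inception(112, 48)
    (1, 160, 64), (3, 160, 32),  # Inception(64, 32)
    (1, 96, 40), (3, 96, 40),    # Inception(40, 40)
    (1, 80, 32), (3, 80, 96),    # Inception(32, 96)
    (18, 128, 32),
    (1, 32, 64), (1, 64, 64), (1, 64, 1),
]

conv_total = sum(conv_params(*c) for c in convs)
# Every ConvFactory ends with one BatchNormalization over its conv's output channels.
bn_channels = sum(c_out for _, _, c_out in convs)

# BatchNormalization: gamma and beta are trainable, moving mean and variance are not.
trainable = conv_total + 2 * bn_channels
non_trainable = 2 * bn_channels

print(n, trainable, non_trainable, trainable + non_trainable)
# 288 1682995 1570 1684565
```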

And here are the losses:

```
Found device 0 with properties:
name: GeForce GTX TITAN Z
major: 3 minor: 5 memoryClockRate (GHz) 0.8755
pciBusID 0000:84:00.0
Total memory: 5.94GiB
Free memory: 5.86GiB
2017-10-12 21:14:52.882773: I tensorflow/core/common_runtime/gpu/gpu_device.cc:961] DMA: 0
2017-10-12 21:14:52.882787: I tensorflow/core/common_runtime/gpu/gpu_device.cc:971] 0: Y
2017-10-12 21:14:52.882805: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1030] Creating TensorFlow device (/gpu:0) -> (device: 0, name: GeForce GTX TITAN Z, pci bus id: 0000:84:00.0)
Weights loaded!
2017-10-12 21:15:04.972817: I tensorflow/core/common_runtime/gpu/pool_allocator.cc:247] PoolAllocator: After 2024 get requests, put_count=1992 evicted_count=1000 eviction_rate=0.502008 and unsatisfied allocation rate=0.559289
2017-10-12 21:15:04.972892: I tensorflow/core/common_runtime/gpu/pool_allocator.cc:259] Raising pool_size_limit_ from 100 to 110
EP 0/100 | TRAIN_LOSS: 2.074034, 167.909124 | VALID_LOSS: 0.000000, 0.000000
EP 5/100 | TRAIN_LOSS: 1.574912, 165.120924 | VALID_LOSS: 0.000000, 0.000000
EP 10/100 | TRAIN_LOSS: 0.81238, 164.328942 | VALID_LOSS: 0.000000, 0.000000
...
EP 80/100 | TRAIN_LOSS: 0.24034, 26.891274 | VALID_LOSS: 0.000000, 0.000000
EP 85/100 | TRAIN_LOSS: 0.23912, 26.889122 | VALID_LOSS: 0.000000, 0.000000
EP 90/100 | TRAIN_LOSS: 0.23120, 26.871982 | VALID_LOSS: 0.000000, 0.000000
```

The validation loss is 0 because I skip computing it to save time. The first time I ran the code I did compute all the losses, and the validation loss decreased to about the same level as the training loss.

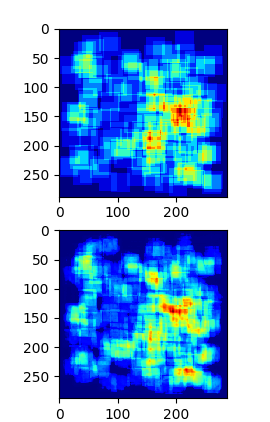

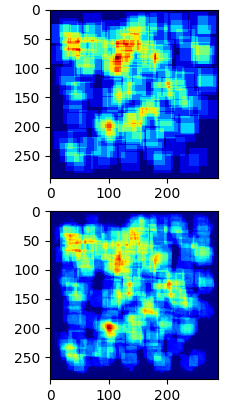

When I test the model, it always predicts the same count (160), even though the returned heat maps differ from image to image and are quite close to the labels.

Could anyone please explain what is wrong with my code? :D