tensorflow-wavenet


Dilated Causal Convolution is implemented differently from the paper

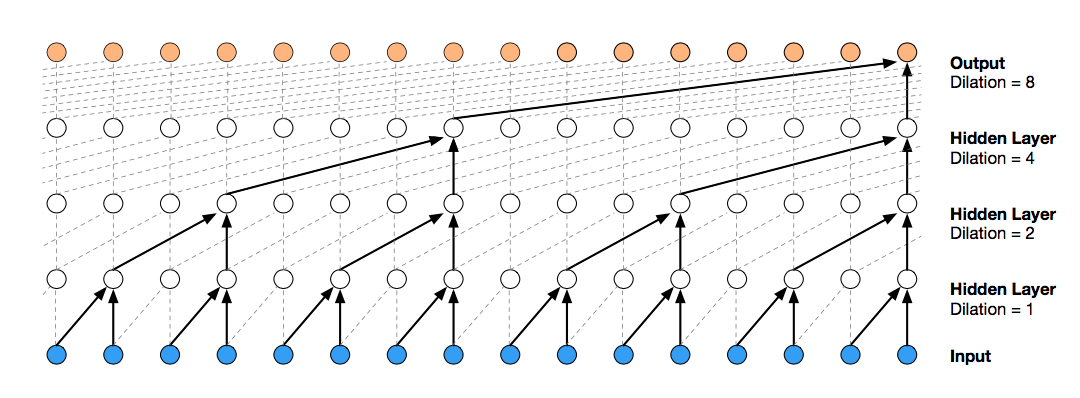

According to the visualization of dilated causal convolutional layers above, I think the stride in the conv1d (after time_to_batch) should be 2 (equal to the dilation), so that only the minimum number of nodes needed to cover the receptive field is used. However, in this implementation, the stride is set to 2. I can somewhat understand why this could still work, since the network will learn suitable features for the generative model anyway. What I want to know is whether this is intentional, and if so, why it was changed.
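The figure can be checked numerically. Below is a minimal sketch that assumes filter width 2 and dilations 1, 2, 4, 8, as in the WaveNet paper's visualization. The helper names `receptive_field` and `input_indices` are hypothetical. Tracing a single output back through the stack shows that the highlighted nodes reach each of the 16 inputs exactly once, even though every layer still produces an output at every time step (stride 1). The other, non-highlighted nodes simply feed other outputs.

```python
def receptive_field(filter_width, dilations):
    # Each layer with filter width k and dilation d adds (k - 1) * d past samples.
    return (filter_width - 1) * sum(dilations) + 1


def input_indices(t, filter_width, dilations):
    # Walk the stack from the top layer down, listing (with repeats) every
    # time step that the output at step t depends on. Causal: only t and earlier.
    steps = [t]
    for d in reversed(dilations):
        steps = [s - j * d for s in steps for j in range(filter_width)]
    return sorted(steps)


# Hypothetical stack matching the paper's figure: width 2, dilations 1, 2, 4, 8.
dilations = [1, 2, 4, 8]
print(receptive_field(2, dilations))  # 16

# All 2**4 = 16 paths from output step 15 land on distinct inputs 0..15,
# so no input is visited twice.
print(input_indices(15, 2, dilations) == list(range(16)))  # True
```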

I'm not sure I follow you. You think the strides should be 2 but in the implementation it is set to 2?

@godelicbach The stride should be 1 for the conv1d after time_to_batch, because the time_to_batch reshape already arranges the data in dilated form: each row of its output holds every `dilation`-th sample, so adjacent elements in a row are `dilation` steps apart in the original signal. The following NumPy example performs the same reshape as time_to_batch (ignoring the channel dimension):

```python
import numpy as np

dilation, batch, value_len = 2, 1, 8

arr = np.arange(batch * value_len).reshape((batch, value_len))
# arr:
# [[0, 1, 2, 3, 4, 5, 6, 7]]

arr_r = arr.reshape([-1, dilation])
# arr_r:
# [[0, 1],
#  [2, 3],
#  [4, 5],
#  [6, 7]]

arr_t = arr_r.transpose()
# arr_t:
# [[0, 2, 4, 6],
#  [1, 3, 5, 7]]

time_to_batch_output = arr_t.reshape([dilation * batch, -1])
# time_to_batch_output:
# [[0, 2, 4, 6],
#  [1, 3, 5, 7]]
```
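To see why stride 1 is the right choice after this reshape, the sketch below compares two results. The first applies a width-2 causal convolution with stride 1 to each row of the time_to_batch output and then interleaves the rows back. The second is a direct dilated causal convolution, y[t] = w[0]·x[t − dilation] + w[1]·x[t]. This is a minimal NumPy sketch for batch = 1 with no channels and a length divisible by `dilation`. The helpers `time_to_batch`, `batch_to_time`, `causal_conv_stride1` and `dilated_causal_conv` are hypothetical stand-ins, not the repository's TensorFlow functions, and the weights are arbitrary.

```python
import numpy as np


def time_to_batch(x, dilation):
    # Simplified stand-in (batch = 1, no channels, no padding):
    # row r holds every dilation-th sample starting at offset r.
    return x.reshape(-1, dilation).T


def batch_to_time(y, dilation):
    # Inverse of time_to_batch: interleave the rows back into one sequence.
    return y.T.reshape(-1)


def causal_conv_stride1(rows, w):
    # Width-2 causal convolution, stride 1, no dilation, applied to each row.
    # One zero of left padding keeps the output the same length as the input.
    padded = np.pad(rows, ((0, 0), (1, 0)))
    return w[0] * padded[:, :-1] + w[1] * padded[:, 1:]


def dilated_causal_conv(x, w, dilation):
    # Reference: y[t] = w[0] * x[t - dilation] + w[1] * x[t], zeros before t = 0.
    padded = np.pad(x, (dilation, 0))
    return w[0] * padded[:-dilation] + w[1] * padded[dilation:]


dilation = 2
x = np.arange(8, dtype=float)
w = np.array([0.5, -1.0])  # arbitrary example weights

via_reshape = batch_to_time(causal_conv_stride1(time_to_batch(x, dilation), w), dilation)
direct = dilated_causal_conv(x, w, dilation)
print(np.allclose(via_reshape, direct))  # True

# A stride of 2 would keep only every other column of each row,
# leaving 4 outputs for 8 input samples instead of one per sample.
stride2 = causal_conv_stride1(time_to_batch(x, dilation), w)[:, ::2]
print(stride2.size)  # 4
```

Because the reshape already places samples that are `dilation` apart next to each other, a stride-1 convolution on the reshaped data is the dilated convolution. Adding a stride of 2 on top would apply the dilation twice and drop half of the output time steps.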