besu

besu copied to clipboard

besu copied to clipboard

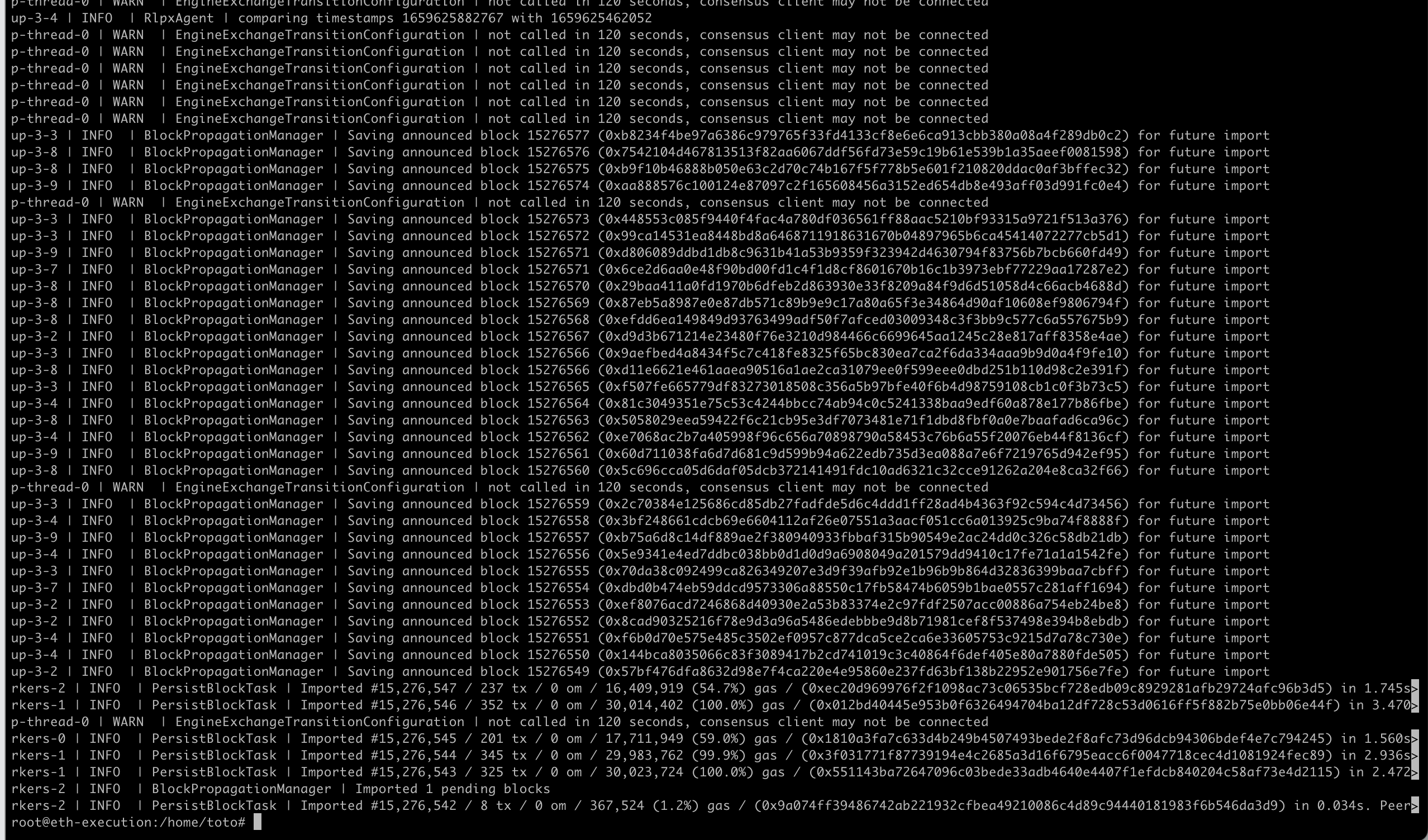

Besu stops importing new blocks on mainnet

Besu has stopped updating blocks on mainnet a couple of times recently. After a manual restart it court up with the head of the chain and contiues as normal. Im running Geth on another computer (different port) as a backup which has had no issues.

Besu v22.4.1 openJDK v11.0.15 Ubuntu server v22.04 (kernal 5.15.37)

i7 12700 32GB ram

This log line is a bit strange:

Jun 06 16:16:21 node besu[968]: 2022-06-06 16:16:21.842+01:00 | nioEventLoopGroup-3-1 | INFO | BlockPropagationManager | Saving announced block 14915630 (0x090c5aefdaf3d7e03a4f4ae3be280e8e5795958d33188baf3de5aa48eeaeab94) for future import

We have changed this log message a few months ago, but in your log it still show the old message format. Could be please verify that you are actually running v22.4.1? It appears to be an older version.

Looks good.

curl -X POST -H "Content-Type: application/json" --data '{"jsonrpc":"2.0","method":"web3_clientVersion","params":[],"id":67}' http://localhost:8545 { "jsonrpc" : "2.0", "id" : 67, "result" : "besu/v22.4.1/linux-x86_64/openjdk-java-11" }

Do you want more of the log?

This occured again a couple of hours ago. I've attated a larger log dump over the outage. Log03.txt

@Gabriel-Trintinalia and I looked at the log file (Log03.txt) and found the following: Block #14,965,607 with block hash 0xe27481970fc2c069ce84d9be9561d21ac87d699fd814e388a5eea24d587cfb51 is imported, but checking on a block explorer we can see that this block is not on the canonical chain. Following this, announced blocks are saved, including block 14965608 with hash 0xf8f193f22a447848ff0e2b36f2ea8c0f2765185556749e7bb7c9dd6e69b80563, which is part of the canonical chain, but the block with number 14,965,607 that is on the canonical chain is never received and imported. We described the problem to @ajsutton and looked at some code, where we found the following (writeup from Adrian):

BlockPropagationManager is responsible for handling blocks we receive from gossip and backfilling any ancestors for those blocks we don’t have so that we can import them. It’s the backfilling process that’s not working and causing the stall. The overall issue seems to be that BlockPropagationManager is assuming that every block it’s trying to backfill is on the canonical chain. So if the block we need is actually on a non-canonical chain we won’t ever fetch it and can no longer follow that fork. There’s some argument that we don’t need to follow non-canonical chains, and we don’t want to backfill too many non-canonical blocks this way or it could be a DoS vector, but we do need to follow short forks in order to determine if they wind up with a greater total difficulty (and thus become canonical).

In the case of this stall, we received a block 7 which wound up being non-canonical but because it was all we received besu imported it to its canonical chain. We didn’t receive the canonical version of xxx07. We then received the canonical 8 and I think we wound up in BlockPropagationManager.maybeProcessNonAnnouncedBlock where it compared our current chain head height (7) with the block number we require (7 but from a different fork) and it bailed out without requesting anything because 7 > 7 returned false.

The next problem is that BlockPropagationManager is requesting the block by number, not be hash so which fork the block we get is from will depend on what the peer we send the request to views as the canonical chain and that may not match our view or may not be the fork we need the block from.

In general I’d say that BlockPropagationManager needs to be reviewed and reworked with every usage of block number being very suspicious and thinking through how it would play out in the case of there being multiple forks.

@ajsutton it's a little bit different in this case because we received the canonical block

2022-07-19 20:17:21.051+00:00 | EthScheduler-Workers-2 | INFO | PersistBlockTask | Imported #15,175,202 / 31 tx / 0 om / 2,861,838 (9.5%) gas / (0x2071f46a7b3f487328984f14bcbdfc0833ef262b406a9a069b68fe56803dd5c4) in 0.106s. Peers: 36 2022-07-19 20:17:34.818+00:00 | EthScheduler-Workers-3 | INFO | PersistBlockTask | Imported #15,175,203 / 0 tx / 0 om / 0 (0.0%) gas / (0xa784b56c3b33c8ee141870e252b0260d4eb219908b5b5dd1c56172d831229cdb) in 0.003s. Peers: 34 2022-07-19 20:17:43.642+00:00 | EthScheduler-Workers-2 | INFO | PersistBlockTask | Imported #15,175,204 / 412 tx / 0 om / 29,983,543 (99.9%) gas / (0x6045730876efaa358cac24ed29536bbdce2eed1a9a5ec8e12b23b3408b175667) in 1.427s. Peers: 36 2022-07-19 20:17:51.739+00:00 | EthScheduler-Workers-1 | INFO | PersistBlockTask | Imported #15,175,205 / 217 tx / 0 om / 29,269,765 (97.5%) gas / (0x65f3ba10cdefd2f093c0dacaf4eadc11e11260fbaa88e0e2e64de50961d0d4d4) in 1.517s. Peers: 36 2022-07-19 20:17:57.462+00:00 | EthScheduler-Workers-1 | INFO | PersistBlockTask | Imported #15,175,206 / 58 tx / 0 om / 4,891,990 (16.3%) gas / (0xf135040b7995f979a4e52d75f295197892c8ce1d05a16fd628c56b0db256f59f) in 0.184s. Peers: 35 2022-07-19 20:17:58.178+00:00 | EthScheduler-Workers-1 | INFO | PersistBlockTask | Imported #15,175,206 / 60 tx / 0 om / 3,977,315 (13.3%) gas / (0x04261e16ba8bf3922bf9aa906504117d6af22dc92d21feaa0348ece3109b1c7a) in 0.115s. Peers: 35 2022-07-19 20:19:07.530+00:00 | nioEventLoopGroup-3-10 | INFO | BlockPropagationManager | Saving announced block 15175208 (0x5bc289661840bdd58b2a5fbf444dd0633a7649cf54a30c5354f71fa2cbbe040f) for future import 2022-07-19 20:19:33.266+00:00 | nioEventLoopGroup-3-10 | INFO | BlockPropagationManager | Saving announced block 15175210 (0x8dafb711ab0ff3599c268fccee67d757ee17e54c30db881b4a8cb4b6a3decb3a) for future import 2022-07-19 20:19:39.459+00:00 | nioEventLoopGroup-3-10 | INFO | BlockPropagationManager | Saving announced block 15175211 (0xcaabcfc11d610f74a917d8891a9510c2061e858446ac80b117905c106391d111) for future import 2022-07-19 20:19:48.976+00:00 | nioEventLoopGroup-3-3 | INFO | BlockPropagationManager | Saving announced block 15175212 (0xe0696c71b43807dd01d396dfb0e113cc5eb8f03e8a4e43f6f91f552ed074a97c) for future import 2022-07-19 20:20:05.414+00:00 | nioEventLoopGroup-3-3 | INFO | BlockPropagationManager | Saving announced block 15175213 (0xbfd885923cf7ba44ef3cbe88f0dc984744bfb90df9fb7c70eac1254b1c6e70e4) for future import 2022-07-19 20:20:10.167+00:00 | nioEventLoopGroup-3-3 | INFO | BlockPropagationManager | Saving announced block 15175214 (0x2917acccbdbb89f74111aea2581a8f1f65d1a0ad938d10b19360daed9db26c9e) for future import

Another interesting case https://ipfs.infura-ipfs.io/ipfs/QmWQKwTRbcXUgvw9WWP9pd7WFQQ7VSJYb5L9orTrwp5fDi

I found that on the log

RetryingGetBlockFromPeersTask | Getting block 14896930 (Optional[0xc29ee86a20768d59f287c37c77f6af3220d3aebdf565396ca9c81c5e131bc128]) from peer Optional[Peer 0x615a7472bf4d367704... PeerReputation 95, validated? true, disconnected? false], attempt 1 2022-06-03 11:46:26.581+00:00 | EthScheduler-Workers-2 | DEBUG | GetBlockFromPeerTask | Downloading block 14896930 (Optional[0xc29ee86a20768d59f287c37c77f6af3220d3aebdf565396ca9c81c5e131bc128]) from peer Peer 0x615a7472bf4d367704... PeerReputation 95, validated? true, disconnected? false.

it seems we have difficulties to get the next block if we look the behavior of another block

2022-06-03 11:46:45.834+00:00 | EthScheduler-Workers-3 | DEBUG | GetBlockFromPeerTask | Downloading block 14896932 (Optional[0xce2dd55bd28fa2f5248b2b0b11ba8fc466f610c70633a6aa0bdda01fd7ba9af1]) from peer Peer 0x710d7109c4e5127dc2... PeerReputation 100, validated? true, disconnected? false. 2022-06-03 11:46:45.835+00:00 | EthScheduler-Workers-3 | DEBUG | GetHeadersFromPeerByHashTask | Requesting 1 headers from peer Peer 0x710d7109c4e5127dc2... PeerReputation 100, validated? true, disconnected? false. 2022-06-03 11:46:45.845+00:00 | nioEventLoopGroup-3-2 | DEBUG | AbstractGetHeadersFromPeerTask | Received 1 of 1 headers requested from peer Peer 0x710d7109c4e5127dc2... PeerReputation 100, validated? true, disconnected? false 2022-06-03 11:46:45.845+00:00 | nioEventLoopGroup-3-2 | DEBUG | GetBodiesFromPeerTask | Requesting 1 bodies from peer Peer 0x710d7109c4e5127dc2... PeerReputation 100, validated? true, disconnected? false. 2022-06-03 11:46:45.850+00:00 | EthScheduler-Workers-0 | DEBUG | GetPooledTransactionsFromPeerTask | Requesting 1 transaction pool entries from peer Peer 0x4c5e92a1da98c42908... PeerReputation 100, validated? true, disconnected? false. 2022-06-03 11:46:45.857+00:00 | nioEventLoopGroup-3-2 | DEBUG | GetBlockFromPeerTask | Successfully downloaded block 14896932 (Optional[0xce2dd55bd28fa2f5248b2b0b11ba8fc466f610c70633a6aa0bdda01fd7ba9af1]) from peer Peer

it’s like we never ask for the header

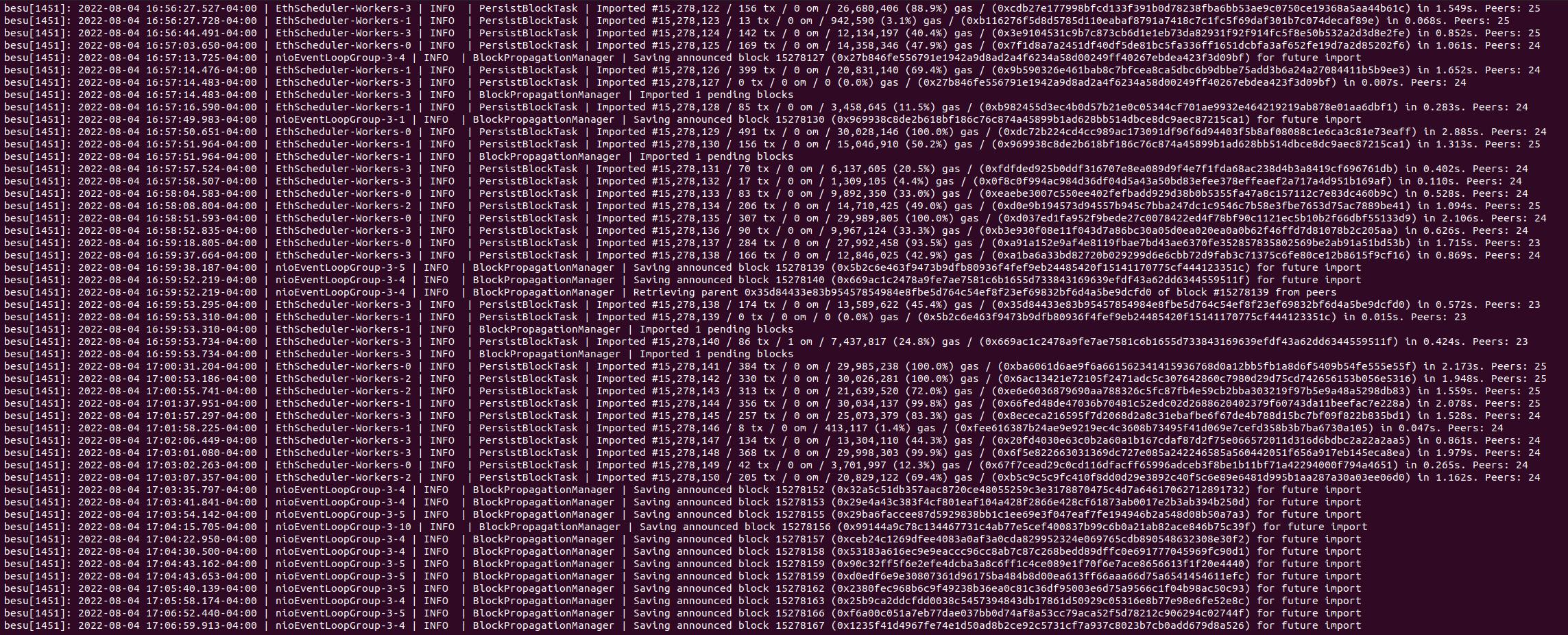

I reproduced this issue in 22.7.0-RC2. BTW, snap sync with Bonsai was super fast and it was running fine until:

2022-07-23 18:10:48.616+00:00 | EthScheduler-Workers-3 | INFO | PersistBlockTask | Imported #15,200,404 / 147 tx / 0 om / 13,796,201 (45.9%) gas / (0xab3d64b585026163e31598ea2d9af51c63326287723c60b9a8eef

e60e9adf3b1) in 0.419s. Peers: 53

2022-07-23 18:11:14.037+00:00 | EthScheduler-Workers-3 | INFO | PersistBlockTask | Imported #15,200,405 / 129 tx / 1 om / 8,525,250 (28.4%) gas / (0x7d9b93e12de81af0342335b8a5c87d2aa06c783b7df9e58f87ce5e

e6a2b1fd4d) in 0.353s. Peers: 63

2022-07-23 18:11:44.512+00:00 | nioEventLoopGroup-3-3 | INFO | BlockPropagationManager | Saving announced block 15200407 (0xc97f48368ce7c3b96b32cd730f9ce714e6b6e33d40cb49d4b6563bf1f144bc13) for future im

port

2022-07-23 18:11:46.094+00:00 | Timer-0 | INFO | DNSTimerTask | Refreshing DNS records with enrtree://AKA3AM6LPBYEUDMVNU3BSVQJ5AD45Y7YPOHJLEF6W26QOE4VTUDPE@all.mainnet.ethdisco.net

2022-07-23 18:12:17.802+00:00 | nioEventLoopGroup-3-6 | INFO | BlockPropagationManager | Saving announced block 15200408 (0x06f356035feb078b34a410545785ca2decec4cfdbe3d6594d121147945fc8ff1) for future im

port

2022-07-23 18:12:31.655+00:00 | nioEventLoopGroup-3-3 | INFO | BlockPropagationManager | Saving announced block 15200410 (0x465d88e5e5fb83f79c1e8fcbe7fe0e38824825be373e3ed8e970c2f04b5c7a78) for future im

port

Then it appears to have given up and reached this point where it does nothing but refresh DNS repeatedly:

2022-07-23 18:17:17.507+00:00 | nioEventLoopGroup-3-10 | INFO | BlockPropagationManager | Saving announced block 15200434 (0xf6476b6944510a322cca69039dc43b680ed7b8ddf375e97b767823e11930a376) for future import

2022-07-23 18:17:46.093+00:00 | Timer-0 | INFO | DNSTimerTask | Refreshing DNS records with enrtree://AKA3AM6LPBYEUDMVNU3BSVQJ5AD45Y7YPOHJLEF6W26QOE4VTUDPE@all.mainnet.ethdisco.net

2022-07-23 18:17:52.677+00:00 | nioEventLoopGroup-3-10 | INFO | BlockPropagationManager | Saving announced block 15200435 (0x96162feb935cff325a1ce5004ab661758e58e6f3bb14cb9b9af19f4fcab84c11) for future import

2022-07-23 18:18:46.093+00:00 | Timer-0 | INFO | DNSTimerTask | Refreshing DNS records with enrtree://AKA3AM6LPBYEUDMVNU3BSVQJ5AD45Y7YPOHJLEF6W26QOE4VTUDPE@all.mainnet.ethdisco.net

2022-07-23 18:19:46.093+00:00 | Timer-0 | INFO | DNSTimerTask | Refreshing DNS records with enrtree://AKA3AM6LPBYEUDMVNU3BSVQJ5AD45Y7YPOHJLEF6W26QOE4VTUDPE@all.mainnet.ethdisco.net

2022-07-23 18:20:46.094+00:00 | Timer-0 | INFO | DNSTimerTask | Refreshing DNS records with enrtree://AKA3AM6LPBYEUDMVNU3BSVQJ5AD45Y7YPOHJLEF6W26QOE4VTUDPE@all.mainnet.ethdisco.net

curl -X POST --data '{"jsonrpc":"2.0","method":"web3_clientVersion","params":[],"id":1}' localhost:8545 -s | jq

{

"jsonrpc": "2.0",

"id": 1,

"result": "besu/v22.7.0-RC2/linux-x86_64/openjdk-java-11"

}

Restart and we're back to normal operations.

Closing this as fixed, via RC3 and #4175. Please let us know if the fix is not working in the latest release.

Might re-open in conjunction with #4197

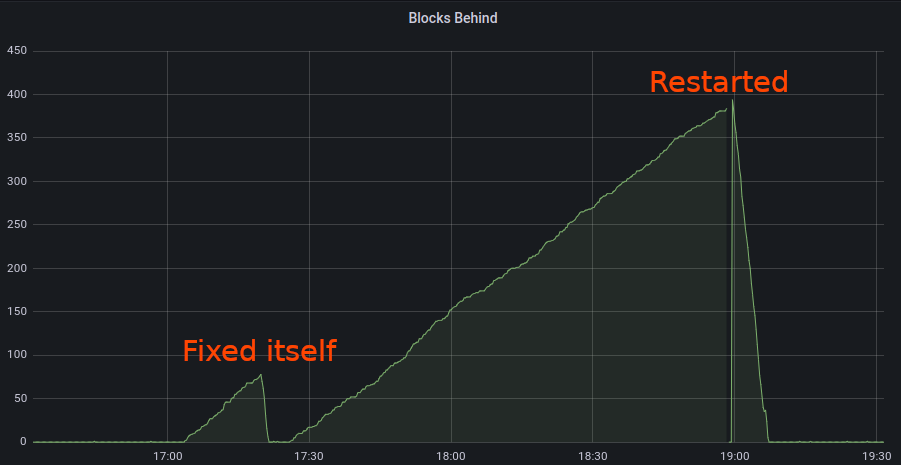

I have something like this happenning. 22.7.0 on mainnet. It's not the first time I had to restart besu in order for it to do something. Sometimes I look at grafana and see it crapped out for a few hours at night and then somehow resynced. I'll have to look into this in more detail.

Thanks for the report @totocz - we're investigating.

I'm having the same issue. Besu 22.7.0 on mainnet, fully synced (with X_SNAP) only a few days ago, and a couple hours ago it lost sync and was reporting a lot of "Saving announced block XXX for future import." It was up to over 350 blocks behind without signs of getting back in sync, and so I restarted it and it was able to resync. But I certainly won't be around to restart it all the time

.

.

Same issue for me today on 22.7.0. Synced with FAST sync mode last week.

And again, overnight... :\

I don't know if this is the same issue, but I also lost sync and it doesn't look like it's going to catch up: notice that it imports only 200 blocks per 5 mins:

edit: it did catch up after 2 hrs

eth1_1 | 2022-08-05 09:42:42.003+00:00 | main | INFO | DefaultP2PNetwork | Node address 0x4c9bb15b7415116d6062db5764e46b63e3b2560b

eth1_1 | 2022-08-05 09:42:42.005+00:00 | main | INFO | DefaultSynchronizer | Starting synchronizer.

eth1_1 | 2022-08-05 09:42:42.008+00:00 | main | INFO | FullSyncDownloader | Starting full sync.

eth1_1 | 2022-08-05 09:42:42.009+00:00 | main | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 0

eth1_1 | 2022-08-05 09:42:42.019+00:00 | main | INFO | Runner | Ethereum main loop is up.

eth1_1 | 2022-08-05 09:42:47.019+00:00 | EthScheduler-Timer-0 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 0

eth1_1 | 2022-08-05 09:42:47.237+00:00 | nioEventLoopGroup-3-1 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 3

eth1_1 | 2022-08-05 09:42:47.852+00:00 | nioEventLoopGroup-3-3 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 3

eth1_1 | 2022-08-05 09:42:48.161+00:00 | nioEventLoopGroup-3-5 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 4

eth1_1 | 2022-08-05 09:42:49.854+00:00 | nioEventLoopGroup-3-2 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 6

eth1_1 | 2022-08-05 09:42:50.299+00:00 | nioEventLoopGroup-3-5 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 5

eth1_1 | 2022-08-05 09:42:53.810+00:00 | nioEventLoopGroup-3-3 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 6

eth1_1 | 2022-08-05 09:42:54.365+00:00 | nioEventLoopGroup-3-10 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 11

eth1_1 | 2022-08-05 09:42:54.543+00:00 | nioEventLoopGroup-3-5 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 7

eth1_1 | 2022-08-05 09:42:56.547+00:00 | nioEventLoopGroup-3-9 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 9

eth1_1 | 2022-08-05 09:42:57.009+00:00 | nioEventLoopGroup-3-4 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 10

eth1_1 | 2022-08-05 09:42:58.044+00:00 | nioEventLoopGroup-3-9 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 10

eth1_1 | 2022-08-05 09:43:03.044+00:00 | EthScheduler-Timer-0 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 10

eth1_1 | 2022-08-05 09:43:03.309+00:00 | nioEventLoopGroup-3-4 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 11

eth1_1 | 2022-08-05 09:43:04.799+00:00 | nioEventLoopGroup-3-3 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 11

eth1_1 | 2022-08-05 09:43:06.984+00:00 | nioEventLoopGroup-3-2 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 11

eth1_1 | 2022-08-05 09:43:07.229+00:00 | nioEventLoopGroup-3-4 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 12

eth1_1 | 2022-08-05 09:43:10.072+00:00 | nioEventLoopGroup-3-7 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 12

eth1_1 | 2022-08-05 09:43:10.838+00:00 | nioEventLoopGroup-3-5 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 16

eth1_1 | 2022-08-05 09:43:13.200+00:00 | nioEventLoopGroup-3-1 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 14

eth1_1 | 2022-08-05 09:43:13.698+00:00 | nioEventLoopGroup-3-3 | INFO | FullSyncTargetManager | No sync target, waiting for peers. Current peers: 15

eth1_1 | 2022-08-05 09:44:18.084+00:00 | Timer-0 | INFO | DNSResolver | Resolved 2409 nodes

eth1_1 | 2022-08-05 09:44:18.464+00:00 | Timer-0 | WARN | RlpxAgent | Attempt to connect to peer with no listening port: enode://92ae467ca2719a0ca683d103580b78f3d0d0d58be0f8313ebe268da05e94ef0f2d91aa7834b0ec260e4b121a04a4bbf428d31d2b86bd34df3141ee56ccd63b01@34.76.173.33:0?discport=30853

eth1_1 | 2022-08-05 09:44:41.542+00:00 | vert.x-eventloop-thread-0 | WARN | EngineExchangeTransitionConfiguration | not called in 120 seconds, consensus client may not be connected

eth1_1 | 2022-08-05 09:45:32.115+00:00 | EthScheduler-Services-1 (importBlock) | INFO | FullImportBlockStep | Import reached block 15277400 (0x7fc89a0f745deb9c9045808b948a3a860e2d7eb925598d9d570fdc916c0264b4), - Mg/s, Peers: 20

eth1_1 | 2022-08-05 09:46:41.643+00:00 | vert.x-eventloop-thread-0 | WARN | EngineExchangeTransitionConfiguration | not called in 120 seconds, consensus client may not be connected

eth1_1 | 2022-08-05 09:48:41.743+00:00 | vert.x-eventloop-thread-0 | WARN | EngineExchangeTransitionConfiguration | not called in 120 seconds, consensus client may not be connected

eth1_1 | 2022-08-05 09:50:41.821+00:00 | vert.x-eventloop-thread-0 | WARN | EngineExchangeTransitionConfiguration | not called in 120 seconds, consensus client may not be connected

eth1_1 | 2022-08-05 09:51:12.811+00:00 | EthScheduler-Services-1 (importBlock) | INFO | FullImportBlockStep | Import reached block 15277600 (0xbd0b69eca39ffb24074824a9a43833e078ed4c69fb6e54b66beaf4bad0b5ea6c), 8.995 Mg/s, Peers: 25

eth1_1 | 2022-08-05 09:52:41.850+00:00 | vert.x-eventloop-thread-0 | WARN | EngineExchangeTransitionConfiguration | not called in 120 seconds, consensus client may not be connected

eth1_1 | 2022-08-05 09:54:41.950+00:00 | vert.x-eventloop-thread-0 | WARN | EngineExchangeTransitionConfiguration | not called in 120 seconds, consensus client may not be connected

eth1_1 | 2022-08-05 09:56:33.465+00:00 | EthScheduler-Services-1 (importBlock) | INFO | FullImportBlockStep | Import reached block 15277800 (0xd2334906a8375881573a58809246512f40cd5af90076dc5c5a333702f5e62128), 9.663 Mg/s, Peers: 25

eth1_1 | 2022-08-05 09:56:42.030+00:00 | vert.x-eventloop-thread-0 | WARN | EngineExchangeTransitionConfiguration | not called in 120 seconds, consensus client may not be connected

eth1_1 | 2022-08-05 09:58:42.034+00:00 | vert.x-eventloop-thread-0 | WARN | EngineExchangeTransitionConfiguration | not called in 120 seconds, consensus client may not be connected

eth1_1 | 2022-08-05 10:00:42.132+00:00 | vert.x-eventloop-thread-0 | WARN | EngineExchangeTransitionConfiguration | not called in 120 seconds, consensus client may not be connected

eth1_1 | 2022-08-05 10:01:53.450+00:00 | EthScheduler-Services-1 (importBlock) | INFO | FullImportBlockStep | Import reached block 15278000 (0xed72dd08f53fca91ce5994ff30221382d0833f05146cb94fec7671428581a27a), 9.856 Mg/s, Peers: 25

same here, but on test-nest

last block:

2022-08-07 20:20:50.176+03:00 | EthScheduler-Workers-1 | INFO | PersistBlockTask | Imported #7,363,647 / 4 tx / 0 om / 190,397 (0.6%) gas / (0x35c43081124438426a2bea5c39f7971de56ec5fa1a7aef6bb31faccc50d5dc9f) in 0.033s. Peers: 11

after that there is a few messages about

Saving announced block 7363676 (0x92429e092f2d318f38cb0024af19abc8bc320371feaaa135b5d60cb4b8e76f48) for future import

then it stops render this and render only

2022-08-08 17:33:42.865+03:00 | vert.x-eventloop-thread-0 | WARN | EngineExchangeTransitionConfiguration | not called in 120 seconds, consensus client may not be connected

commandline is this:

rem set pass=--engine-jwt-secret=C:/eth2D/goerli/jwt.secret.hex

rem set rpc=--rpc-http-enabled

set port=--engine-rpc-http-port=9551

set p2pport=--p2p-port=33333

%JAVA_HOME%/bin/java -cp besu-22.7.0/lib/* org.hyperledger.besu.Besu --network=goerli ^

--data-path C:/eth2D/goerl_chk --sync-mode=X_CHECKPOINT --data-storage-format=BONSAI ^

%p2pport% %port% %pass% %rpc% >> besu.besu-22.7.0.checkpoint.log 2>&1

full log https://gist.githubusercontent.com/kirillp/b1fc280d517559fbd565d8718942634d/raw/0289d2b735fa2c3e28a0bd26c312f5561f1f1815/besu.besu-22.7.0.checkpoint.log.fail

@kirillp if your node is still running and stuck, could you please run this command:

curl -X POST --data '{"jsonrpc":"2.0","method":"admin_peers","params":[],"id":1}' http://127.0.0.1:8545

and post the result?

See also: https://besu.hyperledger.org/en/stable/Reference/API-Methods/#admin_peers

it is running and stuck but it does not have "--rpc-http-enabled" in the start args, it is commented ;( however it is responding to this:

curl -X POST --data '{"jsonrpc":"2.0","method":"admin_peers","params":[],"id":1}' 127.0.0.1:9551

{"jsonrpc":"2.0","id":null,"error":{"code":-40100,"message":"Unauthorized"}}

I can also invoke jstack or run VisualVM, sample CPU, or memory or make a heapdump

I tried this on my other machine that running Besu main-net and it does not work:

C:\Eth\besu>curl -X POST --data '{"jsonrpc":"2.0","method":"admin_peers","params":[],"id":1}' http://127.0.0.1:8545

{"jsonrpc":"2.0","id":null,"error":{"code":-32700,"message":"Parse error"}}

Do I need to put the content of jwt.secret.hex somewhere to curl request ?

@kirillp probably on Window the command needs to be written differently, but I do not know how. If you node is still stuck, then a restart usually solves.

This is hard to replicate. From the logs, it appears that:

When a block is saved for future import, requesting the lowest announced block parent is not always the best option. It can be that we cannot retrieve this block and then we keep selecting the lowest block over and over and eventually it does not fix the gap.

For example, Chain is at 10. Block 12 (Hash A) arrives and it is saved.

I think a better approach for trying to unstick is to request the parent of the lowest pending ancestor when saving a

We likely need to be able to request multiple blocks at once. If there are multiple forks we may need to retrieve then we could, up to some reasonable limit like 5 or 10, request a block from each fork in parallel. That would avoid getting stuck because one fork is unavailable, and improve performance when multiple forks are pending.

This is hard to replicate. From the logs, I can see at least one situation that could cause issues. It appears that:

When a block is saved for future import, requesting the lowest announced block parent is not always the best option. It can be that we cannot retrieve this block and then we keep selecting the lowest block over and over and eventually it does not fix the gap.

For example, Chain is at 10. Block 12 (Hash A) arrives and it is saved. Block 12 (Hash B) arrives and it is saved. Block 11 arrives and it is imported and then it triggers block 12 (Hash A) to be imported. Block 13 arrivers and it is imported. … We miss 14 … Block 15 arrives and it is saved. We request the parent of Block 12 (Hash B). Block 16 arrives and it is saved. We request the parent of Block 12 (Hash B). Block 17 arrives and it is saved. We request the parent of Block 12 (Hash B). Chain is stuck.

Maybe a better approach for trying to unstick is to request the parent of the lowest pending ancestor, for example: Chain is at 10. Block 12 (Hash A) arrives and it is saved. Block 12 (Hash B) arrives and it is saved. Block 11 arrives and it is imported and then it triggers block 12 (Hash A) to be imported. Block 13 arrives and it is imported. … We miss 14 … … We miss 15 … Block 16 arrives and it is saved. We request its parent 15. Block 15 arrives and it is saved. We request its parent 14. 14 arrives and it is imported, triggering 15 and 16 Chain is unstuck.

Also, we should make sure that when we request a block, we don't limit the request to a single peer (from a scan of the code a little while back it looked like we unintentionally were doing that). Any peer that's advertised any of the descendant blocks can be used to retrieve the missing block.

I created this draft PR to request the parent of a block instead of the lowest announced block: https://github.com/hyperledger/besu/pull/4227

probably on Window the command needs to be written differently

request itself works, but it needs authorization

probably on Window the command needs to be written differently

request itself works, but it needs authorization

It's an ADMIN API method and isn't enabled by default with --rpc-http-enabled - you need to add --rpc-http-api (and specify the APIs to use) as well.

As commented here we should also focus on why Full sync is not able to recover these situation, since it runs in parallel to the block synchronization, so I am focusing my investigation on Full sync and the sync target selection

It's an ADMIN API method and isn't enabled by default with

--rpc-http-enabled- you need to add --rpc-http-api (and specify the APIs to use) as well.

I restarted with --rpc-http-api=ETH,NET,WEB3,ADMIN

main | WARN | Besu | --rpc-http-api has been ignored because --rpc-http-enabled was not defined on the command line. main | INFO | JsonRpcService | Starting JSON-RPC service on 127.0.0.1:9551

can I query admin_peers using JSON-RPC service on 127.0.0.1:9551 ?

yes - can run this @kirillp

curl -X POST --data '{"jsonrpc":"2.0","method":"admin_peers","params":[],"id":1}' http://127.0.0.1:9551

Another user reporting this issue on Discord https://discord.com/channels/905194001349627914/1015386305871491123 - same block number with different hashes being imported, and then a few blocks skipped, and further blocks saved for future import. 22.7.1