tokenizers


Custom tokenizer not returning UNK on tokens outside the vocabulary

I was fooling around with a custom tokenizer, and when I passed it text that wasn't in the vocabulary, it simply ignored it instead of returning `[UNK]` :-(

```python
from tokenizers.trainers import BpeTrainer
from tokenizers import Tokenizer
from tokenizers.models import BPE
import tokenizers

tokenizer = Tokenizer(BPE())
tokenizer.pre_tokenizer = tokenizers.pre_tokenizers.Whitespace()
trainer = BpeTrainer(special_tokens=["[UNK]", "[CLS]", "[SEP]", "[PAD]", "[MASK]"])
tokenizer.train(trainer, ["path to any file without hebrew"])

text = "i am tal and I wrote this שלום לברים"
encoding = tokenizer.encode(text)

shalom_index = text.find('שלום')
assert shalom_index > 0
assert encoding.char_to_token(shalom_index) is not None, "The tokenizer wont say Shalom"
```

And

```python
print(encoding.tokens)
```

gives back

```
['i', 'am', 'tal', 'and', 'I', 'w', 'ro', 'te', 'this']
```

What am I doing wrong?
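One way to confirm that the Hebrew characters are dropped outright, rather than mapped to some token, is to list the input characters that no token's offsets cover. The helper below is a minimal sketch; `dropped_chars` is a hypothetical name, not part of `tokenizers`. It works on a plain list of `(start, end)` pairs like the one `encoding.offsets` returns. The offsets in the example are written out by hand to match the tokens printed above and assume character (not byte) offsets.

```python
def dropped_chars(text, offsets):
    """Return (index, char) pairs for non-whitespace characters of `text`
    that are not covered by any (start, end) token offset."""
    covered = [False] * len(text)
    for start, end in offsets:
        for i in range(start, end):
            covered[i] = True
    return [(i, ch) for i, ch in enumerate(text) if not covered[i] and not ch.isspace()]


text = "i am tal and I wrote this שלום לברים"
# Hand-written character offsets for the tokens
# 'i', 'am', 'tal', 'and', 'I', 'w', 'ro', 'te', 'this'
offsets = [(0, 1), (2, 4), (5, 8), (9, 12), (13, 14), (15, 16), (16, 18), (18, 20), (21, 25)]

missing = dropped_chars(text, offsets)
print("".join(ch for _, ch in missing))  # prints the nine Hebrew letters
print(missing[0][0])  # → 26
```

With a real tokenizer the call would be `dropped_chars(text, encoding.offsets)`. An empty result means every visible character ended up in some token, `[UNK]` included.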

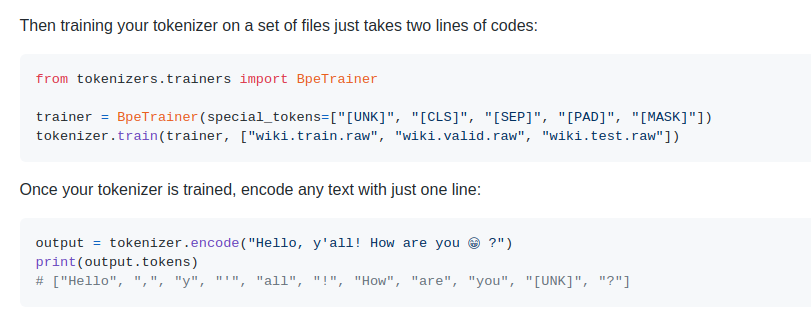

I found the answer in the docs.

The README is inconsistent with them: it doesn't mention that the model has to be saved (and reloaded with an `unk_token`) for UNK tokens to work.

Any objections to me adding that to the README?
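For comparison, here is a minimal sketch of the fix, assuming a recent `tokenizers` release that provides `Tokenizer.train_from_iterator`. It passes `unk_token="[UNK]"` to the `BPE` constructor so characters outside the learned alphabet map to `[UNK]` instead of being dropped. In such releases training updates the model in place and keeps `unk_token`, so the save-and-reload step mentioned above should not be needed. The two-sentence in-memory corpus is a hypothetical stand-in for the English-only training file.

```python
from tokenizers import Tokenizer
from tokenizers.models import BPE
from tokenizers.pre_tokenizers import Whitespace
from tokenizers.trainers import BpeTrainer

# Declaring unk_token on the model is the key change: without it, BPE
# silently drops characters that have no vocabulary entry.
tokenizer = Tokenizer(BPE(unk_token="[UNK]"))
tokenizer.pre_tokenizer = Whitespace()

# Listing "[UNK]" as a special token guarantees it is in the vocabulary.
trainer = BpeTrainer(
    special_tokens=["[UNK]", "[CLS]", "[SEP]", "[PAD]", "[MASK]"],
    show_progress=False,
)

# Hypothetical English-only corpus standing in for "a file without Hebrew".
corpus = [
    "I am Tal and I wrote this sentence.",
    "This corpus contains only English text.",
]
tokenizer.train_from_iterator(corpus, trainer=trainer)

text = "i am tal and I wrote this שלום לברים"
encoding = tokenizer.encode(text)
print(encoding.tokens)  # the Hebrew characters now appear as "[UNK]" tokens
```

Because `fuse_unk` defaults to off, each unknown character should become its own `[UNK]` token. The original `char_to_token(shalom_index)` assertion then passes, since those tokens still carry offsets into the input.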

This issue is stale because it has been open 30 days with no activity. Remove stale label or comment or this will be closed in 5 days.