peft

Does PEFT support translation models such as M2M100?

I'm trying to apply LoRA to M2M100.

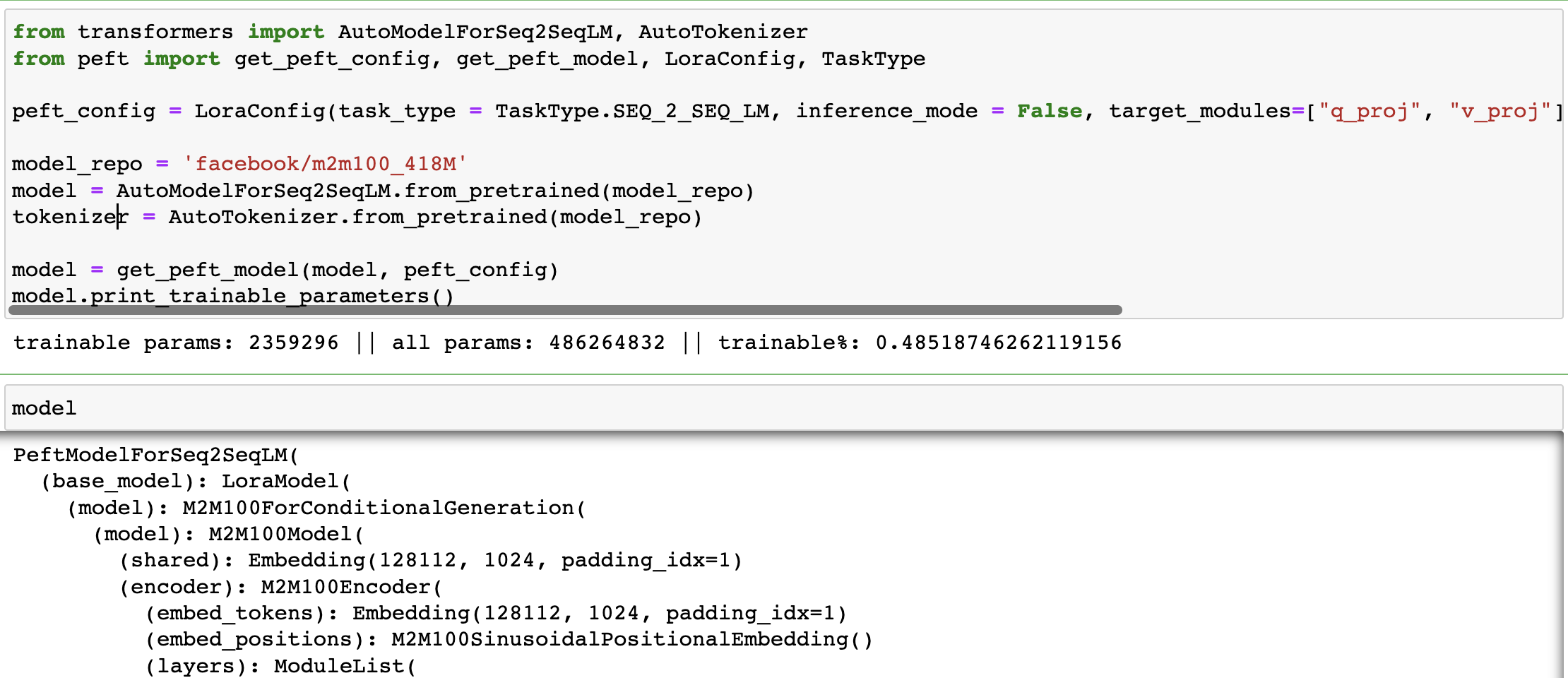

from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

from peft import get_peft_config, get_peft_model, LoraConfig, TaskType

peft_config = LoraConfig(task_type = TaskType.SEQ_2_SEQ_LM, inference_mode = False, target_modules=["q_proj", "v_proj"], r = 16, lora_alpha = 32, lora_dropout = 0.1)

model_repo = 'facebook/m2m100_418M'

model = AutoModelForSeq2SeqLM.from_pretrained(model_repo, proxies=proxies)

tokenizer = AutoTokenizer.from_pretrained(model_repo, proxies=proxies)

model = get_peft_model(model, peft_config)

model.print_trainable_parameter()

...

peft_model_id="results"

model.save_pretrained(peft_model_id)

tokenizer.save_pretrained(peft_model_id)

torch.save(model.state_dict(), path)

But I got the error message below.

AttributeError: 'M2M100ForConditionalGeneration' object has no attribute 'print_trainable_parameter'

If I comment out 'print_trainable_parameter()', it runs without error, but I can't be sure it is working correctly. How can I solve this? Also, does PEFT support other models that are not listed in models-support-matrix?

Thank you!

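The method is print_trainable_parameters (plural), not print_trainable_parameter. The AttributeError names M2M100ForConditionalGeneration because the misspelled attribute does not exist on the PEFT wrapper, so the lookup falls through to the wrapped base model.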
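Once the call is spelled model.print_trainable_parameters(), one way to check that LoRA was attached as intended is to compare the trainable count it reports with a back-of-the-envelope estimate. The sketch below is plain Python and does not load the model. It assumes a hidden size of 1024 and 12 encoder plus 12 decoder layers for facebook/m2m100_418M (values not stated in this thread, so check model.config), and it assumes that "q_proj" and "v_proj" match both the self-attention and cross-attention blocks. The helper names are made up for illustration.

```python
# Rough estimate of how many trainable parameters LoRA adds, for comparison
# with the number reported by model.print_trainable_parameters().
# ASSUMPTIONS (not from the thread): d_model=1024, 12 encoder layers and
# 12 decoder layers for facebook/m2m100_418M. Verify against model.config.


def lora_param_count(in_features: int, out_features: int, r: int) -> int:
    """Parameters in one LoRA pair: A is (r x in), B is (out x r)."""
    return r * in_features + out_features * r


def expected_trainable(d_model: int, encoder_layers: int, decoder_layers: int,
                       r: int, targets_per_attention: int = 2) -> int:
    """Estimate LoRA parameters when q_proj and v_proj are the targets."""
    # Each encoder layer has one attention block (self-attention).
    # Each decoder layer has two (self-attention and cross-attention).
    attention_blocks = encoder_layers + 2 * decoder_layers
    # Two adapted projections (q_proj, v_proj) per attention block.
    adapted_modules = attention_blocks * targets_per_attention
    return adapted_modules * lora_param_count(d_model, d_model, r)


estimate = expected_trainable(d_model=1024, encoder_layers=12,
                              decoder_layers=12, r=16)
print(f"expected LoRA trainable params: {estimate:,}")
```

If the reported trainable count is far from this estimate, the target_modules names probably did not match the layers you expected.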

Thank you for confirming that it is applicable to other models. As for print_trainable_parameter, sorry for bothering you with such a minor mistake.