Multi-GPU bloomz-7b1 fails: RuntimeError: module must have its parameters and buffers on device cuda:0 (device_ids[0]) but found one of them on device: cuda:7

I've seen many issues about running on multiple GPUs, so I thought I'd add my own case.

First, I have the latest version of transformers:

transformers 4.28.0.dev0

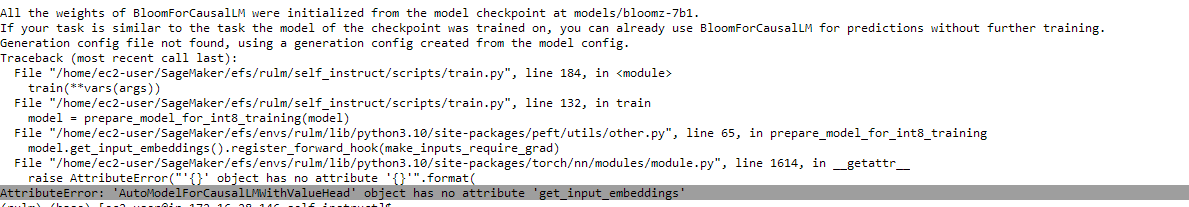
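Multi-GPU and 8-bit problems like this one usually depend on the versions of several packages together, and later in this thread transformers, peft, and accelerate are all reinstalled from source. Below is a minimal sketch for collecting those versions in one place using only the standard library's `importlib.metadata`. The helper name `installed_versions` and the default package list are illustrative choices, not something from the original report.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(
    packages=("transformers", "peft", "accelerate", "bitsandbytes", "torch"),
):
    """Return {package: version string, or None if it is not installed}."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            # The package is missing from the current environment.
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print(f"{name}: {ver if ver is not None else 'not installed'}")
```

Pasting this output into a report shows the whole relevant stack, not just transformers.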

Second, the error I get when initializing the model:

RuntimeError: module must have its parameters and buffers on device cuda:0 (device_ids[0]) but found one of them on device: cuda:7

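Third, my code to initialize the model:

```python
from transformers import AutoTokenizer, AutoModelForSeq2SeqLM, AutoModelForCausalLM
from peft import get_peft_model, LoraConfig, prepare_model_for_int8_training

# Load the model in 8-bit; device_map="auto" lets accelerate distribute
# the layers across the available GPUs.
model = AutoModelForCausalLM.from_pretrained(
    "models/bloomz-7b1",
    load_in_8bit=True,
    device_map="auto",
)

# Prepare the int8 model for training with PEFT.
model = prepare_model_for_int8_training(model)
```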

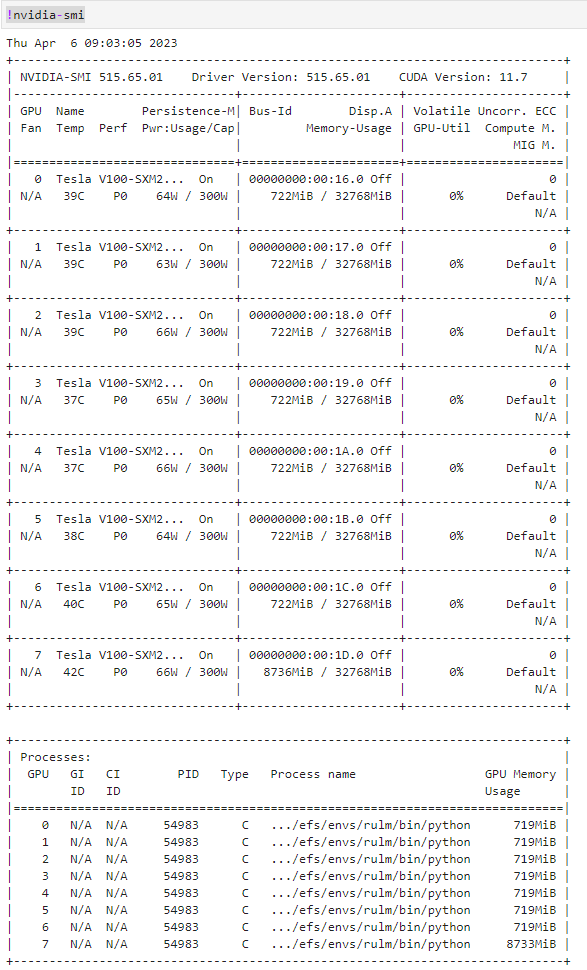

I can see that the model's weights are stored on GPU 7:

`device(type='cuda', index=7)`
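With `device_map="auto"`, different layers can end up on different GPUs, and transformers records that placement in `model.hf_device_map` (a dict from module name to device). The error message itself comes from `torch.nn.DataParallel`, which requires every parameter to live on `device_ids[0]`. A model that is already split across GPUs cannot meet that requirement. The sketch below inspects a device map as plain data. The helper names are hypothetical, and the example map is invented for illustration; it is not the real layout of bloomz-7b1.

```python
from collections import Counter


def summarize_device_map(device_map):
    """Count how many modules are assigned to each device.

    `device_map` is a plain dict like `model.hf_device_map`:
    module name -> device (int GPU index, or a string such as "cpu").
    """
    return Counter(device_map.values())


def is_split_across_devices(device_map):
    """True if modules are spread over more than one device.

    Such a model is already using naive model parallelism, so wrapping it
    in torch.nn.DataParallel (everything on device_ids[0]) fails.
    """
    return len(set(device_map.values())) > 1


if __name__ == "__main__":
    # Hypothetical map for illustration; print model.hf_device_map for the real one.
    example_map = {
        "transformer.word_embeddings": 0,
        "transformer.h.0": 0,
        "transformer.h.29": 7,
        "lm_head": 7,
    }
    print(summarize_device_map(example_map))  # modules per device
    print(is_split_across_devices(example_map))
```

On the loaded model, `summarize_device_map(model.hf_device_map)` shows how many modules landed on each GPU.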

I would expect that if the model stored its parameters on GPU 0 instead, the problem above would be solved.
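One way to test this expectation is to load the whole model onto a single GPU instead of letting `device_map="auto"` spread it out. In transformers, a `device_map` whose only key is the empty string `""` assigns the entire model to one device. The sketch below assumes that bloomz-7b1 in 8-bit (roughly 7 GB of weights) fits on one of your GPUs. The helper `single_gpu_load_kwargs` is hypothetical.

```python
def single_gpu_load_kwargs(gpu_index=0, load_in_8bit=True):
    """Build from_pretrained kwargs that place the entire model on one GPU.

    The empty-string key "" in device_map refers to the whole model, so every
    module goes to `gpu_index` instead of being spread across GPUs.
    """
    if not isinstance(gpu_index, int) or gpu_index < 0:
        raise ValueError("gpu_index must be a non-negative int")
    return {"load_in_8bit": load_in_8bit, "device_map": {"": gpu_index}}


if __name__ == "__main__":
    print(single_gpu_load_kwargs(0))
    # With transformers installed and a GPU available, this would be used as:
    # model = AutoModelForCausalLM.from_pretrained(
    #     "models/bloomz-7b1", **single_gpu_load_kwargs(0)
    # )
```

This approach gives up splitting the model across GPUs. Restricting the process to one GPU, for example with `CUDA_VISIBLE_DEVICES=0`, also prevents a multi-GPU `DataParallel` wrapper from being applied.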

Please let me know if there is a fix.

P.S. I tried setting the attributes as suggested in this comment, but that led me to another common error: `RuntimeError: expected scalar type Half but found Float`.

https://github.com/huggingface/peft/issues/269#issuecomment-1498814310

https://github.com/huggingface/peft/issues/269#issuecomment-1498868232
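The linked comments are not reproduced here. A workaround commonly suggested for this error around that time was to mark the model as model-parallel by setting `is_parallelizable = True` and `model_parallel = True`. This is an assumption about the linked comments, not a quote from them. The Hugging Face `Trainer` of that era checked these attributes and, when both were set, treated the model as already split and did not wrap it in `torch.nn.DataParallel`. The sketch below sets them only when the device map actually spans several devices. The helper name is hypothetical, and the sketch does nothing about the separate "expected scalar type Half but found Float" error.

```python
from types import SimpleNamespace


def mark_model_parallel(model):
    """Set the attributes the HF Trainer checks before falling back to DataParallel.

    Only applies when `model.hf_device_map` (if present) spans more than one
    device. Returns True if the attributes were set.
    """
    device_map = getattr(model, "hf_device_map", None) or {}
    if len(set(device_map.values())) <= 1:
        return False  # single device, or no device map: nothing to mark
    model.is_parallelizable = True
    model.model_parallel = True
    return True


if __name__ == "__main__":
    # Stand-in object for illustration; pass the real loaded model in practice.
    fake_model = SimpleNamespace(hf_device_map={"transformer.h.0": 0, "lm_head": 7})
    print(mark_model_parallel(fake_model), fake_model.model_parallel)
```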

Hi @nd7141

Can you try running the script with transformers installed from source?

```shell
pip install git+https://github.com/huggingface/transformers.git
```

I believe this PR: https://github.com/huggingface/transformers/pull/22532 is not included in your installed transformers package.

I uninstalled and reinstalled everything with:

```shell
pip install git+https://github.com/huggingface/peft.git
pip install git+https://github.com/huggingface/accelerate.git
pip install git+https://github.com/huggingface/transformers.git
```

It didn't help. I still get the familiar error: `RuntimeError: expected scalar type Half but found Float`.

What does help is setting `load_in_8bit=False`, but then it fails with out-of-memory (OOM) errors (see here).
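The OOM with `load_in_8bit=False` is consistent with simple arithmetic on weight size: each parameter takes about 1 byte in int8, 2 bytes in fp16, and 4 bytes in fp32. The rough sketch below assumes about 7.1 billion parameters, as the model name suggests, and counts weights only. Gradients, optimizer states, and activations needed for training add to these figures. Whether this alone explains the OOM depends on GPU memory and the training setup.

```python
BYTES_PER_PARAM = {"int8": 1, "fp16": 2, "fp32": 4}


def weight_memory_gb(n_params, dtype):
    """Approximate memory for model weights alone, in decimal GB (1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    n_params = 7.1e9  # assumption: roughly 7.1B parameters for bloomz-7b1
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: ~{weight_memory_gb(n_params, dtype):.1f} GB")
```

Under these assumptions, dropping 8-bit loading roughly doubles the weight footprint (fp16) or quadruples it (fp32).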

This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.