On MPS, using num_images_per_prompt with StableDiffusionImg2ImgPipeline results in noise

Describe the bug

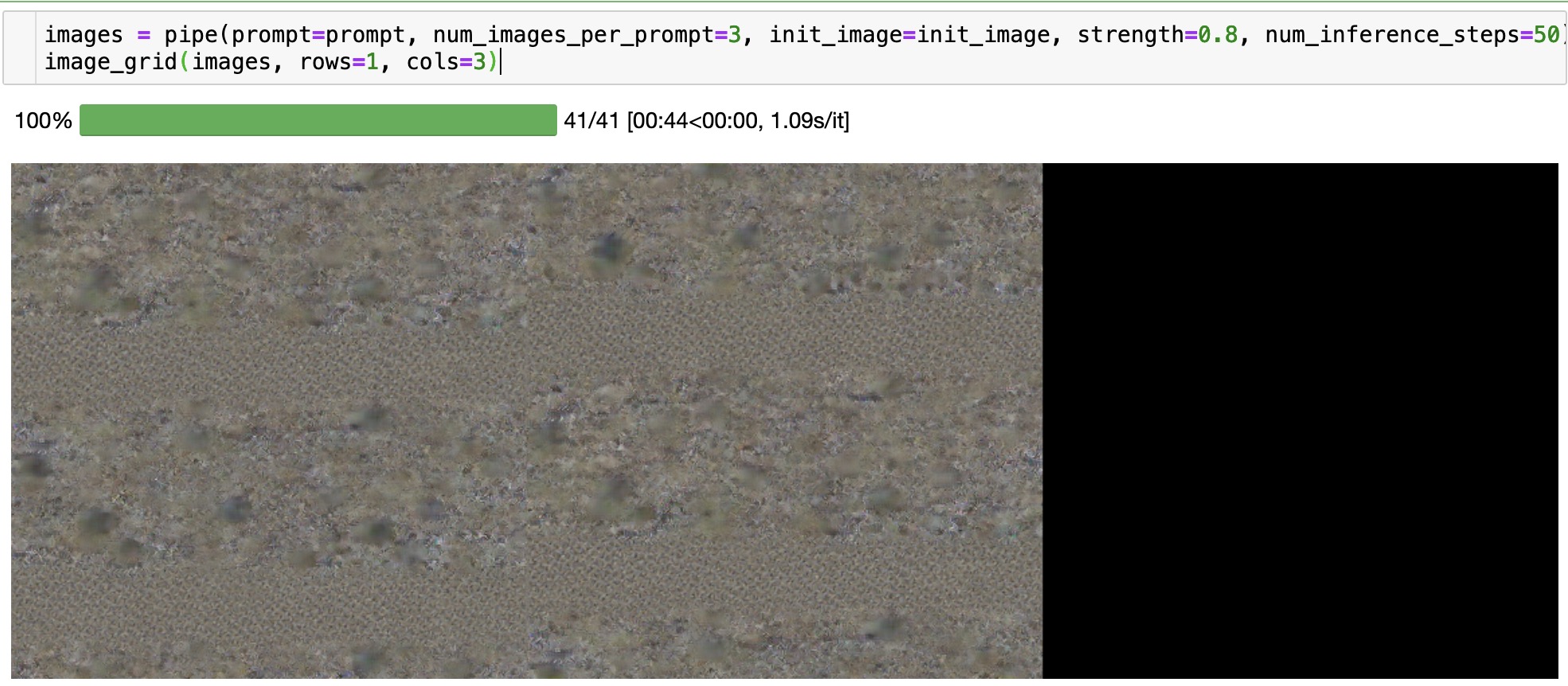

If you try to generate multiple images with StableDiffusionImg2ImgPipeline by using the num_images_per_prompt parameter, under MPS all you get is noise/brown images.

Reproduction

The following code should show the issue:

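import torch

from PIL import Image

from diffusers import StableDiffusionImg2ImgPipeline

# Prefer CUDA, then MPS (Apple Silicon), then fall back to CPU.

device = "cuda" if torch.cuda.is_available() else "mps" if torch.backends.mps.is_available() else "cpu"

pipe = StableDiffusionImg2ImgPipeline.from_pretrained("CompVis/stable-diffusion-v1-4").to(device)

pipe.enable_attention_slicing()

init_image = Image.open('wolf.png').convert("RGB")

torch.manual_seed(1000)

prompt = "Wolf howling at the moon, photorealistic 4K"

# Requesting more than one image per prompt triggers the bug on MPS.

images = pipe(prompt=prompt, num_images_per_prompt=3, init_image=init_image, strength=0.8, num_inference_steps=50).images

# image_grid is not defined in this snippet; it is assumed to be a helper that tiles PIL images into a grid.

image_grid(images, rows=1, cols=3)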

Logs

No response

System Info

Python 3.9.13, torch 1.14.0.dev20221021, diffusers 0.6.0, macOS 12.6, Apple M1 Max with 32GB RAM

Hi @FahimF! This issue still depends on https://github.com/pytorch/pytorch/issues/84039#issuecomment-1281125012 to be fully resolved.

Hey @pcuenca, I had a vague recollection that this was possibly at the PyTorch end but was juggling a bunch of things today and so went ahead and created the bug report instead of doing further searches. Apologies 🙂

Sure @FahimF, no problem at all! I thought there was an old issue about this but couldn't find it either, so we'll use this one to have it tracked :)

This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the contributing guidelines are likely to be ignored.

@pcuenca is this still relevant?

@patrickvonplaten Yes, this is still happening ... I thought this was fixed but apparently I had all my code set to do one image at a time because of this bug. Just tested by passing num_images_per_prompt a value of 2 and I still get noise/brown image instead of an actual image.

Update: Just to be accurate, I tested with a num_images_per_prompt value of 3 as well and that too results in the same behaviour. You only get images if num_images_per_prompt is set to 1.
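The one-image-at-a-time workaround mentioned above can be sketched roughly as follows. This is only an illustration: the helper name `generate_one_at_a_time` is hypothetical and not part of diffusers, and it assumes nothing more than a callable pipeline whose result exposes an `.images` list, as in the reproduction code.

```python
from typing import Any, Callable, List


def generate_one_at_a_time(pipe: Callable[..., Any], num_images: int, **kwargs: Any) -> List[Any]:
    """Call `pipe` once per image instead of batching with num_images_per_prompt."""
    # Drop any caller-supplied batch size so it cannot conflict with the forced value of 1.
    kwargs.pop("num_images_per_prompt", None)
    images: List[Any] = []
    for _ in range(num_images):
        # A single image per call is the only case reported to work on MPS in this thread.
        result = pipe(num_images_per_prompt=1, **kwargs)
        images.extend(result.images)
    return images


# Hypothetical usage with the objects from the reproduction code:
# images = generate_one_at_a_time(
#     pipe, 3, prompt=prompt, init_image=init_image, strength=0.8, num_inference_steps=50
# )
```

This trades batching for correctness, so it will likely be slower than a single batched call on backends where batching works.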

Gentle ping @pcuenca here :-)

I pinged the PyTorch team again. Last time, they confirmed that the problem appeared to lie on the PyTorch side of things (not in the Apple framework) :)


InvokeAI ran into similar issues: https://github.com/invoke-ai/InvokeAI/pull/2458

The only workaround we found was to run the whole VAE encode step on CPU instead of MPS.

@keturn I tried the same fix in diffusers but it didn't fix the problem, unfortunately. With and without the fix, the img2img pipeline works when generating 1 image, but produces wrong results when generating more than 1. I'm using PyTorch 1.13.1 (stable release). Would you happen to have any additional insight on what other factors might be involved? In addition, @kulinseth mentioned that the fix would possibly be applied in 2.0: https://github.com/pytorch/pytorch/issues/84039#issuecomment-1373573246

Hi @FahimF - thanks for filing this issue. The fix for this issue just landed in PyTorch master (https://github.com/pytorch/pytorch/pull/94963) and the next nightly build should have it. Please let me know if you still see any issues after that.

This has now been fixed in the nightly versions of the upcoming PyTorch 2.0: https://github.com/pytorch/pytorch/pull/95325#event-8602259017
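For code that has to run on both older and newer PyTorch builds, a coarse version check could decide whether to batch with num_images_per_prompt or fall back to one image per call. The sketch below is an assumption-laden illustration: the helper name `is_torch_2_or_newer` is hypothetical, and checking only the major version cannot tell whether a particular 2.0 nightly predates the fix.

```python
import re


def is_torch_2_or_newer(version: str) -> bool:
    """Return True if a PyTorch version string has a major version of 2 or higher."""
    # Matches the leading "major.minor" of strings such as "1.13.1" or "1.14.0.dev20221021".
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"Unrecognized version string: {version!r}")
    return int(match.group(1)) >= 2


# Hypothetical usage:
# import torch
# batching_probably_safe = is_torch_2_or_newer(torch.__version__)
```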
