diffusers

diffusers copied to clipboard

diffusers copied to clipboard

Best practices / example results and run settings for Dreambooth inpainting

Hi @thedarkzeno @patil-suraj

I'm trying to run the script here, https://github.com/huggingface/diffusers/tree/main/examples/research_projects/dreambooth_inpaint (ty for this)

with similar setup as with training regular text=>image dreambooth. I'm finding that the quality of the generations for inpainting is far inferior to those that I get from pure text => image generation.

Wondering if there are any rules of thumbs that I should be following in order to get better results (e.g. does it require longer training, should I be modifying the masking method during training etc.) Are there any sample runs/results from this pipeline that I can reference?

Thanks!

Interesting! I don't know really and sadly won't have the time to look into it :sweat_smile: @thedarkzeno maybe?

The training uses random masks, this may cause it to learn a bit slower, for me it worked well with more steps like 500-1000, but it could be be different for your dataset. I'm Thinking about adding some segmentation option to optimize the training, but not sure if I will have time soon.

Thanks for the clarification - I've been training for up to 1k steps on a concept with ~7 images. I think the main issue is that it doesn't seem to be learning the concept - I tested this by basically providing an all-white mask on top of a blank base, and it was unable to re-generate the concept (though typically dreambooth would be able to overfit very quickly). Are there any results / reference runs or notebooks? I can also play around with hparams but wanted to get an e2e run working first.

As a side note - if there's a general inpainting training script, (similar to diffusers/examples/text_to_image/train_text_to_image.py)? I could also try that if so.

I had the same issue with the inpainting script (https://github.com/huggingface/diffusers/tree/main/examples/research_projects/dreambooth_inpaint).

It didn't learn the concept on 100-500 training steps, using 10 example images.

This helped for me:

- training with my own masks;

- training text encoder as it was suggested in the readme.

- increasing training steps to 2000

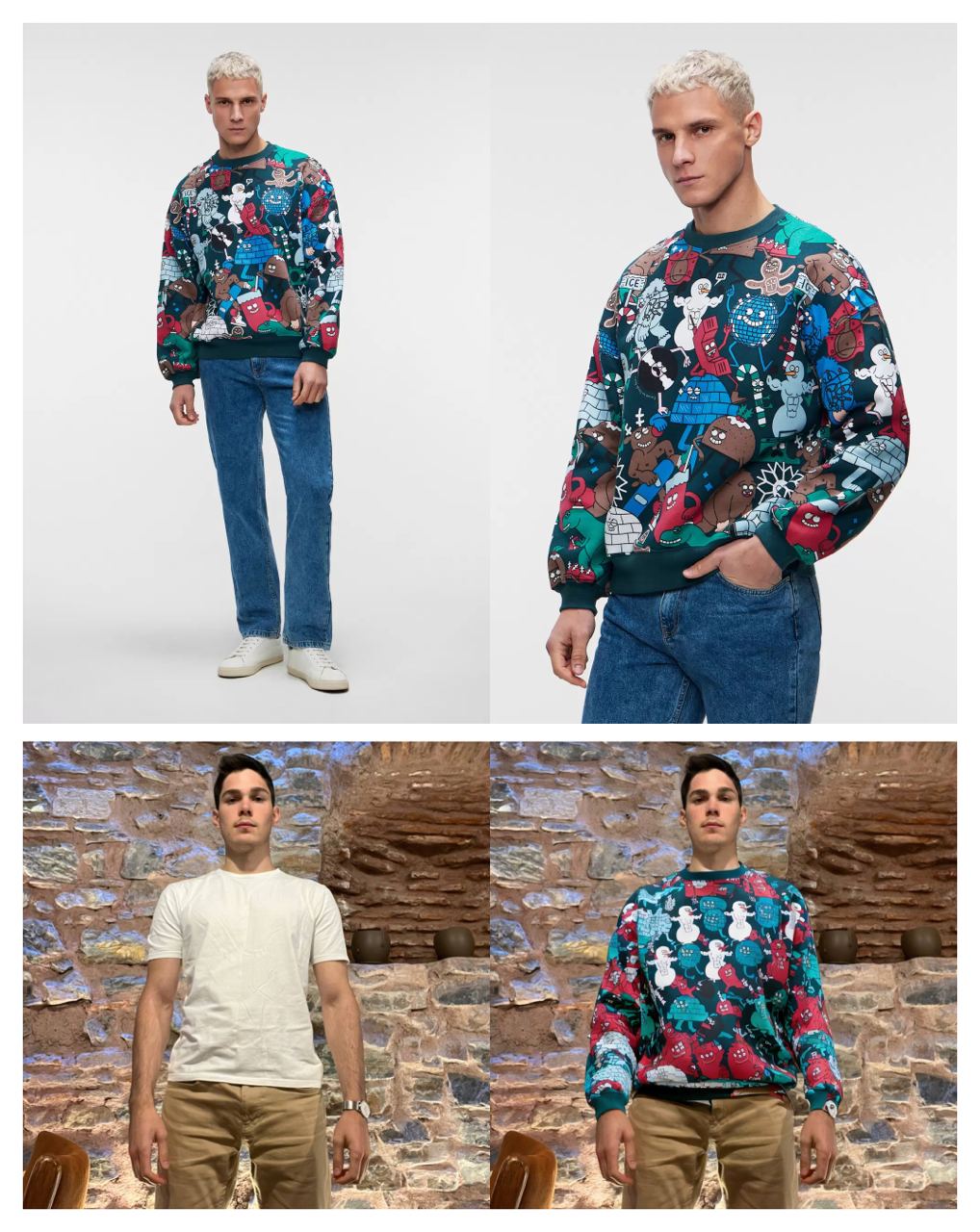

Here are the results for a sweatshirt.

Still, strange that inpainting takes much more training steps than a dreambooth text2image. @thedarkzeno Do you have any suggestions on why this might be the case?

Hey @belonel could you elaborate on the masks that you used? I don't know what masks you used, but I believe the training for inpainting takes longer because of the random masks. I think that using a model like clipseg to generate masks could make it better, but I haven't had time to implement it.

Thanks, I'll take a look at the clipseg. I used cloth segmentation using u2net to train the model to reproduce clothes. Masks look like this:

@belonel these masks look pretty good, they must be much better for training the model compared to the random ones.

This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the contributing guidelines are likely to be ignored.

@belonel , Do you have a sample notebook? How can I do that?