Coordinate Encoder - WIP

Work In Progress

See also issue #258 & #259.

Use the following Python script to view the receptive fields of the simulated place cells:

```python
import numpy as np
import matplotlib.pyplot as plt
from nupic.bindings.encoders import *
from nupic.bindings.sdr import *

# Configure a 2-D coordinate encoder at 3% sparsity (75 of 2500 bits).
P = CoordinateEncoderParameters()
P.size = 2500
P.activeBits = 75
P.numDimensions = 2
P.resolution = 1
P.seed = 42
C = CoordinateEncoder( P )

# Encode every integer location in the arena and record each cell's response.
arena = 200
M = Metrics( [P.size], 999999 )
rf = np.ones((arena, arena, P.size))
for x in range(arena):
    for y in range(arena):
        Z = SDR( P.size )
        C.encode( [x, y], Z )
        M.addData( Z )
        rf[x, y, :] = Z.dense
print(M)

# Plot the receptive fields of the first 12 cells.
for i in range(12):
    plt.subplot(3, 4, i + 1)
    plt.imshow( 255 * rf[:, :, i], interpolation='nearest' )
plt.show()
```

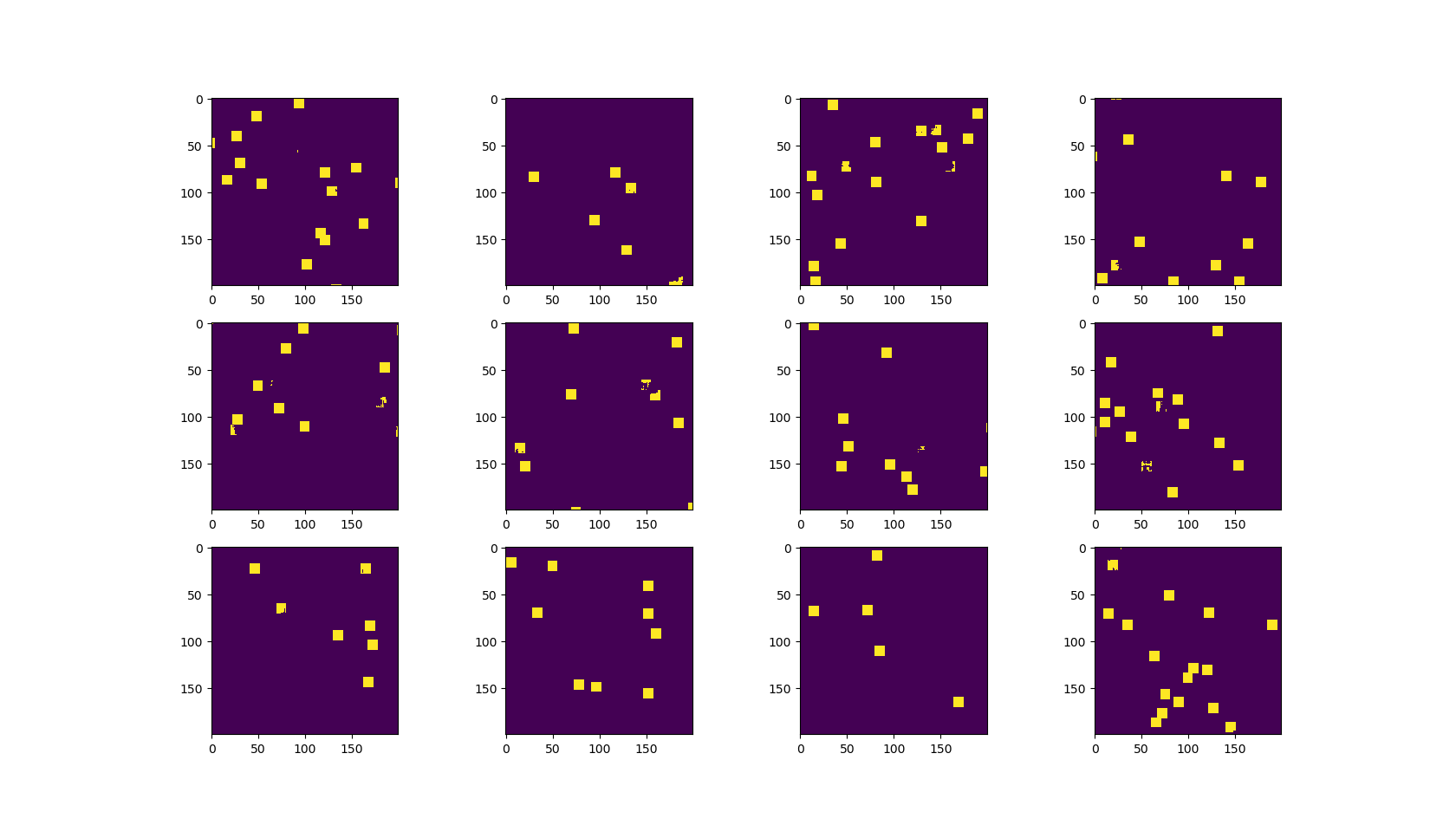

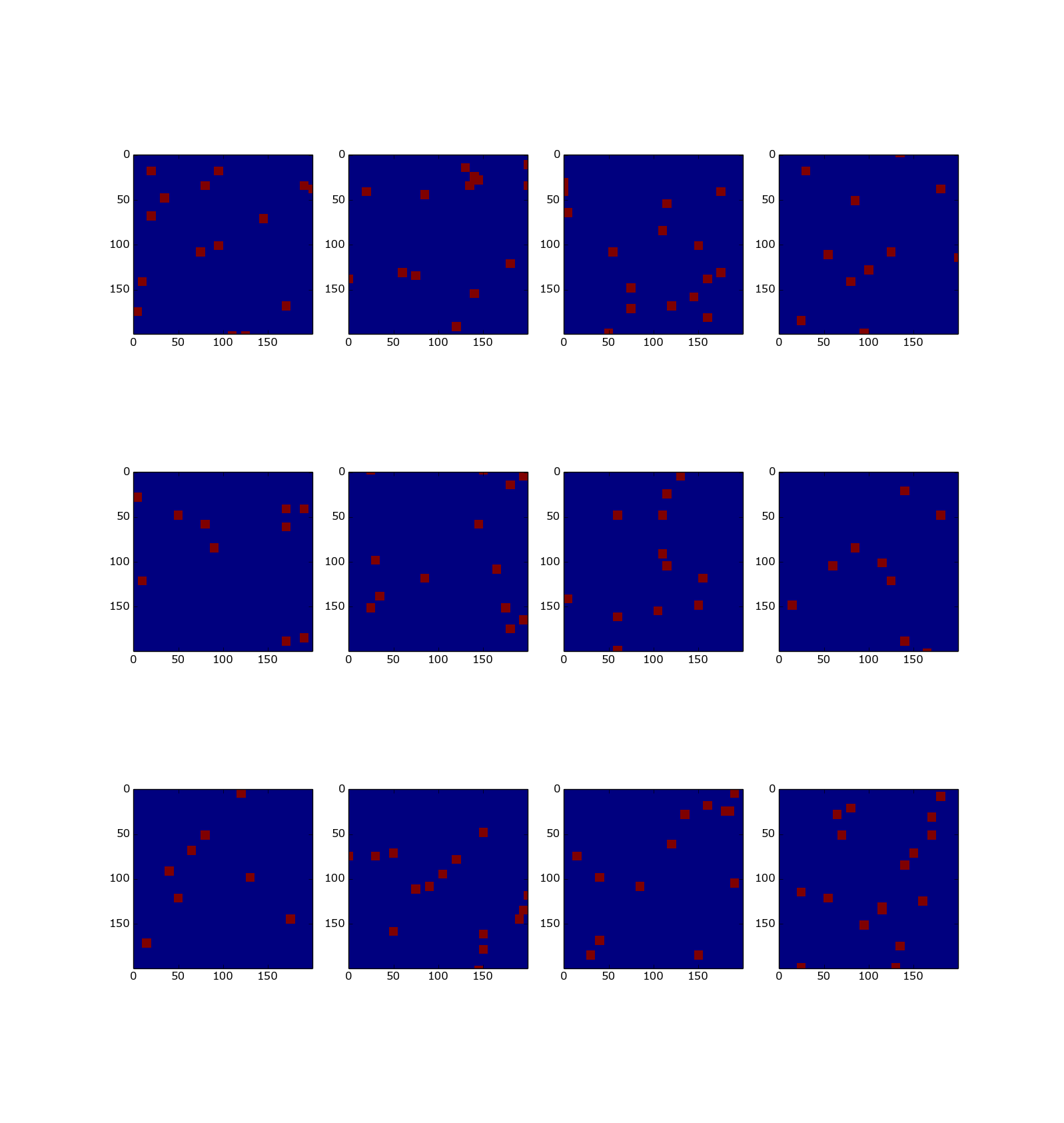

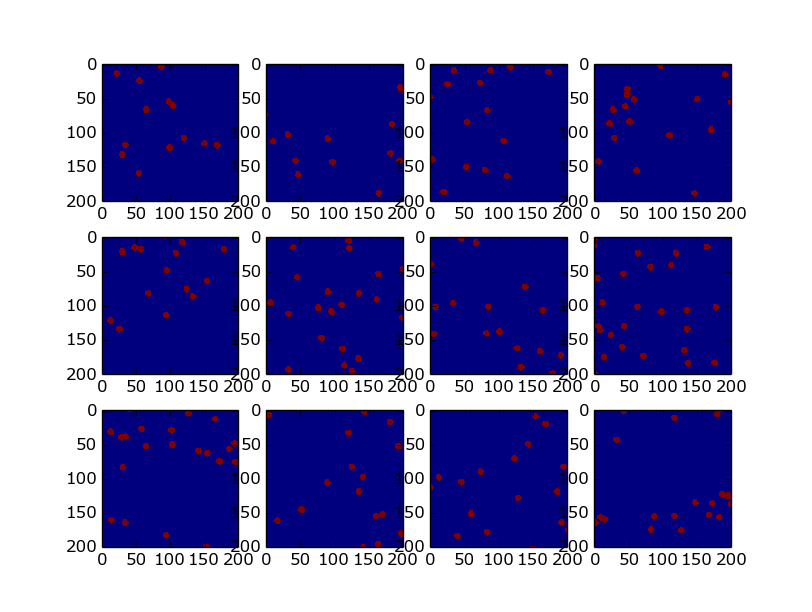

This uses a different method than the previous Numenta version. Both methods hash coordinates. The old method hashed all coordinates within the receptive field radius and then ran a competition to determine the active cells. The new method hashes exactly enough bits and then activates all of them, without competition. Here is a comparison of the old and new methods for the Coordinate Encoder with identical parameters:

Sparsity = 3%, Radius = 5 units

Numenta's Old Method:

My New Method:

Update: now with spheres:
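As a rough illustration of the two strategies described above, the following toy sketch contrasts them. It is not the htm.core or NuPIC code: the helper `h`, both function names, and in particular the shifted-grid quantization in the second function are assumptions made for illustration (the grid was picked only because it produces square receptive fields).

```python
import hashlib
from itertools import product

def h(*key):
    """Deterministic 64-bit hash of a tuple (stand-in for the real hash function)."""
    digest = hashlib.sha256(repr(key).encode()).digest()
    return int.from_bytes(digest[:8], "big")

def encode_with_competition(coord, radius, size, active_bits):
    """Toy 'old' scheme: hash every neighbor in the radius, keep the top scorers."""
    ranges = [range(c - radius, c + radius + 1) for c in coord]
    neighbors = list(product(*ranges))
    # Competition: every neighbor gets a pseudo-random score, the highest win.
    winners = sorted(neighbors, key=lambda n: h("score", *n), reverse=True)
    # Each winning neighbor is hashed to one output bit.
    return {h("bit", *n) % size for n in winners[:active_bits]}

def encode_without_competition(coord, radius, size, active_bits):
    """Toy 'new' scheme: exactly one hash per active bit, no ranking step."""
    bits = set()
    for i in range(active_bits):
        # Assumption: hash i sees the coordinate snapped to a grid of cell
        # width `radius`, shifted by an offset that depends on i and the axis.
        cell = tuple((c + h("offset", i, d) % radius) // radius
                     for d, c in enumerate(coord))
        # Two hashes landing on the same bit is a collision; the set absorbs it.
        bits.add(h("bit", i, *cell) % size)
    return bits

a = encode_without_competition((10, 10), 5, 2500, 75)
b = encode_without_competition((11, 10), 5, 2500, 75)
far = encode_without_competition((150, 150), 5, 2500, 75)
print("active bits:", len(a))
print("overlap with neighbor:", len(a & b))
print("overlap with distant point:", len(a & far))
```

In this toy model both functions return a set of active bit indices, and either one can activate fewer than `active_bits` bits when two hashes collide.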

Thanks for the visualization. Can you give some details? It's hard for me to tell whether one method is superior to the other (or even correct).

> The new method hashes exactly enough bits and then activates all of them, without competition

It seems to me that the advantage of your method is that it keeps a constant sparsity for a single coordinate (correct?) :+1:

I'm not sure, but a possible disadvantage is weaker locality/semantics (that is, similar inputs -> similar encodings).

- Can you please show how this works? For both methods, take a set of points (or a curve), a few nearby and a few distant, and encode that bitmap as coordinates using both methods.
- Show the encoding of a more complex object (face, car), simplified (B/W, contours, ...).

I will approve this PR :+1:

What we need to decide is how to handle the differences from Numenta's Coordinate encoder. Even if we provide C++/Python versions of the new encoder, I think it would be useful to keep the original implementation. Ideally the switch would be just a parameter, but here the implementations are rather different.

> It seems to me that the advantage of your method is that it keeps a constant sparsity for a single coordinate (correct?)

My method does not keep a constant sparsity; Numenta's method does. We can measure the resulting sparsity and verify that the discrepancy is acceptable.

I ignore hash collisions. In the picture I posted showing the receptive fields of each cell in the encoder, every place where two squares overlap is a hash collision, and the sparsity at that location is lower by one cell activation.
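To get a feel for how large that discrepancy might be, here is a back-of-the-envelope sketch. It assumes every hash lands uniformly and independently on one of the `size` bits, which is an idealization rather than a property verified for this encoder, and both function names are made up for the example. Under that assumption the expected number of distinct active bits after k hashes into n bits is n * (1 - (1 - 1/n)^k).

```python
import random

def expected_active_bits(size, hashes):
    """Expected count of distinct bits when `hashes` uniform hashes hit `size` bits."""
    return size * (1.0 - (1.0 - 1.0 / size) ** hashes)

def simulated_active_bits(size, hashes, trials=2000, seed=42):
    """Monte Carlo estimate of the same quantity using uniform random draws."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        # A set keeps one entry per bit, so collisions shrink it.
        total += len({rng.randrange(size) for _ in range(hashes)})
    return total / trials

size, hashes = 2500, 75  # parameters from the script in this thread
exact = expected_active_bits(size, hashes)
approx = simulated_active_bits(size, hashes)
print(f"target sparsity:    {hashes / size:.4f}")
print(f"expected sparsity:  {exact / size:.4f}")
print(f"simulated sparsity: {approx / size:.4f}")
```

With these parameters the idealized model loses roughly one bit out of 75 to collisions. The `Metrics` report printed by the script is the place to check what the encoder actually does.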

> Show the encoding of a more complex object (face, car), simplified (B/W, contours, ...)

This only encodes Cartesian coordinates. The images above show the response of a cell in the encoder at every location in an area.

> Can you give some details? It's hard for me to tell whether one method is superior to the other (or even correct).

I will write up a more in-depth description of the encoding process when I have time, and add it to the documentation too.

@ctrl-z-9000-times I've resolved the merge conflicts to push this back on track with master.

The important thing to check is location similarity. The best way to confirm this is to use the coordinate_test.py tests that I have pushed into htm.core.
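For readers unfamiliar with what a location-similarity check looks like, here is a minimal sketch. It does not reproduce the contents of coordinate_test.py: `check_location_similarity` and `overlap` are hypothetical helpers, and `toy_encode` is a stand-in encoder invented for the example so that the block runs without htm.core installed.

```python
import hashlib

def overlap(a, b):
    """Number of active bits shared by two encodings (sets of bit indices)."""
    return len(a & b)

def check_location_similarity(encode, origin, near_step=1, far_step=100):
    """Return (near_overlap, far_overlap); a local encoder should give near > far."""
    base = encode(origin)
    near = encode((origin[0] + near_step, origin[1]))
    far = encode((origin[0] + far_step, origin[1]))
    return overlap(base, near), overlap(base, far)

def toy_encode(coord, size=2500, active_bits=75, radius=5):
    """Hypothetical stand-in encoder: one hash per bit over a shifted grid."""
    bits = set()
    for i in range(active_bits):
        # Snap the coordinate to a grid cell; the shift depends on the hash index.
        key = (i,) + tuple((c + i % radius) // radius for c in coord)
        digest = hashlib.sha256(repr(key).encode()).digest()
        bits.add(int.from_bytes(digest[:8], "big") % size)
    return bits

near, far = check_location_similarity(toy_encode, (50, 50))
print("overlap with neighbor:", near)
print("overlap with distant point:", far)
```

To run the same check against the real encoder, `encode` could be replaced by a small wrapper that calls `C.encode` from the script above and returns the set of active bit indices.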