ColossalAI


[BUG]: Acc decreases when training with PP and TP

🐛 Describe the bug

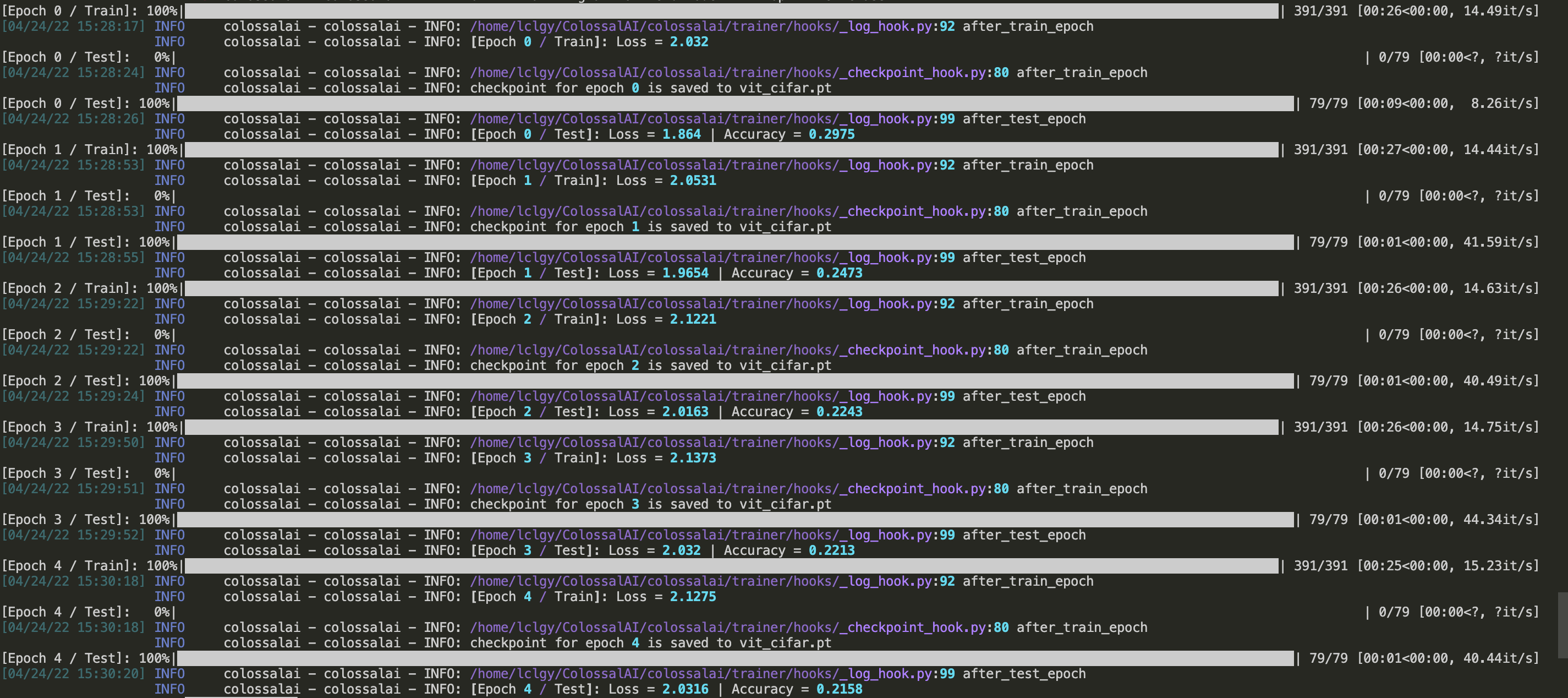

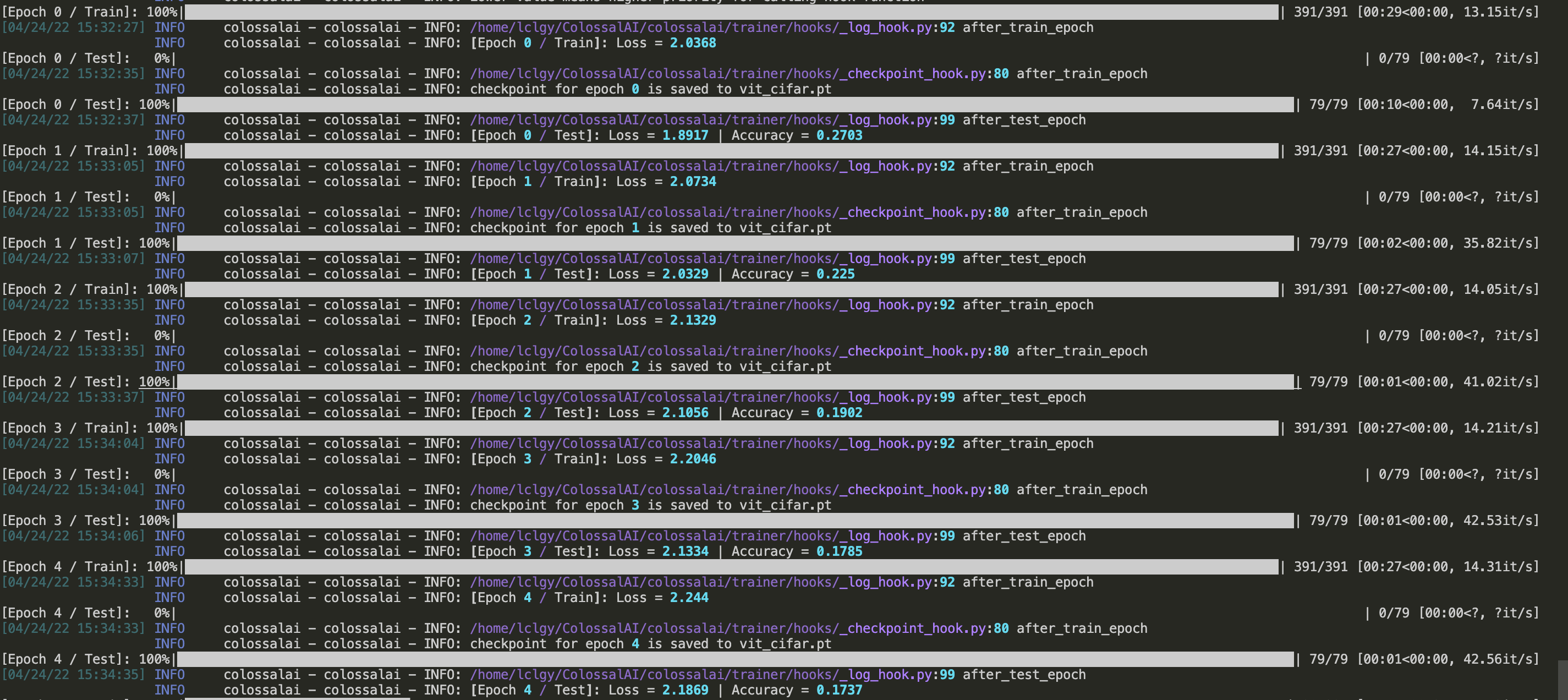

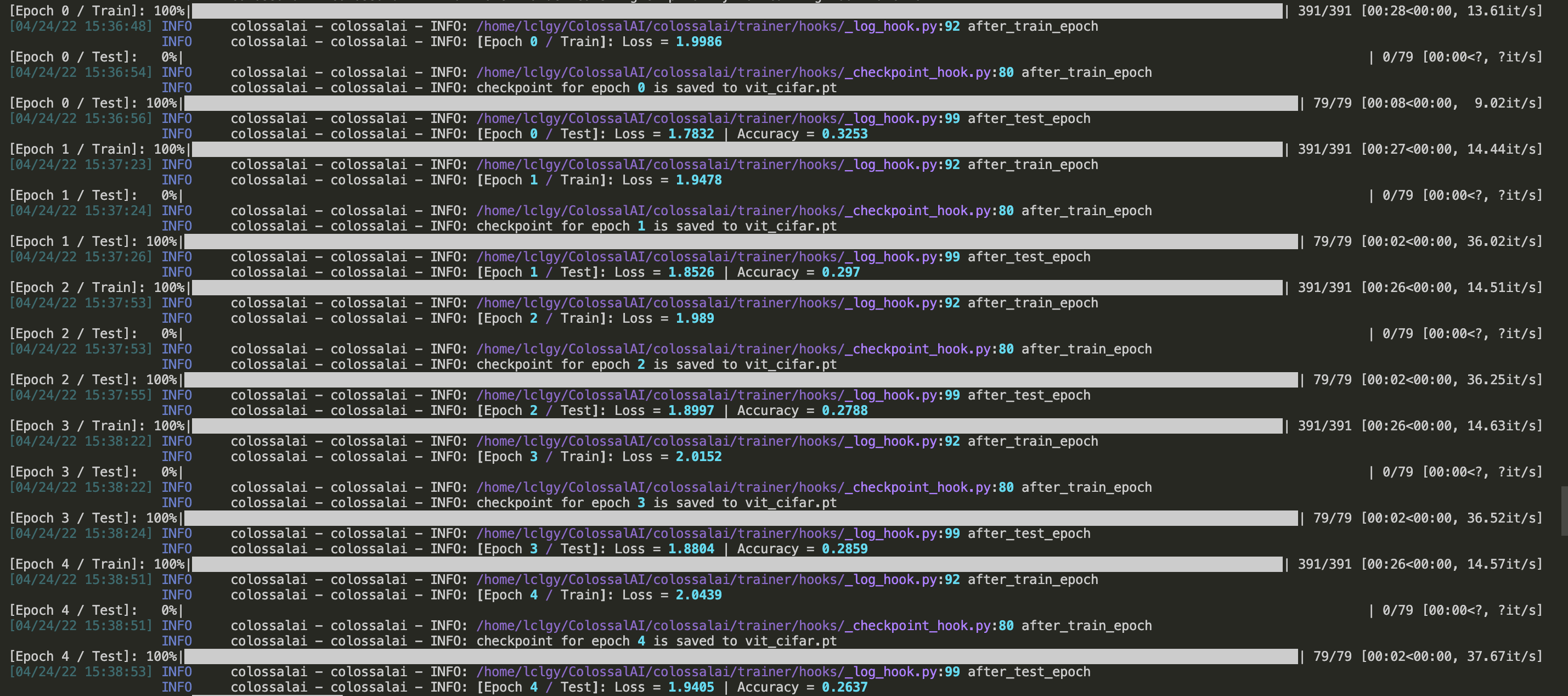

When I trained ViT on CIFAR-10, I found that with a hybrid of pipeline parallelism (PP) and tensor parallelism (TP), the test accuracy decreased as training progressed. Training behaved normally with PP or TP alone.
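For reference, here is a minimal sketch of how a hybrid PP+TP config like the ones in this report (pipeline=2, tensor size=2, NUM_MICRO_BATCHES=2) splits the GPUs and the batch. It assumes the data-parallel size is whatever remains after pipeline and tensor parallelism, i.e. `world_size // (pipeline * tensor)`. The world size of 8 and global batch size of 128 are hypothetical (the report does not state them), and `parallel_sizes` / `micro_batch_size` are illustrative helpers, not ColossalAI functions.

```python
# Sketch only: how a hybrid PP x TP config might partition GPUs and batches.
# Assumption: data-parallel size = world_size // (pipeline * tensor).
# World size 8 and global batch 128 below are hypothetical example values.


def parallel_sizes(world_size: int, pipeline: int, tensor: int) -> dict:
    """Return pipeline, tensor and (inferred) data-parallel sizes."""
    model_parallel = pipeline * tensor
    if world_size % model_parallel != 0:
        raise ValueError(
            f"world size {world_size} not divisible by pipeline*tensor={model_parallel}"
        )
    return {"pipeline": pipeline, "tensor": tensor, "data": world_size // model_parallel}


def micro_batch_size(global_batch: int, data: int, num_micro_batches: int) -> int:
    """Samples per micro-batch on each data-parallel replica."""
    per_replica, rem = divmod(global_batch, data)
    if rem:
        raise ValueError("global batch must split evenly across data-parallel replicas")
    size, rem = divmod(per_replica, num_micro_batches)
    if rem:
        raise ValueError("per-replica batch must split evenly into micro-batches")
    return size


if __name__ == "__main__":
    sizes = parallel_sizes(world_size=8, pipeline=2, tensor=2)
    print(sizes)  # {'pipeline': 2, 'tensor': 2, 'data': 2}
    print(micro_batch_size(128, sizes["data"], num_micro_batches=2))  # 32
```

With only 4 GPUs the same config leaves a data-parallel size of 1, so each step would be split into two micro-batches of half the global batch.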

- Non-interleaved PP and 1D TP:

```python
CONFIG = dict(
    NUM_MICRO_BATCHES=2,
    parallel=dict(pipeline=2, tensor=dict(size=2, mode='1d')),
)
```

- Interleaved PP (num_chunks=1) and 1D TP:

```python
NUM_CHUNKS = 1

CONFIG = dict(
    NUM_MICRO_BATCHES=2,
    parallel=dict(pipeline=2, tensor=dict(size=2, mode='1d')),
    model=dict(num_chunks=NUM_CHUNKS),
)
```

- Interleaved PP (num_chunks=2) and 1D TP:

```python
NUM_CHUNKS = 2

CONFIG = dict(
    NUM_MICRO_BATCHES=2,
    parallel=dict(pipeline=2, tensor=dict(size=2, mode='1d')),
    model=dict(num_chunks=NUM_CHUNKS),
)
```
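To make the difference between the three pipeline setups concrete, here is a sketch of how transformer blocks could be assigned to pipeline stages with and without interleaving. It assumes a uniform split and Megatron-style round-robin chunk placement (virtual stage `v = chunk * pipeline + stage`); the 12-block depth is hypothetical, and `partition_layers` is an illustrative helper, not ColossalAI's actual partitioning code.

```python
# Sketch only: uniform layer partitioning for (interleaved) pipeline parallelism.
# Assumptions: layers split evenly; chunk c on stage s holds virtual stage
# v = c * pipeline + s (Megatron-style round-robin). 12 layers is hypothetical.


def partition_layers(num_layers: int, pipeline: int, num_chunks: int = 1) -> dict:
    """Map each pipeline stage to the (start, stop) layer ranges it holds."""
    num_virtual = pipeline * num_chunks
    if num_layers % num_virtual != 0:
        raise ValueError("num_layers must be divisible by pipeline * num_chunks")
    per_chunk = num_layers // num_virtual
    stages = {s: [] for s in range(pipeline)}
    for chunk in range(num_chunks):
        for stage in range(pipeline):
            v = chunk * pipeline + stage  # position of this chunk in the full model
            stages[stage].append((v * per_chunk, (v + 1) * per_chunk))
    return stages


if __name__ == "__main__":
    print(partition_layers(12, pipeline=2, num_chunks=1))
    # {0: [(0, 6)], 1: [(6, 12)]}
    print(partition_layers(12, pipeline=2, num_chunks=2))
    # {0: [(0, 3), (6, 9)], 1: [(3, 6), (9, 12)]}
```

Under these assumptions, interleaving with `num_chunks=1` produces the same placement as the non-interleaved case, so the first two configs differ only in the schedule, while `num_chunks=2` gives each stage two non-contiguous chunks.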

Environment

No response

Oh, I found that this model was defined with `torch.nn`.
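For context on why the layer definitions matter: 1D (Megatron-style) tensor parallelism relies on layers that shard their weights, while a plain `torch.nn.Linear` holds the full weight on every rank. Below is a pure-Python sketch of the column-then-row split for a two-layer MLP. Each hypothetical TP rank computes a partial output and the partials are summed, standing in for an all-reduce. The function names and example matrices are illustrative assumptions, not ColossalAI APIs.

```python
# Sketch only: 1D tensor parallelism on Y = relu(X @ A) @ B.
# A is split by columns, B by rows; summing the per-rank partial outputs
# (an all-reduce in a real run) reproduces the unsharded result.


def matmul(x, w):
    """Multiply an (n x k) by a (k x m) matrix, both given as lists of rows."""
    return [[sum(row[t] * w[t][j] for t in range(len(w))) for j in range(len(w[0]))]
            for row in x]


def relu(x):
    return [[max(0, v) for v in row] for row in x]


def add(a, b):
    return [[u + v for u, v in zip(ra, rb)] for ra, rb in zip(a, b)]


def split_columns(w, parts):
    cols = len(w[0]) // parts
    return [[row[p * cols:(p + 1) * cols] for row in w] for p in range(parts)]


def split_rows(w, parts):
    rows = len(w) // parts
    return [w[p * rows:(p + 1) * rows] for p in range(parts)]


def mlp_reference(x, a, b):
    return matmul(relu(matmul(x, a)), b)


def mlp_1d_parallel(x, a, b, tp):
    # Rank i holds column shard A_i and row shard B_i; relu is elementwise,
    # so it can be applied to each rank's column shard independently.
    partials = [matmul(relu(matmul(x, a_i)), b_i)
                for a_i, b_i in zip(split_columns(a, tp), split_rows(b, tp))]
    out = partials[0]
    for p in partials[1:]:
        out = add(out, p)  # stands in for the all-reduce across TP ranks
    return out


X = [[1, -2, 3]]
A = [[1, 0, -1, 2], [0, 1, 1, -1], [2, -1, 0, 1]]
B = [[1, 2], [3, 4], [5, 6], [7, 8]]

if __name__ == "__main__":
    print(mlp_reference(X, A, B))       # [[56, 70]]
    print(mlp_1d_parallel(X, A, B, 2))  # [[56, 70]]
```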


We have updated a lot. This issue was closed due to inactivity. Thanks.