ColossalAI

[BUG]: save model parameters are incorrect

🐛 Describe the bug

After training a Llama model with LoRA, the model saves without errors. However, the saved model parameters do not include the LoRA parameters, so the model fails at inference time.

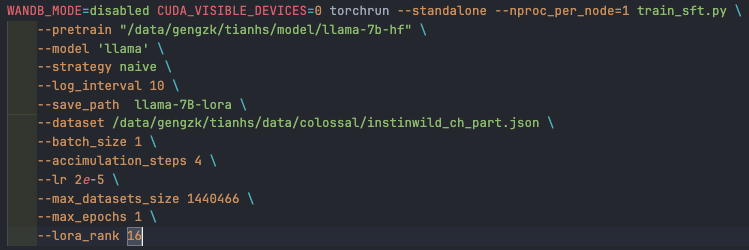

This is the content of the script I executed:

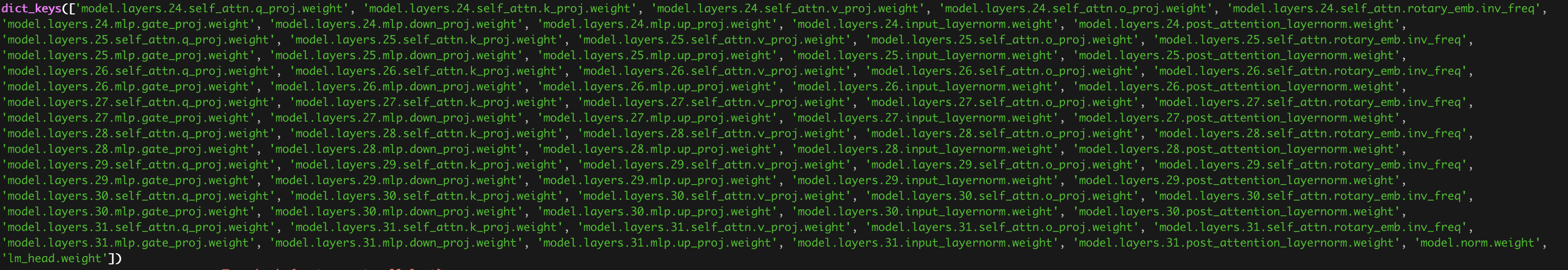

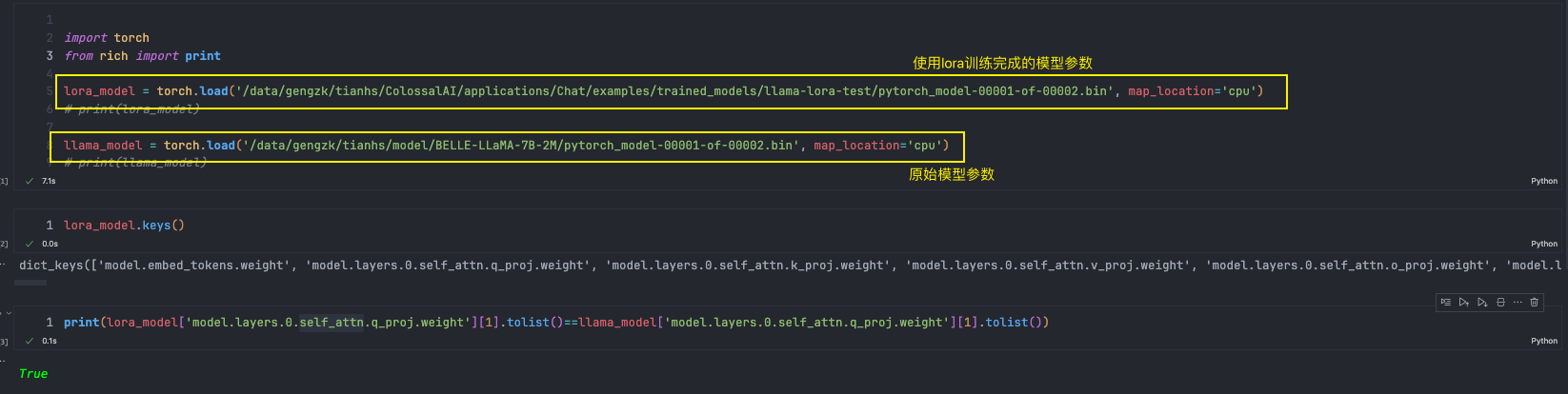

This is the case for loading saved model parameters:

Environment

No response

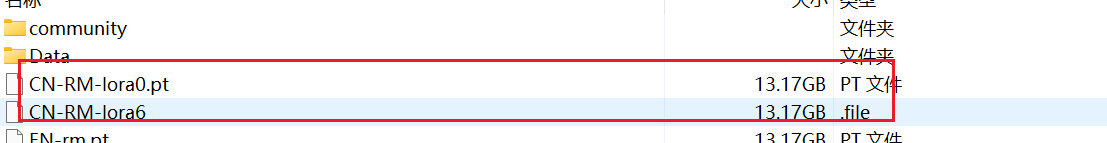

Same problem here! When I set "lora_rank" to "0", it runs successfully. But the saved model file is very big, about 13GB!

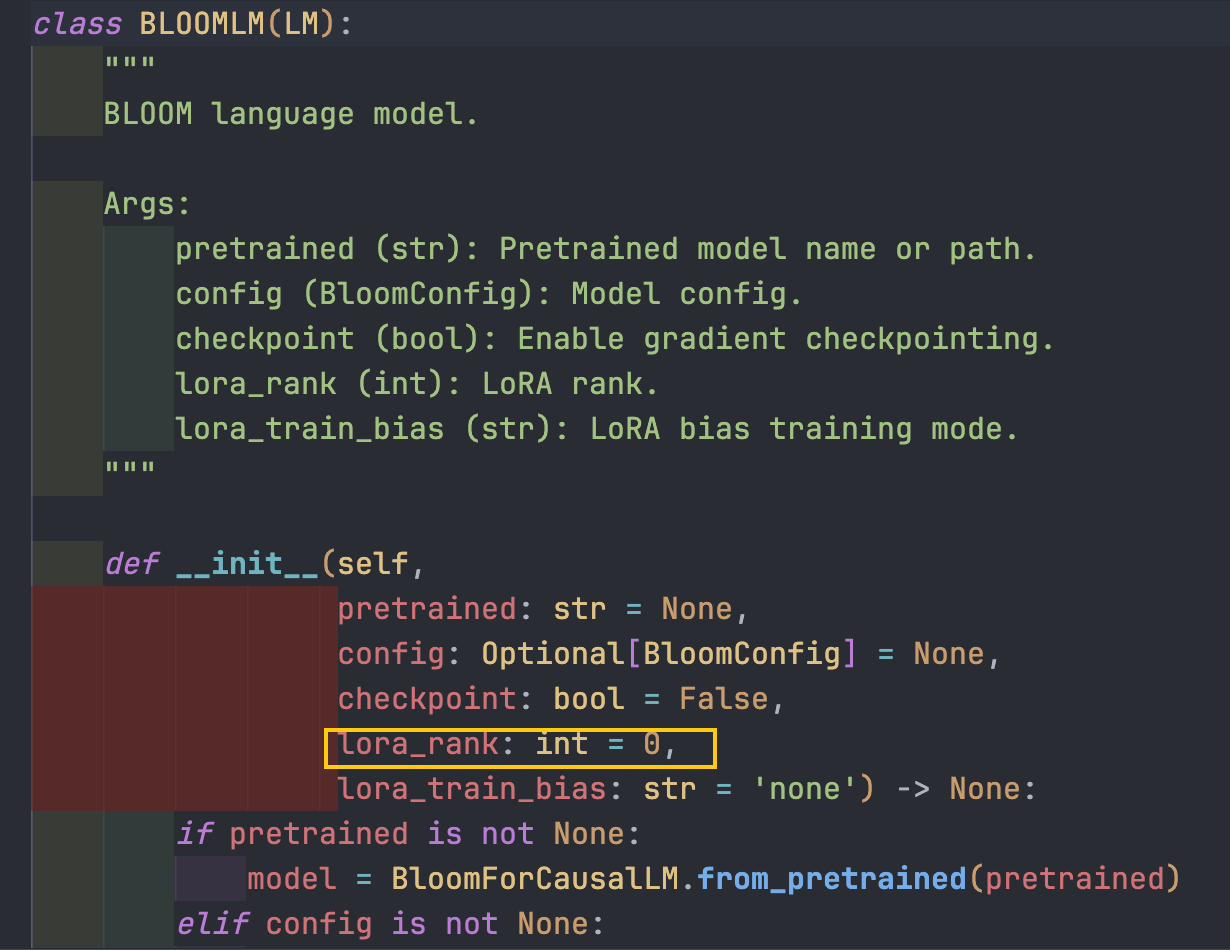

When you set lora_rank to 0, you are training the model without LoRA, which is described here:

Yes, I know that setting "lora_rank" to 0 means training the model without LoRA. But does lora_rank need to be set to the same value in stage 1, stage 2, and stage 3? I think that is unnecessary! But when I do this, I get the same error you encountered when loading the model.

In stage 2, I set lora_rank to 6. After training finished, the size of the saved RM is 13GB.

Hi @HaixHan @tianbuwei, the LoRA parameters are merged into the original weights when the final model weights are saved. You can refer to these lines in lora.py.
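For readers following along, the merge described in the comment above can be sketched roughly as follows. This is a minimal illustration in plain Python, assuming the common LoRA convention W' = W + (alpha / r) * B·A. The names `merge_lora`, `lora_a`, `lora_b`, and `matmul` are hypothetical and are not taken from ColossalAI's lora.py.

```python
def matmul(x, y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def merge_lora(weight, lora_a, lora_b, lora_alpha, rank):
    """Return weight + (lora_alpha / rank) * (lora_b @ lora_a).

    Shapes (assumed): weight is out x in, lora_b is out x rank, lora_a is rank x in.
    """
    if rank == 0:
        # No adapter: return an unchanged copy of the base weight.
        return [row[:] for row in weight]
    scaling = lora_alpha / rank
    delta = matmul(lora_b, lora_a)  # low-rank update, same shape as weight
    return [
        [w + scaling * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(weight, delta)
    ]


# Toy example with a 2 x 2 base weight and a rank-1 adapter.
weight = [[1.0, 0.0], [0.0, 1.0]]
lora_b = [[1.0], [2.0]]  # out x rank
lora_a = [[0.5, 0.5]]    # rank x in
merged = merge_lora(weight, lora_a, lora_b, lora_alpha=1, rank=1)
```

Note that the merged matrix has the same shape as the base weight. If the checkpoint stores merged weights like this, it would be roughly the size of the full model regardless of lora_rank, which would be consistent with the 13GB file reported above.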

@Camille7777 Hello, I found that the code you submitted does not solve the problem of saving model parameters after LoRA training. After training, the saved parameters are identical to those of the original model, and the LoRA layer parameters have not been merged into the original weights.
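One way to check an observation like the one above is to compare the parameters before and after training, name by name. The sketch below uses flat lists of floats as stand-ins for tensors, and `changed_parameters` is a hypothetical helper, not part of ColossalAI. With PyTorch, one would compare the tensors from the two state dicts instead.

```python
def changed_parameters(before, after, tol=0.0):
    """Return the names of parameters whose values differ by more than tol."""
    changed = []
    for name, old in before.items():
        new = after.get(name)
        if new is None or len(new) != len(old):
            # Missing parameter or shape mismatch counts as a change.
            changed.append(name)
        elif any(abs(a - b) > tol for a, b in zip(old, new)):
            changed.append(name)
    return changed


# Toy stand-ins for two saved checkpoints (hypothetical parameter names).
before = {"q_proj.weight": [0.1, 0.2], "v_proj.weight": [0.3, 0.4]}
after = {"q_proj.weight": [0.1, 0.2], "v_proj.weight": [0.3, 0.5]}
changed = changed_parameters(before, after)
```

An empty result for the layers that carry LoRA adapters would suggest that the adapter update was not merged into the saved weights.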

So, how can I save only the LoRA weights after model training is complete? Have you already done this? Looking forward to your reply!
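A possible approach to the question above, sketched under assumptions: if the LoRA parameters have names containing `lora_` (the convention used by loralib, which also ships a similar `lora_state_dict` helper), the adapter weights can be filtered out of the full state dict before saving. The helper name `lora_only_state_dict` and the parameter names below are hypothetical, and this only works if the adapter parameters are still present and have not already been merged into the base weights.

```python
def lora_only_state_dict(state_dict, marker="lora_"):
    """Keep only the entries whose parameter name contains the LoRA marker."""
    return {name: value for name, value in state_dict.items() if marker in name}


# Toy state dict; real values would be tensors from model.state_dict().
full_state = {
    "layers.0.q_proj.weight": [1.0, 2.0],
    "layers.0.q_proj.lora_A": [0.1],
    "layers.0.q_proj.lora_B": [0.2],
}
lora_state = lora_only_state_dict(full_state)
```

With PyTorch, the filtered dict could then be written with `torch.save`, and loaded back on top of the base model with `load_state_dict(..., strict=False)`.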

Same error, any updates?