[BUG]: Error when installing from source

🐛 Describe the bug

To Reproduce

git clone https://github.com/hpcaitech/ColossalAI.git

cd ColossalAI

# install dependency

pip install -r requirements/requirements.txt

# install colossalai

pip install .

I have installed the corresponding version of PyTorch with CUDA 11.3 and Python 3.8. My environment works fine when ColossalAI is installed with pip, and the package imports successfully. But I notice that the dreambooth example is still under rapid development, so it would be better to stay up to date with the latest version.

Expected behavior

colossalai check -i should work.

Logs

root@qs-527-4961-master-0:/workspace/ColossalAI# colossalai check -i

Using /root/.cache/torch_extensions/py38_cu113 as PyTorch extensions root...

Traceback (most recent call last):

File "/opt/conda/lib/python3.8/site-packages/colossalai/kernel/__init__.py", line 4, in <module>

from colossalai._C import fused_optim

ImportError: cannot import name 'fused_optim' from 'colossalai._C' (unknown location)

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/opt/conda/bin/colossalai", line 33, in <module>

sys.exit(load_entry_point('colossalai==0.2.0', 'console_scripts', 'colossalai')())

File "/opt/conda/bin/colossalai", line 25, in importlib_load_entry_point

return next(matches).load()

File "/opt/conda/lib/python3.8/importlib/metadata.py", line 77, in load

module = import_module(match.group('module'))

File "/opt/conda/lib/python3.8/importlib/__init__.py", line 127, in import_module

return _bootstrap._gcd_import(name[level:], package, level)

File "<frozen importlib._bootstrap>", line 1014, in _gcd_import

File "<frozen importlib._bootstrap>", line 991, in _find_and_load

File "<frozen importlib._bootstrap>", line 961, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed

File "<frozen importlib._bootstrap>", line 1014, in _gcd_import

File "<frozen importlib._bootstrap>", line 991, in _find_and_load

File "<frozen importlib._bootstrap>", line 975, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 671, in _load_unlocked

File "<frozen importlib._bootstrap_external>", line 843, in exec_module

File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed

File "/opt/conda/lib/python3.8/site-packages/colossalai/__init__.py", line 1, in <module>

from .initialize import (

File "/opt/conda/lib/python3.8/site-packages/colossalai/initialize.py", line 23, in <module>

from colossalai.engine.schedule import NonPipelineSchedule, PipelineSchedule, InterleavedPipelineSchedule, get_tensor_shape

File "/opt/conda/lib/python3.8/site-packages/colossalai/engine/__init__.py", line 1, in <module>

from ._base_engine import Engine

File "/opt/conda/lib/python3.8/site-packages/colossalai/engine/_base_engine.py", line 10, in <module>

from colossalai.gemini.ophooks import register_ophooks_recursively, BaseOpHook

File "/opt/conda/lib/python3.8/site-packages/colossalai/gemini/__init__.py", line 1, in <module>

from .chunk import ChunkManager, TensorInfo, TensorState, search_chunk_configuration

File "/opt/conda/lib/python3.8/site-packages/colossalai/gemini/chunk/__init__.py", line 1, in <module>

from .chunk import Chunk, ChunkFullError, TensorInfo, TensorState

File "/opt/conda/lib/python3.8/site-packages/colossalai/gemini/chunk/chunk.py", line 9, in <module>

from colossalai.utils import get_current_device

File "/opt/conda/lib/python3.8/site-packages/colossalai/utils/__init__.py", line 3, in <module>

from .checkpointing import load_checkpoint, save_checkpoint

File "/opt/conda/lib/python3.8/site-packages/colossalai/utils/checkpointing.py", line 14, in <module>

from .common import is_using_pp

File "/opt/conda/lib/python3.8/site-packages/colossalai/utils/common.py", line 21, in <module>

from colossalai.kernel import fused_optim

File "/opt/conda/lib/python3.8/site-packages/colossalai/kernel/__init__.py", line 7, in <module>

fused_optim = FusedOptimBuilder().load()

File "/opt/conda/lib/python3.8/site-packages/colossalai/kernel/op_builder/builder.py", line 83, in load

op_module = load(name=self.name,

File "/opt/conda/lib/python3.8/site-packages/torch/utils/cpp_extension.py", line 1202, in load

return _jit_compile(

File "/opt/conda/lib/python3.8/site-packages/torch/utils/cpp_extension.py", line 1385, in _jit_compile

version = JIT_EXTENSION_VERSIONER.bump_version_if_changed(

File "/opt/conda/lib/python3.8/site-packages/torch/utils/_cpp_extension_versioner.py", line 45, in bump_version_if_changed

hash_value = hash_source_files(hash_value, source_files)

File "/opt/conda/lib/python3.8/site-packages/torch/utils/_cpp_extension_versioner.py", line 15, in hash_source_files

with open(filename) as file:

FileNotFoundError: [Errno 2] No such file or directory: '/opt/conda/lib/python3.8/site-packages/colossalai/kernel/colossalai/kernel/cuda_native/csrc/colossal_C_frontend.cpp'
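The missing path in the FileNotFoundError above contains colossalai/kernel twice in a row. One reading of the log (an assumption, not a confirmed diagnosis) is that a source path relative to the repository root was joined onto the installed kernel directory. A minimal sketch that spots such a doubled segment in any path; the helper name find_repeated_segment is hypothetical and not part of ColossalAI or PyTorch:

```python
from pathlib import PurePosixPath


def find_repeated_segment(path, min_len=2):
    """Return the longest run of at least min_len path components that
    appears twice back to back, joined with '/', or None if there is none."""
    parts = PurePosixPath(path).parts
    n = len(parts)
    # Try the longest candidate runs first so the widest duplicate is reported.
    for size in range(n // 2, min_len - 1, -1):
        for start in range(0, n - 2 * size + 1):
            first = parts[start:start + size]
            second = parts[start + size:start + 2 * size]
            if first == second:
                return "/".join(first)
    return None


# Path copied verbatim from the FileNotFoundError in the log.
missing = (
    "/opt/conda/lib/python3.8/site-packages/colossalai/kernel/"
    "colossalai/kernel/cuda_native/csrc/colossal_C_frontend.cpp"
)
print(find_repeated_segment(missing))
```

A doubled segment like this usually means the file is being looked up in the wrong place rather than simply not having been compiled.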

Environment

No response

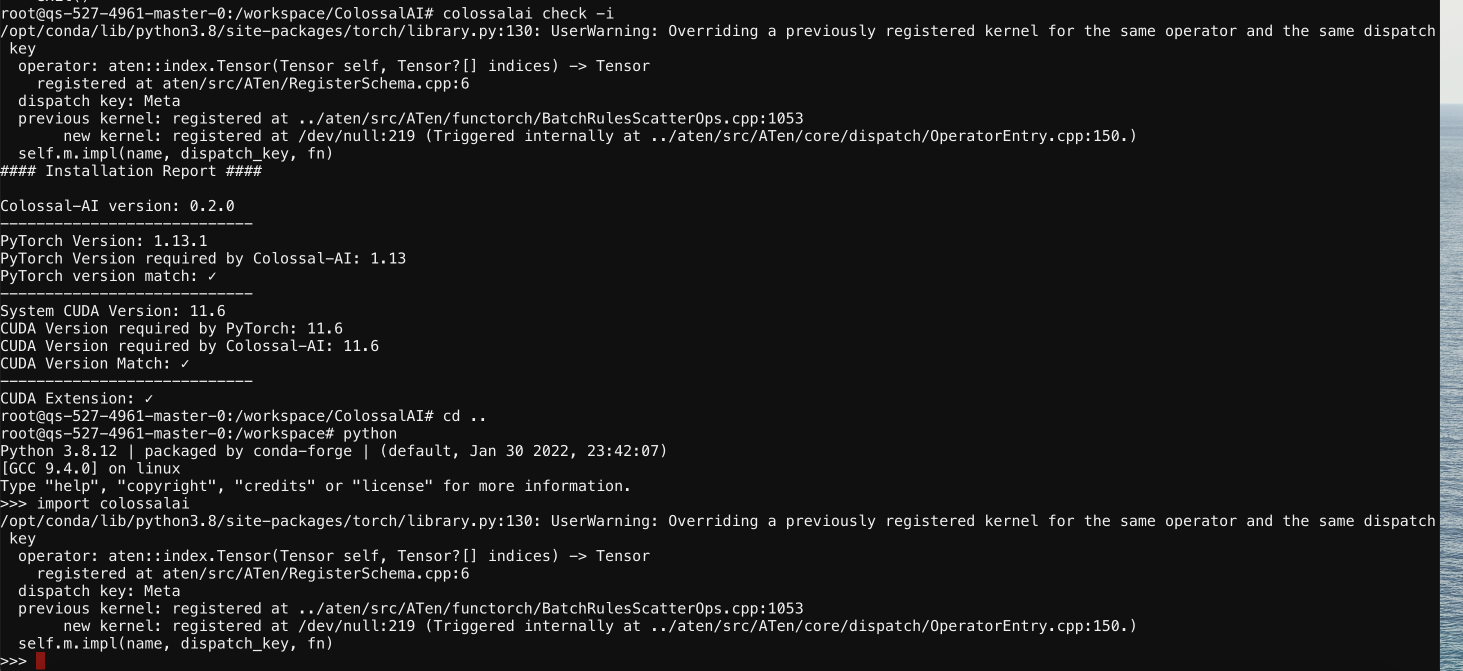

Run python setup.py clean and then python setup.py install to recompile the CPU kernel.

It doesn't work. Error messages are the same as above.

@haofanwang Sorry, this is a bug.

Could you please install ColossalAI using the following command?

CUDA_EXT=1 pip install .

Thanks, @feifeibear. It looks fine now. I will open a new issue if I run into further problems, and I'm also happy to submit a PR if I can fix it myself.

@haofanwang It is working now. You can ignore those warnings. Anyway, we will fix them later.
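For anyone who wants to confirm the fix beyond colossalai check -i, the first traceback shows that the package tries from colossalai._C import fused_optim before falling back to JIT compilation. A minimal sketch that probes for that prebuilt extension; the helper name has_prebuilt_kernel is hypothetical, and the idea that installing with CUDA_EXT=1 makes this import succeed is an assumption drawn from this thread, not from the ColossalAI documentation:

```python
import importlib


def has_prebuilt_kernel(module_name="colossalai._C", attr="fused_optim"):
    """Return True if module_name imports cleanly and exposes attr.

    The default names come from the traceback in this issue. Any exception
    raised during import (ImportError, FileNotFoundError from a failed JIT
    build, and so on) is treated as "not available".
    """
    try:
        module = importlib.import_module(module_name)
    except Exception:
        return False
    return hasattr(module, attr)


print("prebuilt fused_optim available:", has_prebuilt_kernel())
```

If this prints False after a reinstall, the package is presumably still relying on the JIT build path that failed in the log above.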