[BUG]: `RuntimeError: setStorage: sizes` when fine-tuning an mT5 model

🐛 Describe the bug

I am trying to fine-tune Google's mT5 model (I have tried mt5-small and mt5-large). Training fails with a runtime error during the forward pass.

The error is a `setStorage` failure, which I suspect is caused by a bug in custom CUDA code.

```
RuntimeError: setStorage: sizes [1024, 2816], strides [1, 1024], storage offset 42737152, and itemsize 2 requiring a storage size of 91241472 are out of bounds for storage of size 0
```

More specifically:

```
copycat/env/lib/python3.8/site-packages/transformers/tokenization_utils_base.py:2354: UserWarning: `max_length` is ignored when `padding`=`True` and there is no truncation strategy. To pad to max length, use `padding='max_length'`.
  warnings.warn(
torch.Size([2, 130]) torch.Size([2, 130]) torch.Size([2, 319])
torch.Size([2, 130, 1024])
torch.Size([2, 130, 1024])
torch.Size([2, 130, 1024])
torch.Size([2, 130, 1024])
0%| | 0/25100 [00:01<?, ?it/s]
Traceback (most recent call last):
  File "colossalai_baseline.py", line 150, in <module>
    main()
  File "colossalai_baseline.py", line 140, in main
    outputs = model(batch['input_ids'], batch['attention_mask'], batch['decoder_input_ids'])
  File "copycat/env/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1130, in _call_impl
    return forward_call(*input, **kwargs)
  File "copycat/env/lib/python3.8/site-packages/colossalai/nn/parallel/data_parallel.py", line 263, in forward
    outputs = self.module(*args, **kwargs)
  File "copycat/env/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1130, in _call_impl
    return forward_call(*input, **kwargs)
  File "colossalai_baseline.py", line 68, in forward
    return self.model(input_ids=input_ids, attention_mask=attention_mask, decoder_input_ids=decoder_input_ids)[0]
  File "copycat/env/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1130, in _call_impl
    return forward_call(*input, **kwargs)
  File "copycat/env/lib/python3.8/site-packages/transformers/models/t5/modeling_t5.py", line 1612, in forward
    encoder_outputs = self.encoder(
  File "copycat/env/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1130, in _call_impl
    return forward_call(*input, **kwargs)
  File "copycat/env/lib/python3.8/site-packages/transformers/models/t5/modeling_t5.py", line 1028, in forward
    layer_outputs = checkpoint(
  File "copycat/env/lib/python3.8/site-packages/torch/utils/checkpoint.py", line 235, in checkpoint
    return CheckpointFunction.apply(function, preserve, *args)
  File "copycat/env/lib/python3.8/site-packages/torch/utils/checkpoint.py", line 96, in forward
    outputs = run_function(*args)
  File "copycat/env/lib/python3.8/site-packages/transformers/models/t5/modeling_t5.py", line 1024, in custom_forward
    return tuple(module(*inputs, use_cache, output_attentions))
  File "copycat/env/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1130, in _call_impl
    return forward_call(*input, **kwargs)
  File "copycat/env/lib/python3.8/site-packages/transformers/models/t5/modeling_t5.py", line 726, in forward
    hidden_states = self.layer[-1](hidden_states)
  File "copycat/env/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1130, in _call_impl
    return forward_call(*input, **kwargs)
  File "copycat/env/lib/python3.8/site-packages/transformers/models/t5/modeling_t5.py", line 329, in forward
    forwarded_states = self.DenseReluDense(forwarded_states)
  File "copycat/env/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1130, in _call_impl
    return forward_call(*input, **kwargs)
  File "copycat/env/lib/python3.8/site-packages/transformers/models/t5/modeling_t5.py", line 308, in forward
    hidden_gelu = self.act(self.wi_0(hidden_states))
  File "copycat/env/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1130, in _call_impl
    return forward_call(*input, **kwargs)
  File "copycat/env/lib/python3.8/site-packages/torch/nn/modules/linear.py", line 114, in forward
    return F.linear(input, self.weight, self.bias)
  File "copycat/env/lib/python3.8/site-packages/colossalai/tensor/colo_tensor.py", line 183, in __torch_function__
    ret = func(*args, **kwargs)
  File "copycat/env/lib/python3.8/site-packages/colossalai/nn/_ops/linear.py", line 171, in colo_linear
    return colo_linear_imp(input_tensor, weight, bias)
  File "copycat/env/lib/python3.8/site-packages/colossalai/nn/_ops/linear.py", line 76, in colo_linear_imp
    ret_tensor = ColoTensor.from_torch_tensor(F.linear(input_tensor, weight, bias), spec=ColoTensorSpec(pg))
RuntimeError: setStorage: sizes [1024, 2816], strides [1, 1024], storage offset 42737152, and itemsize 2 requiring a storage size of 91241472 are out of bounds for storage of size 0
ERROR:torch.distributed.elastic.multiprocessing.api:failed (exitcode: 1) local_rank: 0 (pid: 2335161) of binary: copycat/env/bin/python
```
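The figures in the final error line are internally consistent. The sketch below recomputes them, assuming PyTorch's usual bound check for a strided view: the view needs `itemsize * (storage_offset + Σ(size_i - 1) * stride_i + 1)` bytes. It reproduces the 91241472 in the message, which suggests the view metadata itself is fine and the problem is that the backing storage has size 0 (its memory appears to have been released, or never materialized, by the time `F.linear` runs).

```python
# Recompute the byte count reported in the setStorage error message.
# Assumption: the required size of a non-empty strided view is
#   itemsize * (storage_offset + sum((size_i - 1) * stride_i) + 1)
# which is how PyTorch's storage bound check is usually described.

def required_storage_bytes(sizes, strides, storage_offset, itemsize):
    """Bytes of storage a strided view needs so its last element is in bounds."""
    if any(n == 0 for n in sizes):
        # An empty view touches no elements, so it needs no storage.
        return 0
    last_index = storage_offset + sum((n - 1) * st for n, st in zip(sizes, strides))
    return (last_index + 1) * itemsize


# Values copied from the error message.
needed = required_storage_bytes(
    sizes=[1024, 2816], strides=[1, 1024], storage_offset=42737152, itemsize=2
)
print(needed)      # 91241472
print(needed > 0)  # True: any nonzero requirement overflows a storage of size 0
```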

I have tried the GPT example, which runs without any issues, so I think the problem is related to mT5's different architecture.
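For context, the failing call is the first projection of mT5's feed-forward block (`self.wi_0` in the traceback). The NumPy sketch below assumes mt5-large's `d_model=1024` and `d_ff=2816` with half-precision weights, and mimics a gated feed-forward layer only to show shapes; it is not the Transformers implementation. Under those assumptions, `sizes [1024, 2816], strides [1, 1024]` is exactly the transposed view of a `[2816, 1024]` linear weight, which is what `F.linear` multiplies by, so the empty storage is most likely that of the `wi_0` weight rather than of the activations.

```python
import numpy as np

# Assumed mt5-large dimensions; the log shows hidden states of shape [2, 130, 1024].
d_model, d_ff = 1024, 2816
batch, seq_len = 2, 130

rng = np.random.default_rng(0)
# nn.Linear stores its weight as [out_features, in_features]; float16 matches
# the itemsize of 2 in the error message. The 0.02 scale is arbitrary.
wi_0 = (rng.standard_normal((d_ff, d_model)) * 0.02).astype(np.float16)
wi_1 = (rng.standard_normal((d_ff, d_model)) * 0.02).astype(np.float16)
wo = (rng.standard_normal((d_model, d_ff)) * 0.02).astype(np.float16)

# F.linear(x, W) computes x @ W.T, and W.T is a strided view, not a copy.
w_t = wi_0.T
elem_strides = tuple(s // w_t.itemsize for s in w_t.strides)  # bytes -> elements
print(w_t.shape, elem_strides)  # (1024, 2816) (1, 1024)


def gelu_tanh(x):
    # tanh approximation of GELU (an assumption about the activation mT5 uses).
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


def gated_gelu_ffn(h):
    # Gated feed-forward: act(h @ wi_0.T) * (h @ wi_1.T), projected back by wo.
    h32 = h.astype(np.float32)
    hidden_gelu = gelu_tanh(h32 @ wi_0.T.astype(np.float32))  # [..., d_ff]
    hidden_linear = h32 @ wi_1.T.astype(np.float32)           # [..., d_ff]
    return (hidden_gelu * hidden_linear) @ wo.T.astype(np.float32)  # [..., d_model]


hidden_states = rng.standard_normal((batch, seq_len, d_model)).astype(np.float32)
out = gated_gelu_ffn(hidden_states)
print(out.shape)  # (2, 130, 1024)
```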

You can check my full code here: https://github.com/theblackcat102/copycat/blob/master/colossalai_baseline.py

Environment

CUDA 11.3, Python 3.8.15, Colossal-AI package version: 0.1.12+torch1.12cu11.3

Could you please update colossalai_baseline.py to use synthetic (randomly generated) data? I cannot download the dataset.
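A minimal sketch of such synthetic data, reusing the batch shapes printed in the log (`[2, 130]` for the encoder inputs, `[2, 319]` for the decoder inputs). The helper name `make_synthetic_batch`, the vocabulary size (250112, as in the public mT5 checkpoints) and the pad id 0 are assumptions, not part of the original script.

```python
import numpy as np


# Hypothetical helper: name, vocab_size and pad_id are assumptions.
def make_synthetic_batch(batch_size=2, src_len=130, tgt_len=319,
                         vocab_size=250112, pad_id=0, seed=0):
    rng = np.random.default_rng(seed)
    # Draw ids from [1, vocab_size) so no sampled token equals the pad id.
    input_ids = rng.integers(1, vocab_size, size=(batch_size, src_len), dtype=np.int64)
    decoder_input_ids = rng.integers(1, vocab_size, size=(batch_size, tgt_len), dtype=np.int64)
    # The synthetic batch has no padding, so every encoder position is attended to.
    attention_mask = np.ones((batch_size, src_len), dtype=np.int64)
    return {
        "input_ids": input_ids,
        "attention_mask": attention_mask,
        "decoder_input_ids": decoder_input_ids,
    }


batch = make_synthetic_batch()
for name, arr in batch.items():
    print(name, arr.shape)
```

The keys match the ones the script indexes at line 140 (`batch['input_ids']` and so on); the arrays would still need converting with `torch.from_numpy` and moving to the GPU before being passed to the model.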

@feifeibear My bad. Here's some test code for debugging: just `git pull` my repo and you should see the update.

```shell
colossalai run --nproc_per_node 1 colossalai_test.py
```

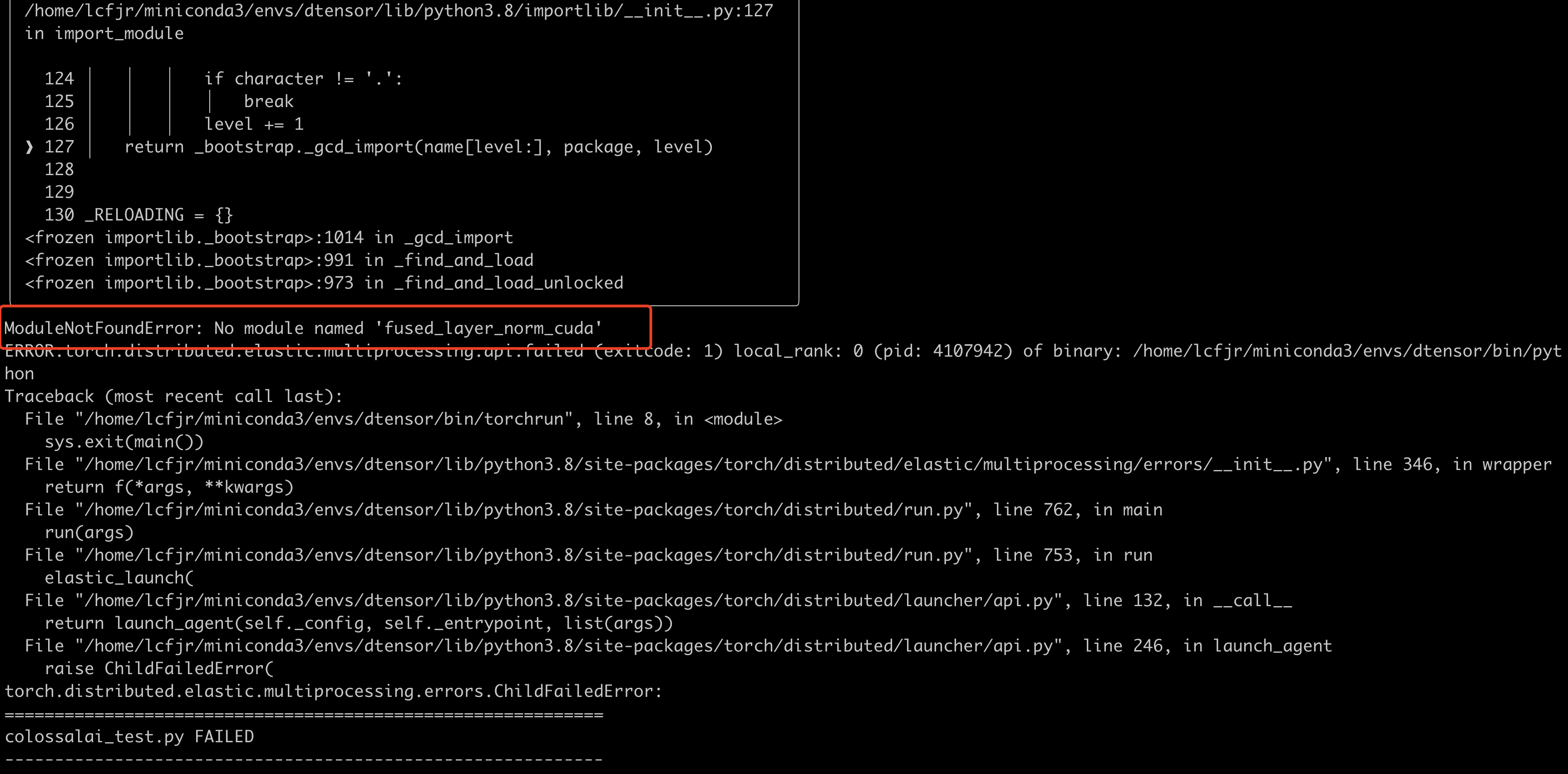

Sorry, I cannot run the code. I think mT5 uses some apex kernels, which are not supported by CAI right now.

I see, that's disappointing. I will test other encoder-decoder architectures and see which ones work.

Thanks for looking into this issue!

We have made many updates since then. This issue was closed due to inactivity. Thanks.