[BUG]: Bug in Stable-Diffusion Example, "Killed" when saving checkpoint

🐛 Describe the bug

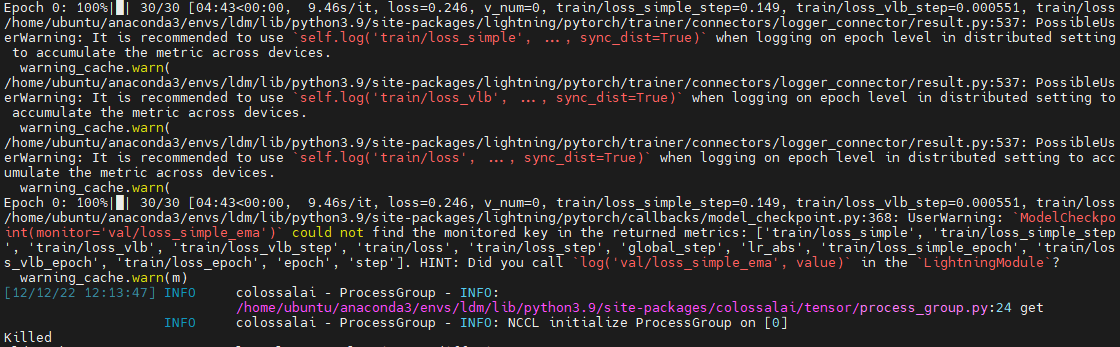

I was trying to run the stable-diffusuin example, but found the program would be Killed at the saving process, Without any Error Message. Also found the config file "train_ddp.yaml" is OK. So maybe some bug in save_checkpoint, would you please fix this bug?

Environment

I'm using the Docker Image you provide "hpcaitech/colossalai:0.1.10-torch1.11-cu11.3"

Thanks for your issue, can you offer more detail such as the image?

I also get this bug when trying to save this model. This was in a conda environment using the provided environment.yaml and on Ubuntu 20.04. I ran multiple tests to try and fix it and noted that, even with batch size of one the training went from virtual memory usage 22 to 46 to 68 and then the process was killed. I noticed that using save_weights_only: True enabled saving to work but with the weights only I am unable to do sampling, nor could I continue training. I also tried not using modelcheckpoint every_n_train_steps and let the training finish it's entire epoch and that was still killed.

Hello, I also met the same problem. How did you solve it

we have update the stable diffusion to v2 (https://github.com/hpcaitech/ColossalAI/pull/2120), Now there is no problem of kill

@Fazziekey But stable diffusion v2 has no pre-trained weight for training, so is not useful. How to train with stable diffusion v1? I also met with this problem, the first epoch is normal , the second time to save checkpoints it is killed. It seems to be a memory leak.

We have updated a lot. This issue was closed due to inactivity. Thanks. https://github.com/hpcaitech/ColossalAI/tree/main/examples/images/diffusion