ColossalAI

ColossalAI copied to clipboard

ColossalAI copied to clipboard

[BUG]: examples/images/diffusion ran failed

🐛 Describe the bug

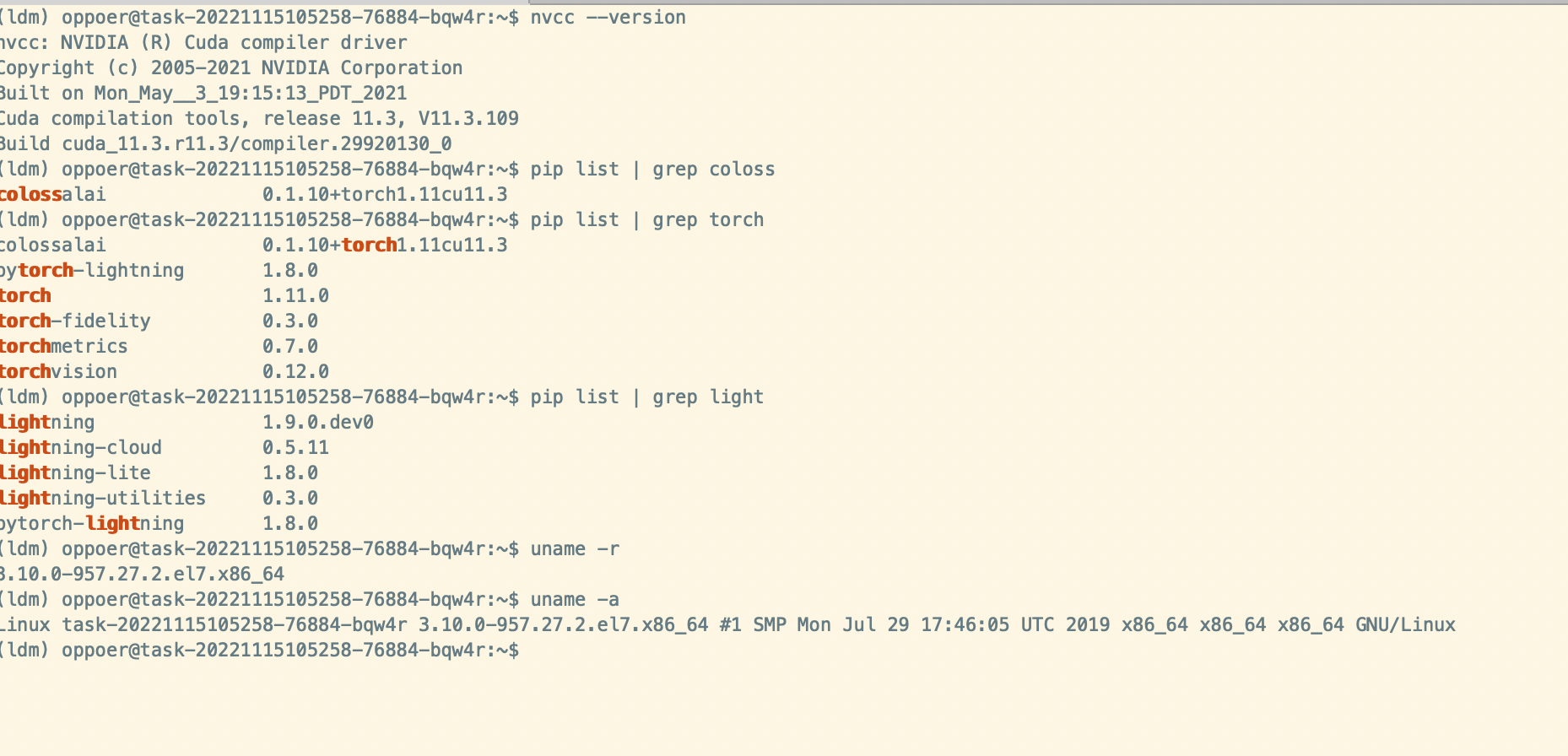

I ran the example of diffusion according to https://github.com/hpcaitech/ColossalAI/tree/main/examples/images/diffusion: steps: conda env create -f environment.yaml conda activate ldm pip install colossalai==0.1.10+torch1.11cu11.3 -f https://release.colossalai.org git clone https://github.com/Lightning-AI/lightning && cd lightning && git reset --hard b04a7aa pip install -r requirements.txt && pip install .

dataset: laion-400m

run: bash train.sh

failed info:

**/opt/conda/envs/ldm/lib/python3.9/site-packages/pytorch_lightning/strategies/ddp.py:438: UserWarning: Error handling mechanism for deadlock detection is uninitialized. Skipping check.

rank_zero_warn("Error handling mechanism for deadlock detection is uninitialized. Skipping check.")

Traceback (most recent call last):

File "/home/code/ColossalAI/examples/images/diffusion/main.py", line 811, in

Environment

Hi @GxjGit Thank you for your feedback. We will try to reproduce your issue and fix it soon.

can you upload your train.yaml

I have not modified the yaml setting.

In addition, As I can't find the training model, I have annotated the code of pretrained model loading of UNetModel and AutoencoderKL. I have no idea if it is concerned.

model:

base_learning_rate: 1.0e-04

target: ldm.models.diffusion.ddpm.LatentDiffusion

params:

linear_start: 0.00085

linear_end: 0.0120

num_timesteps_cond: 1

log_every_t: 200

timesteps: 1000

first_stage_key: image

cond_stage_key: caption

image_size: 64

channels: 4

cond_stage_trainable: false # Note: different from the one we trained before

conditioning_key: crossattn

monitor: val/loss_simple_ema

scale_factor: 0.18215

use_ema: False

scheduler_config: # 10000 warmup steps

target: ldm.lr_scheduler.LambdaLinearScheduler

params:

warm_up_steps: [ 1 ] # NOTE for resuming. use 10000 if starting from scratch

cycle_lengths: [ 10000000000000 ] # incredibly large number to prevent corner cases

f_start: [ 1.e-6 ]

f_max: [ 1.e-4 ]

f_min: [ 1.e-10 ]

unet_config:

target: ldm.modules.diffusionmodules.openaimodel.UNetModel

params:

image_size: 32 # unused

from_pretrained: '/data/scratch/diffuser/stable-diffusion-v1-4/unet/diffusion_pytorch_model.bin'

in_channels: 4

out_channels: 4

model_channels: 320

attention_resolutions: [ 4, 2, 1 ]

num_res_blocks: 2

channel_mult: [ 1, 2, 4, 4 ]

num_heads: 8

use_spatial_transformer: True

transformer_depth: 1

context_dim: 768

use_checkpoint: False

legacy: False

first_stage_config:

target: ldm.models.autoencoder.AutoencoderKL

params:

embed_dim: 4

from_pretrained: '/data/scratch/diffuser/stable-diffusion-v1-4/vae/diffusion_pytorch_model.bin'

monitor: val/rec_loss

ddconfig:

double_z: true

z_channels: 4

resolution: 256

in_channels: 3

out_ch: 3

ch: 128

ch_mult:

- 1

- 2

- 4

- 4

num_res_blocks: 2

attn_resolutions: []

dropout: 0.0

lossconfig:

target: torch.nn.Identity

cond_stage_config:

target: ldm.modules.encoders.modules.FrozenCLIPEmbedder

params:

use_fp16: True

data:

target: main.DataModuleFromConfig

params:

batch_size: 64

wrap: False

train:

target: ldm.data.base.Txt2ImgIterableBaseDataset

params:

file_path: "/home/notebook/data/group/huangxin/laion-400m/e-commerce/e-commerce-0.tsv"

world_size: 1

rank: 0

lightning:

trainer:

accelerator: 'gpu'

devices: 4

log_gpu_memory: all

max_epochs: 2

precision: 16

auto_select_gpus: False

strategy:

target: pytorch_lightning.strategies.ColossalAIStrategy

params:

use_chunk: False

enable_distributed_storage: True,

placement_policy: cuda

force_outputs_fp32: False

log_every_n_steps: 2

logger: True

default_root_dir: "/tmp/diff_log/"

profiler: pytorch

logger_config:

wandb:

target: pytorch_lightning.loggers.WandbLogger

params:

name: nowname

save_dir: "/tmp/diff_log/"

offline: opt.debug

id: nowname

may be you should download the pretrained model from https://huggingface.co/CompVis/stable-diffusion-v1-4

conda env create -f environment.yaml it give ResolvePackageNotFound:

- cudatoolkit=11.3

- libgcc-ng[version='>=9.3.0']

- __glibc[version='>=2.17']

- cudatoolkit=11.3

- libstdcxx-ng[version='>=9.3.0']

can it run CPU?

may be you should download the pretrained model from https://huggingface.co/CompVis/stable-diffusion-v1-4

ok, I am downloading it and trying it again.

conda env create -f environment.yaml it give ResolvePackageNotFound:

- cudatoolkit=11.3

- libgcc-ng[version='>=9.3.0']

- __glibc[version='>=2.17']

- cudatoolkit=11.3

- libstdcxx-ng[version='>=9.3.0']

can it run CPU?

I ran it in GPU environment.

may be you should download the pretrained model from https://huggingface.co/CompVis/stable-diffusion-v1-4

@Fazziekey I have update the pretrained model and code, but encountered the same problem.

How do we comprehend the this problem:

RuntimeError: Sizes of tensors must match except in dimension 1. Expected size 8 but got size 7 for tensor number 1 in the list.

"What does “size 8” stand for ?

Hi,could you please share the detailed link of pretrained model? I only fould some *.ckpt models. @GxjGit

Hi,could you please share the detailed link of pretrained model? I only fould some *.ckpt models. @GxjGit Look at this, click the tab of "Files and versions"

And you can also download by cmd:

may be you should download the pretrained model from https://huggingface.co/CompVis/stable-diffusion-v1-4

@Fazziekey I have update the pretrained model and code, but encountered the same problem.

How do we comprehend the this problem:

RuntimeError: Sizes of tensors must match except in dimension 1. Expected size 8 but got size 7 for tensor number 1 in the list.

"What does “size 8” stand for ?

@Fazziekey I have soveled my problem. The reason is the incorrect images size. As the size of images in my dataset is different, it repoted "RuntimeError: stack expects each tensor to be equal size, but got [140, 140, 3] at entry 0 and [300, 500, 3] at entry 1", so I resize it in 224 x 224, it report the error as above. when I change to 256 x 256 as the yaml setting, it run successfully.

But I can not find resize operation in the origin code, Are the images in your dataset in the fixed size of 256*256?

I suggest that a description of the resolution requirements for input dataset images can be added. Anyway, Thanks a lot.

Thanks! @GxjGit I download and meet some problems:1.Some weights of the model checkpoint at openai/clip-vit-large-patch14 were not used when initializing CLIPTextModelZero, 2.like followings:

may be you should download the pretrained model from https://huggingface.co/CompVis/stable-diffusion-v1-4

@Fazziekey I have update the pretrained model and code, but encountered the same problem. How do we comprehend the this problem: RuntimeError: Sizes of tensors must match except in dimension 1. Expected size 8 but got size 7 for tensor number 1 in the list. "What does “size 8” stand for ?

@Fazziekey I have soveled my problem. The reason is the incorrect images size. As the size of images in my dataset is different, it repoted "RuntimeError: stack expects each tensor to be equal size, but got [140, 140, 3] at entry 0 and [300, 500, 3] at entry 1", so I resize it in 224 x 224, it report the error as above. when I change to 256 x 256 as the yaml setting, it run successfully.

But I can not find resize operation in the origin code, Are the images in your dataset in the fixed size of 256*256?

I suggest that a description of the resolution requirements for input dataset images can be added. Anyway, Thanks a lot.

yes,the input image size must be 256*256 for Latency Diffusion