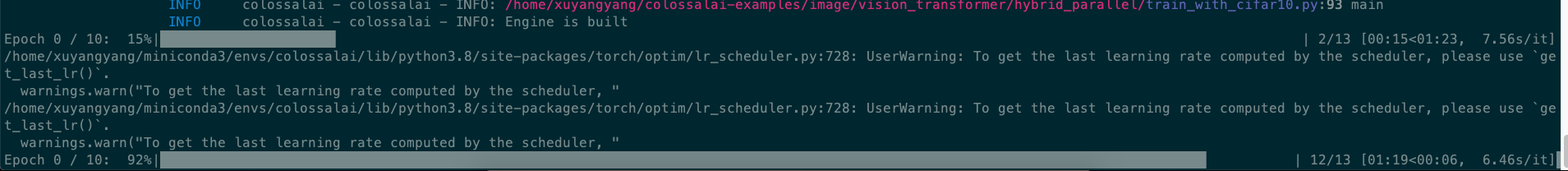

Training process hangs when training ViT with CIFAR-10 data

I use two nodes with 2 processes per node for training. However, the training process hangs when it reaches iteration 12/13, as the picture shows.

Config file: `vit_pipeline.py`

Training commands:

node 1:

TORCH_DISTRIBUTED_DEBUG=INFO NCCL_DEBUG_SUBSYS=ALL NCCL_DEBUG=INFO NCCL_SOCKET_IFNAME=bond0 cuda_visible_devices=4,5 torchrun --nproc_per_node=2 --nnodes=2 --node_rank=0 --rdzv_backend=c10d --rdzv_endpoint=172.27.231.79:29500 --rdzv_id=colossalai-default-job /home/xuyangyang/colossalai-examples/image/vision_transformer/hybrid_parallel/train_with_cifar10.py --config /home/xuyangyang/colossalai-examples/image/vision_transformer/hybrid_parallel/configs/vit_pipeline.py

node 2:

TORCH_DISTRIBUTED_DEBUG=INFO NCCL_DEBUG_SUBSYS=ALL NCCL_DEBUG=INFO NCCL_SOCKET_IFNAME=bond0 cuda_visible_devices=1,2 torchrun --nproc_per_node=2 --nnodes=2 --node_rank=1 --rdzv_backend=c10d --rdzv_endpoint=172.27.231.79:29500 --rdzv_id=colossalai-default-job /home/xuyangyang/colossalai-examples/image/vision_transformer/hybrid_parallel/train_with_cifar10.py --config /home/xuyangyang/colossalai-examples/image/vision_transformer/hybrid_parallel/configs/vit_pipeline.py

Hi, is there any progress on this issue? The hang happens on every run.

Hi @frankxyy, we will try to reproduce this bug. It works fine on our machine, so we are trying to figure out how to trigger it.

@FrankLeeeee The bug reproduces on my machine when the batch size is 16 and training reaches the last, incomplete batch.
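Assuming the hang comes from the pipeline schedule receiving a final batch smaller than the configured batch size (the thread does not confirm the root cause), the arithmetic below sketches why only the last iteration would be affected. A common PyTorch workaround is to build the `DataLoader` (and `DistributedSampler`, if one is used) with `drop_last=True`, so the incomplete batch is never produced. The helpers `batch_sizes` and `splits_evenly`, the per-rank sample count of 202, and the micro-batch count of 4 are hypothetical and chosen only for illustration; they are not taken from `vit_pipeline.py`.

```python
from typing import List


def batch_sizes(num_samples: int, batch_size: int, drop_last: bool) -> List[int]:
    """Per-iteration batch sizes a DataLoader-style loop would yield (hypothetical helper)."""
    full, rem = divmod(num_samples, batch_size)
    sizes = [batch_size] * full
    if rem and not drop_last:
        sizes.append(rem)  # the trailing, incomplete batch
    return sizes


def splits_evenly(batch: int, num_microbatches: int) -> bool:
    """Assumes the pipeline schedule splits every batch into equal micro-batches."""
    return batch % num_microbatches == 0


if __name__ == "__main__":
    NUM_SAMPLES = 202       # hypothetical per-rank sample count
    BATCH_SIZE = 16         # batch size from the report
    NUM_MICRO_BATCHES = 4   # hypothetical pipeline micro-batch count

    for drop_last in (False, True):
        sizes = batch_sizes(NUM_SAMPLES, BATCH_SIZE, drop_last)
        # Indices of iterations whose batch cannot be split evenly.
        uneven = [i for i, b in enumerate(sizes) if not splits_evenly(b, NUM_MICRO_BATCHES)]
        print(f"drop_last={drop_last}: {len(sizes)} iterations, uneven batches at {uneven}")
```

With these numbers, `drop_last=False` yields 13 iterations whose last batch (10 samples) cannot be split into 4 equal micro-batches, while `drop_last=True` yields 12 full iterations and no uneven batch.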

We have made many updates since then. This issue was closed due to inactivity. Thanks.