torchrun command not found error when launching on multiple nodes

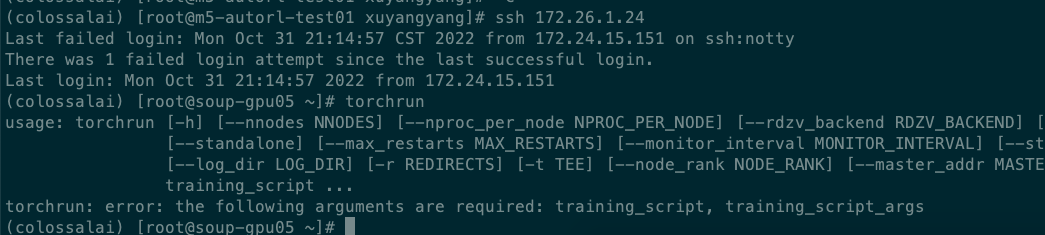

The error is shown above. However, if I SSH into the worker node myself and run torchrun, the command exists. I have added `conda activate` to the .bashrc file.

Could you please check whether torchrun is installed on host 172.27.231.79?

The fabric library should have propagated the $PATH variable to the other machine.
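One way to see what a non-interactive SSH session actually resolves is to ask the remote shell directly. The following is only a minimal sketch, not how the launcher itself works: the helper names `build_remote_check` and `check_remote_tool` are hypothetical, and it assumes the `ssh` client is installed and key-based login to the host is already set up. A non-interactive session may not pick up everything that an interactive login gets from .bashrc, so the printed PATH can differ from what you see after logging in by hand.

```python
import shlex
import subprocess
from typing import List


def build_remote_check(host: str, tool: str = "torchrun") -> List[str]:
    """Build an ssh command that reports where `tool` resolves on `host`.

    Hypothetical helper for illustration only.
    """
    # `command -v` prints the resolved path, or nothing if the tool is missing.
    remote = f"command -v {shlex.quote(tool)} || echo NOT_FOUND; echo PATH=$PATH"
    # BatchMode=yes makes ssh fail instead of prompting for a password,
    # which also reveals a missing public key setup.
    return ["ssh", "-o", "BatchMode=yes", host, remote]


def check_remote_tool(host: str, tool: str = "torchrun", timeout: int = 15) -> str:
    """Run the check and return the remote output (tool path and PATH)."""
    result = subprocess.run(
        build_remote_check(host, tool),
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    return result.stdout.strip()


if __name__ == "__main__":
    # Host taken from this thread; replace with your own node addresses.
    print(check_remote_tool("172.27.231.79"))
```

If the output shows `NOT_FOUND`, or a torchrun outside the expected conda environment, the remote shell is probably not activating the environment for non-interactive sessions.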

@FrankLeeeee @Cypher30 I fixed this error by setting up a public key so that 172.27.231.79 can SSH into itself.

Now another error occurred:

(colossalai) [root@m5-autorl-test01 colossalai]# cuda_visible_devices=2,4 colossalai run --nproc_per_node 2 --host 172.27.231.79,172.26.1.24 --master_addr 172.27.231.79 run_resnet_cifar10_with_engine.py

_meta_registrations seems to be incompatible with PyTorch 1.11.0+cu113.

[11/02/22 15:23:07] INFO colossalai - paramiko.transport - INFO: Connected (version 2.0, client OpenSSH_7.4)

INFO colossalai - paramiko.transport - INFO: Authentication (publickey) successful!

WARNING:torch.distributed.run:

*****************************************

Setting OMP_NUM_THREADS environment variable for each process to be 1 in default, to avoid your system being overloaded, please further tune the variable for optimal performance in your application as needed.

*****************************************

[W socket.cpp:401] [c10d] The server socket has failed to bind to [::]:29500 (errno: 98 - Address already in use).

[W socket.cpp:401] [c10d] The server socket has failed to bind to 0.0.0.0:29500 (errno: 98 - Address already in use).

[E socket.cpp:435] [c10d] The server socket has failed to listen on any local network address.

ERROR:torch.distributed.elastic.multiprocessing.errors.error_handler:{

"message": {

"message": "RendezvousClosedError: ",

"extraInfo": {

"py_callstack": "Traceback (most recent call last):\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/multiprocessing/errors/__init__.py\", line 345, in wrapper\n return f(*args, **kwargs)\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/run.py\", line 724, in main\n run(args)\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/run.py\", line 715, in run\n elastic_launch(\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/launcher/api.py\", line 131, in __call__\n return launch_agent(self._config, self._entrypoint, list(args))\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/launcher/api.py\", line 236, in launch_agent\n result = agent.run()\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/metrics/api.py\", line 125, in wrapper\n result = f(*args, **kwargs)\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/agent/server/api.py\", line 709, in run\n result = self._invoke_run(role)\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/agent/server/api.py\", line 844, in _invoke_run\n self._initialize_workers(self._worker_group)\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/metrics/api.py\", line 125, in wrapper\n result = f(*args, **kwargs)\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/agent/server/api.py\", line 678, in _initialize_workers\n self._rendezvous(worker_group)\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/metrics/api.py\", line 125, in wrapper\n result = f(*args, **kwargs)\n File 
\"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/agent/server/api.py\", line 538, in _rendezvous\n store, group_rank, group_world_size = spec.rdzv_handler.next_rendezvous()\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/rendezvous/dynamic_rendezvous.py\", line 1024, in next_rendezvous\n self._op_executor.run(join_op, deadline)\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/rendezvous/dynamic_rendezvous.py\", line 634, in run\n raise RendezvousClosedError()\ntorch.distributed.elastic.rendezvous.api.RendezvousClosedError\n",

"timestamp": "1667373788"

}

}

}

Traceback (most recent call last):

File "/root/miniconda3/envs/colossalai/bin/torchrun", line 8, in <module>

sys.exit(main())

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/multiprocessing/errors/__init__.py", line 345, in wrapper

return f(*args, **kwargs)

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/run.py", line 724, in main

run(args)

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/run.py", line 715, in run

elastic_launch(

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/launcher/api.py", line 131, in __call__

return launch_agent(self._config, self._entrypoint, list(args))

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/launcher/api.py", line 236, in launch_agent

result = agent.run()

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/metrics/api.py", line 125, in wrapper

result = f(*args, **kwargs)

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/agent/server/api.py", line 709, in run

result = self._invoke_run(role)

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/agent/server/api.py", line 844, in _invoke_run

self._initialize_workers(self._worker_group)

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/metrics/api.py", line 125, in wrapper

result = f(*args, **kwargs)

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/agent/server/api.py", line 678, in _initialize_workers

self._rendezvous(worker_group)

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/metrics/api.py", line 125, in wrapper

result = f(*args, **kwargs)

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/agent/server/api.py", line 538, in _rendezvous

store, group_rank, group_world_size = spec.rdzv_handler.next_rendezvous()

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/rendezvous/dynamic_rendezvous.py", line 1024, in next_rendezvous

self._op_executor.run(join_op, deadline)

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/rendezvous/dynamic_rendezvous.py", line 634, in run

raise RendezvousClosedError()

torch.distributed.elastic.rendezvous.api.RendezvousClosedError

Error: failed to run torchrun --nproc_per_node=2 --nnodes=2 --node_rank=0 --rdzv_backend=c10d --rdzv_endpoint=172.27.231.79:29500 --rdzv_id=colossalai-default-job run_resnet_cifar10_with_engine.py on 172.27.231.79

WARNING:torch.distributed.run:

*****************************************

Setting OMP_NUM_THREADS environment variable for each process to be 1 in default, to avoid your system being overloaded, please further tune the variable for optimal performance in your application as needed.

*****************************************

ERROR:torch.distributed.elastic.multiprocessing.errors.error_handler:{

"message": {

"message": "RendezvousClosedError: ",

"extraInfo": {

"py_callstack": "Traceback (most recent call last):\n File \"/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/multiprocessing/errors/__init__.py\", line 345, in wrapper\n return f(*args, **kwargs)\n File \"/usr/local/lib64/python3.6/site-packages/torch/distributed/run.py\", line 719, in main\n run(args)\n File \"/usr/local/lib64/python3.6/site-packages/torch/distributed/run.py\", line 713, in run\n )(*cmd_args)\n File \"/usr/local/lib64/python3.6/site-packages/torch/distributed/launcher/api.py\", line 131, in __call__\n return launch_agent(self._config, self._entrypoint, list(args))\n File \"/usr/local/lib64/python3.6/site-packages/torch/distributed/launcher/api.py\", line 252, in launch_agent\n result = agent.run()\n File \"/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/metrics/api.py\", line 125, in wrapper\n result = f(*args, **kwargs)\n File \"/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/agent/server/api.py\", line 709, in run\n result = self._invoke_run(role)\n File \"/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/agent/server/api.py\", line 837, in _invoke_run\n self._initialize_workers(self._worker_group)\n File \"/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/metrics/api.py\", line 125, in wrapper\n result = f(*args, **kwargs)\n File \"/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/agent/server/api.py\", line 678, in _initialize_workers\n self._rendezvous(worker_group)\n File \"/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/metrics/api.py\", line 125, in wrapper\n result = f(*args, **kwargs)\n File \"/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/agent/server/api.py\", line 538, in _rendezvous\n store, group_rank, group_world_size = spec.rdzv_handler.next_rendezvous()\n File \"/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/rendezvous/dynamic_rendezvous.py\", line 1024, in 
next_rendezvous\n self._op_executor.run(join_op, deadline)\n File \"/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/rendezvous/dynamic_rendezvous.py\", line 634, in run\n raise RendezvousClosedError()\ntorch.distributed.elastic.rendezvous.api.RendezvousClosedError\n",

"timestamp": "1667374037"

}

}

}

Traceback (most recent call last):

File "/usr/local/bin/torchrun", line 11, in <module>

sys.exit(main())

File "/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/multiprocessing/errors/__init__.py", line 345, in wrapper

return f(*args, **kwargs)

File "/usr/local/lib64/python3.6/site-packages/torch/distributed/run.py", line 719, in main

run(args)

File "/usr/local/lib64/python3.6/site-packages/torch/distributed/run.py", line 713, in run

)(*cmd_args)

File "/usr/local/lib64/python3.6/site-packages/torch/distributed/launcher/api.py", line 131, in __call__

return launch_agent(self._config, self._entrypoint, list(args))

File "/usr/local/lib64/python3.6/site-packages/torch/distributed/launcher/api.py", line 252, in launch_agent

result = agent.run()

File "/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/metrics/api.py", line 125, in wrapper

result = f(*args, **kwargs)

File "/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/agent/server/api.py", line 709, in run

result = self._invoke_run(role)

File "/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/agent/server/api.py", line 837, in _invoke_run

self._initialize_workers(self._worker_group)

File "/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/metrics/api.py", line 125, in wrapper

result = f(*args, **kwargs)

File "/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/agent/server/api.py", line 678, in _initialize_workers

self._rendezvous(worker_group)

File "/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/metrics/api.py", line 125, in wrapper

result = f(*args, **kwargs)

File "/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/agent/server/api.py", line 538, in _rendezvous

store, group_rank, group_world_size = spec.rdzv_handler.next_rendezvous()

File "/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/rendezvous/dynamic_rendezvous.py", line 1024, in next_rendezvous

self._op_executor.run(join_op, deadline)

File "/usr/local/lib64/python3.6/site-packages/torch/distributed/elastic/rendezvous/dynamic_rendezvous.py", line 634, in run

raise RendezvousClosedError()

torch.distributed.elastic.rendezvous.api.RendezvousClosedError

Error: failed to run torchrun --nproc_per_node=2 --nnodes=2 --node_rank=1 --rdzv_backend=c10d --rdzv_endpoint=172.27.231.79:29500 --rdzv_id=colossalai-default-job run_resnet_cifar10_with_engine.py on 172.26.1.24

(colossalai) [root@m5-autorl-test01 colossalai]# WARNING:torch.distributed.elastic.agent.server.api:Received 2 death signal, shutting down workers

ERROR:torch.distributed.elastic.multiprocessing.errors.error_handler:{

"message": {

"message": "SignalException: Process 59481 got signal: 2",

"extraInfo": {

"py_callstack": "Traceback (most recent call last):\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/agent/server/api.py\", line 906, in _exit_barrier\n store_util.barrier(\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/utils/store.py\", line 67, in barrier\n synchronize(store, data, rank, world_size, key_prefix, barrier_timeout)\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/utils/store.py\", line 53, in synchronize\n agent_data = get_all(store, key_prefix, world_size)\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/utils/store.py\", line 31, in get_all\n data = store.get(f\"{prefix}{idx}\")\nRuntimeError: Socket Timeout\n\nDuring handling of the above exception, another exception occurred:\n\nTraceback (most recent call last):\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/multiprocessing/errors/__init__.py\", line 345, in wrapper\n return f(*args, **kwargs)\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/run.py\", line 724, in main\n run(args)\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/run.py\", line 715, in run\n elastic_launch(\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/launcher/api.py\", line 131, in __call__\n return launch_agent(self._config, self._entrypoint, list(args))\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/launcher/api.py\", line 236, in launch_agent\n result = agent.run()\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/metrics/api.py\", line 125, in wrapper\n result = f(*args, **kwargs)\n File 
\"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/agent/server/api.py\", line 709, in run\n result = self._invoke_run(role)\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/agent/server/api.py\", line 877, in _invoke_run\n self._exit_barrier()\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/agent/server/api.py\", line 906, in _exit_barrier\n store_util.barrier(\n File \"/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/multiprocessing/api.py\", line 60, in _terminate_process_handler\n raise SignalException(f\"Process {os.getpid()} got signal: {sigval}\", sigval=sigval)\ntorch.distributed.elastic.multiprocessing.api.SignalException: Process 59481 got signal: 2\n",

"timestamp": "1667373923"

}

}

}

Traceback (most recent call last):

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/agent/server/api.py", line 906, in _exit_barrier

store_util.barrier(

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/utils/store.py", line 67, in barrier

synchronize(store, data, rank, world_size, key_prefix, barrier_timeout)

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/utils/store.py", line 53, in synchronize

agent_data = get_all(store, key_prefix, world_size)

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/utils/store.py", line 31, in get_all

data = store.get(f"{prefix}{idx}")

RuntimeError: Socket Timeout

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/root/miniconda3/envs/colossalai/bin/torchrun", line 8, in <module>

sys.exit(main())

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/multiprocessing/errors/__init__.py", line 345, in wrapper

return f(*args, **kwargs)

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/run.py", line 724, in main

run(args)

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/run.py", line 715, in run

elastic_launch(

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/launcher/api.py", line 131, in __call__

return launch_agent(self._config, self._entrypoint, list(args))

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/launcher/api.py", line 236, in launch_agent

result = agent.run()

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/metrics/api.py", line 125, in wrapper

result = f(*args, **kwargs)

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/agent/server/api.py", line 709, in run

result = self._invoke_run(role)

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/agent/server/api.py", line 877, in _invoke_run

self._exit_barrier()

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/agent/server/api.py", line 906, in _exit_barrier

store_util.barrier(

File "/root/miniconda3/envs/colossalai/lib/python3.8/site-packages/torch/distributed/elastic/multiprocessing/api.py", line 60, in _terminate_process_handler

raise SignalException(f"Process {os.getpid()} got signal: {sigval}", sigval=sigval)

torch.distributed.elastic.multiprocessing.api.SignalException: Process 59481 got signal: 2

This error was resolved after I replaced one of the machines. However, the root cause is unknown.
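The log does show one concrete symptom on the master node: the c10d server socket could not bind to port 29500 (errno 98, address already in use). Whether that was the actual root cause is not established here. As a hedged sketch, the check below tests whether a port can be bound before launching; `port_is_free` is a hypothetical helper, not part of torchrun or ColossalAI.

```python
import socket


def port_is_free(port: int = 29500, host: str = "0.0.0.0") -> bool:
    """Return True if a TCP socket can be bound to (host, port) right now.

    Hypothetical helper for illustration; the result can change between
    this check and the actual launch.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        # Allow rebinding over sockets left in TIME_WAIT, so that only a
        # live listener is reported as "in use".
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        try:
            sock.bind((host, port))
        except OSError:
            return False
        return True


if __name__ == "__main__":
    if port_is_free(29500):
        print("Port 29500 is free")
    else:
        print("Port 29500 is in use; a stale process may still hold it")
```

If the port is reported as in use on the master node, a leftover process from an earlier run may still be holding it, and stopping that process or choosing a different rendezvous port would be the next thing to try.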

We have made many updates since then. This issue was closed due to inactivity. Thanks.