ColossalAI

[BUG]: weight duplicate in ParallelFreqAwareEmbeddingBag

🐛 Describe the bug

I think ParallelFreqAwareEmbeddingBag._weight should be a ColoTensor; otherwise it is moved to the GPU whenever a model containing this embedding calls .cuda().

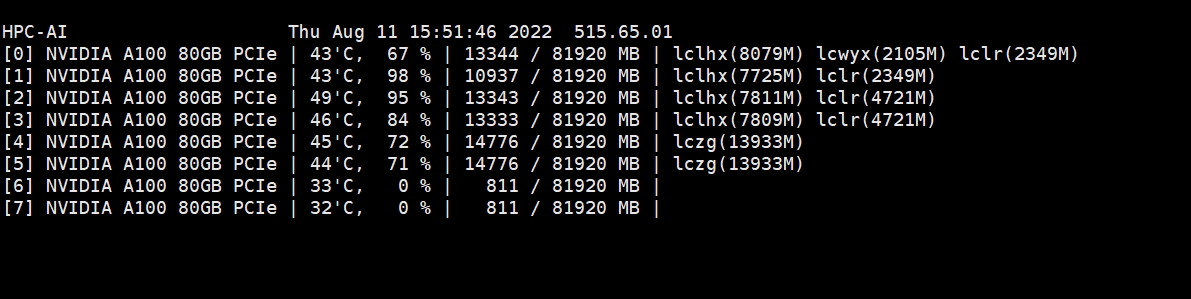
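For readers unfamiliar with the mechanism, here is a minimal sketch in plain PyTorch of how a whole-model call such as .cuda() picks up a tensor. `ToyEmbedding` and its attribute names are hypothetical stand-ins, not the ColossalAI classes, and the sketch assumes the weight is registered on the module as a parameter, which this issue does not state explicitly. It uses .double() instead of .cuda() so it runs without a GPU; both go through the same traversal of registered parameters and buffers.

```python
import torch
import torch.nn as nn


class ToyEmbedding(nn.Module):
    """Hypothetical stand-in for an embedding layer (not the ColossalAI class)."""

    def __init__(self, rows: int = 8, dim: int = 4):
        super().__init__()
        # Registered parameter: visited by .cuda(), .to(), .double(), ...
        self.registered_weight = nn.Parameter(torch.zeros(rows, dim))
        # Plain tensor attribute: not registered, so those calls skip it.
        self.plain_weight = torch.zeros(rows, dim)


# Wrap the layer in an outer model, as in "some model containing this embedding".
model = nn.Sequential(ToyEmbedding())

# .double() is used as a CPU-only proxy for .cuda(): both convert every
# registered parameter and buffer in the module tree.
model.double()

emb = model[0]
print(emb.registered_weight.dtype)  # torch.float64 (converted with the model)
print(emb.plain_weight.dtype)       # torch.float32 (left untouched)
```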

That's why I observed the memory leak:

Besides, as I've indicated, this weight is also duplicated in CachedParamMgr at this line.
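One way to check for this kind of duplication is to compare the memory addresses of the two tensors. The sketch below is generic PyTorch, not ColossalAI code; `full_weight`, `shared`, `copied`, and `same_start_address` are hypothetical names introduced only for illustration.

```python
import torch

# Hypothetical embedding table: 1000 rows of 16 float32 values.
full_weight = torch.zeros(1000, 16)

shared = full_weight.view(-1, 16)  # a view reuses the same memory
copied = full_weight.clone()       # a clone allocates a second copy


def same_start_address(a: torch.Tensor, b: torch.Tensor) -> bool:
    """Return True if both tensors start at the same memory address."""
    return a.data_ptr() == b.data_ptr()


def nbytes(t: torch.Tensor) -> int:
    """Size in bytes of the elements addressed by the tensor."""
    return t.element_size() * t.nelement()


print(same_start_address(full_weight, shared))  # True: no extra memory
print(same_start_address(full_weight, copied))  # False: weight is duplicated
print(nbytes(copied))                           # 64000 extra bytes held by the copy
```

If the manager held a view of the module's weight rather than a copy, the first check would pass and only one allocation would exist.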

In short, I believe the weight shared between ParallelFreqAwareEmbeddingBag and CachedParamMgr needs a different design, and I'm working on it.

Environment

No response