Fix Bayesian sensor to use negative observations

Breaking change

Bayesian sensor has been updated:

prob_given_falseis now a required configuration variable as there was no mathematical rationale for the previous default value.numeric_state,template, andstateentries with only oneto_stateconfigured will also now update the prior probability accordingly if the observation is false. Those who have used duplicate, mirroredstateconfigurations as a work-around will have their functionality preserved. However fornumeric_stateandtemplateentries this will cause duplication of the Bayesian updating.

Proposed change

This change will be breaking, the previous iterations of this integration only used an observation to update the prior if the observation evaluated to True and ignored it if the observation was False or None/Unavailable.

Many users may have tweaked their probabilities to get the desired behaviour, or made duplicate, mirrored, entries (one for each binary sensor state - the advocated work-around in this community post) in their bayesian config.yml in order to get the desired behaviour. This fix will ~likely change the behaviour of their bayesian sensors - by duplicating the effect of each observation.~ will preserve these configurations in commit 0b366b5ddca5ed2485232f25bc51d5dc224cee36 for 'state' entries but may break configurations for 'numeric_state' and 'template' entries, to fix simply remove the mirrored entry.

According to the documentation page this integration is only used by approx 600 active users.

Fixes #67478 The Bayesian sensor purports to use bayes' rule to combine sensor information in order to create a composite, probabilistic, sensor. However as currently configured it does so in a one-sided fashion that is mathematically and practically incorrect.

A worded example:

I want to know if my internet is likely to be working for the devices connected to my wifi. I have a variety of HA sensors that are correlated; can I ping google.com, can I ping 1.1.1.1, can I ping my local DNS servers, can I ping my local router. Any one of these devices can, in varying regularity, fail to respond to pings, however if a number at the same time fail to respond then the issue is probably my connection and not the devices/services.

As currently configured Bayesian 'observations' only affect the probability if the sensor returns the configured to_state state and ignores any other state. However, according to bayes' rule, if I CAN connect to 1.1.1.1, that tells me something about my connection and if I CANNOT that also tells me something - both scenarios should 'update my priors'. If the sensor in question fails or returns unavailable - then fine, ignore it.

Click to expand - Current functionality: One-sided bayesian updating

binary_sensor:

- platform: ping

name: Internet_Connection_Google

host: 8.8.8.8

count: 3

scan_interval: 30

- platform: ping

name: Internet_Connection_Cloudflared

host: 1.1.1.1

count: 3

scan_interval: 30

- platform: ping

name: Wireguard

host: 10.10.0.1

count: 3

scan_interval: 30

- platform: ping

name: router

host: 192.168.0.1

count: 3

scan_interval: 30

- platform: ping

name: pihole

host: 192.168.0.28

count: 3

scan_interval: 30

# bayesian sensore

- platform: "bayesian"

name: "internet_working" # maybe sensible to switch these in seperate errors - DNS, Switch, Gl-inet

prior: 0.99 # internet has high up time

probability_threshold: 0.95 # by defualt assume the internet is up

observations:

- platform: "state"

entity_id: "binary_sensor.internet_connection_cloudflared"

prob_given_true: 0.997 # cloudflare is rarely unavailible when the internet is up

prob_given_false: 0.01 # it is highly likely that cloudflare will be unreachable when the internet is down - unless it is a dns issue

to_state: "on"

- platform: "state"

entity_id: "binary_sensor.internet_connection_google"

prob_given_true: 0.994

prob_given_false: 0.01

to_state: "on"

- platform: "state"

entity_id: "binary_sensor.router"

prob_given_true: 0.995

prob_given_false: 0.4 # slight majority of time internet is down router will be as well

to_state: "on"

- platform: "template" # evaluates to true when both the greeni and wireguard are down

prob_given_true: 0.0001 # if the internet is working then it is very unlikely both are down

prob_given_false: 0.10 # if the internet is not working then there is 10% chance they are both unreachable

value_template: >-

{% if is_state('binary_sensor.pihole', 'off')

and is_state('binary_sensor.wireguard', 'off') %}

true

{% else %}

false

{% endif %}

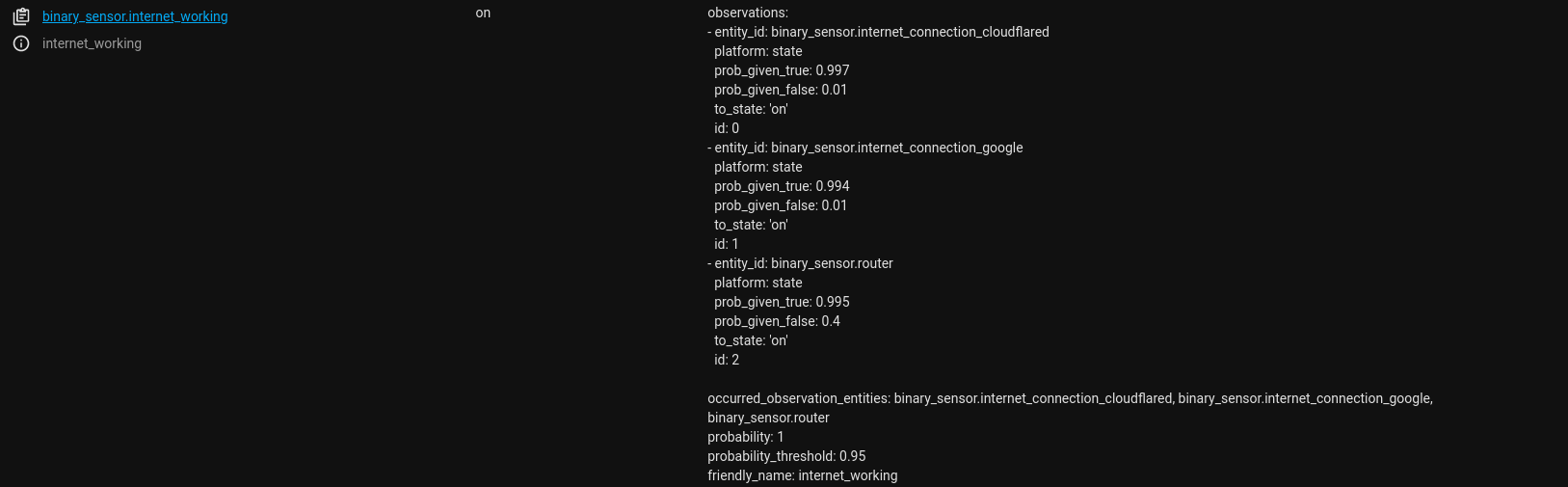

Creates this when pings are returned successfully:

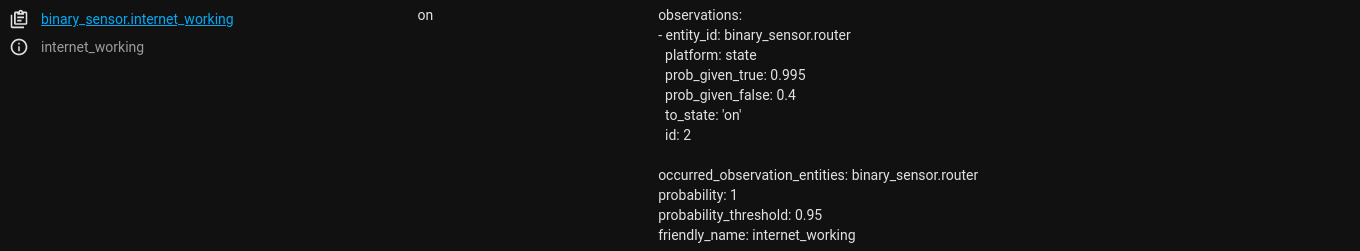

And this when I pull out my WAN cable - note how the observations 'disappear':

Click to expand - PR functionality: Two-sided bayesian updating

Same yml as above

binary_sensor:

- platform: ping

name: Internet_Connection_Google

host: 8.8.8.8

count: 3

scan_interval: 30

- platform: ping

name: Internet_Connection_Cloudflared

host: 1.1.1.1

count: 3

scan_interval: 30

- platform: ping

name: Wireguard

host: 10.10.0.1

count: 3

scan_interval: 30

- platform: ping

name: router

host: 192.168.0.1

count: 3

scan_interval: 30

- platform: ping

name: pihole

host: 192.168.0.28

count: 3

scan_interval: 30

# bayesian sensore

- platform: "bayesian"

name: "internet_working" # maybe sensible to switch these in seperate errors - DNS, Switch, Gl-inet

prior: 0.99 # internet has high up time

probability_threshold: 0.95 # by defualt assume the internet is up

observations:

- platform: "state"

entity_id: "binary_sensor.internet_connection_cloudflared"

prob_given_true: 0.997 # cloudflare is rarely unavailible when the internet is up

prob_given_false: 0.01 # it is highly likely that cloudflare will be unreachable when the internet is down - unless it is a dns issue

to_state: "on"

- platform: "state"

entity_id: "binary_sensor.internet_connection_google"

prob_given_true: 0.994

prob_given_false: 0.01

to_state: "on"

- platform: "state"

entity_id: "binary_sensor.router"

prob_given_true: 0.995

prob_given_false: 0.4 # slight majority of time internet is down router will be as well

to_state: "on"

- platform: "template" # evaluates to true when both the greeni and wireguard are down

prob_given_true: 0.0001 # if the internet is working then it is very unlikely both are down

prob_given_false: 0.10 # if the internet is not working then there is 10% chance they are both unreachable

value_template: >-

{% if is_state('binary_sensor.pihole', 'off')

and is_state('binary_sensor.wireguard', 'off') %}

true

{% else %}

false

{% endif %}

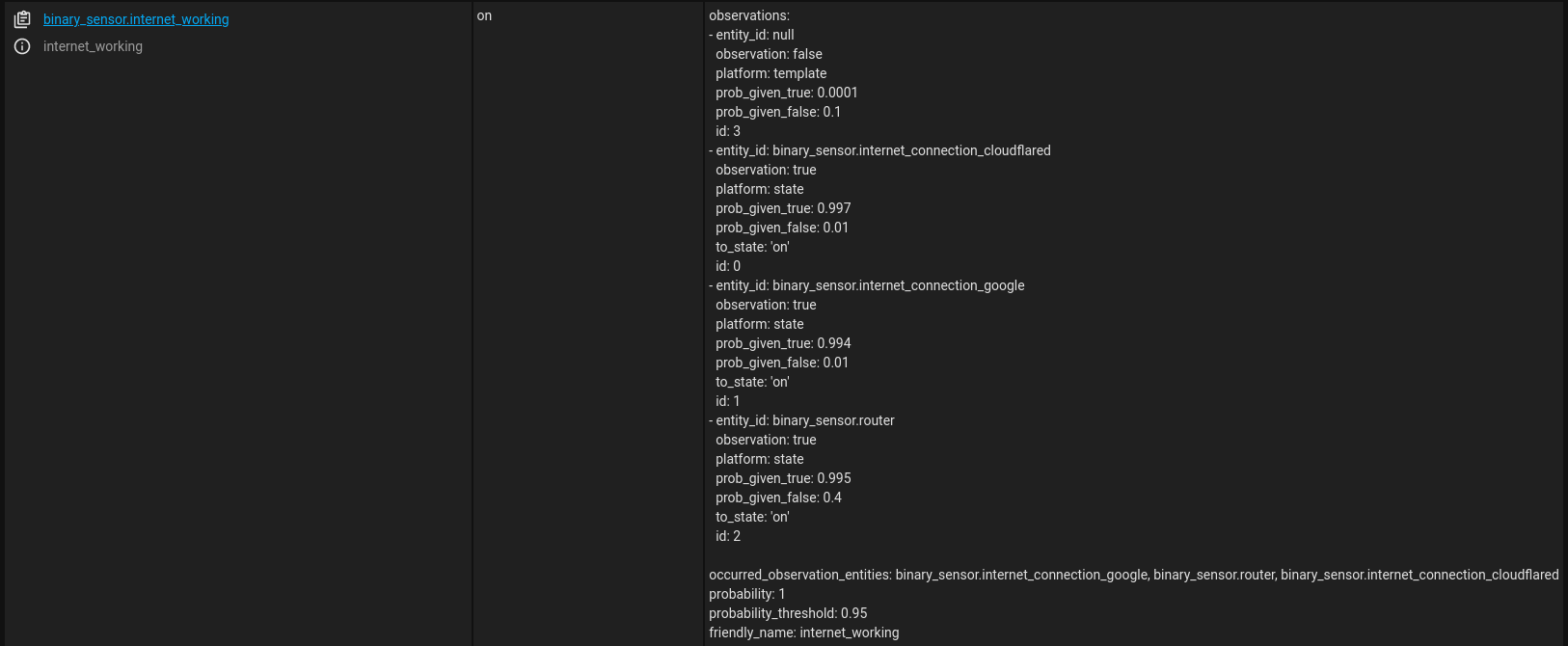

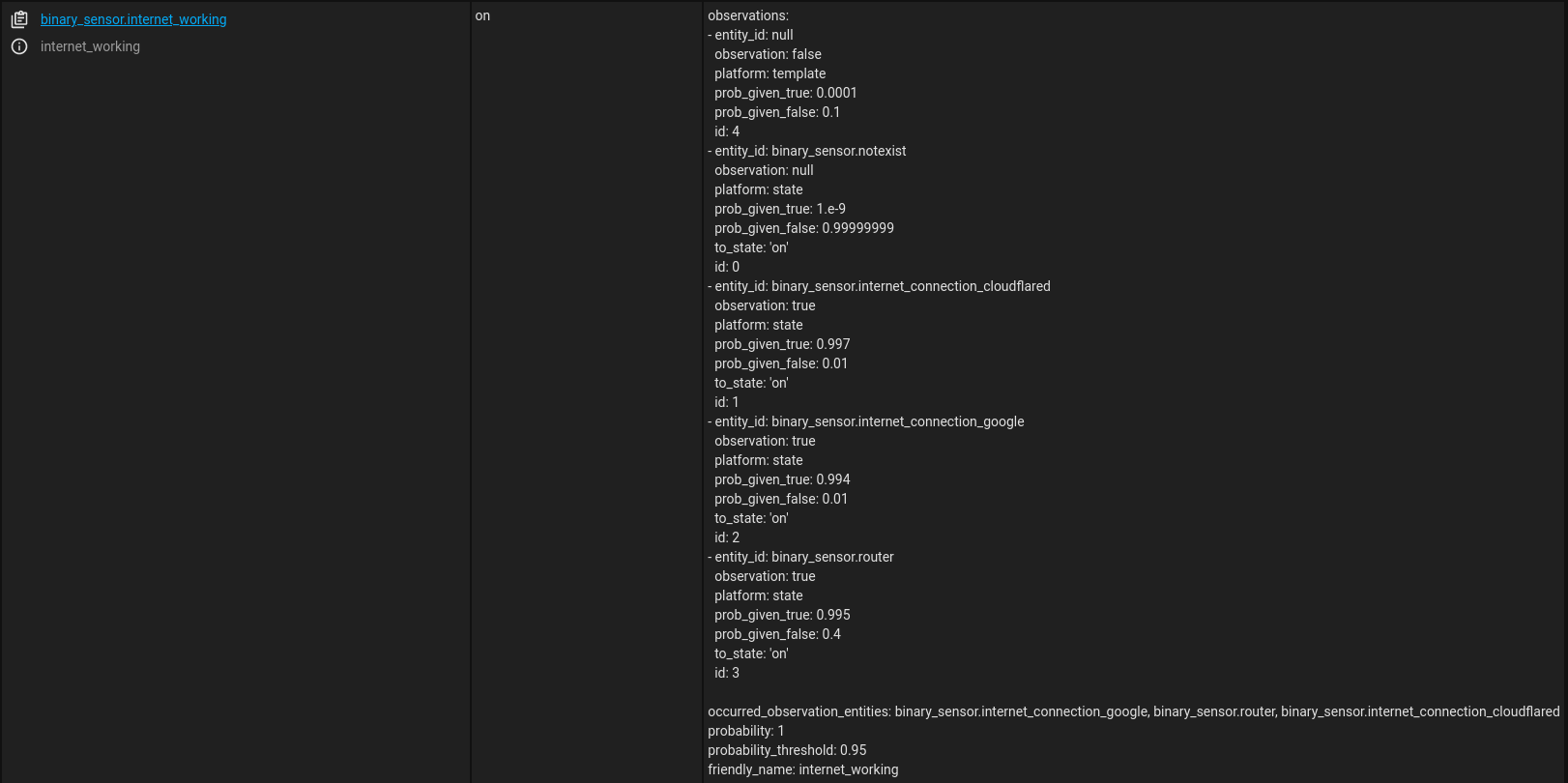

Creates this when pings are returned successfully:

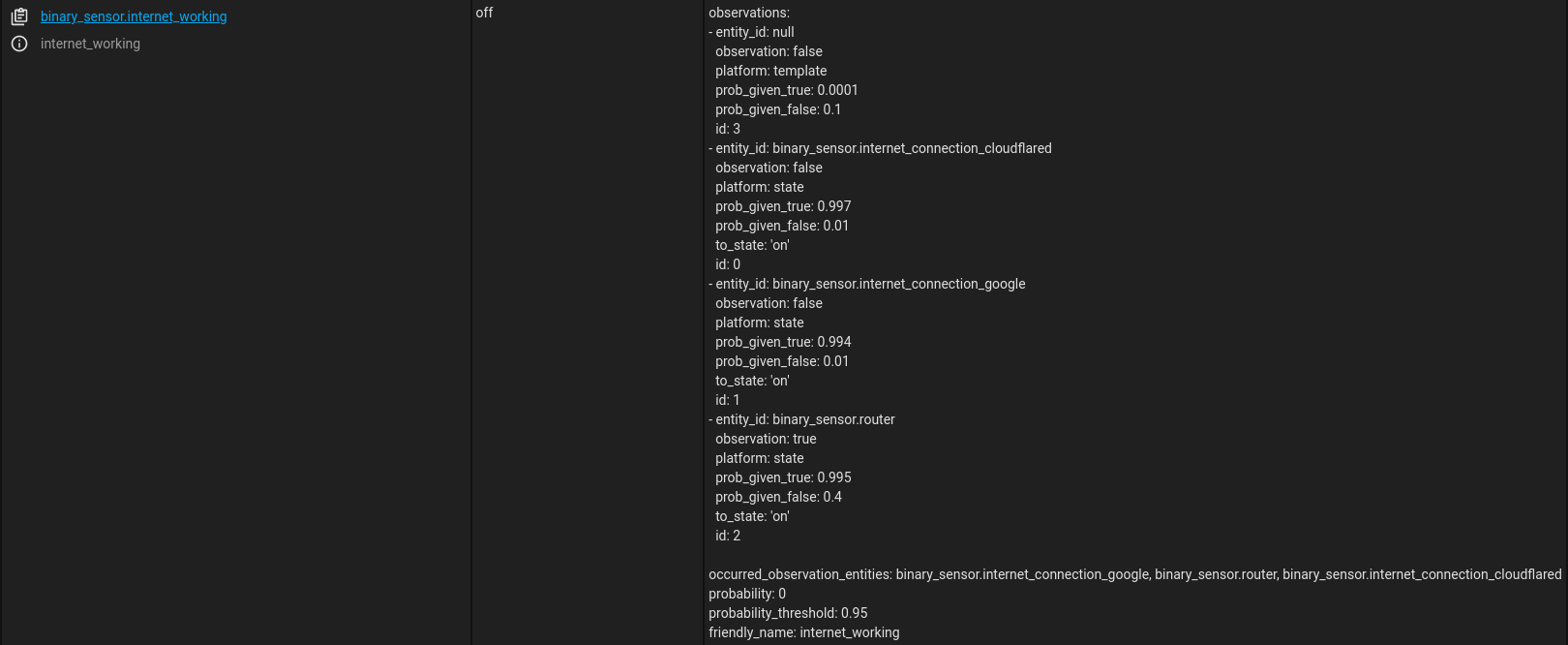

And this when not: (Note how the state change is detected and the probabilities update appropriately)

Type of change

- [ ] Dependency upgrade

- [x] Bugfix (non-breaking change which fixes an issue)

- [ ] New integration (thank you!)

- [ ] New feature (which adds functionality to an existing integration)

- [x] Breaking change (fix/feature causing existing functionality to break)

- [ ] Code quality improvements to existing code or addition of tests

Additional information

- This PR fixes or closes issue: fixes #67478

- This PR is related to issue:

- Link to documentation pull request: https://github.com/home-assistant/home-assistant.io/pull/21899

Checklist

- [x] The code change is tested and works locally.

- [x] Local tests pass. Your PR cannot be merged unless tests pass

- [x] There is no commented out code in this PR.

- [x] I have followed the development checklist

- [x] The code has been formatted using Black (

black --fast homeassistant tests) - [x] ~Tests have been added to verify that the new code works.~ No new tests required

If user exposed functionality or configuration variables are added/changed:

- [x] ~Documentation added/updated for www.home-assistant.io~ None changed

If the code communicates with devices, web services, or third-party tools:

- [x] The manifest file has all fields filled out correctly.

Updated and included derived files by running:python3 -m script.hassfest. - [x] ~New or updated dependencies have been added to

requirements_all.txt.

Updated by runningpython3 -m script.gen_requirements_all.~ No new deps - [x] ~For the updated dependencies - a link to the changelog, or at minimum a diff between library versions is added to the PR description.~ No change to deps

- [x] ~Untested files have been added to

.coveragerc.~ No new files

The integration reached or maintains the following Integration Quality Scale:

- [x] No score or internal

To help with the load of incoming pull requests:

- [x] I have reviewed two other open pull requests in this repository.

In c03552b and e547429 I choose not to completely ignore unavailable entries as it could frustrate debugging. Now they will be displayed as such

- platform: mqtt

state_topic: "does/state/notexist"

name: "notexist"

qos: 1

payload_on: "ON"

payload_off: "OFF"

device_class: motion

- platform: "bayesian"

name: "internet_working" # maybe sensible to switch these in seperate errors - DNS, Switch, Gl-inet

prior: 0.99 # internet has high up time

probability_threshold: 0.95 # by defualt assume the internet is up

observations:

- platform: "state"

entity_id: "binary_sensor.notexist"

prob_given_true: 0.000000001 # cloudflare is rarely unavailible when the internet is up

prob_given_false: 0.99999999 # it is highly likely that cloudflare will be unreachable when the internet is down - unless it is a dns issue

to_state: "on"

- platform: "state"

entity_id: "binary_sensor.internet_connection_cloudflared"

...

Will show the unobserved in dev tools now

And put info in the log:

I'm not sure what the policy is for writing code to aid migration/mitigate breaking changes. This would be one such way to do so.

I assume many people used duplicate, mirrored entries to get around this bug. It is advocated for in this post https://community.home-assistant.io/t/how-bayes-sensors-work-from-a-statistics-professor-with-working-google-sheets/143177

def _is_mirrored_entry(self, value, key):

possible_dup = []

for entry in self._observations:

if entry.get(key, None) is not None:

if entry[key] == value:

possible_dup.append(entry)

if len(possible_dup) != 2:

return False

true_sum = possible_dup[0]["prob_given_true"] + possible_dup[1]["prob_given_true"]

if true_sum > 0.95 and true_sum <1.05:

_LOGGER.warning(

"Two entries found for '%s' and their prob_given_true sum to approx 1, duplicate entries for inverse states is no long required",

value,

)

return True

return False

Calling the function as such

def _build_observations_by_entity(self):

"""

Build and return data structure of the form below.

{

"sensor.sensor1": [{"id": 0, ...}, {"id": 1, ...}],

"sensor.sensor2": [{"id": 2, ...}],

...

}

Each "observation" must be recognized uniquely, and it should be possible

for all relevant observations to be looked up via their `entity_id`.

"""

observations_by_entity = {}

seen = set()

for ind, obs in enumerate(self._observations):

if obs.get("entity_id", None) in seen:

print("checking for mirrored entry")

if self._is_mirrored_entry(obs["entity_id"], "entity_id"):

continue

elif obs.get("entity_id", None) is not None:

seen.add(obs["entity_id"])

obs["id"] = ind

This detects and ignores mirrors using some fuzzy logic, it would make the change less breaking but it is not pretty

@frenck I see the smash label - not sure if I should feel fear or excitement

Some builds failing - is that in issue at my end or the CI stack, looks as if it is not a problem with my code?

Run echo "Failed to restore Python virtual environment from cache"

echo "Failed to restore Python virtual environment from cache"

exit 1

shell: sh -e {0}

env:

CACHE_VERSION: 9

PIP_CACHE_VERSION: 3

HA_SHORT_VERSION: [2](https://github.com/home-assistant/core/runs/5643792261?check_suite_focus=true#step:5:2)022.4

DEFAULT_PYTHON: [3](https://github.com/home-assistant/core/runs/5643792261?check_suite_focus=true#step:5:3).[9](https://github.com/home-assistant/core/runs/5643792261?check_suite_focus=true#step:5:9)

PRE_COMMIT_CACHE: ~/.cache/pre-commit

PIP_CACHE: /tmp/pip-cache

SQLALCHEMY_WARN_20: 1

PYTHONASYNCIODEBUG: 1

Failed to restore Python virtual environment from cache

Error: Process completed with exit code 1.

see the smash label - not sure if I should feel fear or excitement

Neither :)

@HarvsG I've restarted CI now. You can also trigger it yourself by closing, then reopening the PR.

0b366b5 Makes changes no longer breaking. If a user defines multiple entries for a single entity, the previous behaviour is preserved it will only affect the posterior probability if the 'state' matches the 'to_state', this gives the added advantage that users can use entities that have more than two states by specifying probabilities for each state

All tests now passing.

N.B: In order to make the target probabilities for the tests I used online, independent, Bayes Theorem calculators rather than just plugging the output back in as the solution.

@frenck Could you kindly move the PR to 'needs review' or 'review in progress' on the projects board please. I closed and re-opened as per @emontnemery 's suggestion to force a re-run of tests. https://github.com/home-assistant/core/projects/4#card-78597739

Tagging reviewers @dgomes and @emontnemery

Bumping as has been another week @dgomes @emontnemery @frenck

Bumping as has been another 3 weeks @dgomes @emontnemery @frenck

@HarvsG Will you have time to adjust the tests so there's full coverage anytime soon?

@HarvsG need help finishing this PR ?

@HarvsG need help finishing this PR ?

~Yup just the changes to the tests. I have not done much work on tests before and a bit pressed for time at the moment~

Now has 100% Code coverage

tagging @dgomes and @emontnemery for re-review.

@dgomes I have done all your suggestions. I have tried adding myself as a codeowner but pre-commit checks disallow it:

hassfest.................................................................Failed

- hook id: hassfest

- files were modified by this hook

Sorry to have lead you in the wrong direction, you add yourself through the manifest file

Sorry to have lead you in the wrong direction, you add yourself through the manifest file

Turns out I had to ad the modified CODEOWNERS file as well as the committed manifest

Edit: @dgomes, all done now, could you start the workflow?

@dgomes Are you happy I have resolved your suggestions and queries? Build ~should now pass~ now passes as well.

Great. I notice another reviewer is listed but I don't know if they are required. So apologies for (possibly) requesting a review unnecessarily.

Anything else I need to do before merge?

We are missing emontnemery approval

This might take sometime, but don't worry

I trust @dgomes on this one 👍

Thanks for the merge. And thank you all for your time on this one. My first contrib to core 😄

It was a good one :) Congratulations!

Now you must stick around for part 2: handling user submitted issues 😅